Assessment Literacy in College Teaching:

Empirical Evidence on the Role and Effectiveness of a Faculty Training Course


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Please contact [email protected] to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

Abstract

This research explores how faculty members’ conceptions of assessment and confidence in assessment change as a result of an instructor training course. Based on a sample of 27 faculty members enrolled in a semester-long instructional development course, this survey-based study provides initial evidence that faculty members can develop confidence in assessment while adopting increasingly complex conceptions of assessment. Based on this study’s findings, we argue that instructional development programs for college faculty have a critical role to play in stimulating faculty learning about assessment of student learning and are an important component in promoting a positive assessment culture.

Keywords: assessment, instructional development, faculty development

Introduction

In many disciplines, postsecondary education (PSE) faculty members have little formal preparation in the art and science of teaching, curriculum design, and assessment (Knapper, 2010). Previous research indicates that college instructors’ and university professors’ approaches to teaching are highly variable and frequently associated with their own experiences as learners (Colbeck et al., 2002; Kember & Kwan, 2000; Knapper, 2010). As a result, faculty members often use traditional, didactic, teacher-centered instruction with minimal integration of formative feedback and learner-driven assessments. While most instruction in PSE relies on traditional techniques, research has consistently noted that students benefit more from engaging in active rather than passive learning situations and value feedback-rich learning contexts (Komarraju & Karau, 2008; Kuh et al., 2006; Machemer & Crawford, 2007). Accordingly, it is not surprising that PSE instruction has been repeatedly critiqued for lacking pedagogical innovation and cultivating a learning culture driven by exam-based summative measures of student achievement (Yorke, 2003).

In response to these critiques, most universities and colleges now offer training opportunities for faculty members to improve their teaching, curriculum planning, and assessment practice in order to enhance the student learning experience (Taylor & Colet, 2010). These training opportunities, known as instructional development activities, involve workshops, seminars, consultations, or classroom observations focused on specific skills and competencies. In an effort to change the culture of assessment within universities and colleges, faculty training opportunities are beginning to focus on enhancing instructors’ conceptions of student assessment toward more contemporary approaches. Contemporary approaches to assessment in PSE recognize the role and important function of summative testing but also encourage an integrated approach to assessment that utilizes formative feedback and dialogical assessment structures to propel learning forward (Boud, 2000; Nicol & Macfarlane-Dick, 2006).

Changing faculty members’ approaches to student assessment is challenging. In addition to their entrenched beliefs about the role and form of assessment within specific disciplines, shifting toward a more balanced orientation between summative and formative assessment approaches is increasingly difficult given current accountability mandates and the highly competitive cultures evident within many universities (Taras, 2005, 2007). Despite evidence that direct training in teaching and learning methods provided by instructional development programs can lead to positive impacts on student learning (Condon et al., 2016; Perez et al., 2012), few studies have examined how these programs contribute toward shifts in faculty members’ understandings of, confidence in, and subsequent adoption of contemporary assessment practices (Fishman et al., 2003).

The purpose of this study is to explore college instructors’ learning in response to focused training on assessment of student learning as part of a semester-long instructional development course. Specifically, the course centered on constructivist teaching strategies, with a discrete focus on contemporary approaches to student assessment. Guiding this research on instructor learning were the following questions:

- How do new instructors’ conceptions of assessment differ between the beginning and end of an instructor training course?

- How does new instructors’ confidence toward assessment differ between the beginning and end of an instructor training course?

Literature Review

Assessment in Postsecondary Education

Student assessment in PSE continues to undergo reforms as constructivist teaching methodologies are more widely adopted, as accountability requirements intensify, and as assessment is increasingly recognized as having potential to improve teaching and learning (Taras, 2005, 2007). Requirements from accreditors and other agencies have increased institutional efforts to measure student learning, making assessment more important than ever. It is not likely that the focus of accreditation requirements on assessment will diminish any time soon, so institutional efforts to document, understand, and improve student learning must continue (Krzykowski & Kinser, 2014). Additionally, earlier empirical research has documented the importance of assessment as part of the teaching and learning process and its key influence on student learning (Fernandes et al., 2012; Struyven et al., 2005; Webber, 2012). As the demand for assessment literacy among postsecondary instructors increases, there is a greater need to understand how new faculty members develop their competency in using effective and contemporary classroom assessments within their instructional practice.

Advances in cognitive science have enhanced our understanding of how students learn and have had significant implications for assessment practices. Research over the last several decades has supported an increased focus on constructivist teaching methods that help students to actively assimilate and accommodate new material as a result of active engagement in authentic learning experiences and feedback-driven learning tasks (Black & Wiliam, 1998; Gardner, 2006). Compared to traditional instructional approaches, in which students are seen as passive receivers of information, current thinking about how students learn elevates assessment beyond its summative means to a central component of active learning. In this view, students are provided with ongoing feedback on their learning in relation to defined learning outcomes. These ongoing assessments are formative (i.e., not graded) and used as the basis for remediation or acceleration of learning. In addition, students are encouraged to take ownership of their own learning trajectories by using assessment information to direct their study. This contemporary view of assessment has required institutions to promote new assessment practices and thus support faculty members in designing and integrating constructivist assessment methods aimed at enhancing student learning, not just measuring it (Shepard, 2000). In PSE, instructors must balance this newer formative conception of assessment with traditional summative functions in order to support as well as evaluate student learning. In the following section, we delineate various conceptions of assessment that faculty members must negotiate as they aim to integrate assessment throughout their instructional practice.

The Role of Instructional Development

Until only recently, the predominant assumption with regard to preparing PSE faculty for their role as teachers has been that a trained and skilled researcher or subject matter expert would naturally be an effective teacher. Seen as dichotomous by some, the enduring struggle between research preparation and teaching preparation still lingers today; however, it is now generally acknowledged that at least some direct preparation for teaching is essential (Austin, 2003; Hubball & Burt, 2006). As a result, intentional efforts to offer training to faculty members to help them improve their teaching competencies have become increasingly prevalent within PSE institutions. While terms such as professional development and faculty development typically refer to initiatives concerning the entire career development of faculty (Centra, 1989), including activities designed to enhance one’s teaching, research, and administration activities (Sheets & Schwenk, 1990), the more specific term instructional development refers to activities explicitly aimed at developing faculty members in their role as teachers (Stes et al., 2010).

Several factors have persuaded PSE leaders in the last few decades that instructional development programs are worthwhile initiatives. Specifically, evidence has been accumulating of the ineffectiveness of the traditional, teacher-centered ways of teaching (Gardiner, 1998). Lectures, for example, have been shown to only support lower levels of learning, and students do not retain even this knowledge very long (Bligh, 2000). To help faculty members adopt contemporary pedagogical strategies and improve their teaching practice, a variety of instructional development programs and activities have been designed and implemented at colleges and universities. These activities have included formats such as workshops and seminars, newsletters and manuals, consulting, peer assessment and mentoring, student assessment of teaching, classroom observations, short courses, fellowships, and other longitudinal programs (Prebble et al., 2004; Steinert et al., 2006). Many studies have emerged investigating the effects of instructional development initiatives, and the majority report positive outcomes. Research reviewed by Stes et al. (2010), together with more recent studies, has demonstrated positive impacts on faculty in terms of their attitudes toward teaching and learning (Bennett & Bennett, 2003; Howland & Wedman, 2004), conceptions of teaching (Gibbs & Coffey, 2004; Postareff et al., 2007), learning new concepts and principles (Howland & Wedman, 2004; Nasmith et al., 1997), and acquiring or improving teaching skills (Dixon & Scott, 2003; Pfund et al., 2009). Previous research has also examined the impacts of instructional development on students and has demonstrated positive changes in student perceptions (Gibbs & Coffey, 2004; Medsker, 1992), behaviors (Howland & Wedman, 2004; Stepp-Greany, 2004), and learning outcomes (Brauchle & Jerich, 1998; Condon et al., 2016; Perez et al., 2012; Stepp-Greany, 2004).

Studies have consistently demonstrated that faculty development programs are generally assessed using only superficial means (e.g., Beach et al., 2016; Chism & Szabó, 1998; Hines, 2009). Findings from Beach et al. (2016) indicate that as the complexity of the assessment approach increases (e.g., measuring the change in teaching practice or student learning), the percentage of use declines. Specifically, the reporting of the effects of faculty development programs more often takes the form of tracking participation numbers and participant self-report satisfaction and rarely includes data on an increase in the knowledge or skills of participants or a change in the practice of participants, even more rarely paying attention to changes in the learning of the students served by participants and/or changes in the institution’s culture of teaching. The recent work of Condon et al. (2016) offers a rare example of a sustained effort to provide measurable evidence that faculty development leads to improved instruction and improved student learning.

Conceptions of Assessment

Assessment serves a variety of purposes and can be implemented in many forms. As an umbrella term, understandings of assessment vary, depending upon how faculty members see the role of assessment in relation to student learning. Within PSE contexts, there is a core difference in conceptions of assessment when learning is situated within an “assessment culture” versus a “testing culture” (Birenbaum, 1996, 2003). The traditional testing culture is based on the behaviorist model of learning, focusing on objective and standardized testing with the practice of testing as separate from instruction (Shepard, 2000). In this testing culture, multiple-choice and close-ended assessments that focus on memorized knowledge are typical test formats. In contrast, an assessment culture shifts assessment toward a socio-constructivist learning paradigm in which assessments are opportunities for learning as mediated by other students’ learning and teachers’ responsive instruction (Shepard, 2000). In such a culture, assessments are used both formatively and summatively but are reflective of the enacted curriculum and students’ constructed understandings (Birenbaum, 2003).

Given these paradigmatic views of assessment, faculty members may espouse specific orientations toward assessment within their context of practice. Drawing on the work of DeLuca et al. (2013), we explicate four conceptions of assessment that shape faculty members’ practices. These conceptions are (a) assessment as testing, (b) assessment as format, (c) assessment as purpose, and (d) assessment as process.

The conception of assessment as testing is characterized as regarding assessment primarily as measuring the success of knowledge transmission. This conception of assessment is common among educators who regard teaching as simply the transmission of knowledge and are therefore likely to view assessment as an activity that follows learning, rather than as an integral and formative element of learning itself (Parpala & Lindblom-Ylänne, 2007). Testing instruments typically used in PSE include written tests given at the end of a term, chapter, semester, or year for grading, evaluation, or certification purposes. Such assessments often include closed-ended questions, such as multiple-choice, true/false, and fill-in-the-blank questions, as well as open-ended response questions with distinct and narrow correct answers. Such testing practices often focus largely on memorizing and recalling facts and concepts and are used primarily for evaluation in which there is limited or no feedback beyond the reporting of a grade or score. The conception of assessment as testing is rooted in the dominant paradigm of the 20th century, which Shepard (2000) associates with behaviorist learning theories, social efficiency, and scientific measurement, all of which negate the formative role of assessment in teaching and learning.

In contrast to an understanding of assessment as testing, an assessment as format conception is based on the understanding that assessment can take on a variety of forms, beyond the narrow view of testing. The methods used by faculty to assess student learning in PSE have expanded considerably in recent decades. Once characterized only by multiple-choice tests, traditional essays, and research papers (Sambell et al., 1997), assessment in the PSE context now often includes portfolios, projects, self- and peer-assessment, simulations, and other innovative methods (Struyven et al., 2005). If assessment tasks are representative of the context being studied and both relevant and meaningful to those involved, then they may be described as authentic. The authenticity of tasks and activities is what sets innovative assessments apart from more traditional and contrived assessments (Birenbaum, 2003; Gulikers et al., 2004). Flexibility in assessment formats can be seen as a first step toward a more student-led pedagogy that engages students in the assessment process (Irwin & Hepplestone, 2012).

When instructors move beyond conceptualizing assessment as strictly for summative purposes, they begin to understand assessment as serving multiple important functions. An assessment as purpose orientation encourages multiple uses for assessment within teaching, learning, and administrative processes. Following Bloom et al. (1971), a distinction is typically made between formative and summative assessment. Summative assessment is concerned with determining the extent to which curricular objectives have been received, whereas formative assessment is conceptualized as the “systematic evaluation in the process of curriculum construction, teaching, and learning for the purposes of improving any of these three processes” (Bloom et al., 1971, p. 117). The distinction between formative and summative assessment, however, is often blurred (Boud, 2000). For example, assessment activities such as course assignments are often designed to be simultaneously formative and summative. Activities can be formative because the student is provided feedback from which to learn, and they can be summative because the grade awarded contributes to the overall grade for the course.

The final conception, assessment as process, is closely linked to assessment as, for, and of learning and conceptualizes assessment as a process of interpretation and integration. Pellegrino et al. (2001) state that “assessment is a process of reasoning from evidence” (p. 36). While recognizing that assessments used in various contexts, for differing purposes, often look quite different, this conception of assessment focuses on the fact that all assessment activities share the common principle of always being a process of reasoning from evidence. To conceive of assessment in this way is to understand that without a direct pipeline into a student’s mind, the process of assessing what a student knows and can do is not straightforward. Various tools and techniques must be employed by educators to observe students’ behavior and generate data that can be used to draw reasonable inferences about what students know (Popham, 2013).

Although there are other taxonomies for categorizing and conceptualizing assessment in PSE, these four conceptions––assessment as testing, assessment as format, assessment as purpose, and assessment as process––highlight several dominant conceptions that drive teaching practice in universities and colleges. In this research, we used these four conceptions of assessment as an analytic heuristic in order to analyze faculty members’ changing conceptions of assessment in response to an instructional training course.

Confidence in Assessment

Arguably, to apply one’s understanding of assessment effectively, a postsecondary instructor must also be confident in their assessment knowledge and skills. The construct of self-efficacy in social cognitive theory (Bandura, 1986) suggests that when an individual feels competent and has confidence in completing a task, they will choose to engage in it. In contrast, when an individual feels incompetent and lacks confidence in a task, they will avoid engaging in it. It is therefore critical that faculty members not only develop complex and more nuanced conceptions of assessment but also feel confident in their assessment abilities in order to fully engage in the process of assessment. As Bandura (1986) explains, several factors such as practice, exposure, and application contribute to how confident an individual is when using or applying a skill to a new context. This means that when college instructors have a solid understanding of how to construct a grading rubric that both communicates expectations to students and guides instructional decisions, for example, they are more likely to be successful in their use and application of assessment. Therefore, to create assessment-literate educators, assessment confidence must be addressed.

Although not yet widely explored in the context of PSE, assessment confidence is a concept considered in K–12 education literature, often understood as a teacher’s self-perceived confidence in administering various approaches to assessment (Ludwig, 2014; Stiggins et al., 2004). In their study investigating the assessment confidence of preservice teacher candidates, DeLuca and Klinger (2010) conceptualized three domains of assessment confidence: confidence in assessment practice, assessment theory, and assessment philosophy. DeLuca et al. (2013) identified two principal components of assessment confidence: confidence in using practical assessment approaches to measure student learning and confidence in engaging in assessment praxis. Praxis, as differentiated from practice, is the dynamic interplay between thought and action involving a continuous process of understanding, interpretation, and application.

Methods

This research is premised on the work of DeLuca et al. (2013), which investigated the value and effectiveness of a course on educational measurement and assessment for preservice K–12 teachers. In this study, we adapted DeLuca and colleagues’ validated survey to examine PSE faculty members’ learning about assessment in response to an instructional development course. Data were collected from instructors enrolled in a semester-long instructor training course at a two-year college in Texas.

Context

This research is based on an instructor training course that was required for all newly hired faculty at the college and was also open to other faculty members at their department chair’s request. This semester-long course focused on teaching and learning styles, strategies to enhance student engagement and motivation, and various approaches to student assessment. Faculty participants attended a two-hour face-to-face class session each week that was facilitated by two faculty development officers. These class sessions involved active participation with numerous opportunities for participants to practice teaching and learning techniques and receive feedback from peers and facilitators. Additionally, the course included a variety of weekly assignments, which participants accessed and completed online. These activities included readings, quizzes, discussion forums, and practical assignments such as designing instructional materials (e.g., syllabi, lesson plans, and assessment instruments).

Participants

After obtaining institutional review board (IRB) approval, all 35 instructors enrolled in the summer 2013, fall 2013, and spring 2014 administrations of the instructor training course were invited to participate in this study. Of the 35 instructors, 27 agreed, for a 77% response rate. Twenty-two (82%) of the participants were male, and five (18%) were female (see Table 1). These 27 instructors represented a cross-section of the college’s instructional departments, with representation from programs such as welding, building construction, culinary arts, computer graphics, landscaping, electronics, and aviation. Most of the participants had no prior teaching experience, but eight reported some teaching experience including some combination of postsecondary (7), K–12 (3), or other teaching (4), such as having worked as a military instructor, dance teacher, or flight instructor.

| Cohort | N | Male | Female | Previous teaching experience: No | Previous teaching experience: Yes | Previous experience: PSE | Previous experience: K–12 | Previous experience: Other |
|---|---|---|---|---|---|---|---|---|
| Summer 2013 | 7 | 6 | 1 | 5 | 2 | 1 | 0 | 2 |
| Fall 2013 | 12 | 10 | 2 | 7 | 5 | 5 | 3 | 2 |
| Spring 2014 | 8 | 6 | 2 | 7 | 1 | 1 | 0 | 0 |
| Total sample | 27 | 22 | 5 | 19 | 8 | 7 | 3 | 4 |

Data Collection

Data were collected from participants through two linked questionnaires: one administered during the first day of the instructor training course and one administered on the last day of the course. The questionnaires, adapted from the instrument used by DeLuca et al. (2013), consisted of both open-ended and close-ended items. Open-ended items asked participants to describe their conceptions of assessment (e.g., When you think about the word assessment what comes to mind?). Close-ended items asked participants to identify their confidence in (a) using practical assessment approaches to measure student learning (e.g., testing, performance assessment, portfolios, and observation) and (b) engaging in assessment praxis as represented through various assessment scenarios. Each paper-based questionnaire included 25 items, and the pre-questionnaire also had a seven-item demographic section. Pre- and post-questionnaires were completed by participants in a familiar classroom setting.

Data Analysis

Results were analyzed in relation to participants’ changing (a) conceptions of assessment and (b) confidence in assessment. Data analyses were modeled after processes implemented by DeLuca et al. (2013). In order to determine participants’ changing conceptions of assessment over time, their open-response questionnaire responses before and after the instructional development course were coded through standard, deductive coding (Patton, 2002) based on the theoretical definitions of conceptions of assessment. Specifically, data were coded based on participants’ primary and, when available, secondary conceptions of (a) assessment as testing, (b) assessment as format, (c) assessment as purpose, and (d) assessment as process. Data were independently coded by two raters with an inter-rater reliability of 97%. Frequencies for primary and secondary conception codes were based on the number of individual participants who mentioned a conception rather than the total number of times a conception appeared in the questionnaire data (Namey et al., 2008).
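The counting rule described above (frequencies based on the number of participants who mention a conception, not the number of mentions) can be illustrated with a minimal sketch. The participant IDs and coded segments below are hypothetical and are not data from this study; the function names are ours.

```python
from collections import Counter

# Hypothetical coded data: participant ID -> conception codes assigned to
# each coded segment of that participant's open response.
coded_segments = {
    "P01": ["testing", "testing", "purpose"],
    "P02": ["purpose"],
    "P03": ["testing", "process", "process"],
}

def participant_frequencies(coded):
    """Count how many participants mention each conception at least once."""
    counts = Counter()
    for codes in coded.values():
        counts.update(set(codes))  # de-duplicate within one participant
    return counts

def mention_frequencies(coded):
    """Count every coded mention, shown only for comparison."""
    return Counter(code for codes in coded.values() for code in codes)

by_participant = participant_frequencies(coded_segments)
by_mention = mention_frequencies(coded_segments)
print(by_participant)
print(by_mention)
```

Counting by participant keeps one verbose respondent from inflating the apparent prevalence of a conception, which is presumably why this rule suits a small sample.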

A frequency matrix of primary codes was constructed for both pre- and post-questionnaire responses. Codes were then assigned numerical values in order of increasing complexity (testing = 1, format = 2, purpose = 4, and process = 8), and each participant received a numerical score representing assessment conception on the pre- and post-questionnaire open-response items. As appropriate, dual codes were combined to create a composite assessment conception score. Pre- and post-assessment conception scores were summarized in a frequency table. Dependent t tests were conducted to determine significant differences between participants’ pre- and post-questionnaire assessment conception composite scores.
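As an illustration of the scoring scheme, the following sketch assigns the numerical values given above and combines dual codes. It assumes that "combined" means the values are summed; the text does not state the combination rule explicitly, so this is our reading. The function names are hypothetical.

```python
# Values taken from the text; the summation rule for dual codes is an assumption.
WEIGHTS = {"testing": 1, "format": 2, "purpose": 4, "process": 8}

def composite_score(codes):
    """Sum the values of the distinct conceptions coded for one response."""
    distinct = set(codes)
    unknown = distinct - set(WEIGHTS)
    if unknown:
        raise ValueError(f"unknown conception code(s): {sorted(unknown)}")
    return sum(WEIGHTS[code] for code in distinct)

def decode(score):
    """Recover the conceptions behind a composite score."""
    return [name for name, value in WEIGHTS.items() if score & value]

print(composite_score(["testing"]))             # single code
print(composite_score(["testing", "purpose"]))  # dual code
print(decode(6))
```

Because the values are distinct powers of two, every combination of codes sums to a unique score, so a composite can always be decoded back into its component conceptions.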

Descriptive statistics were conducted on fixed-response questionnaire items pertaining to two scales of assessment confidence in practical assessment approaches and assessment praxis. Pre-questionnaire items pertaining to each assessment confidence scale were then analyzed through principal components analysis using oblique (promax) rotation to determine subscales. A minimum subscale loading was set at 0.40, and Cronbach’s alpha values were calculated as internal consistency estimates for each subscale. Means for each identified subscale were then calculated for pre- and post-questionnaire responses and compared using dependent t tests to determine significant differences between participants’ responses before and after the instructional development course. Open-response items pertaining to assessment confidence were analyzed to enrich understanding of these fixed-response items.
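For readers less familiar with the dependent (paired) t test used here, a minimal sketch follows. The paired scores are hypothetical, and differences are taken as pre minus post, a sign convention we assume because it yields a negative t when scores increase. The sketch returns only the statistic and degrees of freedom; obtaining a p value would additionally require the t distribution.

```python
import math
import statistics

def dependent_t(pre, post):
    """Return (t, df) for a paired-samples t test on matched scores."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("pre and post need the same length (at least 2)")
    diffs = [a - b for a, b in zip(pre, post)]  # pre minus post
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample SD of the differences
    if sd_d == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1

# Hypothetical confidence ratings for five participants.
pre_scores = [2, 3, 3, 2, 4]
post_scores = [4, 4, 5, 3, 5]
t_value, df = dependent_t(pre_scores, post_scores)
print(round(t_value, 2), df)
```

With 27 matched participants, the same calculation has 26 degrees of freedom.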

The small sample size limits the reliability of the measure, the internal consistency of subscale component loadings, and the resulting effect sizes. These limitations reduce the generalizability of results from the principal component analyses and t tests. However, despite the small sample, this study contributes validation evidence of trends revealed in the work of DeLuca et al. (2013) and provides an initial basis for examining faculty members’ changing conceptions of and confidence in assessment as a result of an instructional development course.

Results

Results are presented in two sections aligned with the research questions: (a) changes in participants’ conceptions of assessment and (b) changes in participants’ confidence in assessment.

Conceptions of Assessment

Participants’ conceptions of assessment developed from an initial view of assessment as testing to more complex conceptions of assessment as purpose and process. Table 2 summarizes the frequency distribution of participants’ conceptions of assessment for pre- and post-questionnaire responses. The frequency of assessment as testing decreased most from pre- to post-questionnaire implementation (19 to 3), whereas the frequency of assessment as process increased most (0 to 9). Assessment as format was not mentioned as a primary concern on either implementation of the questionnaire, and assessment as purpose was the most frequently reported conception on the post-questionnaire (15).

| Conceptions of assessment | Pre-questionnaire | Post-questionnaire |
|---|---|---|
| Assessment as testing | 19 | 3 |
| Assessment as format | 0 | 0 |
| Assessment as purpose | 8 | 15 |
| Assessment as process | 0 | 9 |

In pre-questionnaire open responses, participants’ descriptions of assessment were simplistic and concise in nature, emphasizing the summative role of assessment. Pre-questionnaire responses generally described assessment as a means to “test students,” “measure learning,” and “evaluate what students know.” Post-questionnaire responses consistently demonstrated participants’ richer and expanding conceptions of assessment that included both purpose and process. Furthermore, all participants’ post-questionnaire responses shifted beyond primarily summative conceptions of assessment to include the formative role of assessment as well. As one participant explained on the post-questionnaire, assessment serves the purpose of “examining student learning strengths and needs, telling students how to improve, and guiding teachers’ next steps.” Another participant described assessment on the post-questionnaire as a process that allows educators to “evaluate students’ understanding of lessons, evaluate teacher effectiveness, and identify areas for improvement in teaching and learning processes.” Overall, these results show that by the end of the instructional development course, participants’ primary conceptions of assessment had shifted to emphasize assessment as a formative process that supports both teaching and learning.

| Composite conceptions of assessment | Pre-questionnaire | Post-questionnaire |
|---|---|---|
| Assessment as testing | 17 | 0 |
| Assessment as purpose | 5 | 13 |
| Assessment as testing and purpose | 3 | 5 |
| Assessment as format and purpose | 2 | 0 |
| Assessment as process | 0 | 9 |

A frequency distribution of participants’ composite scores for conceptions of assessment is reported in Table 3. The most frequent composite score on the pre-questionnaire was associated with the conception of assessment as testing, whereas assessment as purpose was the most frequent conception on the post-questionnaire. Specifically, the most frequently mentioned purpose of assessment on the post-questionnaire was formative. Interestingly, few participants reported multiple conceptions of assessment: assessment as testing and purpose (3 and 5 on pre- and post-questionnaires, respectively) and assessment as format and purpose (2 on pre-questionnaire). This illustrates that despite participants’ increasingly complex conceptions of assessment, they were not systematically describing assessment as a multifaceted concept that encompasses testing, format, purpose, and process.

At an individual level, 25 of 27 participants’ assessment conception composite scores increased over time while two remained the same. A dependent t test revealed that changes in participants’ conception of assessment composite scores over time were statistically significant from pre- (M = 2.37, SD = 1.88) to post-questionnaire (M = 5.56, SD = 1.83; t (26) = -9.20, p < .05) (see also Table 5). Qualitative questionnaire responses elaborated on this finding. One participant initially described assessment as “a college requirement to measure learning.” On the post-questionnaire, the same participant explained that assessment serves to “support the learning process, inform teaching practice, and provide constructive feedback to students.” Another participant’s responses shifted from “finding out if students get it” to “evaluating students’ learning of content, and supporting the learning process by providing feedback to students and teachers.” These examples illustrate an important shift as participants began to view assessment as less of an instructor-led measurement requirement and more of an interactive process among instructors and students to guide teaching and learning.
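The pre-questionnaire descriptive statistics can be traced back to the composite frequency table with a short sketch. It assumes dual codes are summed (testing and purpose = 5, format and purpose = 6), which is our reading of how codes were combined. Under that assumption, the pre-questionnaire frequencies give a mean and standard deviation that agree with the reported M = 2.37 and SD = 1.88.

```python
import statistics

# Composite score -> number of participants, from the pre-questionnaire
# column of the composite table. Scores for dual codes assume summation.
PRE_FREQUENCIES = {
    1: 17,  # testing
    4: 5,   # purpose
    5: 3,   # testing and purpose (1 + 4)
    6: 2,   # format and purpose (2 + 4)
}

def expand(frequencies):
    """Turn a score -> count table into one score per participant."""
    return [score for score, count in frequencies.items() for _ in range(count)]

pre_scores = expand(PRE_FREQUENCIES)
pre_mean = statistics.mean(pre_scores)
pre_sd = statistics.stdev(pre_scores)  # sample standard deviation
print(len(pre_scores), round(pre_mean, 2), round(pre_sd, 2))
```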

Confidence in Assessment

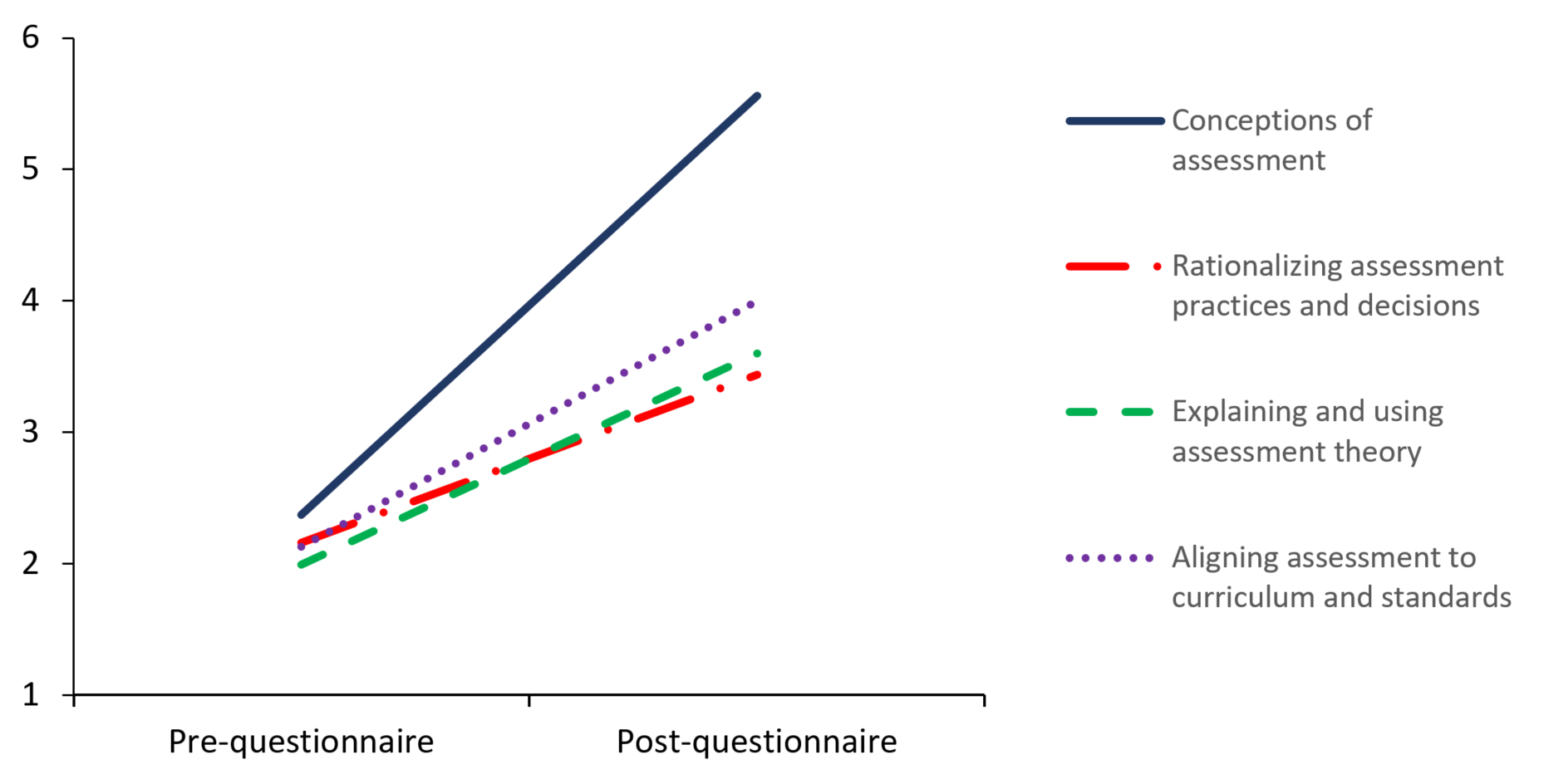

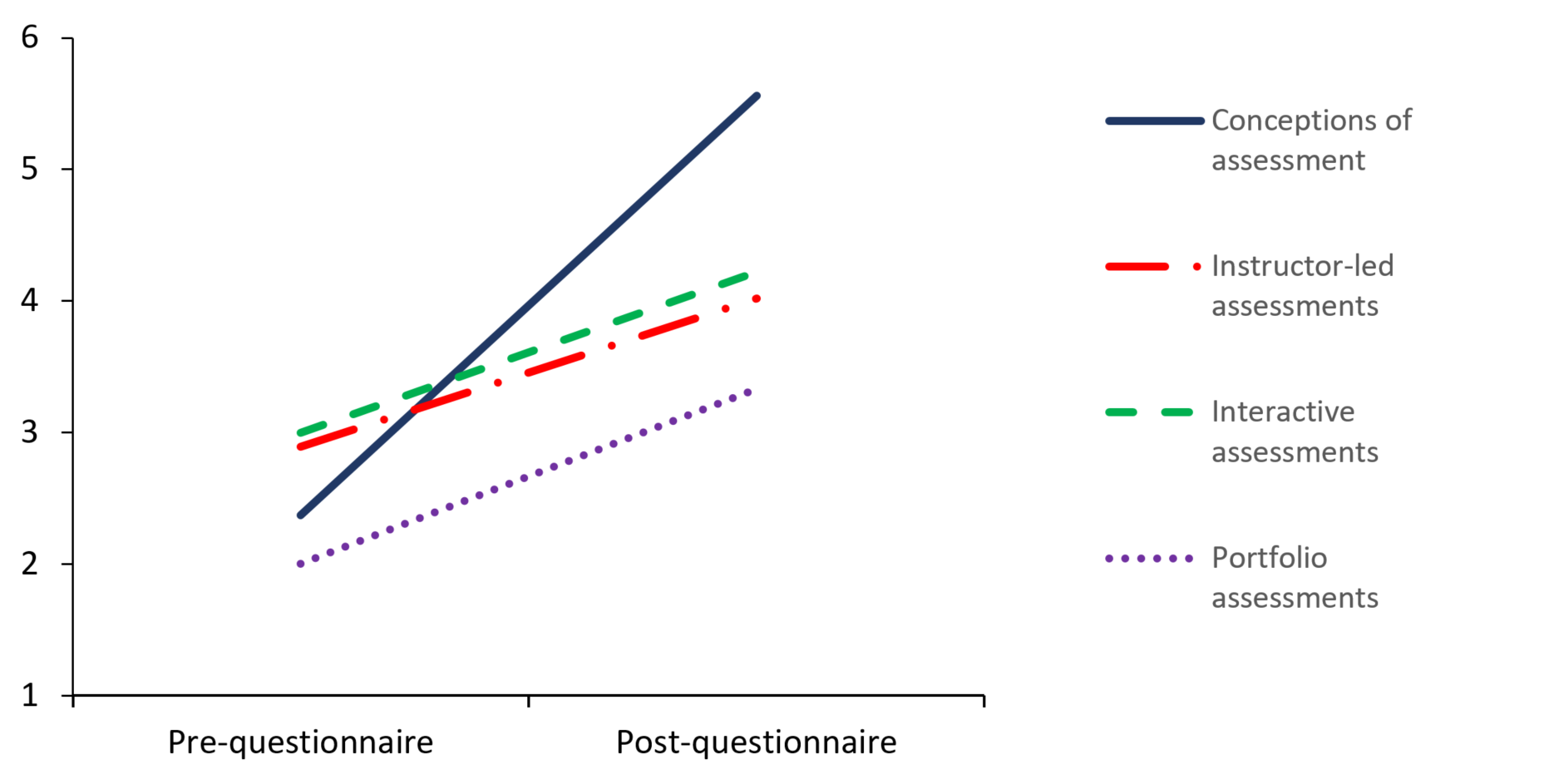

Principal component analyses conducted on each confidence scale—practical assessment approaches (11 items) and assessment praxis (12 items)—revealed three subscales in each scale with eigenvalues greater than 1 (see Table 4). Internal consistency values associated with each subscale were determined to be adequate (α = .68 to .84). Items related to the practical assessment approaches scale were named instructor-led assessments (α = .84), interactive assessments (α = .69), and portfolio assessments (α = n/a; 1 item). Items related to the assessment praxis scale were named: rationalizing assessment practices and decisions (α = .81), explaining and using assessment theory (α = .81), and aligning assessment to curriculum and standards (α = .68).
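The internal consistency values above are Cronbach's alpha coefficients. As a rough illustration only, the sketch below computes alpha from item-level ratings using the standard formula; the function name `cronbach_alpha` and the ratings are hypothetical and do not come from the study's questionnaire data. It covers the reliability step only, not the principal component analysis. The sketch also shows why no alpha can be reported for a one-item subscale such as portfolio assessments: the coefficient is undefined with fewer than two items.

```python
import statistics


def cronbach_alpha(items):
    """Cronbach's alpha for a list of items, each a list of participant scores."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha is undefined for fewer than two items")
    # Variance of each item across participants.
    item_vars = [statistics.variance(scores) for scores in items]
    # Variance of each participant's total score across all items.
    totals = [sum(vals) for vals in zip(*items)]
    total_var = statistics.variance(totals)
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 5-point confidence ratings from five participants
# on three items (NOT the study's data).
item_a = [2, 3, 4, 4, 5]
item_b = [1, 3, 3, 5, 5]
item_c = [2, 2, 4, 5, 4]

alpha = cronbach_alpha([item_a, item_b, item_c])
print(f"alpha = {alpha:.2f}")
```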

Mean values and dependent sample t tests for participants’ overall confidence in assessment across both confidence scales before and after the instructional development course, as well as confidence in practical assessment approaches and assessment praxis, are reported in Table 5. There was a significant change in participants’ overall confidence from pre- (M = 2.78, SD = .75) to post-questionnaire administration (M = 4.19, SD = .68; t (26) = -7.11, p < .05). All individual assessment confidence subscales also exhibited significant increases (see Table 5).

| Items | Subscale 1 | Subscale 2 | Subscale 3 |
|---|---|---|---|
| Confidence in practical assessment approaches | | | |
| • Selected response questions | 0.77 | -0.38 | 0.28 |
| • Constructed response questions | 0.70 | 0.39 | -0.01 |
| • Performance assessments | 0.72 | 0.07 | -0.29 |
| • Portfolio assessment | -0.11 | 0.16 | 0.96 |
| • Personal communication | 0.83 | 0.04 | 0.10 |
| • Observation techniques | 0.88 | 0.05 | -0.12 |
| • Questioning techniques | 0.11 | 0.78 | 0.16 |
| • Feedback techniques | -0.03 | 0.90 | 0.03 |
| Confidence in assessment praxis | | | |
| • Explain to a group of beginning instructors what is meant by the expression “learning outcomes” | -0.01 | 0.82 | 0.11 |
| • Make a brief presentation to instructors on “Why College Instructors Need to Know About Assessment” | -0.16 | 0.77 | 0.38 |
| • Accurately classify a set of learning outcomes according to their learning domain in Bloom’s Taxonomy | 0.08 | 0.17 | 0.72 |
| • Write a brief explanatory note for a departmental or college newsletter explaining the key differences between norm-referenced and criterion-referenced interpretations of students’ test performances | 0.74 | 0.38 | -0.41 |
| • Construct selected-response test items that do not violate any established guidelines for constructing such items | 0.77 | -0.18 | 0.27 |
| • Construct constructed-response test items that do not violate any established guidelines for constructing such items | 0.82 | -0.35 | 0.29 |
| • Organize and implement a portfolio assessment program for a course | 0.79 | 0.21 | -0.03 |
| • Construct a grading rubric that would help students better understand expectations and help an instructor make better instructional decisions | 0.08 | 0.34 | 0.76 |
| • Explain to a group of novice instructors the nature of the formative assessment process | 0.12 | 0.80 | 0.10 |

| Scales and subscales | Pre-questionnaire M | Pre-questionnaire SD | Post-questionnaire M | Post-questionnaire SD | t (26) |
|---|---|---|---|---|---|
| Conceptions of assessment | 2.37 | 1.88 | 5.56 | 1.83 | -9.20* |
| Overall assessment confidence | 2.78 | 0.75 | 4.19 | 0.68 | -7.11* |
| Confidence in practical assessment approaches | | | | | |
| Instructor-led assessments | 2.89 | 0.71 | 4.02 | 0.66 | -7.04* |
| Interactive assessments | 3.00 | 0.64 | 4.22 | 0.80 | -6.06* |
| Portfolio assessments | 2.00 | 0.96 | 3.33 | 1.24 | -6.55* |
| Confidence in assessment praxis | | | | | |
| Rationalizing assessment practices and decisions | 2.16 | 0.81 | 3.44 | 0.62 | -11.21* |
| Explaining and using assessment theory | 1.99 | 0.72 | 3.60 | 0.78 | -11.43* |
| Aligning assessment to curriculum and standards | 2.13 | 0.88 | 4.00 | 0.65 | -9.18* |

Open-response items pertaining to assessment confidence were analyzed to enrich understanding of these fixed responses. Notably, increases in assessment confidence differed based on participants’ previous teaching experience. For example, the three participants with previous K–12 teaching experience and four of the participants with PSE teaching experience reported that the instructional development course had the greatest impact on their ability to explain and use assessment theory but had minimal impact on the other subscales related to assessment approaches and praxis. These participants began the course with relatively high levels of confidence on all practical assessment approach subscales (instructor-led, interactive, and portfolio assessments) and two of three assessment praxis subscales (rationalizing assessment practices and decisions and aligning assessment to curriculum and standards). Although the small overall sample size and even smaller size of this subgroup limit any deeper analysis of the effect of previous teaching experience, these results may highlight the importance of differentiating assessment training initiatives for college instructors based on previous teaching experience.

The following figures illustrate how participants’ conceptions of assessment changed in relation to their increasing confidence in practical assessment approaches (see Figure 1) and assessment praxis (see Figure 2). As participants’ conceptions of assessment increased in complexity, their confidence in assessment approaches and praxis also increased. Taken together, these figures suggest the importance of instructional development courses designed to foster more complex conceptions of assessment in order to positively impact college instructors’ confidence in both practical assessment approaches and assessment praxis.

Figure 1. Comparison of Change Patterns in Participants’ Assessment Conceptions and Confidence in Practical Assessment Approaches

Discussion

The purpose of this research was to explore changes in faculty members’ conceptions of and confidence in assessment as a result of focused training provided in an instructional development course. Although previous studies have investigated K–12 teachers’ conceptions of assessment (Brown & Harris, 2010; DeLuca et al., 2013), there is a lack of research in this area with respect to PSE instructors. This lack of research is problematic given the known influence of assessment-based teaching on student learning (Boud, 2000; Nicol & Macfarlane-Dick, 2006) and the persistent critiques of the quality of PSE instructional practices (Yorke, 2003). As such, we build on the work of others who have endeavored to explore the role and effectiveness of instructional development programs for enhancing teaching quality (Gibbs & Coffey, 2004; Steinert et al., 2006; Taylor & Colet, 2010). Specifically, our interest is to help support a shift from a testing culture to an assessment culture within PSE classrooms. An assessment culture integrates feedback-rich tasks throughout learning periods and leverages formative data to enhance teaching and learning (Birenbaum, 1996, 2003). Accordingly, we follow Shepard’s (2000) conception of a socio-constructivist orientation in which assessment not only provides evidence of student learning (i.e., summative) but also shapes what is learned and how students engage in learning.

Based on a sample of 27 faculty members enrolled in a semester-long instructor training course at a two-year college in Texas, this study provides initial evidence that faculty members can develop confidence in assessment while adopting increasingly complex conceptions of assessment. Based on qualitative results, it was evident that participants shifted their view of assessment from a mostly traditional, teacher-centered perspective to a more student-centered understanding that recognizes the importance of feedback to guide and improve student learning. Some faculty members in this study were even able to articulate a conception of assessment as an integrated and interpretative process within teaching and learning. Hence the results of this research demonstrate the potential for explicit training in assessment practices to positively shift faculty understandings of assessment from simplistic conceptions to more complex understandings. After completing an instructional development course with a focus on assessment practices, new faculty began to engage the challenging idea of assessment as a multi-faceted and integral part of teaching and learning, not simply a straightforward process of summative testing.

Faculty participants also expressed significantly greater confidence in factor components related to both assessment praxis and assessment practice. These findings suggest that the training course served to provide practical strategies for integrating assessments into PSE instruction. While the training course did serve to promote conceptual understandings, its primary focus was on the development of skills and practices so that faculty members had greater confidence in implementing assessments within their teaching practice. Hence, in this research, we observed positive changes in instructors’ confidence in practical assessment activities. Following Guskey’s (1986) seminal research, we support the notion that changes in practice will facilitate further changes in an instructor’s conceptual understandings about teaching and assessment. Instructors who gain confidence through an instructional development course are more likely to implement new practices and subsequently further shift their conceptions of assessment. As a result, these instructors will be in a better position to rationalize and negotiate the multiple purposes of assessment within the increasingly accountability-driven context of PSE (Taras, 2005, 2007). The findings of this study provide support for the value of educational development activities focused on enhancing assessment literacy. PSE institutions wishing to enhance the assessment of student learning should, therefore, continue to direct resources toward the work of educational developers involved in assessment training. Ultimately, working toward enhancing faculty members’ practical and conceptual understandings of assessment (in effect, developing assessment-literate faculty members) will serve accountability mandates while promoting an assessment culture for enhanced student learning.

Although this research has produced valuable findings that support the use of instructional development courses to positively impact assessment conceptions and confidence, there are some limitations in study site selection, sample, and instrumentation that warrant caution when interpreting results. All participants in this study were faculty members at the same college and completed the same instructional development course, which limits the generalizability of the results. Furthermore, the small sample size of 27 participants tempers confidence in the effect sizes of quantitative analyses and may have negatively impacted questionnaire reliability and the internal stability of factors. While we had intended to further scrutinize the variable of amount of teaching experience as it relates to changing conceptions of and confidence in assessment owing to the instructional development course, the small number of participants with previous teaching experience prohibited meaningful analysis. Finally, our study design did not allow for the collection of data on the participants’ future teaching practice or their students’ learning outcomes. As noted by Condon et al. (2016), there is a need to move beyond self-report data and continue to investigate the long-term impact of instructional training courses on teaching practice. Subsequent research should take up these changes by examining PSE instructors’ learning across diverse contexts in sustained studies.

Despite these limitations, this study’s integration of qualitative and quantitative evidence to examine the effects of an instructor training course has shown that explicit training in assessment strategies can enhance new faculty members’ confidence in assessment praxis and approaches while deepening their conceptual and theoretical understandings of educational assessment. These data are useful in providing a formative basis for continued development of instructional training courses in assessment. Future research should continue to examine the development, implementation, and impact of differentiated instructional development courses to better understand their effectiveness across diverse groups of faculty members (e.g., those with previous teaching or training experience).

We recognize that a single training course will not sufficiently support faculty members in continuing to refine their assessment practices. Developing the art and science of assessment is a career-long pursuit, and commitment to this pursuit requires sustained professional development opportunities. Our study shows that an instructional training course can stimulate faculty members’ learning, but training courses are only one means of engaging faculty members in deep learning about their instructional practices. Hence, future research needs to consider a multifaceted approach to instructional development in assessment: a series of differentiated courses, job-embedded coaching and support, and ongoing peer mentoring. The instructional training course is only the tip of the iceberg. If a positive assessment culture is to prevail within PSE contexts, then focusing on faculty members’ assessment literacy through sustained and differentiated assessment education appears to be a promising starting place.

Biographies

Kyle D. Massey is the Coordinator of Evaluation, Data, and Project Management in Undergraduate Medical Education at Western University in Canada (Schulich School of Medicine and Dentistry). He is an Assistant Professor (Limited Duties) in the Faculty of Education at Western University and an Instructor in Social & Behavioral Sciences at Texas State Technical College. He earned his PhD in Educational Leadership and Policy from the University of Texas at Austin. Dr. Massey’s recent research is focused on faculty development and professional identity of higher education administrators.

Christopher DeLuca is an Associate Professor of Classroom Assessment in the Faculty of Education at Queen’s University in Canada. Dr. DeLuca leads the Classroom Assessment Research Team and is a member of Queen’s Assessment and Evaluation Group. He earned his PhD in Education from Queen’s University. Dr. DeLuca’s research examines the complex intersection of curriculum, pedagogy, and assessment as operating within the current context of school accountability and standards-based education.

Danielle LaPointe-McEwan is an Adjunct Professor in the Faculty of Education at Queen’s University in Canada. Dr. LaPointe-McEwan is a member of the Assessment and Evaluation Group as well as the Classroom Assessment Research Team at Queen’s University. She earned her PhD in Education from Queen’s University. Dr. LaPointe-McEwan’s research interests include pre- and in-service teacher education in assessment and evaluation, educator professional learning models, coaching and feedback in professional learning, and assessment in e-learning contexts.

References

- Austin, A. E. (2003). Creating a bridge to the future: Preparing new faculty to face changing expectations in a shifting context. The Review of Higher Education, 26(2), 119–144. https://doi.org/10.1353/rhe.2002.0031

- Bandura, A. (1986). Social foundations of thought and action: A social cognitive theory. Prentice-Hall.

- Beach, A. L., Sorcinelli, M. D., Austin, A. E., & Rivard, J. K. (2016). Faculty development in the age of evidence: Current practices, future imperatives. Stylus Publishing.

- Bennett, J., & Bennett, L. (2003). A review of factors that influence the diffusion of innovation when structuring a faculty training program. The Internet and Higher Education, 6(1), 53–63. https://doi.org/10.1016/S1096-7516(02)00161-6

- Birenbaum, M. (1996). Assessment 2000: Towards a pluralistic approach to assessment. In M. Birenbaum & F. J. R. C. Dochy (Eds.), Alternatives in assessment of achievements, learning processes and prior knowledge (Vol. 42, pp. 3–29). Kluwer Academic/Plenum Publishers.

- Birenbaum, M. (2003). New insights into learning and teaching and their implications for assessment. In M. Segers, F. Dochy, & E. Cascallar (Eds.), Optimising new modes of assessment: In search of qualities and standards (pp. 13–36). Kluwer Academic Publishers.

- Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7–74. https://doi.org/10.1080/0969595980050102

- Bligh, D. A. (2000). What’s the use of lectures? Jossey-Bass.

- Bloom, B. S., Hastings, J. T., & Madaus, G. F. (1971). Handbook on formative and summative evaluation of student learning. McGraw-Hill.

- Boud, D. (2000). Sustainable assessment: Rethinking assessment for the learning society. Studies in Continuing Education, 22(2), 151–167. https://doi.org/10.1080/713695728

- Brauchle, P. E., & Jerich, K. F. (1998). Improving instructional development of industrial technology graduate teaching assistants. Journal of Industrial Teacher Education, 35(3), 67–92.

- Brown, G. T. L., & Harris, L. R. (2010). Teachers’ enacted curriculum: Understanding teacher beliefs and practices of classroom assessment [Paper presentation]. International Association for Education Assessment Annual Conference, Bangkok, Thailand.

- Centra, J. A. (1989). Faculty evaluation and faculty development in higher education. In J. C. Smart (Ed.), Higher education: Handbook of theory and research (pp. 155–179). Agathon Press.

- Chism, N. V. N., & Szabó, B. (1998). How faculty development programs evaluate their services. Journal of Staff, Program & Organization Development, 15(2), 55–62.

- Colbeck, C., Cabrera, A. F., & Marine, R. J. (2002). Faculty motivation to use alternative teaching methods (ERIC Document Reproduction Service No. ED465342). http://eric.ed.gov/?id=ED465342

- Condon, W., Iverson, E. R., Manduca, C. A., Rutz, C., & Willett, G. (2016). Faculty development and student learning: Assessing the connections. Indiana University Press.

- DeLuca, C., & Klinger, D. A. (2010). Assessment literacy development: Identifying gaps in teacher candidates’ learning. Assessment in Education: Principles, Policy & Practice, 17(4), 419–438. https://doi.org/10.1080/0969594X.2010.516643

- DeLuca, C., Chavez, T., & Cao, C. (2013). Establishing a foundation for valid teacher judgement on student learning: The role of pre-service assessment education. Assessment in Education: Principles, Policy & Practice, 20(1), 107–126. https://doi.org/10.1080/0969594X.2012.668870

- Dixon, K., & Scott, S. (2003). The evaluation of an offshore professional-development programme as part of a university’s strategic plan: A case study approach. Quality in Higher Education, 9(3), 287–294. https://doi.org/10.1080/1353832032000151148

- Fernandes, S., Flores, M. A., & Lima, R. M. (2012). Students’ views of assessment in project-led engineering education: Findings from a case study in Portugal. Assessment & Evaluation in Higher Education, 37(2), 163–178. https://doi.org/10.1080/02602938.2010.515015

- Fishman, B. J., Marx, R. W., Best, S., & Tal, R. T. (2003). Linking teacher and student learning to improve professional development in systemic reform. Teaching and Teacher Education, 19(6), 643–658. https://doi.org/10.1016/S0742-051X(03)00059-3

- Gardiner, L. F. (1998). Why we must change: The research evidence. Thought & Action: The NEA Higher Education Journal, 14(1), 71–88. https://www.nea.org/assets/img/PubThoughtAndAction/TAA_00Fal_13.pdf

- Gardner, J. (Ed.). (2006). Assessment and learning. SAGE Publications.

- Gibbs, G., & Coffey, M. (2004). The impact of training of university teachers on their teaching skills, their approach to teaching and the approach to learning of their students. Active Learning in Higher Education, 5(1), 87–100. https://doi.org/10.1177/1469787404040463

- Gulikers, J. T. M., Bastiaens, T. J., & Kirschner, P. A. (2004). A five-dimensional framework for authentic assessment. Educational Technology Research and Development, 52(3), 67–85. https://doi.org/10.1007/BF02504676

- Guskey, T. R. (1986). Staff development and the process of teacher change. Educational Researcher, 15(5), 5–12.

- Hines, S. R. (2009). Investigating faculty development program assessment practices: What’s being done and how can it be improved? Journal of Faculty Development, 23(3), 5–19.

- Howland, J., & Wedman, J. (2004). A process model for faculty development: Individualizing technology learning. Journal of Technology and Teacher Education, 12(2), 239–262.

- Hubball, H. T., & Burt, H. (2006). The scholarship of teaching and learning: Theory-practice integration in a faculty certificate program. Innovative Higher Education, 30(5), 327–344. https://doi.org/10.1007/s10755-005-9000-6

- Irwin, B., & Hepplestone, S. (2012). Examining increased flexibility in assessment formats. Assessment & Evaluation in Higher Education, 37(7), 773–785. https://doi.org/10.1080/02602938.2011.573842

- Kember, D., & Kwan, K.-P. (2000). Lecturers’ approaches to teaching and their relationship to conceptions of good teaching. Instructional Science, 28, 469–490. https://doi.org/10.1023/A:1026569608656

- Knapper, C. (2010). Changing teaching practice: Barriers and strategies. In J. Christensen Hughes & J. Mighty (Eds.), Taking stock: Research on teaching and learning in higher education (pp. 229–242). McGill-Queen’s University Press.

- Komarraju, M., & Karau, S. J. (2008). Relationships between the perceived value of instructional techniques and academic motivation. Journal of Instructional Psychology, 34(4), 70–82.

- Krzykowski, L., & Kinser, K. (2014). Transparency in student learning assessment: Can accreditation standards make a difference? Change: The Magazine of Higher Learning, 46(3), 67–73. https://doi.org/10.1080/00091383.2014.905428

- Kuh, G. D., Kinzie, J., Buckley, J. A., Bridges, B. K., & Hayek, J. C. (2006, July). What matters to student success: A review of the literature [Commissioned paper]. National Postsecondary Education Cooperative. http://nces.ed.gov/npec/pdf/Kuh_Team_Report.pdf

- Ludwig, N. W. (2014). Exploring the relationship between K–12 public school teachers’ conceptions of assessment and their classroom assessment confidence levels (Publication No. 1513993136) [Doctoral dissertation, Regent University]. ProQuest Dissertations & Theses Global.

- Machemer, P. L., & Crawford, P. (2007). Student perceptions of active learning in a large cross-disciplinary classroom. Active Learning in Higher Education, 8(1), 9–30. https://doi.org/10.1177/1469787407074008

- Medsker, K. L. (1992). NETwork for excellent teaching: A case study in university instructional development. Performance Improvement Quarterly, 5(1), 35–48. https://doi.org/10.1111/j.1937-8327.1992.tb00533.x

- Namey, E., Guest, G., Thairu, L., & Johnson, L. (2008). Data reduction techniques for large qualitative data sets. In G. Guest & K. M. MacQueen (Eds.), Handbook for team-based qualitative research (pp. 137–162). AltaMira Press.

- Nasmith, L., Saroyan, A., Steinert, Y., Daigle, N., & Franco, E. D. (1997). Long-term impact of faculty development workshops: A pilot study. In A. J. J. A. Scherpbier, C. P. M. van der Vleuten, J. J. Rethans, & A. F. W. van der Steeg (Eds.), Advances in medical education (pp. 237–240). Springer.

- Nicol, D. J., & Macfarlane-Dick, D. (2006). Formative assessment and self-regulated learning: A model and seven principles of good feedback practice. Studies in Higher Education, 31(2), 199–218. https://doi.org/10.1080/03075070600572090

- Parpala, A., & Lindblom-Ylänne, S. (2007). University teachers’ conceptions of good teaching in the units of high-quality education. Studies in Educational Evaluation, 33(3–4), 355–370. https://doi.org/10.1016/j.stueduc.2007.07.009

- Patton, M. Q. (2002). Qualitative research and evaluation methods (3rd ed.). Sage.

- Pellegrino, J. W., Chudowsky, N., & Glaser, R. (Eds.). (2001). Knowing what students know: The science of design of educational assessment. National Academies Press.

- Perez, A. M., McShannon, J., & Hynes, P. (2012). Community college faculty development program and student achievement. Community College Journal of Research and Practice, 36(5), 379–385. https://doi.org/10.1080/10668920902813469

- Pfund, C., Miller, S., Brenner, K., Bruns, P., Chang, A., Ebert-May, D., Fagen, A. P., Gentile, J., Gossens, S., Khan, I. M., Labov, J. B., Pribbenow, C. M., Susman, M., Tong, L., Wright, R., Yuan, R. T., Wood, W. B., & Handelsman, J. (2009). Summer institute to improve university science teaching. Science, 324(5926), 470–471. https://doi.org/10.1126/science.1170015

- Popham, W. J. (2013). Classroom assessment: What teachers need to know (7th ed.). Pearson.

- Postareff, L., Lindblom-Ylänne, S., & Nevgi, A. (2007). The effect of pedagogical training on teaching in higher education. Teaching and Teacher Education, 23(5), 557–571. https://doi.org/10.1016/j.tate.2006.11.013

- Prebble, T., Hargraves, H., Leach, L., Naidoo, K., Suddaby, G., & Zepke, N. (2004). Impact of student support services and academic development programmes on student outcomes in undergraduate tertiary study: A synthesis of the research [Report]. Ministry of Education, Massey University College of Education, New Zealand.

- Sambell, K., McDowell, L., & Brown, S. (1997). “But is it fair?”: An exploratory study of student perceptions of the consequential validity of assessment. Studies in Educational Evaluation, 23(4), 349–371. https://doi.org/10.1016/S0191-491X(97)86215-3

- Sheets, K. J., & Schwenk, T. L. (1990). Faculty development for family medicine educators: An agenda for future activities. Teaching and Learning in Medicine, 2(3), 141–148. https://doi.org/10.1080/10401339009539447

- Shepard, L. A. (2000). The role of assessment in a learning culture. Educational Researcher, 29(7), 4–14. https://doi.org/10.2307/1176145

- Steinert, Y., Mann, K., Centeno, A., Dolmans, D., Spencer, J., Gelula, M., & Prideaux, D. (2006). A systematic review of faculty development initiatives designed to improve teaching effectiveness in medical education: BEME Guide No. 8. Medical Teacher, 28(6), 497–526. https://doi.org/10.1080/01421590600902976

- Stepp-Greany, J. (2004). Collaborative teaching in an intensive Spanish course: A professional development experience for teaching assistants. Foreign Language Annals, 37(3), 417–424. https://doi.org/10.1111/j.1944-9720.2004.tb02699.x

- Stes, A., Min-Leliveld, M., Gijbels, D., & Van Petegem, P. (2010). The impact of instructional development in higher education: The state-of-the-art of the research. Educational Research Review, 5(1), 25–49. https://doi.org/10.1016/j.edurev.2009.07.001

- Stiggins, R. J., Arter, J. A., Chappuis, J., & Chappuis, S. (2004). Classroom assessment for student learning: Doing it right—Using it well. ETS Assessment Training Institute.

- Struyven, K., Dochy, F., & Janssens, S. (2005). Students’ perceptions about evaluation and assessment in higher education: A review. Assessment & Evaluation in Higher Education, 30(4), 325–341. https://doi.org/10.1080/02602930500099102

- Taras, M. (2005). Assessment—summative and formative—some theoretical reflections. British Journal of Educational Studies, 53(4), 466–478. https://doi.org/10.1111/j.1467-8527.2005.00307.x

- Taras, M. (2007). Assessment for learning: Understanding theory to improve practice. Journal of Further and Higher Education, 31(4), 363–371. https://doi.org/10.1080/03098770701625746

- Taylor, K. L., & Colet, N. R. (2010). Making the shift from faculty development to educational development: A conceptual framework grounded in practice. In A. Saroyan & M. Frenay (Eds.), Building teaching capacities in higher education: A comprehensive international model (pp. 139–167). Stylus Publishing.

- Webber, K. L. (2012). The use of learner-centered assessment in US colleges and universities. Research in Higher Education, 53(2), 201–228. https://doi.org/10.1007/s11162-011-9245-0

- Yorke, M. (2003). Formative assessment in higher education: Moves towards theory and the enhancement of pedagogic practice. Higher Education, 45(4), 477–501. https://doi.org/10.1023/a:1023967026413