Motivations and Obstacles Influencing Faculty Engagement in Adopting Teaching Innovations

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Please contact [email protected] to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

Abstract

Significant progress has been made in understanding science, technology, engineering, and mathematics (STEM) education and ways it can be improved, but propagation of change remains a challenge. This study presents an analysis of STEM faculty responses to open-text survey questions that asked them to identify motivations and obstacles to making changes in their teaching. Responses reveal wide variability among faculty perceptions, conceptualizations of change, and understandings of evidence. These findings suggest reasons faculty are not uniformly receptive to calls for change and challenge educational developers and advocates of STEM education reform to be explicit about their own understandings of meaningful change and evidence-based pedagogical practices.

Keywords: STEM, faculty motivation, educational development, evidence-based teaching

Introduction

Educational developers and advocates for science, technology, engineering, and mathematics (STEM) education reform have made considerable progress in understanding ways to improve teaching and learning in STEM (see, e.g., American Association for the Advancement of Science, 2012; Association of American Universities, 2011; Austin, 2018; National Research Council, 2012, 2015). Despite progress in understanding change that is needed, however, pathways to effective propagation of change remain uncertain (Froyd et al., 2017; Henderson & Dancy, 2011; Stanford et al., 2016). In its five-year status report on the Undergraduate STEM Education Initiative, the Association of American Universities (AAU) notes, “The biggest barrier to improving undergraduate STEM education is the lack of knowledge about how to effectively spread the use of currently available and tested research‐based instructional ideas and strategies” (2017, p. 20).

Because propagation of classroom practices ultimately rests on faculty engagement in implementing them, this study examines faculty motivations and perceived obstacles to changing how they teach. Advocates for STEM education reform and educational developers often interact with faculty, and many are themselves faculty members, so many have an experiential basis for inferring faculty perceptions. However, our incidental observations do not necessarily represent all faculty and may disproportionately reflect interactions with habitual innovators (sometimes known as “usual suspects”) who regularly participate in education-related committees, meetings, or events. In this study, therefore, we have sought to go beyond the habitual innovators and ask all STEM faculty what prompts or inhibits consideration of change.

We administered the Survey of Climate for Instructional Improvement (SCII; Walter et al., 2016) to STEM faculty at a public research university. We received responses from 120 faculty members representing all 14 STEM departments that were surveyed, for an overall response rate of 31.3%. Among respondents, 67% were tenured faculty members, nearly 18% were in non-tenure-track appointments, and the remaining 15% were not yet tenured but on the tenure track. Respondents reported an average of 18.6 years of experience teaching in higher education, with an average of 15.6 years at their current institution. Men accounted for 68% of respondents. For the purposes of this study, our goal is to examine the variety of STEM faculty perceptions represented campus-wide; the range of experience represented among respondents assures us that we are hearing from a cross-section of faculty ranks and years at the institution, including faculty at all levels of experience, on and off the tenure track, and in all STEM departments.

For this study, we examined open-text responses to two SCII items:

- What would motivate you to make changes to your own teaching practice?

- What do you see as the most significant barriers to changing your own teaching practice?

Responses to these items served as a basis for examining factors that might be shaping faculty receptiveness to institutional change initiatives related to STEM education.

Methodological Framework

Our goal in this qualitative analysis is exploration of faculty perceptions. We are not seeking to generalize findings from the sample to all STEM faculty at the institution or to higher education at large; rather, we are using grounded theory (Strauss & Corbin, 1997) to catalogue and categorize the range and variety of perceptions that exist. Furthermore, the goal of this analysis is not necessarily to delineate factors that have given rise to faculty perceptions. Therefore, responses reported in this study are not attributed to or associated with respondents’ prior experience, personal traits, or demographic characteristics, except in cases where respondents themselves refer to these characteristics in their responses.

This study also did not examine the extent to which an individual’s perceptions and reported experiences are congruent with independently identifiable circumstances; for example, if a hypothetical respondent identified “inadequate student preparation” as an obstacle to improving teaching, we did not examine the extent to which this perception reflected verifiable student characteristics. Rather, we recognize that comments reflect obstacles as the respondent perceives them, and our goal is to gain insight into ways in which these reported perceptions (whether they are accurate, partially accurate, or inaccurate) are seen by faculty as factors in their decisions about making changes in their teaching.

While we do not assume that respondents speak for all STEM faculty or that they are always accurate interpreters of the circumstances they describe, we do recognize respondents as experts on their own perceptions, and we need to understand faculty perceptions of change if we want to understand faculty receptiveness to change initiatives. To cite once again the central challenge identified by AAU, “to effectively spread the use of currently available and tested research‐based instructional ideas and strategies” (2017, p. 20), we must understand the motivations and obstacles to change perceived by intended users of these strategies.

Methods

To begin exploring the range of factors that might be influencing faculty receptiveness to consideration of change, responses were initially categorized through open coding (Benaquisto, 2008). At this stage of analysis, codes were primarily low-inference descriptors representing motivations and obstacles in terms and categories used by respondents. For example, if a respondent identified an obstacle as “lack of time” without further explanation, this response was coded as “time” with no additional inference about causal factors. In other cases, respondents identified time-related concerns and named specific causes such as institutional policies on teaching load or multiple commitments faced by new faculty members. In these cases, comments were assigned multiple codes reflecting both “time” and causal factors identified in the comment.

“Time” is one of many factors that appear in responses as both a motivator and an obstacle. In the coding process, the decision to identify a comment as a motivator or an obstacle was determined simply by noting which question the respondent was answering. Responses to the item, “What would motivate you to make changes to your own teaching practice?” were identified as motivators; responses to the item, “What do you see as the most significant barriers to changing your own teaching practice?” were identified as obstacles. Open coding identified a wide variety of motivators and obstacles in faculty responses (see Table 1).
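The coding rules described above can be illustrated with a minimal sketch. It assumes a simple record per comment; the class name `CodedComment`, the example comments, and the code label "teaching load" are hypothetical illustrations and are not taken from the study data or from Table 1.

```python
from dataclasses import dataclass, field

MOTIVATOR_ITEM = "What would motivate you to make changes to your own teaching practice?"
OBSTACLE_ITEM = "What do you see as the most significant barriers to changing your own teaching practice?"

# Motivator vs. obstacle is decided only by the survey item answered,
# never by the wording of the comment itself.
KIND_BY_ITEM = {MOTIVATOR_ITEM: "motivator", OBSTACLE_ITEM: "obstacle"}


@dataclass
class CodedComment:
    item: str                                  # survey item the respondent answered
    text: str                                  # open-text response
    codes: set = field(default_factory=set)    # low-inference open codes

    @property
    def kind(self) -> str:
        return KIND_BY_ITEM[self.item]


# Hypothetical comments and code labels, for illustration only.
comments = [
    # "lack of time" with no explanation: coded "time", no causal inference added
    CodedComment(OBSTACLE_ITEM, "lack of time", {"time"}),
    # time concern plus a named cause: assigned multiple codes
    CodedComment(OBSTACLE_ITEM, "no time given my teaching load", {"time", "teaching load"}),
    CodedComment(MOTIVATOR_ITEM, "more time", {"time"}),
]

# The same code can appear as both a motivator and an obstacle.
time_as = sorted({c.kind for c in comments if "time" in c.codes})
print(time_as)
```

In this sketch a code such as "time" is shared across both question types, so the motivator or obstacle label stays a property of the question rather than of the code.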

Further deductive coding (Miles et al., 2014) was used to examine comments in terms of potential points of agency or intervention; that is, to address the obstacle or activate the motivation represented in the comment, where would action need to be taken? Specifically, comments were reviewed to identify points of action related to the following:

- Institutional policies or practices

- Student actions or attitudes

- Peer actions or attitudes

- Personal motivation or ability

- Time (retained as an open code and also as a potential focal point for intervention)

Given the nature of open-text survey data, researchers did not have the opportunity to ask follow-up questions that might have helped assign relative weights, draw connections, identify priorities, or resolve apparent contradictions. Therefore, observations of these data are not a comprehensive account of all factors influencing faculty decisions or behaviors; however, they offer a window on the variety of faculty understandings associated with the possibility of change.

Findings

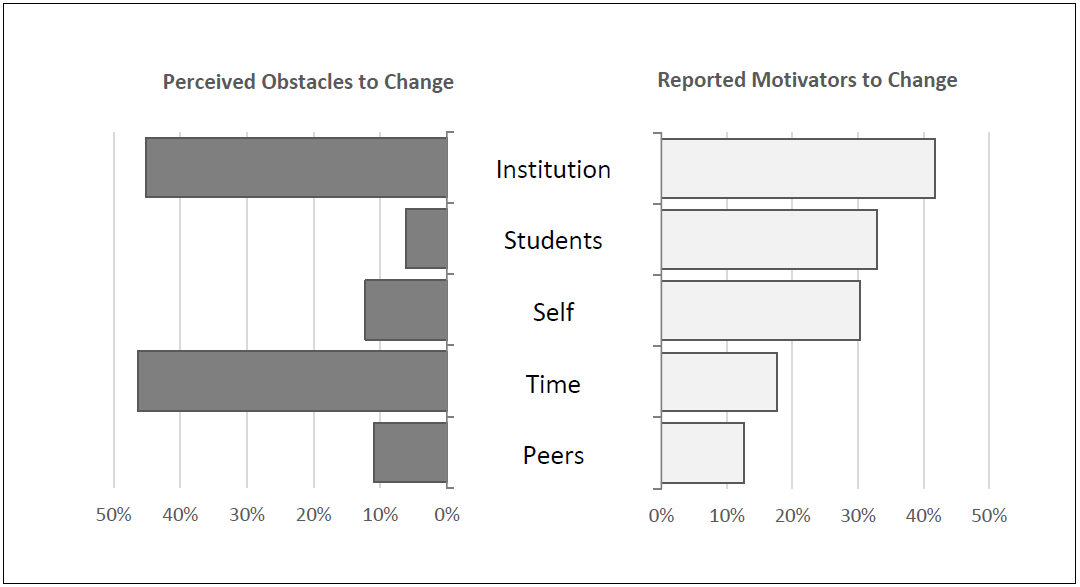

Figure 1 summarizes the distribution of comments assigned through deductive coding. The quantity of comments assigned to a code does not necessarily suggest that one type of obstacle or motivator is a more strategic point of intervention than others or argue for a hierarchy of factors influencing change. Creswell (2012) argues that it is not necessarily the case that “all codes should be given equal emphasis” and notes that “passages coded may actually represent contradictory views” (p. 185). Rather, Figure 1 shows the variability among faculty perceptions and visually demonstrates that we are unlikely to find a singular generalized strategy that uniformly addresses faculty perceptions.

Figure 1. Percentage of comments reflecting themes assigned through deductive coding. Note: Total > 100% because some comments reflect more than one theme.
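The note on Figure 1 follows from how multi-coded comments are tallied. The sketch below is a hedged illustration, not the authors' analysis: the function name `theme_percentages` and the four coded comments are hypothetical, and the theme labels paraphrase the deductive codes listed in the Methods section.

```python
from collections import Counter

# Theme labels paraphrasing the deductive codes listed in Methods.
THEMES = ["institutional", "students", "peers", "personal", "time"]


def theme_percentages(coded_comments):
    """Return the percentage of comments that carry each theme.

    Each comment is a set of themes, so one comment can count
    toward several themes at once.
    """
    counts = Counter(theme for themes in coded_comments for theme in set(themes))
    n = len(coded_comments)
    return {theme: 100 * counts[theme] / n for theme in THEMES}


# Hypothetical coded comments, for illustration only.
coded = [
    {"institutional", "time"},
    {"time"},
    {"students"},
    {"personal", "students"},
]

pct = theme_percentages(coded)
total = sum(pct.values())
print(pct)
print(total)  # 150.0: exceeds 100 because two comments carry two themes each
```

Because the denominator is the number of comments rather than the number of code assignments, the percentages describe how widespread each theme is and are not shares of a whole.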

The theme most frequently assigned in deductive coding was institutional. Examples include reported policies or practices that disincentivize attention to teaching quality or innovation; one respondent noted, “my annual review has no metric on teaching so sometimes I don’t find the motivation to go that extra mile for the teaching aspect of my job” (see Table 2 for additional examples). Other respondents described these same policies or practices as opportunities to motivate innovation, should institutional leaders choose to make strategic changes in those areas (Table 3). Overall, nearly half of respondents identified institutional factors as obstacles to change, and nearly as many described them as potential motivators.

Table 2. Examples of comments identifying institutional factors as obstacles

- “Policies that value the number of students taught over the quality of the teaching.”

- “The pressure is #1 to obtain grants, #2 to publish. I'd say there are more institutional incentives for service than for teaching, as long as a faculty member's teaching is reasonably good.”

- “Risk of poor evaluations that then get used to give subpar raises is the most significant barrier to teaching innovation.”

Table 3. Examples of comments identifying institutional factors as motivators

- “Less teaching load that would allow more time to increase quality.”

- “Evidence-based practices that improve teaching, combined with Departmental support for their implementation, while not being overly burdened with evaluation and assessment.”

- “Having a clear signal from the Provost that teaching should be embraced by all faculty through a transparent reward system.”

Time was the most frequently identified obstacle to change. Some time-related comments touched on institutional issues with implications for time use, which were coded as both institution related and time related. Others focused on areas such as other faculty obligations or the perception that change is inherently time intensive (Table 4). While nearly half of respondents identified lack of time as an obstacle to change, only about one in five cited availability of more time as a potential motivator (Table 5).

Table 4. Examples of comments identifying time as an obstacle

- “Clearly, time involved in making dramatic changes is huge and would not be seen by my department as a good choice of how to spend time that should be used for research projects.”

- “Teaching is a small part of what I do. I'm mainly judged by my research program which takes an overwhelming amount of time. I guess the only way to be a better teacher is to hurt my research program.”

- “It will take a tremendous amount of time to revamp the courses I teach, and now that I am in my fourth or fifth offering, it's easier to go with the status quo.”

Table 5. Examples of comments identifying time as a motivator

- “More time. There are only so many hours in the day.”

- “What holds me back is lack of time. I'm relatively new so when I get more used to teaching my core courses, I'll have a lot more time to implement thoughtful changes.”

- “For me, time freedom would enable innovation. It is difficult with the teaching load and other requirements to invest in changing something for a small level of improvements over traditionally successful modes of teaching that are efficient for the faculty.”

About one in 10 respondents identified their own personal characteristics as obstacles, including those who saw no need to change as well as those who expressed lack of confidence or motivation (Table 6). However, about one in three cited personal characteristics as motivators, including both a desire to “do the best job” and a reported sense of responsibility to support students (Table 7).

Table 6. Examples of comments identifying personal characteristics as obstacles

- “Motivation. It is hard work!”

- “I'm not sure if my ideas are that good.”

- “Who says I need to change my teaching practices?”

Table 7. Examples of comments identifying personal characteristics as motivators

- “I want to do the best job.”

- “I have little incentive from my institution to make improvements. The only incentive is my sense of responsibility to do right by students.”

- “I have made a lot of changes to my own teaching practice over the years and have been incredibly well supported in doing so. I wouldn't change anything about how I have been supported or motivated to make changes. The Center for Teaching and Learning support and workshops have been fundamental to my development as a teacher. I wish more faculty would make use of their services.”

Only a small number (about one in 20) identified students as an obstacle to change (Table 8). One-third identified students as their chief motivator for change, expressing concern about both loss of students from STEM and support for helping students achieve satisfactory learning outcomes (Table 9).

Table 8. Examples of comments identifying students as an obstacle

- “The students seem to be very happy with my teaching for the past many years! Why try to fix something that is not broken?”

- “The presence of smarter students.”

- “It is hard to guess (or understand) students' expectations.”

Table 9. Examples of comments identifying students as a motivator

- “Unhappiness from the current students about my teaching.”

- “I'm motivated because I see kids dropping out of my class and transferring out of STEM fields in droves. And I see even lower retention rates in subsequent courses because what I teach does not adequately prepare them to be successful in other classes.”

- “I regularly make changes to my teaching practice to try to improve student learning outcomes.”

Finally, about one in 10 described peers as an obstacle, which included both an absence of peer support and also active peer opposition to teaching innovation (Table 10). Just as many cited peers as a potential motivator, including the presence of supportive colleagues and the opportunity to be part of a community of practice in which teaching challenges and strategies are discussed (Table 11).

Table 10. Examples of comments identifying peers as an obstacle

- “Pushback from colleagues in my department who teach similar courses. There is a fear of rocking the boat and having faculty invest more effort in their teaching.”

- “Old fogies who say ‘it's worked for 40+ years’ so why should we change it now (while blatantly ignoring evidence that improvement is needed).”

- “Trying to change my practices alone.”

Table 11. Examples of comments identifying peers as a motivator

- “Having more people around me trying different practices and having a forum to discuss challenges and strategies to deal with them.”

- “Co-teaching a course with someone using ‘active teaching.’”

- “I think the desire to improve the learning environment without pushback from colleagues is enough of a motivation.”

Across these points of intervention, institutional concerns appear frequently as both motivators and obstacles to innovation, but it is noteworthy that more than half of respondents did not directly identify institutional factors when asked to identify the most significant obstacles or motivators to change. It is also noteworthy that concern for students stands out as a motivator far more often than student characteristics are identified as obstacles and that personal factors stand out as a motivator for change far more often than as an obstacle.

Discussion

Three patterns stand out in this analysis of faculty comments as particularly relevant for STEM education reform advocates and educational developers: (1) motivations and obstacles to change vary widely among faculty members, (2) faculty conceptualizations of “change” do not necessarily align with those of STEM reform advocates and educational developers, and (3) “evidence” does not mean the same thing to all faculty that it means to advocates of evidence-based teaching.

There Is No Singular Answer to the Question, “What do faculty think?”

As educational developers and STEM education reform advocates work to create educational interventions that are both pedagogically sound and recognized by faculty as relevant, it will be essential to remain aware of variability that exists among faculty perceptions. Appeals to concern for student attrition from STEM are likely to engage some faculty more than others, for example, and some will continue to consider changes in their teaching even though they work under the same institutional conditions that others see as obstacles to change.

Awareness of this variability is even more important because surface-level similarities may suggest a higher degree of uniformity than actually exists; faculty tend to agree more about where change is needed than about what needs to change. When considering points of intervention, for instance, it may appear that there is wide agreement about the need for institutional change, but this perceived agreement reflects concern about numerous distinct issues, including demands of research, department politics, workload policies, incentive structures, need for instructional support, and lack of recognition for past improvement efforts. Faculty who hold these views agree that institutional change is needed, but none are asking for the same institutional change.

This variability is further complicated by the fact that views of some faculty members are at odds with views of others. For example, some report that experience teaching a class is an advantage when trying to improve it, but others report previous experience teaching a course as a reason not to consider changing it. Some insist on seeing evidence as a basis for making change, whereas others see it as a burden to be asked to provide evidence. Some are motivated to change by student ratings, and others suggest student ratings are a reason not to risk change.

In cases where faculty express greater agreement, their consensus isn’t necessarily tied to advancing the quality of teaching or learning. For example, some suggest that teaching innovations are a matter of making students “happy.” Linking innovation to student happiness may motivate change (“Unhappiness from the current students about my teaching”) or eliminate the need for it (“The students seem to be very happy with my teaching . . .! Why try to fix something that is not broken?”). Others described change in terms of doing what students want. One reported making changes based on student ratings but added, “If I change it one way, the following year’s students want it changed back again.” Another noted, “It is hard to guess (or understand) students’ expectations.”

Finally, although faculty responses reflect a wide range of varying concerns, it is noteworthy that the majority do not describe motivations for change identified by much of the STEM education reform literature. Only two respondents identify concerns about equity or about retention of students in the major as motivating factors, although this concern has long been central to STEM education researchers (Allen-Ramdial & Campbell, 2014; Carnevale & Strohl, 2013; Estrada et al., 2016). No respondents referred to related concerns about STEM workforce development (National Academies of Sciences, Engineering, and Medicine, 2016). There were no references to development of students’ scientific reasoning or higher order thinking as reasons to consider changing classroom practices (Crowe et al., 2008; Holmes et al., 2015; Wieman & Gilbert, 2015). No one referred to being motivated by research on effective teaching practices (Freeman et al., 2014; National Research Council, 2015; Wieman, 2017) or by their own scholarly interest in discipline-based educational research (Dewar et al., 2018; Mulnix, 2016; National Research Council, 2012).

We hesitate to draw conclusions based on the absence of evidence, and the nature of the study does not let us determine whether this pattern reflects particular characteristics of those who chose to respond to this survey, the institution where the study was conducted, or the larger community of STEM faculty. We have no basis for claiming that faculty are unconcerned about the issues identified in these bodies of literature, only that issues and arguments raised in the STEM education literature, which are well-known to us as reform advocates and educational developers, are not identified as primary motivators by most STEM faculty who responded to the survey.

In addition to this variability among faculty perceptions, we observe two other patterns in ways that faculty describe motivations and obstacles that may shape their receptiveness to STEM education reform and educational development efforts.

Faculty Generally Do Not Conceptualize Change in the Same Ways That STEM Education Reform Advocates and Educational Developers Typically Do

Many comments reflect the theme of change suggested by the question prompts, “What would motivate you to make changes . . .?” and “What do you see as the most significant barriers to changing . . .?” However, it is clear in many comments that respondents associate change with characteristics that are not necessarily what STEM education reform advocates or educational developers intend when we promote change. Table 12 provides examples of faculty comments, some of which are reiterated from earlier tables, that reveal reactions to the word change and convey a number of underlying assumptions:

- Change is huge and requires tremendous effort.

- The cost of change is prohibitive.

- The benefits of change are limited.

- The status quo is beneficial for students and efficient for faculty.

By drawing attention to this pattern, we are not necessarily suggesting that faculty have no reason to think in these ways. However, these comments are worth noting because they suggest that, for faculty members whose perceptions are shaped by these assumptions, the potential value of considering change may not be self-evident.

Table 12. Examples of comments revealing assumptions about change

- “Time involved in making dramatic changes is huge and would not be seen by my department as a good choice of how to spend time that should be used for research projects. Also, I am not convinced such changes would benefit the students.”

- “It will take a tremendous amount of time to revamp the courses I teach. . . . it's easier to go with the status quo.”

- “It is difficult with the teaching load and other requirements to invest in changing something for a small level of improvements over traditionally successful modes of teaching that are efficient for the faculty.”

- “You are assuming that innovation is good. The best innovation is to strip away all fluff. Teach by example and . . . drill.”

- “The main barrier for me is the activation energy to change my teaching practice . . . most of the better students learn no matter which teaching strategy is employed and are turned off by ‘clicker questions.’”

“You keep using that word …”

A similar pattern in faculty responses recalls the memorable observation of Inigo Montoya, “You keep using that word, but I do not think it means what you think it means” (Scheinman & Reiner, 1987). A number of faculty members raised the issue of evidence in their comments. For these faculty members, having evidence for the benefits of change is identified as a motivator for adopting a teaching innovation, and lack of evidence for the benefits of change is seen as a barrier. However, it is not clear in these comments what would be considered appropriate or satisfactory evidence (Table 13).

Table 13. Examples of comments referring to evidence

- “Most important to me is to have evidence that new techniques actually work. For example, I have yet to see a proper double-blind study that actually proves that flipping a class produce[s] a significantly better learning result than the traditional lecture.”

- “Strong evidence that my practices do not work well.”

- “A belief that it would actually affect learning outcomes sufficiently to be worth the effort. The papers I read on so-called innovative teaching methods, most of which have been around for decades if not centuries, indicate only small gains . . . I do not believe the difference is large enough to warrant the extra effort the faculty member had to put in.”

- “What worries me . . . are people who [are] chasing fads about teaching. This typically does not happen in [my field], but seems to be the province of people in education.”

- “I have thought about the flipped classroom and seen numerous very negative student evaluations on that method. For me to do that, I would basically feel like I was not doing the job I am being paid to do in educating our students in a manner that works for them.”

Comments like these raise questions about the nature and quality of evidence that are likely to influence faculty interpretation of claims that teaching and learning initiatives are evidence based:

- What do we consider to be legitimate evidence?

- What would constitute sufficient gain?

- Where is the burden of proof?

- Positive student evaluations = doing what works = doing my job = evidence that change isn’t needed

Like earlier comments on the nature of change, these comments suggest that faculty members do not necessarily conceptualize evidence in the same ways as do educational developers or STEM education reform advocates, and it is understandable that faculty members who hold perceptions similar to those expressed in Table 13 are likely to question the value of evidence (as they understand and use the term) as a basis for making decisions about their teaching practices. Many resources exist to support faculty adoption of evidence-based teaching practices, but a faculty member who is holding out for a “proper double-blind study” would not necessarily be persuaded by evidence emerging from classroom-based formative assessments (Angelo & Cross, 1993; Handelsman et al., 2007), thorough documentation of classroom practices (American Association for the Advancement of Science, 2012), or course-based studies showing improved outcomes for students traditionally underrepresented in STEM (Freeman et al., 2014; Haak et al., 2011; Snyder et al., 2016).

These observations have prompted us to formulate a working definition of evidence-based teaching that can be used as a basis for establishing a common frame of reference in our work with faculty. Because many STEM faculty members do not appear to share our conceptualizations of evidence or change, we believe explicitly stated definitions are essential to increase the likelihood that faculty members will understand what we are proposing when we advocate for implementation of evidence-based teaching. Thus, while there is in fact a basis in research for practices identified as evidence based, we emphasize that evidence-based teaching actually relies on real-time evidence collected through teaching (i.e., it is not simply a matter of adopting methods that others have established with evidence). We also highlight the value of examining classroom practices and their effects on student learning by using evidence collected through active classroom engagement, formative assessments, and frequent monitoring of student learning through low-stakes assignments (Table 14).

Table 14. Working definition of evidence-based teaching

Evidence-based teaching refers to a broad set of classroom practices that have been established by research to support student learning, many of which are guided by the faculty member’s ongoing collection of evidence of student learning in their own classrooms as a basis for decisions about further teaching. Because evidence-based teaching relies on real-time review of student learning in a course, it tends to be better supported by active classroom engagement, frequent monitoring of student learning through low-stakes assignments and activities, and regular formative assessments of student progress.

Implications for STEM Education Reform Advocates and Educational Developers

Although there are limitations to conclusions that can be drawn from survey responses in a 500-character text box, we see important lessons that can be gleaned from this analysis and further questions for STEM education reform advocates and educational developers to explore.

Recognizing the Diversity of Faculty Perceptions

First, for those of us who are engaged in supporting dissemination of tested strategies for improving teaching and learning in STEM, these findings are a challenge to better understand and address the diverse values, motivations, and obstacles to change perceived by intended users of these strategies. This study suggests that even among STEM faculty at a research university (a group that might be seen as sharing many common interests), no single approach is likely to foster uniformly broad engagement in consideration of change.

The effects of this diversity of perceptions must also be considered. To cite one example, many faculty members expressed concern about how teaching is evaluated and agree that they dislike student ratings, but their reasons differ, and not all of those reasons reflect accurate understandings of what student ratings are designed to accomplish or how they are used at the institution. To what extent is this lack of shared understanding about changes that are needed, in and of itself, an obstacle to meaningful consideration of change?

Second, STEM faculty may be strongly committed to the examination of evidence in their disciplinary fields of inquiry, but a number of respondents expressed skepticism about the potential value of using evidence to guide their classroom practices. If faculty members are relying on their own disciplinary understandings of the nature and quality of evidence, it is understandable that they might see limited applicability to pedagogical initiatives, and it is our responsibility to explicitly articulate principles of evidence-based practice as they are defined within the domains of educational development and STEM education reform. We offer one definition (Table 14) as a step toward operationalizing terms and clarifying intentions in our advocacy for evidence-based teaching; others may want to further refine or expand this definition, but all of us should recognize the need to define our terms when we seek to engage STEM colleagues who appear to share a common vocabulary but who might not share our assumptions about the concepts represented by the words that we use.

Strategically Supporting Change

Reported perceptions of educational development offer another area for further consideration. For example, comments on the institution’s Center for Teaching and Learning were uniformly positive, but those who requested more professional development tended to do so in terms of discipline-focused development or peer mentoring, which suggests that some may be interested in exploring change, but they prefer to seek help within their home departments. Developers and advocates, regardless of their location in the institution, would benefit from examining implications of these perceptions for how to situate and represent opportunities to support teaching innovations.

For educational developers, it should not be surprising that a number of respondents report being motivated by concern for students or by personal commitments to pursuing excellence in their teaching; faculty members who hold these perceptions are well represented among the habitual innovators who regularly take advantage of educational development resources and opportunities. However, we also see in their comments that many of these respondents do not believe their views are widely shared among colleagues. While educational developers and STEM education reform advocates continue supporting these habitual innovators in their own teaching, can we also seek to identify forms of organizational support that might help them contribute to wider dissemination of tested teaching strategies within their departments?

Engaging the Institution

Although this analysis has drawn attention to variability among faculty views, it is worth noting that faculty comments pinpointed areas for institutional change that may be strategically important for intervention or further investigation. For instance, respondents expressed a desire for clear and consistent messages from administrators about the value of teaching, which suggests that an institution might be able to facilitate progress by conducting an inventory of its policies and practices related to how teaching is valued, and then examining their clarity and consistency across central, collegiate, and departmental leadership. A second example can be drawn from the observation that many respondents appear to associate change with large-scale interventions and prohibitive costs, which suggests that an institution might be able to facilitate progress by showcasing tested models that demonstrate the feasibility and potential value of small-scale, low-cost change initiatives (e.g., Freeman et al., 2014; Haak et al., 2011).

Considering the range of institutional conditions identified by faculty as primary motivators or obstacles to change, it will also serve us well to more systematically identify which institutional conditions have the greatest potential to influence implementation of change. The challenge for institutions is not that strategies for improving teaching and learning in STEM are unknown or untested; educational practices that reliably contribute to student success in STEM are well established and readily accessible (e.g., American Association for the Advancement of Science, 2012; Austin, 2018; National Research Council, 2015). Rather, the challenge is to identify and address ways in which structural factors within our institutions are creating conditions that facilitate or inhibit propagation of these tested strategies.

Gaining a Deeper Understanding of Faculty Perceptions of Motivations and Obstacles to Change

Further studies that do not rely solely on qualitative data may shed more light on the distribution and relative weight of motivations and obstacles that emerged through this study. Additional qualitative studies that allow for more extended engagement with respondents through interviews or focus groups may be able to probe more deeply into motivations and obstacles identified by faculty members and to resolve incompatible or contradictory perceptions.

It will also be valuable to examine ways in which diversity of perceptions reflects diversity of respondents. We have not examined differences in faculty perceptions in terms of their demographic characteristics, faculty rank, or field within STEM, all of which could arguably play a role in their lived experiences as faculty members and their perceptions of motivations and obstacles to change. A similar examination of faculty perceptions at multiple institutions could offer valuable insights on the extent to which their perceptions are shaped by particular institutional contexts.

Although there is still much to learn, the findings of the present study provide an initial snapshot of the range and variability of perceptions held by faculty that may be influencing their receptiveness to adopting teaching innovations and their willingness to become users of the research-based instructional strategies that STEM education reform advocates and educational developers are seeking to propagate and institutionalize.

Acknowledgments

The authors would like to acknowledge research team members Jean Florman, Jane Russell, Sam Van Horne, and Ashlie Wrenne for their contributions to the larger research project on which this analysis is based. This study is based upon work supported by National Science Foundation Grant No. 1432728. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the National Science Foundation.

Biographies

Wayne Jacobson (PhD, University of Wisconsin–Madison) is Assessment Director in the Office of the Provost at the University of Iowa. Scholarly interests include institutional assessment, organizational change, and equity and inclusion in higher education.

Renee Cole (PhD, University of Oklahoma) is a Professor of Chemistry at the University of Iowa. Her research focuses on how students learn chemistry and how that guides the design of instructional materials and teaching strategies. She is also interested in examining how to effectively translate discipline-based education research to the practice of teaching, thus increasing the impact of this research and improving undergraduate STEM education.

References

- Allen-Ramdial, S. A., & Campbell, A. G. (2014). Reimagining the pipeline: Advancing STEM diversity, persistence, and success. BioScience, 64(7), 612–618. https://doi.org/10.1093/biosci/biu076

- American Association for the Advancement of Science. (2012, December 17-19). Describing & measuring undergraduate STEM teaching practices. AAAS. https://live-ccliconference.pantheonsite.io/wp-content/uploads/2013/11/Measuring-STEM-Teaching-Practices.pdf

- Angelo, T. A., & Cross, K. P. (1993). Classroom assessment techniques: A handbook for college teachers. Jossey-Bass.

- Association of American Universities. (2011). Undergraduate STEM Education Initiative. https://www.aau.edu/education-community-impact/undergraduate-education/undergraduate-stem-education-initiative-3

- Association of American Universities. (2017). Progress toward achieving systemic change: A five-year status report on the AAU Undergraduate STEM Education Initiative. https://www.aau.edu/education-community-impact/undergraduate-education/undergraduate-stem-education-initiative/progress

- Austin, A. E. (2018). Vision and change in undergraduate biology education: Unpacking a movement and sharing lessons learned. American Association for the Advancement of Science. https://live-visionandchange.pantheonsite.io/wp-content/uploads/2018/09/VandC-2018-finrr.pdf

- Benaquisto, L. (2008). Codes and coding. In L. M. Given (Ed.), The SAGE encyclopedia of qualitative research methods (pp. 86–88). SAGE Publications. https://doi.org/10.4135/9781412963909.n48

- Carnevale, A. P., & Strohl, J. (2013). Separate and unequal: How higher education reinforces the intergenerational reproduction of white racial privilege. Georgetown Public Policy Institute. https://cew.georgetown.edu/cew-reports/separate-unequal/#full-report

- Creswell, J. W. (2012). Qualitative inquiry & research design: Choosing among five approaches (3rd ed.). SAGE Publications.

- Crowe, A., Dirks, C., & Wenderoth, M. P. (2008). Biology in bloom: Implementing Bloom's Taxonomy to enhance student learning in biology. CBE-Life Sciences Education, 7(4), 368–381. https://doi.org/10.1187/cbe.08-05-0024

- Dewar, J. M., Bennett, C. D., & Fisher, M. A. (2018). The scholarship of teaching and learning: A guide for scientists, engineers, and mathematicians. Oxford University Press.

- Estrada, M., Burnett, M., Campbell, A. G., Campbell, P. B., Denetclaw, W. F., Gutiérrez, C. G., Hurtado, S., John, G. H., Matsui, J., McGee, R., Okpodu, C. M., Robinson, T. J., Summers, M. F., Werner-Washburne, M., & Zavala, M. (2016). Improving underrepresented minority student persistence in STEM. CBE-Life Sciences Education, 15(3), es5. https://doi.org/10.1187/cbe.16-01-0038

- Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences, 111(23), 8410–8415. https://doi.org/10.1073/pnas.1319030111

- Froyd, J. E., Henderson, C., Cole, R. S., Friedrichsen, D., Khatri, R., & Stanford, C. (2017). From dissemination to propagation: A new paradigm for education developers. Change: The Magazine of Higher Learning, 49(4), 35–42. https://doi.org/10.1080/00091383.2017.1357098

- Haak, D. C., HilleRisLambers, J., Pitre, E., & Freeman, S. (2011). Increased structure and active learning reduce the achievement gap in introductory biology. Science, 332(6034), 1213–1216. https://doi.org/10.1126/science.1204820

- Handelsman, J., Miller, S., & Pfund, C. (2007). Scientific teaching. Macmillan.

- Henderson, C., & Dancy, M. (2011). Increasing the impact and diffusion of STEM education innovations [White paper]. Characterizing the Impact and Diffusion of Engineering Education Innovations Forum, New Orleans, LA, United States.

- Holmes, N. G., Wieman, C. E., & Bonn, D. A. (2015). Teaching critical thinking. Proceedings of the National Academy of Sciences, 112(36), 11199–11204. https://doi.org/10.1073/pnas.1505329112

- Miles, M. B., Huberman, A. M., & Saldaña, J. (2014). Qualitative data analysis: A methods sourcebook (3rd ed.). SAGE Publications.

- Mulnix, A. B. (2016). STEM faculty as learners in pedagogical reform and the role of research articles as professional development opportunities. CBE-Life Sciences Education, 15(4), es8. https://doi.org/10.1187/cbe.15-12-0251

- National Academies of Sciences, Engineering, and Medicine. (2016). Barriers and opportunities for 2-year and 4-year STEM degrees: Systemic change to support students' diverse pathways. The National Academies Press. https://doi.org/10.17226/21739

- National Research Council. (2012). Discipline-based education research: Understanding and improving learning in undergraduate science and engineering. The National Academies Press. https://doi.org/10.17226/13362

- National Research Council. (2015). Reaching students: What research says about effective instruction in undergraduate science and engineering. The National Academies Press. https://doi.org/10.17226/18687

- Scheinman, A. (Producer), & Reiner, R. (Producer & Director). (1987). The princess bride [Film]. 20th Century Fox.

- Snyder, J. J., Sloane, J. D., Dunk, R. D. P., & Wiles, J. R. (2016). Peer-led team learning helps minority students succeed. PLoS Biology, 14(3), e1002398. https://doi.org/10.1371/journal.pbio.1002398

- Stanford, C., Cole, R., Froyd, J., Friedrichsen, D., Khatri, R., & Henderson, C. (2016). Supporting sustained adoption of education innovations: The Designing for Sustained Adoption Assessment Instrument. International Journal of STEM Education, 3(1), 1. http://doi.org/10.1186/s40594-016-0034-3

- Strauss, A., & Corbin, J. M. (1997). Grounded theory in practice. SAGE Publications.

- Walter, E. M., Henderson, C. R., Beach, A. L., & Williams, C. T. (2016). Introducing the Postsecondary Instructional Practices Survey (PIPS): A concise, interdisciplinary, and easy-to-score survey. CBE-Life Sciences Education, 15(4), ar53. https://doi.org/10.1187/cbe.15-09-0193

- Wieman, C. (2017). Improving how universities teach science. Harvard University Press.

- Wieman, C., & Gilbert, S. (2015). Taking a scientific approach to science education, part I—Research. Microbe, 10(4), 152–156.