Cultivating and Sustaining a Faculty Culture of Data-Driven Teaching and Learning: A Systems Approach


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Please contact [email protected] to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

Abstract

A prominent goal of colleges and universities today is to enact data-driven teaching and learning. Faculty clearly play a key role, and yet they tend to have limited time, a lack of training in assessment or education research, and few incentives for engaging in this work. We describe a framework designed to address the practical and cultural aspects of these challenges via a cycle of educational development and support: motivate, educate, facilitate, disseminate. We illustrate this systems approach with concrete examples and conclude with lessons learned from our experiences that should translate to a variety of institutional contexts.

Keywords: data-driven teaching and learning, assessment, educational research, educational development, systems approach

Faculty at research-intensive universities can be innovators not only in their scholarly work but also in their teaching. As educational developers often discover, many faculty try something new in their courses semester to semester, and some engage in substantial transformations. In most cases, these changes are not informed by peer-reviewed education research. Instead, they are usually driven by intuition and then evaluated and refined based on student evaluations of teaching rather than direct measurements of learning outcomes, if refined based on data at all. This is not surprising, given the challenges faculty face: limited time, lack of training in assessment or education research, and few incentives to systematically study and iteratively improve their teaching. And yet there is such potential to enhance student outcomes if only universities could make data-driven approaches to education standard practice across the curriculum.

Data-driven approaches to education take two forms. First, by leveraging data collected by researchers in other contexts, one might adopt a teaching strategy informed by a rigorous body of peer-reviewed, empirical research on learning. For example, an instructor might infuse active learning into a lecture-based course after reading about the overwhelming evidence that students learn better and exhibit lower DFW rates when learning this way (Freeman et al., 2014). Second, one might engage in teaching as research (TAR), collecting new data on student outcomes within one’s own course. These two forms are not mutually exclusive. Imagine an instructor implementing active learning via two different research-backed techniques, such as posing multiple-choice questions for some topics and open-ended questions for other topics. She might wonder which was more efficacious for promoting students’ critical thinking. Rather than relying solely on how she perceived the two techniques’ efficacy or on past research conducted in a different context, she might compare students’ critical thinking performance on topics taught via the two different methods and use the results to guide future teaching.

Arguably, colleges and universities today are well positioned to make great strides toward data-driven education, especially compared to 20 years ago. For example, when the influential Boyer Commission Report (1998) advocated for significant shifts in undergraduate education practices at research universities, data-driven teaching was not among the recommendations. Today, there is an increased focus on accountability and student outcomes, and higher education institutions are subject to this public scrutiny (e.g., Arum & Roksa, 2011), even when some critiques may be questioned (e.g., see Astin, 2011). Hence, many universities are placing greater value on the undergraduate education component of their mission and are taking deliberate action to improve student outcomes (Kuh & Ikenberry, 2018). Additionally, whereas the Boyer Report’s ninth recommendation (“Change Faculty Reward Systems”) might have seemed almost impossible when the report was first published, the last decade has seen some notable changes in how teaching is considered in the tenure review process (Miller & Seldin, 2014). Many of these changes involve increased use of data (i.e., direct measures of learning outcomes) and/or evidence-based practices in the teaching-related components of faculty evaluation. For example, between 2000 and 2010, the percentage of deans reporting the use of direct observations of teaching increased from 40% to 60%, and reports of conducting research-based evaluation of teaching materials increased from 39% to 43%. Furthermore, there have been significant advances in research on teaching and learning in higher education.

Whereas educational research from 20 years ago mainly involved K–12 classrooms or laboratory studies of the “typical college sophomore,” today we have robust empirical results on college students’ learning in actual courses. This work is being conducted not only by education researchers but also by faculty members across various disciplines who are pursuing the scholarship of teaching and learning (SoTL) and discipline-based educational research (DBER). The new generation of conferences and journals dedicated to SoTL and DBER is consistent with the Boyer Report’s recommendation that professional meetings should take up a focus on new ideas and approaches in undergraduate education. As a result, faculty now have access to a rich literature on how to rigorously conduct SoTL and DBER (e.g., Bishop-Clark & Dietz-Uhler, 2012; Chick, 2018). The benefits of SoTL and DBER for faculty teaching and professional development are also well documented (e.g., Fanghanel, 2013; Kreber, 2013; Trigwell, 2013). Moreover, centers for teaching and learning (CTLs) have increased their focus on evidence-based practices as they support the professional development of faculty (Beach et al., 2016; Haras et al., 2017; Hines, 2015; POD Network, 2018a, 2018b).

Of course, a considerable gap remains between where universities are now and the vision of every university functioning as a learner-focused, data-driven educational system. What are the fundamental features of such a system? First, the institutional culture values collecting and using data to improve learning outcomes. Second, the institutional infrastructure enables faculty to collect and use learning data (quantitative and/or qualitative) as a feasible part of their regular practice.

In this article, we describe a framework aimed at promoting change in both the culture and practice of teaching, and we illustrate this framework in action, using our home institution as a case study. We posit that CTLs are a natural hub for this work, and we highlight how CTLs can connect with university administration and other units on campus, such as the registrar and institutional review board (IRB), to strengthen the net effect. We conclude by sharing data regarding the impacts of implementing this framework, a set of potentially transferable lessons learned, and some future directions as we continue to iterate.

Framework

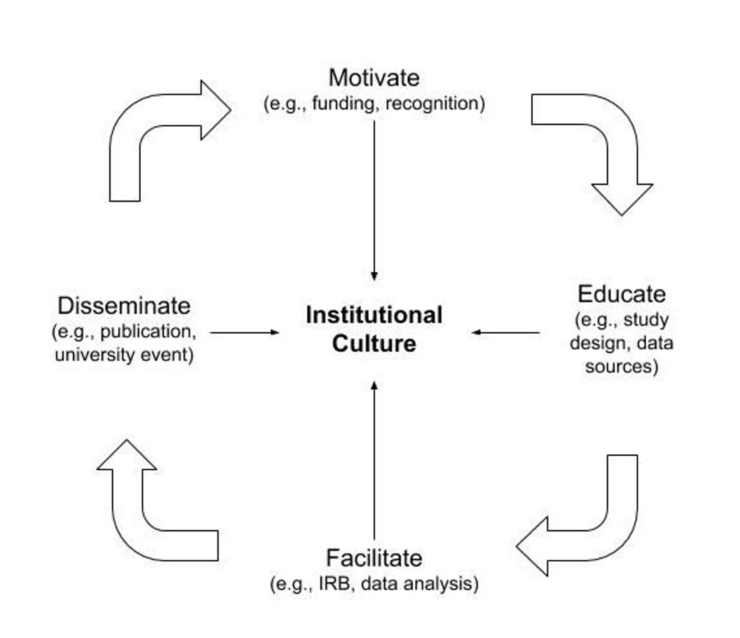

To help shift both educational culture and practice toward data-informed teaching, we posit a framework with four steps that interact in a mutually supporting cycle (Figure 1, hereafter MEFD framework): (a) motivate faculty to collect data as they teach; (b) educate faculty so they are comfortable and competent at systematically collecting data in their own courses; (c) facilitate this work by providing human and technical support to reduce the workload involved in data collection and analysis; and (d) promote venues to disseminate the results.

Figure 1. A Framework for Cultivating and Sustaining a Faculty Culture of Data-Driven Teaching and Learning

Two steps in this framework, motivate and disseminate, focus on cultural aspects by promoting the value of this work, whereas the other two, educate and facilitate, focus on practical aspects by lowering the barriers to faculty participation. Jointly addressing culture and practice is intentional and based on the notion that moving the needle for either one in isolation may not be sufficient or lead to sustained results (see research regarding diffusion of innovations that suggests adoption of a new practice depends on both its practical advantages over standard practice and its compatibility with values and beliefs; Rogers, 2003). Instead, shifts in both culture and practice positively interact. The MEFD framework aims to produce small shifts in both culture and practice, relying on their mutually supportive interactions to produce a bigger overall effect.

Miller-Young et al. (2017) discuss a related conceptual model regarding faculty adoption of SoTL. They describe a continuum of institutional contexts varying in two dimensions: an institution’s explicit support for SoTL (e.g., incentives, policies, dedicated resources) and its tacit faculty culture regarding SoTL (e.g., how faculty engage in, discuss, or value SoTL). Each dimension represents a continuum from emerging to established institutional support or culture. These dimensions combine to influence opportunities and challenges regarding faculty adoption of SoTL in a particular institutional context. When both institutional support and culture are well established (see Miller-Young et al., 2017, Figure 1, quadrant 4), they argue conditions are “optimal” for data-driven teaching to flourish as standard practice. Similarly, the components of our MEFD framework can work independently or in concert to cultivate institutional support for and a culture of data-driven pedagogy.

A key feature of our framework is that it is set up as a cycle, so that small steps, when iteratively applied, can accumulate to make a significant difference. Thus, one benefit of applying this framework is that it is possible to make headway while relying on limited resources at any point in time, as long as ongoing work keeps the cycle going. No single step of the framework is a linchpin. Instead, each is part of a coordinated system of programs, supports, and incentives that work together.

- When we motivate faculty to see that data can be valuable—to them, to their students, and to the administration—they will be more likely to consider collecting it;

- then, if educational opportunities are targeted to faculty needs regarding how to collect valid and reliable data, they will be more likely to engage in those opportunities;

- then, having learned enough to give it a try, faculty may start collecting data and be ready to take advantage of support that facilitates the work;

- if/when that work leads to actionable results, that is, findings that guide future teaching practices, faculty will be poised to disseminate what they learned among colleagues in their discipline, at their institution, and beyond;

- the act of dissemination then influences institutional culture by fostering conversations on evidence-based teaching strategies and leveraging the value associated with discovery for conceiving teaching as a research- and community-based activity;

- and contributing new results and exchanging strategies among colleagues is likely to motivate both the faculty sharing their results and their peers to join in the process. And so the cycle continues . . .

Stepping through this cycle illustrates another key feature of the framework. Each step specifies a goal but does not prescribe a particular strategy for how to achieve that goal. Each step in the framework may be implemented in a variety of ways, across institutions or even across iterations within an institution. We believe this creates a more flexible and sustainable approach because it allows for modular and evolving strategies that meet changing needs and resource constraints. In this way, effort can focus on filling gaps and refining implementation strategies rather than constructing an entire system at once (and inevitably needing to re-construct the system as the institutional context evolves).

CTLs are a natural hub to support and promote a campus-wide shift toward data-driven teaching. Cook and Kaplan (2011) illustrate the many ways that CTLs can position themselves to provide effective professional development on teaching for both current and future faculty. Through one-on-one consultation services, workshops, teaching orientations, learning communities, and more, CTLs can help disseminate evidence-based teaching approaches by distilling education research into practical strategies that faculty can readily adopt and adapt. Many CTLs already do this, reaching substantial numbers of instructors and future faculty. We argue that CTLs can also readily plug into the MEFD framework, adjusting their programs and services to actively support and/or lower barriers to data-informed teaching. Below, we highlight numerous ways CTLs can motivate, educate, facilitate, and disseminate to advance a culture of data-informed teaching.

Carnegie Mellon University as a Case Study

Carnegie Mellon University (CMU) is a research-intensive, private institution with approximately 14,000 graduate and undergraduate students and 1,400 faculty. Roughly 1,000 faculty teach at least one course annually. CMU has a long-standing CTL—the Eberly Center for Teaching Excellence & Educational Innovation. The Eberly Center has a history of providing seminars, workshops, and one-on-one teaching consultations. Annually, half of the Eberly Center’s work supports faculty and staff with instructional responsibilities. The other half supports graduate students. During the 2018–2019 academic year, the CTL served more than 600 faculty and over 600 graduate students. Services included consulting individually with 362 faculty and staff members with educational responsibilities, representing 280 courses and 342 instructor-course combinations, approximately one in three instructors teaching courses. These clients came from all seven CMU schools/colleges and all faculty ranks and appointment types.

The Eberly Center is currently part of the provost’s office, reporting directly to the vice provost for education. The CTL employs staff specializing in evidence-based pedagogy, educational technology, and/or formative assessment of student outcomes. Currently, it employs 23 full-time staff, including logistical support personnel. However, as recently as six years ago, the CTL had eight employees. One should note that we piloted many approaches described below with fewer staff. In part, these successful pilots are responsible for the Eberly Center’s rapid growth.

A prominent goal of our CTL over the past several years has been supporting faculty to regularly reflect and act on learning data collected in their own courses. We started with faculty who were already making notable changes in their courses and curious about the efficacy of those changes. Our initial efforts attempted to reduce barriers to leveraging data to inform and refine these teaching innovations. We then expanded our target audience to include those who want to understand where student learning currently stands in order to devise targeted interventions.

In the next four sections, we describe some of the activities we established to implement the four steps of our framework. Each section begins with a general explanation of the corresponding step in the framework, including possible implementation strategies. Then, each section highlights one or more of the specific strategies we have used, illustrated through actual scenarios.

Motivate

Given Competing Demands on Faculty Time, What Incentives Might Persuade Faculty to Engage in Data-Informed Teaching and Course Design?

The first component of the MEFD framework involves motivating faculty to collect data as they teach. There are multiple ways to approach this, such as through financial and other direct incentives or activities that implicitly communicate the value an institution ascribes to collecting and using data in one’s teaching. A common financial incentive involves creating a faculty grants program (e.g., Cook et al., 2011) or providing special stipends and/or teaching relief for projects that involve collecting and using data to improve education. Another approach involves changing the reward structure, such as criteria for promotion and tenure and/or education-related awards. Though effective, these strategies can be tricky and costly to implement well. We have explored modest- and moderate-cost versions of these strategies in combination with appealing to faculty members’ intrinsic motivations: to understand what their students are learning well (and not), to ascertain whether their recent teaching innovation actually made a difference, and to guide their future teaching efforts.

In 2014, CMU’s administration launched a Faculty Seed Grants Program (ProSEED) that encouraged faculty who wanted to innovate in their teaching via technology-enhanced learning to also collect data measuring the innovation’s impact. Once or twice per year, nine faculty projects, on average, have been funded. Note that this was not just a grants program for developing teaching innovations, as has become somewhat common across higher education, but one focused on (a) developing evidence-based teaching innovations and then (b) leveraging data to iteratively improve the teaching innovations. As such, selection criteria evaluate the strength of evidence suggesting that the innovation will enhance learning (Does previously conducted education research suggest it will work?) and the quality of the data and assessment plan (Are direct measures of student learning and performance included, rather than just surveys of students’ perceptions?; see Appendix). For each award, the university provides $15,000 to be spent directly by the faculty for summer support or materials for themselves and/or graduate students working on the project. An additional $15,000 may be “spent” on technical, pedagogical, and data support personnel from the CTL to help design a study, develop appropriate measures, analyze data, and so forth. In this sense, not only do the selection criteria promote data-driven innovation, but awardees are expected to leverage the in-kind, matching support of personnel resources to collect and analyze data relevant for assessing and improving their innovations. Over time, we have experimented with proposal writing help of various kinds, including a grant writing workshop, online resources, and ultimately pre-submission consultations while faculty colleagues are envisioning their projects.

Based on reviewer scores, the quality of grant proposals has risen significantly in terms of the proposals’ assessment plans. Specifically, we compared all proposals submitted in spring 2014 and fall 2018, before versus after MEFD framework implementation, respectively. On a 5-point scale, mean rubric scores for the criterion “collect and analyze data on student learning outcomes” were significantly higher in fall 2018 (M = 3.82) than spring 2014 (M = 3.12). A two-tailed t test suggested that the mean difference of 0.7 was significant, 95% CI [.168, 1.156], t (59) = 2.56, p < .05, d = .78. We tentatively attribute the observed change to concerted efforts to communicate the importance of direct measures of outcomes, above and beyond student self-reports and satisfaction surveys. Articulating a plan for collecting data to inform iterative refinement at key project milestones is required in the application and highlighted as a key award-selection criterion. Additionally, all proposal writing support mechanisms described above distribute resources to educate applicants regarding the pros and cons of potential data sources on student outcomes. Of course, we cannot rule out other causes for the observed increase. For example, we lack an appropriate comparison group to use as a control for ambient changes in proposal quality over time. We also acknowledge that reviewer scores have not been calibrated across years or raters. Thus, we simply interpret the positive change in rubric scores as suggestive and in the predicted direction.
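To make the statistics reported above more concrete, the following minimal Python sketch shows how a mean difference, a pooled-variance t statistic, and Cohen's d can be computed for two independent groups of rubric scores. The scores and the function name `pooled_t_and_d` are hypothetical and are not the study's data. The article does not state whether a pooled or Welch test was used, so the pooled form is an assumption here.

```python
import math
from statistics import mean, variance


def pooled_t_and_d(before, after):
    """Return (mean difference, t statistic, degrees of freedom, Cohen's d)
    for two independent groups, using the pooled-variance (Student) form."""
    n1, n2 = len(before), len(after)
    diff = mean(after) - mean(before)
    df = n1 + n2 - 2
    # Pooled variance weights each group's sample variance by its own df.
    pooled_var = ((n1 - 1) * variance(before) + (n2 - 1) * variance(after)) / df
    pooled_sd = math.sqrt(pooled_var)
    # Standard error of the difference between two independent means.
    t_stat = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    # Cohen's d expresses the difference in pooled standard deviation units.
    cohens_d = diff / pooled_sd
    return diff, t_stat, df, cohens_d


# Hypothetical rubric scores on a 5-point scale (NOT the study's data).
spring_scores = [2, 3, 3, 4, 3, 2, 4, 3]
fall_scores = [4, 3, 5, 4, 4, 3, 4, 5]

diff, t_stat, df, d = pooled_t_and_d(spring_scores, fall_scores)
print(f"difference={diff:.2f}, t({df})={t_stat:.2f}, d={d:.2f}")
```

A sketch like this reports an effect size alongside the significance test, which helps readers judge whether a statistically detectable change is also large enough to matter in practice.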

Another award program that our CTL administers is the Wimmer Faculty Fellows program. This program is also selective and application based. However, it focuses on junior faculty developing new courses or teaching strategies and offers a comparatively modest $3,000 stipend to up to five faculty annually. We have gradually increased the degree to which data collection is encouraged, expected, and required as part of the program. Currently, participants are required to submit an evaluation plan as part of their fellowship application and may request a consultation to inform their plan prior to submission. Each faculty fellow is paired with a CTL support team, including teaching, assessment, and educational technology consultants, to facilitate the design, implementation, and evaluation of their proposed project. Teaching consultants provide a required Small Group Instructional Diagnosis (see Finelli et al., 2008; Finelli et al., 2011) in each fellow’s course to gather mid-semester student feedback on how students are experiencing the new teaching approach in time to make adjustments on its implementation. Additionally, assessment consultants help fellows collect, analyze, and interpret at least one direct measure of student learning outcomes. Consequently, all fellows now use multiple sources of data to refine their projects, and some have engaged in full-on educational research projects focused on direct measures of student outcomes (e.g., Christian et al., 2019).

Money is not always available as an incentive, but an award program can still leverage prestige and/or special treatment to motivate faculty who are willing (with help) to collect data on student outcomes. For example, the CMU Teaching Innovation Award (TIA) recognizes faculty members’ educational innovations, based on both their transferability to other teaching contexts and effectiveness for student outcomes. While many teaching awards focus on lifetime achievement, the TIA recognizes innovations that are fine-grained in scope and scale within a specific course. Busy faculty do not have to overhaul an entire course to be recognized. Instead, the TIA celebrates small changes, such as a single assignment, classroom activity, or teaching technique, that result in measurable differences in student learning or engagement. Consequently, the award promotes a data-valuing culture of teaching among faculty who are too busy or not motivated for large-scale course transformations. In contrast, the aforementioned ProSEED and Wimmer Faculty Fellows programs provide special treatment in the form of in-kind staff support to motivate faculty engagement. CTL consultants provide support for identifying data sources, designing and implementing assessments, and analyzing and interpreting data. By providing critical just-in-time support to faculty who already have a full plate, this special treatment lowers barriers to engagement.

In addition to their motivating aspects, prestige and special treatment also impact other steps of the MEFD framework. Teaching awards can help disseminate transferable, high-impact strategies among faculty colleagues through award ceremonies and publicity. Likewise, special treatment in the form of in-kind support can help educate and facilitate, increasing faculty skills regarding classroom research and sustaining projects that would otherwise falter during implementation, respectively.

The Framework in Action (Motivation)

Sarah Christian is an assistant teaching professor in civil and environmental engineering who was selected to participate in our Wimmer Faculty Fellows program. Her project revised the cookbook-style labs in her Material Properties course. Exam data showed that students were not transferring concepts from labs to exams. She wanted help to implement a more effective teaching strategy and to assess how it was working. Her collaboration with our CTL started as a teaching consultation focused on inquiry-based learning (IBL) as the evidence-based pedagogy of choice (Pedaste et al., 2015). The consultation revealed that her primarily “cookbook” labs actually included a lightweight component of IBL, namely making a prediction and testing it by following a recipe-style procedure provided to students (referred to as “structured IBL” by Tafoya et al., 1980). She worked with a teaching consultant to build a more open-ended form of “guided IBL” (Tafoya et al., 1980) into one lab. This guided IBL approach was more tightly aligned with her learning objectives regarding application of concepts and thinking critically like an engineer. She also worked with an assessment consultant to (a) revise exam questions to best measure the learning outcomes associated with each of the three labs and (b) set up a study design in which the impact of structured versus guided IBL could be measured by comparing exam performance on questions assessing the lab content encountered via structured versus guided IBL. By enhancing assessments already embedded in the course, she could directly and rigorously measure learning without creating extra course-related work for her or her students. Students scored 10% higher on the exam questions from the guided IBL lab compared to the more cookbook, structured IBL labs (Christian et al., 2019). Christian is now adding guided IBL to the other labs and continuing to collect data for ongoing iterative refinement. She repeatedly communicated to her consultants that the Wimmer Fellowship motivated her to pursue a project that she would not have done on her own, primarily by providing a formalized support structure, recognition for her teaching efforts via multiple campus events and websites, and mentorship for engaging in SoTL.
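The comparison described in this scenario relies on tagging existing exam questions by the lab format that taught the underlying content and then comparing each student's performance across the two formats. The Python sketch below illustrates that idea under stated assumptions: the question-to-lab mapping, the student identifiers, and the scores are all hypothetical, and the published study may have used a different analysis.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical mapping of exam questions to the lab format that taught the
# assessed content (two structured IBL labs, one guided IBL lab).
QUESTION_CONDITION = {
    "q1": "structured",  # lab 1
    "q2": "structured",  # lab 2
    "q3": "guided",      # lab 3
    "q4": "guided",      # lab 3
}

# Hypothetical scores (fraction of points earned), keyed by anonymized student.
exam_scores = {
    "s01": {"q1": 0.5, "q2": 0.75, "q3": 0.75, "q4": 1.0},
    "s02": {"q1": 0.75, "q2": 0.5, "q3": 0.75, "q4": 0.75},
    "s03": {"q1": 1.0, "q2": 0.75, "q3": 1.0, "q4": 1.0},
}


def condition_means(scores_by_question):
    """Average one student's question scores within each lab format."""
    by_condition = defaultdict(list)
    for question, score in scores_by_question.items():
        by_condition[QUESTION_CONDITION[question]].append(score)
    return {condition: mean(values) for condition, values in by_condition.items()}


# Each student serves as their own comparison: guided minus structured.
paired_differences = []
for student, scores in exam_scores.items():
    means = condition_means(scores)
    paired_differences.append(means["guided"] - means["structured"])

mean_gain = mean(paired_differences)
print(f"mean within-student gain for guided IBL: {mean_gain:.3f}")
```

Because every student contributes scores under both formats, differences between students (for example, in prior preparation) cancel out of each paired difference, although differences in question difficulty across labs remain a possible confound.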

Educate

How Can Faculty Acquire (or Strengthen) the Skills Required to Navigate the Challenges of Designing, Conducting, and Interpreting Classroom Research?

A common goal of CTLs is to provide professional development for faculty members in their roles as educators. The educate step in our framework aims to design professional development that meets faculty where they are, in terms of interest and readiness to engage in data-informed teaching. CTLs often provide professional development through workshops and individual consultation with faculty colleagues. However, faculty members do not always partake. Many CTLs design their programming based on revealed interests of the faculty. For example, if there is an upswell of faculty asking about the “flipped classroom,” it is time to create workshops on this topic. It is also known from the institutional change literature that there is value in leveraging early adopters because, if they are opinion leaders, they can promote ideas and values to their peers (Rogers, 2003). These strategies have guided our approach.

A few years ago, at our CTL, we noticed a trend of numerous faculty consultations that involved issues around data collection and testing the impact of teaching innovations. So, in fall 2014, we hosted a faculty special interest group (SIG) on collecting data to inform teaching that gathered together faculty members who were interested in or already giving classroom-based research a try. Compared to developing a whole new workshop series around TAR, this discussion-based SIG not only required fewer of our resources but also gave us a chance to interact with faculty who were pushing this envelope so we could learn about their goals and struggles while offering support, guidance, and a venue for peer discussion, learning, and feedback. Our SIGs usually run for a single semester, with 12 to 15 faculty meeting three to four times. This SIG was immensely successful in that it drew over 25 participants, representing all seven of CMU’s schools/colleges, and the participants requested en masse that we continue hosting discussions—for four semesters in a row!

The success of our faculty-driven SIG gave us a sense of the key issues from the faculty perspective and an early read on how this topic could potentially draw a crowd. As with most CTL programs, we wanted to move beyond the early adopters to faculty who could become the next wave of interested adopters. Therefore, we launched the Teaching as Research Institute. Our goal for this program was to provide more structured educational support for colleagues who were new to collecting data in their courses while leveraging the resources we had already developed for the SIG audience. We also needed to think about what would interest a new audience in this work and how we could scale our support to address a broader set of research/assessment projects as well as faculty newer to this work.

Now an annual event, our TAR Institute engages a group of over 20 faculty per year in a four-day program to learn about study design, data sources, and ethical research practices. However, the institute is pitched as a chance to both do new pedagogical development—incorporate active learning into your course—and collect data to analyze and improve student learning. Participants leave this program ready to implement a new, evidence-based teaching strategy and simultaneously to collaborate on a classroom-based research project with CTL support. Faculty need not come with a research question in mind. As we engage with the pedagogical theme, we present the extant body of associated research, highlighting a menu of questions that have not yet been investigated or fully answered. Faculty then work one-on-one with consultants to select and refine a question aligned with their interests and teaching context.

To date, over 80 faculty and staff have attended a TAR Institute, jump-starting over 50 data-driven teaching projects. Some clients wished to publish from the outset. Others simply engaged to better inform their future teaching. Given that our goal is promoting data-driven teaching, publication rate is not necessarily a valid measure of impact, whereas the number of CTL clients collecting data to inform teaching is (e.g., see Table 1 and associated text). Additionally, experimental null results can be quite informative for course design but can be difficult to publish. Regardless, nine TAR projects (and counting) have led to peer-reviewed publications and/or presentations at disciplinary conferences.

The Framework in Action (Educate)

Copious research suggests that infusing active learning into traditional lectures leads to greater learning outcomes and reduces DFW rates (Freeman et al., 2014). However, questions remain about how best to implement active learning to maximize student outcomes. Does the way one debriefs an activity impact learning gains? Three information systems faculty (Mike McCarthy, Marty Barrett, and Joe Mertz) who attended our TAR Institute decided to explore this question in their Distributed Systems course, which enrolls over 100 students. Their students discuss concept-based multiple-choice questions in small groups and then vote on the correct answers. The faculty opted for a classroom research design to test whether debriefing all answer choices added value beyond debriefing the rationale for the correct answer alone (a less time-consuming approach). They did not enter the institute with this question, but the institute helped them formulate a data-driven inquiry to refine their implementation of active learning. Results showed that active learning enhanced average exam performance by 13% compared to a traditional lecture format (McCarthy et al., 2019). Furthermore, although debrief mode did not impact exam performance on recall questions, average exam performance on application questions increased more than 5% when instructors debriefed all answer choices. Consequently, following application-focused classroom activities, the instructors now debrief all answer choices rather than only the correct answer.

Facilitate

Are the Logistics of Conducting a Study a Dealbreaker for Faculty to Adopt Data-Driven Teaching?

In our experience, they most certainly are. For example, to conduct a classroom research study, a university’s IRB must review and approve a faculty member’s research plan. To the uninitiated, the IRB process can be opaque, convoluted, and time-consuming, despite the best efforts of well-meaning IRB offices. Typically, even though many faculty wish to use similar types of interventions and data sources to test their classroom research questions, they have to individually reinvent the wheel as they learn to navigate the IRB system. To lower this barrier, our CTL collaborated with colleagues in the IRB office to establish a broad protocol for classroom research focused on improving the design and delivery of specific courses. The broad protocol allows instructors-of-record to collaborate closely with our consultants to implement particular study designs and collect certain types of data within an approved protocol. As long as particular eligibility requirements are met, this piece of infrastructure eliminates the need for each faculty member interested in educational research to navigate the IRB system alone. Our approach is similar to that pioneered at other CTLs (e.g., Wright, 2008; Wright et al., 2011).

Augmenting the IRB broad protocol, our CTL’s Teaching as Research consulting service also provides tangible support for several other steps of the research process that faculty may not be comfortable with or have time to do. This service includes help with study design, background research, assessment design, and data analysis and interpretation. Consultations focus on how best to implement an intervention, including comparison groups and accounting for potential confounding factors. Background research may entail finding relevant literature to inform the study design as well as identifying and vetting appropriate assessment instruments. Assessment design may involve collaboratively workshopping an instructor’s assessments to align with learning objectives and embedding valid, reliable, direct measures of learning outcomes into coursework. After data collection, we often function as a data broker by analyzing data for faculty lacking necessary quantitative or qualitative skills or time. We also coordinate the matching of course-level data with other student data from the registrar. Instructors don’t necessarily have permission to see registrar data, and our CTL consultants are not allowed to see student identifiers in connection with student data from courses or the registrar. Thus, we developed an automated process by which we can remove identifiers from the data and yet still match data from instructors and the registrar without anybody being able to see or reconstruct the full data set with student identifiers.
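The article does not describe how this automated matching works. As one illustrative possibility only, the sketch below assumes a keyed hash (HMAC-SHA256) replaces each student identifier with a pseudonym before anyone analyzes the data, so that course and registrar records can still be joined. The field names, sample records, and function names are hypothetical, and the secret key is assumed to live inside the automated step where neither instructors nor consultants can read it.

```python
import hashlib
import hmac
import secrets


def pseudonym(student_id: str, key: bytes) -> str:
    """Return a keyed hash (HMAC-SHA256) of a normalized student identifier."""
    normalized = student_id.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()


def deidentify(rows, key, id_field="student_id"):
    """Drop the identifier from each row and attach its pseudonym as 'pid'."""
    cleaned_rows = []
    for row in rows:
        cleaned = {k: v for k, v in row.items() if k != id_field}
        cleaned["pid"] = pseudonym(row[id_field], key)
        cleaned_rows.append(cleaned)
    return cleaned_rows


def match(course_rows, registrar_rows):
    """Inner-join two de-identified tables on the shared pseudonym."""
    by_pid = {r["pid"]: r for r in registrar_rows}
    return [{**c, **by_pid[c["pid"]]} for c in course_rows if c["pid"] in by_pid]


# Hypothetical records; real data would come from the instructor and the registrar.
key = secrets.token_bytes(32)  # assumed to be held only by the automated process
course = [
    {"student_id": "abc123", "exam_score": 88},
    {"student_id": "xyz789", "exam_score": 74},
]
registrar = [
    {"student_id": "ABC123", "class_year": 2},
    {"student_id": "qrs456", "class_year": 3},
]

merged = match(deidentify(course, key), deidentify(registrar, key))
print(merged)  # one matched row, with no student_id field
```

A keyed hash is used here rather than a plain hash because identifiers drawn from a small, guessable space could otherwise be recovered by hashing every candidate value. This sketch does not address re-identification through rare combinations of attributes, which a real process would also need to consider.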

The Framework in Action (Facilitate)

Hakan Erdogmus, teaching professor in electrical and computer engineering, participated in our TAR Institute and then benefited from the above services. He devotes the majority of class time to active learning in order to engage students. He was committed to an innovation, flipping the classroom, but did not know how to optimize its implementation. After the institute, he wanted to leverage his new skills to investigate how best to support students’ pre-class learning via instructional videos. Consultations helped him identify data sources and an appropriate study design to test the effectiveness of inserting guiding questions into the instructional videos to focus student attention. We also helped him analyze and interpret the data, including registrar data, to inform his future course design choices and to prepare a paper to disseminate his findings at an international engineering conference (Erdogmus et al., 2019). Because students performed significantly better on exam questions related to videos containing guiding questions, Erdogmus now requires students to submit answers to guiding questions for all videos.

Disseminate

How Can Teaching and Classroom Research Help Build a Community of Practice Rather than Remain Solitary Endeavors?

CTLs can effectively position themselves as a clearinghouse of resources on learning and teaching as well as a nexus for fostering dialogue, community-building, and a culture of teaching on campus (Cook & Kaplan, 2011). To this end, our CTL provides a number of pathways to “close the loop” on TAR projects, disseminate findings, and create community. For instance, annually, we host a university-wide teaching and learning conference. This Teaching & Learning Summit provides a venue for teacher-researchers to share their work with one another and the broader university community. Through posters, roundtable discussions, demonstrations, and interactive presentations, instructors share both their teaching innovations and the data they have collected. Each year, roughly 200 faculty and graduate students attend, with over 40 instructors presenting their work. Although we do not have an exact count, many presentations include quantitative or qualitative data on student outcomes, which is encouraged as part of the event’s request for proposal (RFP) process.

Seeing the work of colleagues at this event has motivated other instructors to join this community of practice and take advantage of the TAR programs and services described above. For example, one instructor commented, “I had no idea my colleague was doing that, and that there was support available. If she can do it, maybe I can, too.” This is the outcome we hope for: the summit serves as a driver of interest and a mechanism connecting instructors with the multiple entry points for motivation, education, and facilitation in the MEFD framework.

Our Teaching & Learning Summit is not the only pathway for disseminating TAR findings. We opportunistically highlight rigorous faculty work at other CTL events on campus. We also feature TAR projects prominently on our CTL website (Eberly Center, 2019). Some instructors wish to disseminate beyond our university by publishing in relevant journals focused on DBER and SoTL and/or presenting at disciplinary conferences. Our TAR consulting service encourages and supports these goals. Often, faculty have no idea where to disseminate their work or the norms for doing so. We help them find appropriate venues and audiences. Additionally, our consultants guide faculty in writing up the work, provide templates and examples to follow, and provide feedback on drafts.

The Framework in Action (Disseminate)

Alexis Adams is the instructor for a section of Reading and Writing in an Academic Context, a first-year writing course. In addition to teaching written communication skills, this course seeks to develop students’ oral interactional competence, primarily via classroom discussions of assigned readings. Students come from a wide range of disciplines. Many speak English as a second language. Frustrated by the quality of student participation during discussions, Adams piloted several targeted interventions to scaffold development of interactional competence. Our CTL helped design her study, including an observation protocol to measure student engagement. During eight class sessions, a human observer recorded the number and type of rhetorical moves used by each student during a 20-minute discussion. At the 2018 Teaching & Learning Summit, she presented on her interventions and study design. Afterward, a number of attendees requested her innovative educational materials. In 2019, she will return to the summit, presenting on the data collected and lessons learned. In this way, the summit provides instructors an opportunity to get low-stakes feedback on their work while networking with instructors, helping to convert teaching from a solitary to a community endeavor. Our CTL continues to support Adams as she prepares a SoTL manuscript for publication.

Data on CTL Teaching Consultations: Is the Systems Approach Working?

After implementing the MEFD systems approach, we observed significant changes in the frequency of data-driven consultation services requested by faculty (Table 1). We define a service as a substantive consulting interaction provided by a CTL staff member to an instructor-course combination. A single service may (and frequently does) include multiple face-to-face meetings between consultant(s) and client(s). Data-driven consults explicitly address the systematic, direct measurement of student outcomes via quantitative and/or qualitative research methods. During the last five years, both the total number of course-level consultation services and the number of data-driven services increased steadily, by factors of 1.5 and 6.4, respectively. With the exception of the ProSEED Grants, established in 2014, we implemented the key elements of the MEFD framework during academic year (AY) 2016–2017, including the TAR Institute, the TAR consultation service and broad IRB protocols, and the Teaching & Learning Summit. Before versus after AY 2016–2017, the proportion of course-level services that were data-driven increased dramatically, by an average of about 20 percentage points. While the annual proportion of data-driven services has remained somewhat stable after implementing the MEFD framework, the impact on absolute numbers of faculty and courses continues to increase. Prior to AY 2016–2017, almost all data-driven consults focused on leveraging learning analytics from educational technology tools. Few, if any, clients collected other types of data on direct measures of learning outcomes. Projects resulting from the TAR Institute (educate) and faculty grant and fellowship programs (motivate) alone do not account for the observed increases, suggesting that other steps of the framework (facilitate and disseminate) may also be playing a role.

| | 2014–2015 | 2015–2016 | 2016–2017* | 2017–2018* | 2018–2019* |
|---|---|---|---|---|---|
| Total consult services | 223 | 252 | 260 | 299 | 342 |
| Total data-driven consult services | 16 (.07) | 22 (.09) | 67 (.26) | 79 (.26) | 102 (.30) |
| Unique faculty served by consult services | 150 | 174 | 179 | 231 | 260 |
| Unique faculty served by data-driven consult services | 13 (.09) | 22 (.13) | 67 (.37) | 66 (.28) | 89 (.34) |
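As a quick check on the arithmetic behind the service counts in Table 1, the following sketch recomputes the growth factors and the annual proportions of data-driven services from the first two rows of the table. The variable names are ours, and the before/after split at AY 2016–2017 follows the implementation timeline described in the text.

```python
# Counts copied from the first two rows of Table 1.
years = ["2014-2015", "2015-2016", "2016-2017", "2017-2018", "2018-2019"]
total_services = [223, 252, 260, 299, 342]
data_driven_services = [16, 22, 67, 79, 102]

# Annual proportion of services that were data-driven.
proportions = [d / t for d, t in zip(data_driven_services, total_services)]

# Five-year growth factors (last year divided by first year).
total_growth = total_services[-1] / total_services[0]
data_driven_growth = data_driven_services[-1] / data_driven_services[0]

# Mean proportion before (first two years) and after (last three years)
# the MEFD elements were implemented, and the shift in percentage points.
before = sum(proportions[:2]) / 2
after = sum(proportions[2:]) / 3
shift_points = (after - before) * 100

for year, p in zip(years, proportions):
    print(f"{year}: {p:.2f}")
print(f"Total services grew by a factor of {total_growth:.1f}")
print(f"Data-driven services grew by a factor of {data_driven_growth:.1f}")
print(f"Mean proportion shifted by {shift_points:.0f} percentage points")
```

The recomputed proportions round to the values shown in parentheses in the table, and the growth factors round to 1.5 and 6.4. The mean proportion rises from about .08 to about .27, a shift of roughly 19 percentage points.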

The data in Table 1 have obvious limitations. They are observational, and we lack data from an appropriate comparison group, such as faculty not self-selecting to request CTL services. Nevertheless, we find no other plausible explanation for the observed patterns. Consequently, we believe these data suggest our CTL’s systems approach is contributing to greater adoption of data-driven teaching and course design on our campus. The large jump observed in AY 2016–2017 may also suggest that our campus’ faculty seed grant program alone may not have been enough to move the needle on faculty adoption of data-driven teaching.

Conclusions and Lessons Learned

Through our work over the past several years, we have experimented with a variety of strategies for implementing each step in the MEFD framework. The benefit of a cyclical framework is that one can refine, add, and switch strategies over time. Furthermore, one strategy need not be a linchpin to success because each strategy can complement and reinforce the others. Although our experiments and refinements were developed within the constraints of our own institutional context, we believe the following lessons learned may generalize to other institutions:

- For institutions that already have a funding program for educational innovations, consider adding selection criteria that emphasize data collection and data-informed assessment/improvement; then provide support for faculty to execute on their data-collection plans.

- Explore incentive structures and rewards beyond financial remuneration to promote data-informed improvement, such as:

  - provide personnel support, instead of grant money, to help faculty collect and analyze data;

  - create awards to recognize the use of data to improve teaching and learning; and

  - highlight data-driven teaching projects through programs, websites, and other forms of publicity.

- Learn from early adopters about what worked for them and where they struggled. Build that information into professional development programs and services for faculty.

- Find university partners who are best positioned to lower barriers to data collection and use (e.g., CTLs, IRB office, registrar’s office).

- Cultivate peer networks of faculty who can talk about their work in this area and help make the case for its value, inspiring colleagues to try something similar.

- Provide multiple venues and supports to help faculty disseminate their innovations and data-driven practices, both across campus and beyond.

Now that we have a foundation of strategies to build upon, we see more clearly where we need to focus our future efforts as we continue to strive toward making data-informed teaching part of our institution’s standard practice. First, we are focusing more on publicizing the opportunities and our support for collecting and using data to inform teaching. The goal is to move our work well beyond the early adopters. Beyond raising awareness, we will need to maintain and scale our efforts so that more faculty can engage in this work without experiencing extra burden. We are currently laying the groundwork for this by standardizing our procedures in several ways so that our same-sized staff can support a larger volume of TAR clients. For example, now that we have seen recurring patterns in analyses and dissemination, we are developing routines and even templates for that work. Simultaneously, we are compiling a set of validated instruments for commonly asked-about constructs (e.g., students’ sense of belonging, intercultural competence) and have prepared resources and tools for administering them more efficiently.

Finally, we recognize that our previous support for TAR was somewhat separated from our CTL’s other main activities: teaching consultations and technology-enhanced learning development. In fact, we discovered that some of our CTL staff perceived our TAR services as independent of other types of consultation services. However, there are natural tie-ins for leveraging learning data across all our efforts. Just as we found benefit in weaving data-informed assessment into our Wimmer Faculty Fellows program, we are working to make data-collection and direct assessment of outcomes expected steps in our other signature consultation services. In part, we are doing this through monthly professional development activities, challenging staff to analyze consultation case studies and recognize opportunities for data-driven practice and collaboration across teaching, technology, and assessment consultants.

Although the particular strategies and contexts may change over time, we will continue to employ the MEFD framework to constructively infuse formative, data-driven practice as an essential element of all that we do. We hope leaders of CTLs will find this framework helpful for strategic planning in two ways. First, this framework can help CTLs intentionally coordinate and integrate the delivery of complementary, yet seemingly independent, programs and services toward a shared goal. Second, this framework provides a useful lens for identifying and prioritizing new opportunities to promote data-driven teaching.

Appendix

Rubric for evaluating faculty teaching innovation grant proposals. Data-driven teaching is highlighted via the criteria (rows) for “collect and analyze data on student learning outcomes” and “leverage results from previous learning research.”

| REQUIRED CRITERIA | Not Recommended (1) | Room for Improvement (3) | Highly Recommended (5) |
|---|---|---|---|
| Deploy technology-enhanced learning in some form | Not a fit: There is no description of technology use in the innovative educational practice to be studied. | Technology-enhanced learning is mentioned, but the educational intervention (including how technology will be applied) is not very innovative and/or may not be effective to promote learning & engagement. | The technology involved may be either a "standard" tool that is applied in a novel way to promote student learning and engagement OR a novel tool that is being developed to promote student learning and engagement. The educational technology and associated intervention is described well enough to evaluate its potential impacts. |
| Collect and analyze data on student learning outcomes | Not a fit: There are no direct measures of student outcomes (learning, engagement, attitudes), or the proposed data and study design are insufficient to study the impacts of the proposed intervention. | Direct measures are mentioned but they are not well aligned with research question, or more effective measures of learning/engagement are possible but not discussed. Data and/or study design would only enable weak inferences about impacts of the intervention or learning mechanisms. | Data to be collected includes direct measures of student outcomes (learning, engagement, attitudes), and a case is made (e.g., based on past work) that these measures are valid and reliable for the current context. Study design enables meaningful inferences to be drawn from the data collected. |
| Articulate a sustainability plan | Future sustainability of the proposed work is not addressed, either in terms of opportunities for future external funding or future adoption/expansion of the innovative educational practice within the course (e.g., course is not likely to be taught in this way in the | There is limited potential for extramural funding or PIs are unlikely to seek it. OR Intervention has limited potential or likelihood of re-use/expansion in future course offerings (e.g., would only be re-used or expanded upon by PIs or select instructors). | Opportunities for external funding are mentioned (ideally with specific sources and CFPs) and seem to fit the proposed work. OR Intervention is likely to be re-used and/or expanded upon in future iterations of the course, even when instructor changes. |
| DESIRABLE CRITERIA | Not Recommended (1) | Room for Improvement (3) | Highly Recommended (5) |
| Describe meaningful contributions to learning research or educational practice | Project's potential for impact on learning is modest or negligible; intervention is not transferable to other contexts, or this issue is not addressed. Scope of project affects small number or range of students, even considering relative size of courses in target discipline. | Project has some potential for transformative impact on students' learning; intervention is somewhat transferable but is likely limited (within discipline or course type). Scope of project affects a moderate number or range of students. (Note: some disciplines have few or no large courses, so scope is relative.) | Project has potential for significant, transformative impact on students' learning; intervention is transferable across courses/disciplines/other teaching & learning contexts. Project addresses a significant felt need, or the scope of project affects a good number or range of students. (Note: some disciplines have few or no large courses, so scope is relative.) |
| Leverage results from previous learning research | No reference to prior relevant research. Proposed intervention is based more on anecdote and intuition than evidence. | Reference to some prior research is made in the proposal but may not be appropriate or compelling. | Intervention is well grounded by previous research. Proposed work promises to fill a gap in the education literature. |

Biographies

Marsha Lovett (Carnegie Mellon University) studies how learning works (mostly in college-level courses) and then finds ways to improve it. She has done this in several disciplines, including physics, matrix algebra, programming, statistics, and engineering. She uses various methodologies in her work, including computational modeling, protocol analysis, laboratory experiments, and classroom studies. As a result, she has developed several innovative educational technologies to promote student learning and metacognition, including StatTutor (an intelligent tutoring system for statistics) and the Learning Dashboard (a learning analytics system that promotes adaptive teaching and learning in online instruction).

Besides her appointment in the Department of Psychology, she is Director of the Eberly Center for Teaching Excellence & Educational Innovation. At the Eberly Center, she applies theoretical and empirical principles from cognitive psychology to help CMU faculty improve their teaching. Combining her research and Eberly Center work, she co-authored the book How Learning Works: 7 Research-Based Principles for Smart Teaching, which distills the research on how students learn into a set of fundamental principles that instructors can use to guide their teaching.

Chad Hershock (Carnegie Mellon University) is the Director of Faculty & Graduate Student Programs at the Eberly Center for Teaching Excellence & Educational Innovation. Chad leads a team of teaching consultants and data science research associates who support instructors through data-driven consultation services and evidence-based educational development programming. Together, they collect and leverage data on student outcomes to help instructors iteratively refine their teaching and course design. Chad's training includes a PhD and MS from the University of Michigan and a BS from the Pennsylvania State University, all in biology. From 2005 to 2013, he served as an Assistant Director and Coordinator of Science, Health Science, and Instructional Technology Initiatives at the University of Michigan’s Center for Research on Learning and Teaching. Chad has been teaching courses on biology or STEM education for undergraduates, graduate students, and/or post-docs since 1994. He joined the Eberly Center in 2013.

References

- Arum, R., & Roksa, J. (2011). Academically adrift: Limited learning on college campuses. University of Chicago Press.

- Astin, A. W. (2011, February 14). In “academically adrift,” data don’t back up sweeping claim. The Chronicle of Higher Education. https://www.chronicle.com/article/Academically-Adrift-a/126371

- Beach, A. L., Sorcinelli, M. D., Austin, A. E., & Rivard, J. K. (2016). Faculty development in the age of evidence: Current practices, future imperatives. Stylus Publishing.

- Bishop-Clark, C., & Dietz-Uhler, B. (2012). Engaging in the scholarship of teaching and learning. Stylus Publishing.

- Boyer Commission. (1998). Reinventing undergraduate education: A blueprint for America’s research universities. Stony Brook, NY: Boyer Commission on Educating Undergraduates in the Research University. https://files.eric.ed.gov/fulltext/ED424840.pdf

- Chick, N. L. (Ed.). (2018). SoTL in action: Illuminating critical moments of practice. Stylus Publishing.

- Christian, S. J., Hershock, C., & Melville, M. C. (2019, June). Guided inquiry-based lab activities improve students’ recall and application of material properties compared with structured inquiry. Paper presented at 2019 ASEE Annual Conference & Exposition, Tampa, Florida. https://peer.asee.org/32881

- Cook, C. E., & Kaplan, M. (Eds.). (2011). Advancing the culture of teaching on campus: How a teaching center can make a difference. Stylus Publishing.

- Cook, C. E., Meizlish, D. S., & Wright, M. C. (2011). The role of a teaching center in curricular reform and assessment. In C. E. Cook & M. Kaplan (Eds.), Advancing the culture of teaching on campus: How a teaching center can make a difference (pp. 121–136). Stylus Publishing.

- Eberly Center. (2019). Teaching as research: Discovering what works for student learning. https://www.cmu.edu/teaching/teaching-as-research/

- Erdogmus, H., Gadgil, S., & Peraire, C. (2019). Introducing low-stakes just-in-time assessments to a flipped software engineering course. Paper presented at the 2019 Hawaii International Conference on System Sciences (HICSS), Honolulu, Hawaii. https://doi.org/10.24251/HICSS.2019.920

- Fanghanel, J. (2013). Going public with pedagogical inquiries: SoTL as a methodology for faculty professional development. Teaching & Learning Inquiry, 1(1), 59–70.

- Finelli, C. J., Ott, M., Gottfried, A. C., Hershock, C., O’Neal, C., & Kaplan, M. (2008). Utilizing instructional consultations to enhance the teaching performance of engineering faculty. Journal of Engineering Education, 97(4), 397–411.

- Finelli, C. J., Pinder-Grover, T., & Wright, M. C. (2011). Consultations on teaching: Using student feedback for instructional improvement. In C. E. Cook & M. Kaplan (Eds.), Advancing the culture of teaching on campus: How a teaching center can make a difference (pp. 65–79). Stylus Publishing.

- Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. PNAS, 111(23), 8410–8415.

- Haras, C., Taylor, S. C., Sorcinelli, M. D., & von Hoene, L. (Eds.). (2017). Institutional commitment to teaching excellence: Assessing the impacts and outcomes of faculty development. American Council on Education.

- Hines, S. R. (2015). Setting the groundwork for quality faculty development evaluation: A five-step approach. The Journal of Faculty Development, 29(1), 5–12.

- Kreber, C. (2013). The transformative potential of the scholarship of teaching. Teaching & Learning Inquiry, 1(1), 5–18.

- Kuh, G. D., & Ikenberry, S. O. (2018, October). NILOA at ten: A retrospective. Urbana, IL: University of Illinois and Indiana University, National Institute for Learning Outcomes Assessment (NILOA). https://www.learningoutcomesassessment.org/wp-content/uploads/2019/02/NILOA_at_Ten.pdf

- McCarthy, M. J., Mertz, J., Barrett, M. L., & Melville, M. (2019, February). Debriefing lab content using active learning. Poster presented at 2019 ACM Technical Symposium on Computer Science Education, Minneapolis, Minnesota. https://doi.org/10.1145/3287324.3293843

- Miller, J. E., & Seldin, P. (2014). Changing practices in faculty evaluation. Academe, 100(3). https://www.aaup.org/article/changing-practices-faculty-evaluation#.W9QA_C-ZPMU

- Miller-Young, J. E., Anderson, C., Kiceniuk, D., Mooney, J., Riddell, J., Hanbidge, A. S., Ward, V., Wideman, M. A., & Chick, N. (2017). Leading up in the scholarship of teaching and learning. The Canadian Journal for the Scholarship of Teaching and Learning, 8(2). https://doi.org/10.5206/cjsotl-rcacea.2017.2.4

- Pedaste, M., Mäeots, M., Siiman, L. A., de Jong, T., van Riesen, S. A. N., Kamp, E. T., Manoli, C. C., Zacharia, Z. C., & Tsourlidaki, E. (2015). Phases of inquiry-based learning: Definitions and the inquiry cycle. Educational Research Review, 14, 47–61. https://doi.org/10.1016/j.edurev.2015.02.003

- POD Network. (2018a). Defining what matters: Guidelines for comprehensive center for teaching and learning (CTL) evaluation. https://podnetwork.org/content/uploads/POD_CTL_Evaluation_Guidelines__2018_.pdf

- POD Network. (2018b). 2018–2023 strategic plan. https://podnetwork.org/content/uploads/POD_Strategic-Plan_2018-23.pdf

- Rogers, E. M. (2003). Diffusion of innovations (5th ed.). Free Press.

- Tafoya, E., Sunal, D. W., & Knecht, P. (1980). Assessing inquiry potential: A tool for curriculum decision makers. School Science and Mathematics, 80(1), 43–48.

- Trigwell, K. (2013). Evidence of the impact of scholarship of teaching and learning purposes. Teaching & Learning Inquiry, 1(1), 95–105.

- Wright, M. (2008, October). Encouraging the scholarship of teaching and learning: Helping instructors navigate IRB and FERPA. Organization of Professional Organizers and Developers.

- Wright, M. C., Finelli, C. J., Meizlish, D., & Bergom, I. (2011). Facilitating the scholarship of teaching and learning at a research university. Change: The Magazine of Higher Learning, 43(2), 50–56.