An Overview of the System Usability Scale in Library Website and System Usability Testing

This work is licensed under a Creative Commons Attribution 3.0 License. Please contact mpub-help@umich.edu to use this work in a way not covered by the license.

This paper was refereed by Weave's peer reviewers.

Abstract

The System Usability Scale, or SUS, was created in 1986 by John Brooke and has been used extensively by a variety of industries to test numerous applications and systems. The SUS is a technology-agnostic tool consisting of ten questions, each with five responses ranging from “strongly agree” to “strongly disagree.” Incorporating the SUS into library website and system usability testing may offer several benefits worth considering; however, it also has some specific limitations and implementation challenges. This article summarizes examples in library and general literature of how the SUS has been incorporated into usability testing for websites, discovery tools, medical technologies, and print materials. Benefits and challenges of the SUS, best practices for incorporating the SUS into usability testing, and grading complexities are also examined. Possible alternatives and supplementary tools, such as single-item scales or other evaluative frameworks like the UMUX, UMUX-LITE, or SUPR-Q, are also briefly discussed.

Introduction

The System Usability Scale, or SUS, was created in 1986 by John Brooke as a “quick and dirty” way to measure the usability of products (Usability.gov, n.d.). It has been used extensively by various industries to test numerous systems and applications, including “hardware, software, mobile devices, websites and applications” (Usability.gov, n.d.). The SUS is widely referred to as an “industry standard” in business and technology, although Brooke has noted that “it has never been through any formal standardization process” (2013, p. 29).

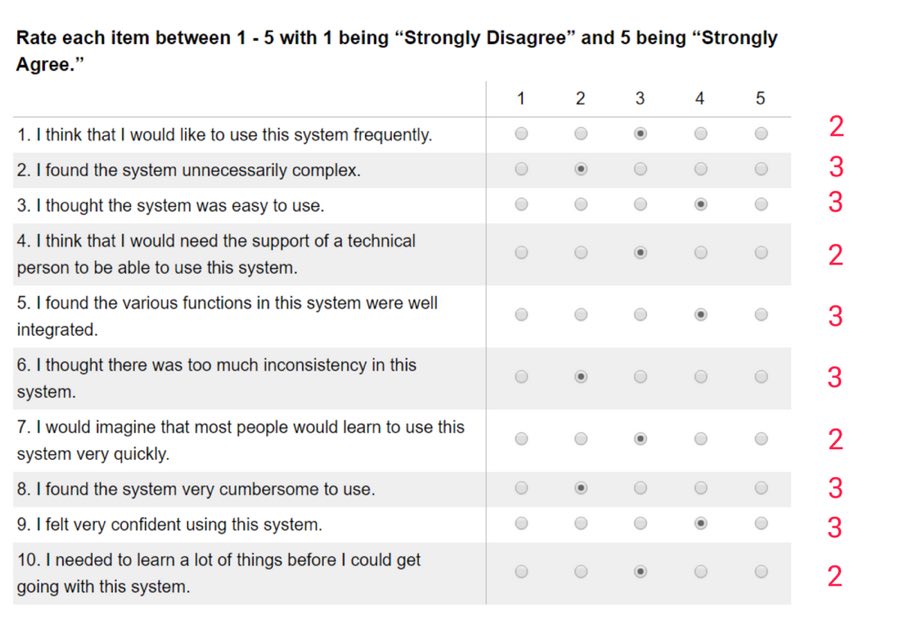

The SUS is “a simple, ten-item scale giving a global view of subjective assessments of usability” (Brooke, 1996, p. 6). Each question has five possible responses, ranging from “strongly disagree” (1) to “strongly agree” (5). SUS scores range from 0 to 100, and 68 is considered the average score (Sauro, 2011c). Scores are affected by the complexity of both the system and the tasks users perform before taking the SUS (Condit Fagan, Mandernach, Nelson, Paulo, & Saunders, 2012, p. 90).

The ten items on the scale are as follows:

1. I think that I would like to use this system frequently.
2. I found the system unnecessarily complex.
3. I thought the system was easy to use.
4. I think that I would need the support of a technical person to be able to use this system.
5. I found the various functions in this system were well integrated.
6. I thought there was too much inconsistency in this system.
7. I would imagine that most people would learn to use this system very quickly.
8. I found the system very cumbersome to use.
9. I felt very confident using the system.
10. I needed to learn a lot of things before I could get going with this system.

There are several potential benefits of incorporating the System Usability Scale into library website and system usability testing. The SUS has been in use for approximately 30 years and is a reliable, well-tested tool for evaluating a wide range of products and systems. It is also customizable and easily administered via simple survey tools like SurveyMonkey or more advanced survey distribution tools like Qualtrics. The data it provides has a variety of uses. It can serve as a benchmark to measure how users receive changes to a system or product. It can also quantify user reactions to two alternatives, such as two versions of the same web page or two different user interfaces, so that they can be compared for decision-making purposes. When used in conjunction with data gathered from other sources, such as task-based usability testing, it can be a helpful supplemental tool for researchers who need a source of big-picture quantitative data that can be easily communicated to library administration and stakeholders.

The SUS does have some specific limitations and implementation challenges. Depending on the website or system being tested, SUS data may have very limited value on its own. Grading the SUS is also a complex process that can lead to scoring errors if not executed properly, and calculated scores must be normalized to produce a percentile ranking (Usability.gov, n.d.). Another possible issue is that the SUS alternates between positive and negative statements, which may confuse participants as they complete the scale. Odd-numbered statements are positively worded, so a response of “5” is the most favorable, while even-numbered statements are negatively worded, so a response of “1” is the most favorable. Libraries that want the kind of data the SUS can provide but find its shortcomings prohibitive may wish to look into other options as alternatives or supplementary tools, such as single-item scales or other evaluative frameworks like the UMUX, UMUX-LITE, or SUPR-Q, which are also discussed briefly in this article.[1]

SUS in the Literature

In Libraries

The SUS has been referenced in more than 1,300 articles and publications (Usability.gov, n.d.), including many library and information science publications detailing its use in testing library systems and applications such as websites and discovery tools. The most typical cases noted in library and general literature are usability tests in which participants complete the SUS after finishing a series of tasks using a discovery tool, such as Primo, EDS, or Encore. The literature also includes studies in which the SUS is used to test other systems, such as scholarly repositories. Most tests noted in library and general literature use the SUS alongside other usability methods to gather data, answer research questions, and identify general pain points that users encounter when interacting with a website or system.

Perrin, Clark, De-Leon, and Edgar (2014) incorporated the SUS into usability testing of the Primo discovery tool at Texas Tech University Libraries. Participants were given seven tasks to complete, including finding a book and finding a thesis, followed by the SUS. The data gathered using the SUS helped researchers conclude “that overall, the implementation of OneSearch was successful, at least in terms of how students perceived it after using it,” while additional data obtained during task completion, such as task time, error rate, and success rate, helped to “identify specific problems with the interface” (Perrin et al., 2014, p. 59).

Condit Fagan, Mandernach, Nelson, Paulo, and Saunders (2012) incorporated the SUS into a usability evaluation of the EBSCO Discovery Service at James Madison University Libraries. The SUS was administered to participants after a series of “nine tasks designed to showcase usability issues, show the researchers how users behaved in the system, and measure user satisfaction” and was followed by a “post-test consisting of six open-ended questions, plus one additional question for faculty participants, intended to gather more qualitative feedback about user satisfaction with the system” (Condit Fagan et al., 2012, p. 90). Post-test questions revealed that some participants found EDS “overwhelming” or “confusing,” and the SUS data supported the data gathered during the post-test process (Condit Fagan et al., 2012, p. 98). The SUS was also used as a benchmarking tool to measure usability over time and to note improvements between testing done in spring 2010 and testing done in fall 2010.

Johnson (2013) also incorporated the SUS into usability testing to compare the Encore discovery tool with a tabbed layout on the library website at Appalachian State Libraries. Participants were asked to complete four tasks on the website using the tabbed layout and using an Encore search box in place of the tabbed layout. Tasks included locating a known book, finding journal articles related to certain topics, and searching for materials on a topic they had recently written about. Participants were also asked follow-up questions during or after each task. After completing the tasks on each interface, users were asked to complete the SUS. The average SUS score for the website design with the Encore search box was 71.5, versus 68 for the original tabbed layout. The author noted the SUS scores were “a way to benchmark how ‘usable’ the participants rated the two interfaces” (Johnson, 2013, p. 65).

In an atypical case in the literature, Zhang, Maron, and Charles (2013) document usability testing at Purdue University Libraries for a research repository and collaboration website known as the Human-Animal Bond Research Initiative (HABRI) Central Research Repository. The purpose of testing was to have participants engage in various tasks using the repository, such as “finding an article in the repository, submitting an article to the repository, adding bibliographic information of an article in the repository, and using interaction features such as user groups” (Zhang et al., 2013, p. 58). After completing the assigned tasks, participants answered a series of open-ended questions and completed the System Usability Scale to rate their experience using the system. SUS scores were used in conjunction with five other response measures, including “successfulness of each task; whether participants needed help from the researcher during each task; number of steps of the navigational path participants went through in each task; time to complete each task; participants’ comments during each task,” in order to gauge overall attitude and experience regarding usage of the tool (Zhang et al., 2013, p. 71). Although researchers were able to identify some usability problems through participant observation, such as “HABRI Central’s article submission workflow, user input design, and positions of certain interface elements,” the SUS data indicated that participants rated the repository highly overall (Zhang et al., 2013, p. 72).

Beyond Libraries

There are also numerous studies documented in broader literature that incorporate the SUS for testing a variety of products and tools, including websites, medical technologies, decision aids, and print materials. A few representative examples from the fields of education and medicine are summarized below.

Tsopra, Jais, Venot, and Duclos (2014) used the SUS when testing clinical decision support system interfaces. The purpose of the study was to have general practitioners interact with two interfaces in order to compare an interface design that focuses on decision processes with one that focuses on usability principles. The researchers assessed SUS scores, the number of correct responses for ten clinical cases, and the confidence level (measured with a 4-point Likert scale) for each interface, and determined that the “interface designed according to usability principles was more usable and inspired more confidence among clinicians” (Tsopra et al., 2014, p. e107). The authors state that a separate analysis of responses to the individual SUS questions found that 91.5 percent of participants did not feel they would need technical assistance to use the design focusing on usability principles, versus 77.1 percent of participants using the design focusing on decision processes (Tsopra et al., 2014).

Li et al. (2013) document usability testing for a web-based patient decision tool for rheumatoid arthritis patients referred to as ANSWER (Animated, Self-serve, Web-based Research tool). The purpose of the study was to “assess the usability of the ANSWER prototype” and “identify strengths and limitations of the ANSWER from the patient’s perspective” (Li et al., 2013, p. 131). A group of fifteen participants were asked to use ANSWER and “verbalize their thoughts” during the process (Li et al., 2013, p. 131). In addition to observing participants and taking field notes, the researchers audiotaped the testing. The authors indicate ANSWER scored well on the SUS both in earlier testing (81.25) and after changes were implemented (81), indicating that ANSWER “had met the standard of a user-friendly program” (Li et al., 2013, p. 138). However, data gathered from the “think-aloud sessions illustrated ideas that required further refinement,” supporting “the use of the formative evaluation along with the summative evaluation in usability testing” (Li et al., 2013, p. 138).

Grudniewicz, Bhattacharyya, McKibbon, and Straus (2015) document the process of updating printed education materials and testing their usability via methods including the SUS. These materials were redesigned “using physician preferences, design principles, and graphic designer support,” and physicians were asked to select whether they preferred the redesigned version of the document or the original (Grudniewicz et al., 2015, p. 156). This process was followed by “an assessment of usability with the System Usability Scale and a think aloud process” (Grudniewicz et al., 2015, p. 156). The purpose of the study was to determine whether the redesigned materials “better meet the needs of primary care physicians and whether usability and selection are increased when design principles and user preferences are used” (Grudniewicz et al., 2015, p. 157). Ease of use was measured using the System Usability Scale, and scores for the redesigned materials were significantly higher. The wording of the SUS was adjusted slightly in this case to reflect that print materials were being tested rather than a system (Grudniewicz et al., 2015).

Benefits of Using the System Usability Scale (SUS)

SUS is a Reliable, Tested Tool for Gathering Quantitative Data

There are many valid reasons to consider incorporating the System Usability Scale (SUS) into library website and system testing. Since the SUS has been available for approximately 30 years and “a considerable amount of research has indicated that the SUS has excellent reliability,” it can be used with confidence on both large and small sample sizes (Lewis, Utesch, & Maher, 2015, p. 497). Test participants can complete the scale quickly, making it an easy way to gather quantitative data whether used on its own or in conjunction with other quantitative and qualitative measures (Usability.gov, n.d.). As indicated in library and broader literature, SUS data can help provide a more complete picture of the attitudes toward a website or system being tested when used in conjunction with usability test measures such as timed tasks and the number of tasks successfully completed. Together, the results of these measures provide researchers with a clearer understanding of the specific pain points users have identified in a system.

The SUS is also free, easy to set up and administer to participants online or in print, and “technology agnostic” (Usability.gov, n.d.). Because of this, researchers can continue to incorporate the SUS as technologies change and develop without having to worry about updating or recreating questionnaires (Brooke, 2013). Another benefit of using a technology agnostic tool like the SUS is that “scores can be compared regardless of the technology,” and the same set of scores can be used by an organization to benchmark both standard websites and other tools such as catalogs and discovery layers (Sauro, 2015, p. 69).

SUS Results are Easy to Share and Understand

In an analysis of the System Usability Scale, Bangor, Kortum, and Miller note “the survey provides a single score on a scale that is easily understood by a wide range of people (from project managers to computer programmers)” (2008, p. 574). Kortum and Bangor note that subjective usability scores gathered through administering questionnaires like the SUS “provide a common language” for a number of groups, from web designers to managers, “to describe and discuss the usability attributes of a system” (2013, p. 68). Measures from other kinds of tests, such as the average amount of time to complete a task, for example, may have little or no meaning to groups like developers “without specific, detailed knowledge of that task and other comparable tasks” (Kortum & Bangor, 2013, p. 68). The simplicity of SUS scores may make sharing data with an organization’s administration and stakeholders far less complicated and time consuming than synthesizing and communicating the importance and meaning of other types of data gathered throughout the usability testing process.

Tracking SUS scores on the same system over time is also a simple way to show stakeholders whether the system’s perceived usability has improved or declined. SUS data showing that scores have improved over time may help researchers justify to administrators the time, effort, and resources spent on usability testing. As previously noted, Condit Fagan et al. (2012) used the SUS to “benchmark for system improvement” when testing the EBSCO Discovery Service across semesters (p. 102). Johnson (2013) also notes the SUS was used to “benchmark our students’ initial reaction” to an external Encore discovery tool versus a tabbed layout on the library website (p. 65). If SUS scores on a particular system have declined over time, researchers may be able to use this data to justify the need for more usability testing resources in order to make necessary improvements.
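
A benchmark comparison of this kind can be as simple as comparing mean SUS scores between two rounds of testing. The sketch below is illustrative only: the helper name `compare_rounds` and the score lists are hypothetical and are not data from any study cited here. With small samples, a difference in means may not be meaningful on its own, so a confidence interval or significance test would be worth adding before drawing conclusions.

```python
from statistics import mean


def compare_rounds(baseline, followup):
    """Return (baseline mean, follow-up mean, change) for two lists of final SUS scores.

    Hypothetical helper: each list holds one 0-100 SUS score per participant.
    """
    before = mean(baseline)
    after = mean(followup)
    return before, after, after - before


# Illustrative scores only; not results from any cited study.
round_1 = [62.5, 70.0, 55.0, 77.5]
round_2 = [72.5, 80.0, 67.5, 75.0]

before, after, change = compare_rounds(round_1, round_2)
print(f"Round 1 mean: {before:.2f}, round 2 mean: {after:.2f}, change: {change:+.2f}")
```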

When comparing the usability and functionality of two or more products, the SUS also provides a straightforward way to communicate to stakeholders how well a product performed compared to a similar product or products. As noted by Johnson (2013), having participants complete the SUS after completing tasks on an existing tabbed layout and on an interface which incorporated the Encore discovery tool indicated the website design with the Encore search box was the preferred choice.

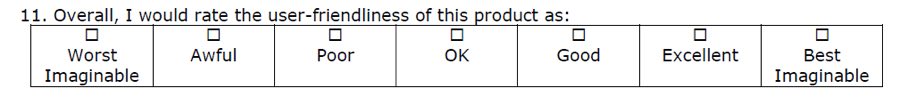

SUS is Customizable

Research indicates that minor modifications can be made to the System Usability Scale without changing the results. Bangor, Miller, and Kortum (2009) conducted testing in which an adjective rating scale was added to the SUS as an eleventh question and administered to 964 participants. The purpose of this addition was to address the question, “what is the absolute usability associated with any individual score?” (Bangor et al., 2009, p. 117). The question asked participants to rate the user-friendliness of a product or website as worst imaginable, awful, poor, OK, good, excellent, or best imaginable. Findings indicated that the adjective ratings closely matched SUS scores and that the additional scale may be useful for providing a “subjective label for an individual study’s mean SUS score” (Bangor et al., 2009, p. 119).

The SUS is also “insensitive to minor changes in its wording,” therefore it can be edited to reflect the type of system or material being tested (Lewis et al., 2015, p. 497). For example, the word “system” which is used in all ten items of the SUS can be changed to “website.” Minor wording changes can also be made to reflect that print materials are being tested rather than a system. As previously noted, Grudniewicz et al. (2015) made minor wording adjustments to reflect that printed education materials were being tested rather than a computer system.
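
When a questionnaire is built in a script or survey export rather than typed by hand, the wording change described above can be applied mechanically. This is a minimal sketch; the names `SUS_ITEMS` and `adapt_items` are hypothetical. It uses plain substring replacement, which works here because “system” only appears as a whole word in the ten items, but the adapted wording should still be proofread before use.

```python
# The ten standard SUS items, in order (item 1 first).
SUS_ITEMS = [
    "I think that I would like to use this system frequently.",
    "I found the system unnecessarily complex.",
    "I thought the system was easy to use.",
    "I think that I would need the support of a technical person to be able to use this system.",
    "I found the various functions in this system were well integrated.",
    "I thought there was too much inconsistency in this system.",
    "I would imagine that most people would learn to use this system very quickly.",
    "I found the system very cumbersome to use.",
    "I felt very confident using the system.",
    "I needed to learn a lot of things before I could get going with this system.",
]


def adapt_items(items, replacements):
    """Return new item texts with each key in `replacements` swapped for its value.

    Hypothetical helper using plain substring replacement.
    """
    adapted = []
    for item in items:
        for old, new in replacements.items():
            item = item.replace(old, new)
        adapted.append(item)
    return adapted


website_items = adapt_items(SUS_ITEMS, {"system": "website"})
for number, text in enumerate(website_items, start=1):
    print(f"{number}. {text}")
```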

Challenges of Using the System Usability Scale

SUS May Have Limited Value When Used On Its Own

A benefit of the SUS is that it is a “technology agnostic” tool that can be used to rate a variety of products; however, this trait may become a liability when testing library websites and systems because “the disadvantage of a technology agnostic instrument is that it can omit important information that is specific to an interface type” (Sauro, 2015, p. 69).

Using the SUS on its own will provide a source of quantitative data for researchers, but it may be difficult to understand why users gave a website or system a low or high score without additional qualitative and quantitative measures (Sauro, 2011c). SUS scores indicate how usable a website or system is; however, “they won’t tell you much about what’s unusable or what to fix,” nor do they show which facets of the system are functioning well (Sauro, 2011c). It may be necessary to gather more information on why participants like or dislike the product in order to make informed decisions. For example, item 2 of the SUS is “I found the system unnecessarily complex.” If a participant responds “strongly agree” to this item, the response is useful to researchers because it identifies a possible issue with the system. The researchers will not know, however, which specific elements of the system caused the user to struggle. Open-ended questions added to the end of the SUS may help identify specific issues with the system that need to be addressed. However, a better solution might be to use a more diagnostic instrument in addition to, or instead of, the SUS, such as the UMUX, UMUX-LITE, or SUPR-Q, if more detailed information on specific problems is desired (Finstad, 2010b; Sauro, 2015). The questions on the SUPR-Q, for example, address website-specific issues such as navigation and look and feel (Sauro, 2015).

There is one specific situation in which administering the SUS alone may be beneficial: when evaluating commercial systems that the library has no ability to customize. In such situations, locating the exact source of problems may be less important than determining whether such problems exist and rating their relative severity. The SUS could, for example, potentially be helpful in evaluating the user interface of commercial library databases and assisting in purchasing decisions.

SUS Structure May Be Confusing and Frustrating to Test Participants

Another disadvantage of administering the SUS is that the scale structure may confuse and frustrate test participants. A response of “5” is the most favorable for odd-numbered questions on the SUS, while a response of “1” is the most favorable for even-numbered questions. According to Sauro, the purpose of this pattern is to “reduce acquiescent bias (users agreeing to many items) and extreme response bias” (Sauro, 2011c). “Although it is a standard psychometric practice to vary item tone, this variation can have negative consequences” (Lewis et al., 2015, p. 497), as participants may be used to surveys in which the highest number on the scale is always the best score and the lowest number is always the worst. Sauro also notes that a test participant who has strong feelings for or against a website or system may go through all ten SUS questions and “forget to reverse their answers and therefore respond incorrectly,” selecting all “1s” to indicate a weak product or all “5s” to indicate a strong product (Sauro, 2011c).
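
One way to catch the response pattern Sauro describes is to flag any questionnaire in which all ten answers are identical and non-neutral. Because odd items are positive and even items are negative, answering every item with the same non-neutral value means agreeing (or disagreeing) with both kinds of statements, which is contradictory. This is a heuristic of our own, not a published rule, and the function name `flags_straight_lining` is hypothetical; flagged responses are candidates for follow-up, not automatic exclusion.

```python
def flags_straight_lining(responses):
    """Return True if all ten SUS responses share one non-neutral value (e.g., all 1s or all 5s).

    Hypothetical heuristic: identical non-neutral answers agree (or disagree) with
    both the positively worded odd items and the negatively worded even items.
    An all-3s ("neither agree nor disagree") set is not contradictory, so it is not flagged.
    """
    if len(responses) != 10:
        raise ValueError("expected ten SUS responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    return len(set(responses)) == 1 and responses[0] != 3


print(flags_straight_lining([5] * 10))                         # all "strongly agree"
print(flags_straight_lining([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))   # consistently positive, correctly reversed
```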

Research by Finstad (2006) also noted the wording of the SUS may pose issues for some non-native English speakers. This can be problematic if participants do not feel comfortable asking researchers for question clarification in a supervised testing environment, or do not have the option to ask for clarification in an unsupervised testing environment (Finstad, 2006). One issue noted was lack of familiarity with the word “cumbersome,” which is used in item 8, “I found the system very cumbersome to use.” Finstad suggests that changing “cumbersome” to “awkward” may help ease confusion in such cases (Finstad, 2006, p. 186). Another issue detected by Finstad was with questions seven, eight, and nine and the use of the word “very” to indicate that participants felt the system could be learned “very quickly,” the system was “very cumbersome” to use, and whether or not they felt “very confident” using the system (2006, p. 186). A testing participant noted concern that agreeing to question nine “would automatically categorize him as ‘very confident,’ when he was more comfortable agreeing he was simply ‘confident’” (Finstad, 2006, p. 186).

Participants may also experience frustration with the 5-point Likert items in the SUS if they feel the available options, which include strongly disagree, disagree, neither agree nor disagree, agree, and strongly agree, don’t accurately capture their attitudes and feelings about the website or system being tested. Additional research by Finstad (2010a) indicates that a 7-point Likert scale with the options entirely disagree, mostly disagree, somewhat disagree, neither agree nor disagree, somewhat agree, mostly agree, and entirely agree, may be a better option because it “is more likely to reflect a respondent’s true subjective evaluation of a usability questionnaire item than a 5-point item scale” (p. 108).

Best Practices for Incorporating the SUS into Usability Testing

Administering the SUS to Users

Typical library website or system testing often involves a series of tasks during which researchers observe participants and gather specific data, such as the number of tasks completed, task completion time, and comments made by participants while completing tasks. When incorporating a standardized questionnaire such as the SUS, along with tasks and other measures, into usability testing, researchers “should carefully consider their administration for the proper management of satisfaction outcomes” (Borsci, Federici, Bacci, Gnaldi, & Bartolucci, 2015, p. 493). Administering questionnaires at the proper point in testing and providing clear, concise instructions to participants are essential for accurately measuring user satisfaction (Borsci et al., 2015). Because the SUS alternates between positive and negative statements, researchers should also consider “reminding users upfront, for instance, of the alternative nature of the SUS statements for rating products properly” (McLellan, Muddimer, & Peres, 2012, p. 63).

Ideally, the SUS or any standardized questionnaire is administered to participants as soon as they have tested the system and completed all tasks (McLellan et al., 2012). This helps ensure that participants remember what they liked and disliked about the system and can provide an accurate summation (McLellan et al., 2012). Brooke also notes that “respondents should be asked to record their immediate response to each item, rather than thinking about it for a long time” (1996, p. 8); however, he makes no recommendation about imposing an actual time limit on participants completing the SUS.

Randomizing the Order of System Tests

When administering a series of identical tasks to participants to compare two or more products, the tasks may become easier for participants to complete as they are repeated. This may lead to a higher SUS score for the product being tested last regardless of how usable it is. To avoid this, researchers should consider alternating the order in which tools are tested from one participant to the next when testing multiple systems or websites.
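
The alternating order described above is a form of counterbalancing. A minimal sketch, assuming full counterbalancing (cycling through every possible order of the systems so each order is used about equally often); the function name `assign_orders` and the system labels are hypothetical. With two systems this produces the simple A-then-B, B-then-A alternation; with four or more systems the number of orders grows quickly (4 systems give 24 orders), so a Latin square design may be more practical.

```python
from itertools import permutations


def assign_orders(systems, participant_count):
    """Give each participant a test order, cycling through every possible order.

    Hypothetical helper for full counterbalancing: participant 1 gets the first
    permutation, participant 2 the second, and so on, wrapping around as needed.
    """
    orders = list(permutations(systems))
    return [orders[i % len(orders)] for i in range(participant_count)]


schedule = assign_orders(["Current site", "Redesigned site"], 4)
for participant, order in enumerate(schedule, start=1):
    print(f"Participant {participant}: " + " then ".join(order))
```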

Understanding Users’ Prior Use

Prior use and experience with a website or system can affect scores on post-study questionnaires like the SUS, which may create additional challenges for researchers. Sauro found that “prior experience boosted usability ratings 11 percent for websites and consumer software” (2011a). Similarly, Borsci et al. noted that among users with more experience of a system, “the overall level of satisfaction will be higher than that among less experienced users” (2015, p. 494). Researchers may want to recruit participants with little or no experience with the website or system being tested, since users may rate a familiar system higher than an unfamiliar one, even if the unfamiliar product is more usable (Sauro, 2011a).

Scoring the SUS

Calculating Scores

The System Usability Scale has a complex grading structure, which may increase the likelihood of miscalculating scores (Brooke, 2013). As previously discussed, odd and even questions on the SUS are scored differently. Odd questions are scored 0–4 based on the 1–5 selection, where a selection of 1 equals 0 points, a selection of 2 equals 1 point, and so on (Sauro, 2011b). Even questions are scored 4–0 based on the 1–5 selection, where a selection of 1 equals 4 points, a selection of 2 equals 3 points, and so on. Points for all ten questions are added up for a total between 0 and 40. This total is multiplied by 2.5 to generate a SUS score between 0 and 100. Calculating scores, especially by hand, can therefore be tricky: because the point scale reverses from one question to the next, it is easy to assign the wrong number of points to a question, which throws off the entire final score. Survey tools may help minimize scoring errors. Simple survey tools such as SurveyMonkey can be used to administer the SUS and assign the correct number of points to each selection on the scale. This data can then be exported into Excel, where formulas can be applied to calculate the final scores. More advanced survey tools, such as Qualtrics, can be set up so that the correct number of points is automatically assigned to each selection on the scale and final scores are tabulated automatically when the survey is submitted.
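
Written as a single formula, the scoring rules above become the following, where r_i is the participant’s 1–5 response to item i (our notation, not the article’s):

$$
\text{SUS} = 2.5 \times \left[ \sum_{i \in \{1,3,5,7,9\}} (r_i - 1) \; + \sum_{i \in \{2,4,6,8,10\}} (5 - r_i) \right]
$$

Each of the two sums ranges from 0 to 20, so the bracketed total ranges from 0 to 40 and the final score from 0 to 100.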

Figure 1 shows an example of a graded SUS questionnaire. The total score for this example is 26. Multiplying 26 by 2.5 gives a SUS score of 65.
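
The same procedure can be scripted to avoid hand-scoring errors. This is a minimal sketch; the function name `sus_score` is hypothetical, and the example responses are made up to total 26 points (they are not the responses shown in Figure 1). Note that a participant who answers “5” to every item, as in the straight-lining pattern discussed earlier, receives 50, not 100.

```python
def sus_score(responses):
    """Compute a SUS score (0-100) from ten responses on the 1-5 scale.

    responses[0] is item 1, responses[1] is item 2, and so on.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += r - 1  # odd (positive) items: 1 -> 0 points, 5 -> 4 points
        else:
            total += 5 - r  # even (negative) items: 1 -> 4 points, 5 -> 0 points
    return total * 2.5  # scale the 0-40 point total to 0-100


# Hypothetical responses chosen to total 26 points.
example = [4, 2, 4, 2, 3, 3, 4, 2, 3, 3]
print(sus_score(example))
```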

Another challenge of SUS scoring is that once the final score is calculated, there is a “tendency for scores between 0 and 100 to be perceived as percentages,” when in fact the score must be normalized to produce a percentile ranking (Brooke, 2013, p. 35).

Normalizing Scores

It is important to remember that raw “SUS scores are not percentages” (Sauro, 2011b); scores must be normalized “to produce a percentile ranking” (Usability.gov, n.d.). Based on an analysis of more than 5,000 user scores from “almost 500 studies across a variety of application types” (Sauro, 2011c), the average SUS score is 68, with a standard deviation of 12.5 (Sauro & Lewis, 2016). A SUS score of 68 is at the 50th percentile, which means it is higher than the scores of 50 percent of all tested systems and applications (Sauro, 2011c).
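
For a rough sense of how normalization works, a raw score can be converted to an approximate percentile rank using the mean (68) and standard deviation (12.5) above. This sketch assumes SUS scores are normally distributed, which is a simplification of ours rather than the method behind published SUS percentile tables; those tables come from the observed distribution of scores, so results will differ somewhat. The name `approximate_percentile` is hypothetical; `statistics.NormalDist` is part of the Python standard library (3.8 and later).

```python
from statistics import NormalDist

# Assumption: SUS scores treated as normally distributed with the mean and
# standard deviation reported by Sauro and Lewis (2016). This is only an approximation.
SUS_BENCHMARK = NormalDist(mu=68, sigma=12.5)


def approximate_percentile(score):
    """Approximate percentile rank (0-100) of a raw SUS score against the benchmark."""
    if not 0 <= score <= 100:
        raise ValueError("SUS scores range from 0 to 100")
    return SUS_BENCHMARK.cdf(score) * 100


for score in (55.5, 65.0, 68.0, 80.5):
    print(f"SUS {score}: approximate percentile rank {approximate_percentile(score):.1f}")
```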

SUS scores can also be translated into letter grades, which may be helpful for communicating results to stakeholders. Since the average SUS score is 68, Sauro and Lewis (2016) note they “prefer to grade on a curve in which a SUS score of 68 is at the center of the range for a ‘C’” (p. 203). As shown in Table 1, the full “C” range is from 65.0 to 71.0 (Sauro & Lewis, 2016). The scale also indicates that a SUS score of 78.9 or above would earn an “A-” or higher, while a SUS score of 51.6 or below would earn an “F” (Sauro & Lewis, 2016).

Table 1. Curved grading scale for SUS scores (Sauro & Lewis, 2016)

| Letter grade | Numerical score range |
|---|---|
| A+ | 84.1–100 |
| A | 80.8–84.0 |
| A- | 78.9–80.7 |
| B+ | 77.2–78.8 |
| B | 74.1–77.1 |
| B- | 72.6–74.0 |
| C+ | 71.1–72.5 |
| C | 65.0–71.0 |
| C- | 62.7–64.9 |
| D | 51.7–62.6 |
| F | 0–51.6 |
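
Converting scores to letter grades from Table 1 is a simple lookup. In this minimal sketch (the names `GRADE_CUTOFFS` and `letter_grade` are hypothetical), each range’s lower bound is treated as a cutoff, so a mean score that falls between two published one-decimal ranges, such as 84.05, is assigned the lower of the two grades. That boundary handling is our assumption, not part of the published scale.

```python
# Lower bound of each grade range in Table 1, highest grade first.
GRADE_CUTOFFS = [
    (84.1, "A+"), (80.8, "A"), (78.9, "A-"),
    (77.2, "B+"), (74.1, "B"), (72.6, "B-"),
    (71.1, "C+"), (65.0, "C"), (62.7, "C-"),
    (51.7, "D"),
]


def letter_grade(score):
    """Map a SUS score (0-100) to a letter grade using the curved scale in Table 1."""
    if not 0 <= score <= 100:
        raise ValueError("SUS scores range from 0 to 100")
    for lower_bound, grade in GRADE_CUTOFFS:
        if score >= lower_bound:
            return grade
    return "F"  # 0-51.6


for score in (45.0, 65.0, 68.0, 71.5, 81.25):
    print(score, letter_grade(score))
```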

Other Post-Study Questionnaires

For libraries that want the kind of data the SUS can provide but find its shortcomings too difficult to deal with, a range of similar instruments is available as possible supplements to, or replacements for, the SUS. Many of these tools are shorter and easier to score than the SUS. I include here a brief discussion of four such tools for libraries interested in investigating other options.

Single-Item Adjective Rating Scale

For some libraries, a single-item scale may provide a good alternative to the SUS, or a useful supplement when added as an extra item at the end of the scale (Bangor et al., 2009). Single-item scales are easy to score and produce simple, transparent, big-picture data that can easily be shared with administrators and other stakeholders (Bangor et al., 2009). As previously noted, Bangor et al. added an adjective rating scale (fig. 2) to the end of the System Usability Scale questionnaire and found that "Likert scale scores correlate extremely well with the SUS" (2009, p. 114).

Even though a strong correlation between the SUS and the single-item rating scale was found, Bangor et al. (2009) caution against using a single-item scale on its own and recommend using it in conjunction with objective measures such as “task success rates or time-on-task measures” (p. 119) so that specific system problems can be identified.

UMUX

"Although the SUS is a quick scale, practitioners sometimes need to use reliable scales that are even shorter than the SUS to minimize time, cost, and user effort" (Borsci et al., 2015, p. 485). The Usability Metric for User Experience (UMUX) was developed to "provide an alternate metric for perceived usability for situations in which it was critical to reduce the number of items while still getting a reliable and valid measurement of perceived usability" (Lewis et al., 2015, p. 498). The UMUX is similar to the SUS in that odd-numbered items have a positive tone and even-numbered items have a negative tone (Lewis et al., 2015). The UMUX, however, has four items instead of ten and has "seven rather than five scale steps from 1 (strongly disagree) to 7 (strongly agree)" (Lewis et al., 2015, p. 498).

The four items that comprise the UMUX are as follows:

- This system’s capabilities meet my requirements.

- Using this system is a frustrating experience.

- This system is easy to use.

- I have to spend too much time correcting things with this system.

The UMUX is scored as [score – 1] for items one and three and [7 – score] for items two and four (Borsci et al., 2015). The scores for each item are summed, divided by 24, and multiplied by 100 (Borsci et al., 2015). Borsci et al. (2015) note that research incorporating the UMUX has found that the "scores have not only correlated with the SUS but also had a similar magnitude" (p. 486). However, they "recommend that researchers avoid using only the UMUX for their analysis of user satisfaction" because they found its results to be too optimistic (Borsci et al., 2015, p. 494).
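The UMUX scoring rule described above can be sketched as follows. The function name `umux_score` is hypothetical, and the code assumes responses are integers from 1 (strongly disagree) to 7 (strongly agree), listed in item order.

```python
def umux_score(responses: list[int]) -> float:
    """Return the 0-100 UMUX score for one participant's four responses (1-7)."""
    if len(responses) != 4:
        raise ValueError("UMUX requires exactly four responses")
    if any(r not in range(1, 8) for r in responses):
        raise ValueError("responses must be integers from 1 to 7")
    item1, item2, item3, item4 = responses
    # Items 1 and 3 are positively worded: score - 1
    # Items 2 and 4 are negatively worded: 7 - score
    total = (item1 - 1) + (7 - item2) + (item3 - 1) + (7 - item4)
    # The maximum total is 24, so this rescales to 0-100
    return total / 24 * 100
```

A participant who strongly agrees with both positive items and strongly disagrees with both negative items (responses 7, 1, 7, 1) scores 100.0, while answering 4 to every item gives 50.0.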

Researchers have offered recommendations on how and when to incorporate the UMUX into usability testing. Berkman and Karahoca (2016) note that researchers may benefit from the UMUX and UMUX-LITE "as lightweight tools to measure the perceived usability of a software system" (p. 107). They also observed that the UMUX and UMUX-LITE "may not be sensitive to differences between the software" when participants compare very similar systems that they may rate similarly (Berkman & Karahoca, 2016, p. 107). In such studies, they suggest using the UMUX in conjunction with another post-study questionnaire, such as the SUS. Borsci et al. (2015) and Lewis et al. (2013) recommend administering both the SUS and the UMUX or UMUX-LITE during advanced phases of design. Borsci et al. note that administering both scales during advanced phases gathers more comprehensive data "to assess user satisfaction with usability" (2015, p. 494).

Libraries may benefit from using the UMUX as a supplement to the SUS, particularly for studies comparing highly similar tools and during advanced phases of design testing. The UMUX may also be a viable replacement for the SUS in some circumstances because it consists of only four questions, correlates highly with the SUS, uses seven scale steps rather than five, and has a less complicated grading structure. For studies that test only one system or website, the UMUX may be a good alternative to the SUS if it is used in conjunction with objective performance measures, such as task completion rates and time on task.

UMUX-LITE

The UMUX-LITE is an even shorter, two-item version of the UMUX. It consists only of items one and three, the positive-tone items, from the UMUX instrument. Like the UMUX, it uses a 7-point rating scale (Lewis et al., 2015).

The two items that comprise the UMUX-LITE are:

- This system’s capabilities meet my requirements.

- This system is easy to use.

The UMUX-LITE is scored as [score – 1] for both items (Lewis, 2013). The scores are summed, divided by 12, and multiplied by 100 (Lewis, 2013). To create a score that corresponds in magnitude with SUS scores, this 0 to 100 value is then entered into the regression equation below, in which the item scores are the raw 1 to 7 responses, to produce the final UMUX-LITE score (Borsci et al., 2015).

$$\text{UMUX-LITE} = 0.65 \times \frac{(\text{Item 1 score} + \text{Item 2 score} - 2) \times 100}{12} + 22.9$$
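A minimal sketch of both UMUX-LITE calculations, assuming raw responses coded from 1 to 7. The hypothetical `umux_lite_raw` function returns the unadjusted 0 to 100 score, and the hypothetical `umux_lite_adjusted` function applies the regression equation above to that score.

```python
def umux_lite_raw(item1: int, item2: int) -> float:
    """Unadjusted UMUX-LITE score (0-100) from two raw 1-7 responses."""
    for response in (item1, item2):
        if response not in range(1, 8):
            raise ValueError("responses must be integers from 1 to 7")
    # Both items are positively worded, so each contributes (response - 1);
    # the maximum sum is 12, which is rescaled to 0-100
    return ((item1 - 1) + (item2 - 1)) / 12 * 100


def umux_lite_adjusted(item1: int, item2: int) -> float:
    """Regression-adjusted UMUX-LITE score, intended to track SUS magnitudes."""
    return 0.65 * umux_lite_raw(item1, item2) + 22.9
```

Because of the regression's slope and intercept, adjusted scores can only range from 22.9 (both items answered 1) to 87.9 (both items answered 7), which is worth keeping in mind when comparing them with SUS grades.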

Borsci et al. note that while the UMUX and UMUX-LITE are both “reliable and valid proxies of the SUS,” the UMUX-LITE, with the regression formula, “appears to have results that are closer in magnitude to the SUS than the UMUX, making it the more desirable proxy” (2015, p. 494). It is worth noting, however, that the application of the regression formula does add an additional layer of complication when grading the scale.

Researchers have also offered recommendations on how and when to incorporate the UMUX-LITE into usability testing. Borsci et al. (2015) and Lewis et al. (2013) suggest the UMUX-LITE may be best used in conjunction with the SUS and not as a replacement. Borsci et al. (2015) suggest using the UMUX-LITE as a "preliminary and quick tool to test users' reactions to a prototype" and then following up with a combination of the SUS and UMUX or UMUX-LITE in later testing (p. 494).

Libraries may benefit from using the UMUX-LITE as a supplement to the SUS, particularly in more advanced phases of usability testing. Although the UMUX-LITE has a slightly more complicated grading structure than the UMUX, it may also give libraries a reliable, quick, and easy-to-administer alternative to the SUS for testing websites and systems in very early usability testing phases. The two-question scale is very quick to administer online or in print, its regression formula produces results closer in magnitude to the SUS than the UMUX does (Borsci et al., 2015), and its questions use seven scale steps rather than five.

SUPR-Q

The Standardized User Experience Percentile Rank Questionnaire, known as the SUPR-Q, consists of eight items used to measure four website factors: "usability, trust, appearance, and loyalty" (Sauro, 2015, p. 73). The primary advantage the SUPR-Q may have over the SUS and UMUX is that it "measures more than just a single factor such as usability" (Sauro, 2015, p. 84). The SUPR-Q was created as the result of a three-part study, which spanned a five-year period and included "over 4,000 responses to experiences with over 100 websites" (Sauro, 2015, p. 84). This extensive research by Sauro (2015) also found "there was evidence of convergent validity with existing questionnaires," including the SUS (p. 84). With the purchase of a SUPR-Q full license, researchers can administer the questionnaire to an unlimited number of participants, paste raw test scores into a calculator to produce normalized scores and percentile ranks, and compare results to a database of other websites.[2]

Seven of the eight questions on the SUPR-Q are measured with a 5-point scale where 1 equals "strongly disagree" and 5 equals "strongly agree" (Sauro, 2015, p. 84). The fifth question asks users how likely they would be to recommend the website using an 11-point scale, with 0 being "not at all likely" and 10 being "extremely likely" (Sauro, 2015, p. 84). The first two questions on the scale rate usability, questions three and four rate trust, questions five and six rate loyalty, and questions seven and eight rate appearance (Sauro, 2015). To score the SUPR-Q, averages are taken for the questions graded on a 5-point scale and added to half the score for question five (Measuring Usability LLC, 2017).

The eight items in the SUPR-Q are (Sauro, 2015, p. 84):

- The website is easy to use.

- It is easy to navigate within the website.

- I feel comfortable purchasing from the website.

- I feel confident conducting business on the website.

- How likely are you to recommend this website to a friend or colleague?

- I will likely return to the website in the future.

- I find the website to be attractive.

- The website has a clean and simple presentation.

Libraries may benefit from the SUPR-Q as a replacement for the SUS in long-term usability projects that measure improvement across iterations of systems and websites, as well as for benchmarking how users rate a website's loyalty, trust, usability, and appearance (Sauro, 2015, p. 84). The SUPR-Q license features could save libraries a great deal of time in generating scores and comparing them with other websites, and could reduce the likelihood of scoring errors; however, many organizations may find the license cost difficult to justify.

Conclusion

While using the SUS in library website and system usability testing does present challenges, there are also several benefits libraries should consider. The SUS offers a quick and easy source of supplemental data for library website and system usability testing in a wide variety of contexts. Libraries both large and small can adopt and adapt this tool for the purposes of gaining usability insights and improving the user experience for patrons, as long as the specific challenges it presents are known and planned for ahead of time. There are a number of other similar tools that can supplement or possibly be used in lieu of the SUS, such as the UMUX, UMUX-LITE, or SUPR-Q. Data from tests such as the SUS can be useful for establishing baselines, communicating the impact of changes, or aiding in decision making.

REFERENCES

- Bangor, A., Kortum, P., & Miller, J. (2008). An empirical evaluation of the System Usability Scale. International Journal of Human-Computer Interaction, 24(6), 574–594.

- Bangor, A., Miller, J., & Kortum, P. (2009). Determining what individual SUS scores mean: Adding an adjective rating scale. Journal of Usability Studies, 4(3), 114–123. Retrieved from http://uxpajournal.org/determining-what-individual-sus-scores-mean-adding-an-adjective-rating-scale/

- Berkman, M. I., & Karahoca, D. (2016). Re-assessing the Usability Metric for User Experience (UMUX) scale. Journal of Usability Studies, 11(3), 89–109. Retrieved from http://uxpajournal.org/assessing-usability-metric-umux-scale/

- Borsci, S., Federici, S., Bacci, S., Gnaldi, M., & Bartolucci, F. (2015). Assessing user satisfaction in the era of user experience: Comparison of the SUS, UMUX, and UMUX-LITE as a function of product experience. International Journal of Human-Computer Interaction, 31(8), 484–495.

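- Brooke, J. (1996). SUS: A "quick and dirty" usability scale. In P. W. Jordan, B. Thomas, B. A. Weerdmeester, & I. L. McClelland (Eds.), Usability evaluation in industry (pp. 189–194). London: Taylor & Francis.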

- Brooke, J. (2013). SUS: A retrospective. Journal of Usability Studies, 8(2), 29–40. Retrieved from http://uxpajournal.org/sus-a-retrospective/

- Condit Fagan, J., Mandernach, M., Nelson, C. S., Paulo, J. R., & Saunders, G. (2012). Usability test results for a discovery tool in an academic library. Information Technology & Libraries, 31(1), 83–112.

- Finstad, K. (2006). The system usability scale and non-native English speakers. Journal of Usability Studies, 1(4), 185–188. Retrieved from http://uxpajournal.org/the-system-usability-scale-and-non-native-english-speakers/

- Finstad, K. (2010a). Response interpolation and scale sensitivity: Evidence against 5-point scales. Journal of Usability Studies, 5(3), 104–110. Retrieved from http://uxpajournal.org/response-interpolation-and-scale-sensitivity-evidence-against-5-point-scales/

- Finstad, K. (2010b). The usability metric for user experience. Interacting with Computers, 22(5), 323–327. doi:10.1016/j.intcom.2010.04.004

- Grudniewicz, A., Bhattacharyya, O., McKibbon, K. A., & Straus, S. E. (2015). Redesigning printed educational materials for primary care physicians: Design improvements increase usability. Implementation Science, 10, 1–13. doi:10.1186/s13012-015-0339-5

- Johnson, M. (2013). Usability test results for Encore in an academic library. Information Technology & Libraries, 32(3), 59–85.

- Kortum, P. T., & Bangor, A. (2013). Usability ratings for everyday products measured with the system usability scale. International Journal of Human-Computer Interaction, 29(2), 67–76. doi:10.1080/10447318.2012.681221

- Lewis, J. R. (2013). Critical review of 'The usability metric for user experience.' Interacting with Computers, 25(4), 320–324. doi:10.1093/iwc/iwt013

- Lewis, J. R., Utesch, B. S., & Maher, D. E. (2015). Measuring perceived usability: The SUS, UMUX-LITE, and AltUsability. International Journal of Human-Computer Interaction, 31(8), 496–505. doi:10.1080/10447318.2015.1064654

- Li, C. L., Adam, P. M., Townsend, A. F., Lacaille, D., Yousefi, C., Stacey, D., et al. (2013). Usability testing of ANSWER: A web-based methotrexate decision aid for patients with rheumatoid arthritis. BMC Medical Informatics & Decision Making, 13(1), 1–22. doi:10.1186/1472-6947-13-131

- Measuring Usability LLC. (2017). SUPR-Q: Standardized UX Percentile Rank. Retrieved from http://www.suprq.com/index.php

- Muddimer, A., Peres, S. C., & McLellan, S. (2012). The effect of experience on System Usability Scale ratings. Journal of Usability Studies, 7(2), 56–67. Retrieved from http://uxpajournal.org/the-effect-of-experience-on-system-usability-scale-ratings/

- Perrin, J. M., Clark, M., De-Leon, E., & Edgar, L. (2014). Usability testing for greater impact: A Primo case study. Information Technology & Libraries, 33(4), 57–66.

- Sauro, J. (2011a). Does prior experience affect perceptions of usability? Retrieved from http://www.measuringu.com/blog/prior-exposure.php

- Sauro, J. (2011b). Measuring usability with the System Usability Scale (SUS). Retrieved from http://www.measuringu.com/sus.php

- Sauro, J. (2011c). SUStisfied? Little-known System Usability Scale facts. User Experience: The Magazine of the User Experience Professionals Association, 10(3). Retrieved from http://uxpamagazine.org/sustified/

- Sauro, J. (2015). SUPR-Q: A comprehensive measure of the quality of the website user experience. Journal of Usability Studies, 10(2), 68–86. Retrieved from http://uxpajournal.org/supr-q-a-comprehensive-measure-of-the-quality-of-the-website-user-experience/

- Sauro, J., & Lewis, J. R. (2016). Quantifying the user experience: Practical statistics for user research. Amsterdam; Waltham, MA: Elsevier/Morgan Kaufmann.

- Tsopra, R., Jais, J., Venot, A., & Duclos, C. (2014). Comparison of two kinds of interface, based on guided navigation or usability principles, for improving the adoption of computerized decision support systems: Application to the prescription of antibiotics. Journal of the American Medical Informatics Association, 21, e107–e116. doi:10.1136/amiajnl-2013-002042

- Usability.gov. (n.d.). System Usability Scale (SUS). Retrieved from http://www.usability.gov/how-to-and-tools/methods/system-usability-scale.html

- Zhang, T., Maron, D. J., & Charles, C. C. (2013). Usability evaluation of a research repository and collaboration web site. Journal of Web Librarianship, 7(1), 58–82. doi:10.1080/19322909.2013.739041

1. Other possible options not discussed in this article include the SUMI (Software Usability Measurement Inventory), the WAMMI (Website Analysis and Measurement Inventory), and the Post Study System Usability Questionnaire (PSSUQ).

2. More information about the SUPR-Q full and limited licenses can be found on the Measuring Usability LLC website (Measuring Usability LLC, 2017).