Speed Matters: Performance Enhancements for Library Websites


This work is licensed under a Creative Commons Attribution 3.0 License. Please contact [email protected] to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

This paper was refereed by Weave's peer reviewers.

Abstract

For a user, speed matters. A quick-loading web page facilitates a positive and pleasant user experience. In this article, I discuss the performance of 129 library websites, and detail a practical plan for addressing common performance issues.

Introduction and Context

To the user, speed matters. The principle of “saving the time of the reader” was codified in 1931 as one of S. R. Ranganathan’s “Five Laws of Library Science.” Ranganathan’s defense of the user’s time finds new expression today through the web, where a fast library website can save the user’s time. In this paper, I discuss the web performance of 129 library websites, and detail a step-by-step performance enhancement plan that I implemented successfully for my library’s website.

The technical performance of the web has been a subject of study in the field of human-computer interaction and other computer science subfields for some time. The phrase “end-to-end” was one of the earliest terms of inquiry for web performance studies, used to describe the network process of routing information packets across the web (Bolot, 1993; Krishnamurthy & Wills, 2000). A landmark study arrived in 1997, when Paxson investigated the nature of slow network connections and their impact on the end user. In examining network connections between different web servers across the country over two study periods—once in 1994 and again in 1995—Paxson found that the likelihood of a user encountering slow page load time more than doubled year-to-year, concluding that different sites encounter very different network routing characteristics that can affect the user’s experience with a web page (1997, p. 614). Paxson’s study demonstrated two fundamental and related aspects of web performance: networked systems such as the World Wide Web are increasingly complex and will consequently over time tend to become slower.

Barford and Crovella described the situation in 1999 simply: “One of the most vexing questions facing researchers interested in the World Wide Web is why users often experience long delays in document retrieval…The question that motivates our work is a basic one: why is the web so slow?” (1999, p. 27). Others at the time pointed a wry finger at the web, remarking that WWW is often associated with “World Wide Waiting” (Charzinski, 2001, p. 37). Though not operating strictly within the context of user experience (UX), these early studies recognized that perceived slowness could affect the user’s satisfaction with a website. In a 2002 study of website usability, design, and performance, Palmer proposed that speed could serve as a success metric for websites, noting that the performance factors of a website are “relatively simple to measure...and have a significant linkage with site success” (2002, p. 164). Palmer’s suggestion can be readily applied to today’s UX environment, with web performance emerging as a key measure of the user’s experience. Stated directly, if your website is slow, your users’ experience will suffer.

More recent publications have reiterated the essential importance of speed. Hogan, in an excellent new book from O’Reilly,[1] asserts that speed affects experience, especially in the context of the web, where “page load time and how fast your site feels is a large part of this user experience and should be weighed equally with the aesthetics of your site” (2014, p. 1). Hogan’s book echoes Paxson and others whose earlier research illustrated the growing complexity of the web and its resulting impact on performance:

Collectively we are designing sites with increasingly rich content: lots of dynamic elements, larger JavaScript files, beautiful animations, complex graphics, and more. You may focus on optimizing design and layout, but those can come at the expense of page speed (Hogan, 2014, p. 8).

As web content continues to grow in size and complexity, three key measures can reveal basic performance impact: page weight, page requests, and page structure.

- Page weight—The combined file size of all the resources used to build a page, including markup, styles, scripts, images, and fonts.

- Page requests—The total number of resource files used to build a page. When a user loads a web page, the web browser requests various resources from a web server, and each request is transferred via HTTP to the browser, which then incrementally assembles the page content for the user.

- Page structure—The markup configuration that defines the sequence of steps used by the browser to assemble page resources, also known as the critical rendering path.[2]
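The first two measures reduce to simple arithmetic over the list of files a page loads. The following is a minimal sketch in Python, assuming a hypothetical resource inventory; the `Resource` class, the file paths, and the byte counts are invented for illustration and are not drawn from the study's dataset.

```python
from dataclasses import dataclass


@dataclass
class Resource:
    """One file the browser requests while building a page."""
    url: str         # hypothetical path, for illustration only
    kind: str        # "html", "css", "js", "image", or "font"
    size_bytes: int  # size of the file as transferred


def page_weight_kb(resources):
    """Page weight: combined size of all resources (1 kB = 1,000 bytes here)."""
    return sum(r.size_bytes for r in resources) / 1000


def page_requests(resources):
    """Page requests: one HTTP request per resource file."""
    return len(resources)


# Made-up inventory for a small page; none of these figures come from the dataset.
example_page = [
    Resource("/index.html", "html", 12_000),
    Resource("/css/site.css", "css", 24_000),
    Resource("/js/site.js", "js", 48_000),
    Resource("/img/banner.jpg", "image", 150_000),
]

print(page_weight_kb(example_page))  # 234.0
print(page_requests(example_page))   # 4
```

Page structure does not reduce to a single number in the same way, which is why the sketch covers only weight and requests.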

Trend analysis of data from the HTTP Archive shows that the average page weight for the World Wide Web has grown from 705 kB in 2010 to 2,194 kB in 2015, an increase of 211 percent. The average number of page requests has also increased 33 percent over that same time period, from 74 to 99. In general terms, the web is getting bigger and—without performance optimization—will likely get slower.

To counterbalance the growing complexity of the web, we (web developers, web designers, librarians, user experience designers) must prioritize performance in our workflows by continually evaluating and optimizing for speed. This optimization work can be categorized into three primary areas: front-end optimization (focusing on web browser enhancements), back-end optimization (focusing on web server enhancements), and network optimization (focusing on network connection speeds). General best practices and techniques for web performance optimization across these three areas have been well documented, such as Steve Souders’s “14 Rules for Faster-Loading Web Sites.” Entire websites, in fact, have been created strictly for web performance education, and with clever names too, like “browserdiet.com.” One of the best performance walk-throughs I’ve found is a presentation from Ilya Grigorik, a member of Google’s Make the Web Fast team, whose “Crash Course on Web Performance” provides a clear and extensive overview of factors affecting web speed today. As an employee of Google, Grigorik articulates a crucial aspect of web performance in this overview: “Treat performance as a business metric, not a technical one” (Grigorik, 2012). With this statement, Grigorik appeals to a broad and inclusive vision of web performance, where the details of page load times are not the sole domain of web developers, but are instead relevant throughout an organization. The “business metric” is, in essence, the basic measure of success for any organization. For user-focused libraries, key measures of success include user satisfaction and frequency of use. A fast website benefits online users by saving time, ultimately creating satisfying experiences that lead to return visits. Brad Frost expresses this nicely: “Performance is about respect. Respect your users’ time and they will be more likely to walk away with a positive experience. Good performance is good design” (Frost, 2013). Hogan reiterates this point in calling for an organization-wide culture of performance: “The largest hurdle to creating and maintaining stellar site performance is the culture of your organization” (2014, p. 135).

By creating cultures of performance and prioritizing speed, many organizations have affirmed the value of speed to the user. Twitter, for example, published a blog post recognizing that “to connect you to information in real time, it’s important for Twitter to be fast” (Twitter, 2012). In 2009, Google Research shared an experiment showing that a reduced-speed search results page had a measurable impact on the number of searches conducted (Brutlag, 2009). This theme has remained constant for Google, whose Chrome team reasserted in 2015, “Speed is one of the founding principles of Chrome. As the web evolves and sites take advantage of increasing capabilities, Chrome’s performance becomes even more important” (Schoen, 2015). Google Search also counts site speed as a signal in determining link placement in the search engine results page, noting, “Faster sites create happy users and we've seen in our internal studies that when a site responds slowly, visitors spend less time there” (Sighal & Cutts, 2010). By recognizing the importance of speed, Mozilla generated a 15.4 percent increase in download conversions by improving the performance of its landing page by 2.2 seconds (Cutler, 2010). The user experience of web performance can produce other real-world effects, as with the Obama 2012 campaign fundraising website, which generated a 14 percent increase in donation conversions after launching with a 60 percent speed improvement over its previous iteration (Rush, 2012). A panoply of other public and private organizations have also prioritized web performance, including National Public Radio, GQ, Microsoft, and the New York Times (Cooper & Bachorik, 2015; Moses, 2015; Web Performance Inc., 2010; Konigsburg, 2013). In service to their users and their bottom lines, these companies have achieved and documented a faster web, in effect providing answers to the researchers of the 1990s who asked, “Why is the web so slow?”

As for library websites, web performance has not yet been treated at length in the library literature. In reviewing past publications, I found that performance is inconsistently applied as a principle of user-centered design or as a measure of user experience. In establishing a checklist of user-centered design principles for library websites, Raward includes a few items relating to speed, such as “Home page displays within 10 seconds with a 33.6 modem?” and “Is each page size under 70k?” (2001, p. 134). While these performance standards are now outdated, Raward effectively positions the principle of speed within a broader and more enduring context of user-centered design. Subsequent work, however, has not maintained a focus on performance best practices. In a study of the usability and design of nearly 1,500 public and academic library websites, researchers adapted a number of previous studies—including Raward’s checklist—in evaluating dozens of design criteria, but chose not to include any aspect of performance in their evaluation (Chow, 2014). In a similar analysis of Indiana public library websites, researchers evaluated over 100 different design elements, but performance was likewise not included (Thorpe & Lukes, 2015).

Where performance has been included, the topic typically appears briefly or indirectly within discussions of website usability and design. In a study of the factors affecting university library website design, Kim found that a majority of user survey respondents agreed that a library website is successful when “it lets me finish my project more quickly” (2011, p. 99). The scope of Kim’s study, however, does not allow for an exploration into the details of this important performance-related finding. In a study of mobile website usability, Pendell and Bowman identify five primary problems encountered by users in mobile environments, including “unusually long page loading time” (2012, p. 54). But a discussion of exactly how to address long page loading time is beyond the scope of their work. In another study investigating the mobile user experience, Seeholzer and Salem found that users “were not very concerned with sites that were unformatted for mobile devices, as long as the sites loaded quickly” (2011, p. 19). Methods for developing quick-loading websites also fall outside the scope of their study. And Brown and Yunkin, in examining the history of website design at the University of Nevada – Las Vegas Libraries, report that the homepage was once described as “graphics-heavy and slow to load” (2014, p. 25). While recognizing specific issues such as slow page load, Brown and Yunkin ultimately offer only broad recommendations for library website design, such as “keep the user in mind.”

So in keeping the user in mind, and with many other industries and disciplines examining and writing about the specifics of web performance, I wondered—how well do library websites perform? How widely are industry performance standards implemented in library websites? How can libraries prioritize and address performance issues? With this paper, I want to return to Ranganathan’s law by asking and answering the question: Do library websites save the time of the user?

Developing a Library Web Performance Enhancement Plan

The central research question of this project revolved around the performance of library websites, and I aimed ultimately to create a practical “Web Performance Enhancement Plan” that would be applicable for library websites and beneficial to library website users. In the course of my research, I found that most library websites fall below performance standards. I also found that there are clear and identifiable steps for addressing shortfalls that libraries can follow to improve the performance of their websites.

In order to evaluate the speed of library websites and to develop a relevant performance enhancement plan, I examined the performance of 129 library websites during the period of February–September 2015. Institutions were selected from the member list of the Digital Library Federation, a community of web and digital practitioners whose membership includes a range of small, medium, and large academic libraries from across North America. In working from the membership list, I aimed to create a representative sample of library websites for performance evaluation. For each library website, I evaluated the performance of the homepage, as an example of a primary starting point and landing page for users, and the “about” page, as an example of a common secondary page.

Performance Diagnostic Tools

Performance testing was conducted using two leading diagnostic tools, Yahoo’s YSlow and Google’s PageSpeed Insights. These tools perform an automated evaluation of a given web page’s URL, measuring the performance of each web page against a set of rules and producing a score for each rule. PageSpeed Insights evaluates ten different performance rules, with three possible scores for each rule: passed rules, consider fixing, should fix.[3] Google’s documentation further explains these terms: “passed rules” indicates that no significant issues were found for a given rule; “consider fixing” indicates that a rule should be addressed if it wouldn’t involve a lot of work; and “should fix” indicates that addressing a given rule would have a measurable impact on page performance. YSlow tests twenty-three different performance rules, with six possible scores for each: A, B, C, D, E, F.[4] Each tool then produces a single overall numerical score out of one hundred, generated from the aggregate scores across all rules. During the evaluation process, I also recorded other key metrics: HTTP requests, page weight, and image weight.[5]

Google PageSpeed Insights—Performance Rules

- Leverage browser caching

- Eliminate render-blocking JavaScript and CSS

- Enable compression

- Optimize images

- Minify CSS

- Minify JavaScript

- Minify HTML

- Avoid landing page redirects

- Prioritize visible content

- Reduce server response time

Yahoo YSlow—Performance Rules

- Make fewer HTTP requests

- Use a content delivery network (CDN)

- Avoid empty src or href

- Add expires headers

- Compress components with gzip

- Put CSS at top

- Put JavaScript at bottom

- Avoid CSS expressions

- Make JavaScript and CSS external

- Reduce DNS lookups

- Minify JavaScript and CSS

- Avoid URL redirects

- Remove duplicate JavaScript and CSS

- Configure entity tags (ETags)

- Make AJAX cacheable

- Use GET for AJAX requests

- Reduce the number of DOM elements

- Avoid HTTP 404 error

- Reduce cookie size

- Use cookie-free domains

- Avoid AlphaImageLoader filter

- Do not scale images in HTML

- Make favicon small and cacheable

Library Web Performance—Homepage

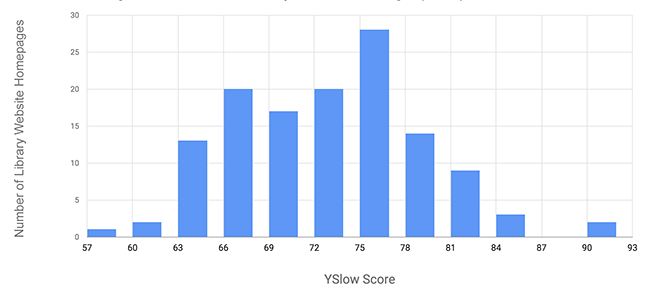

A histogram of overall scores for library homepages from PageSpeed Insights (fig. 1) and YSlow (fig. 2) shows the distribution of scores produced from each tool. The median PageSpeed Insights score was 65, and the median YSlow score was 73. These diagnostic tests revealed that library website homepages can indeed be improved. PageSpeed Insights documentation states that a score of 85 or above indicates that the page is performing well, and while YSlow has not published score guidelines, a higher score is generally considered to be better. Two key measures further demonstrate the current state of library website homepage performance: page weight (fig. 3) and HTTP requests (fig. 4). The median page weight for library homepages was 967 kB, and the median number of HTTP requests was 48. Since various pages may be more or less complex in design, there is no exact performance benchmark for page weight and HTTP requests. This analysis intends to convey the relative states of library websites included in the performance dataset.

Library Web Performance—About Page

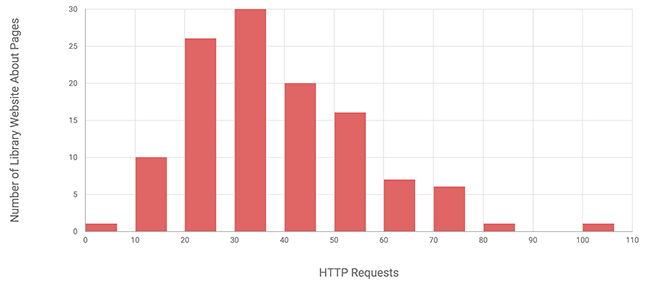

Analysis of library website about pages produced similar results. Ten library websites did not feature a discernable about page, and so the dataset for about pages includes 119 library websites. A histogram of scores for library about pages from PageSpeed Insights (fig. 5) and YSlow (fig. 6) shows the distribution of scores produced from each tool. The median PageSpeed Insights score was 72, and the median YSlow score was 75. While moderately improved over the homepages, the diagnostic tools revealed that library website about pages can also be improved. Results for page weight (fig. 7) and HTTP requests (fig. 8) for about pages reveal that they are lighter than homepages and request fewer HTTP resources. The median page weight for library about pages was 601 kB, and the median number of HTTP requests was 35.

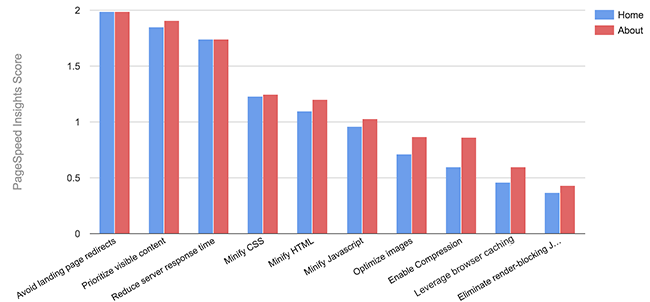

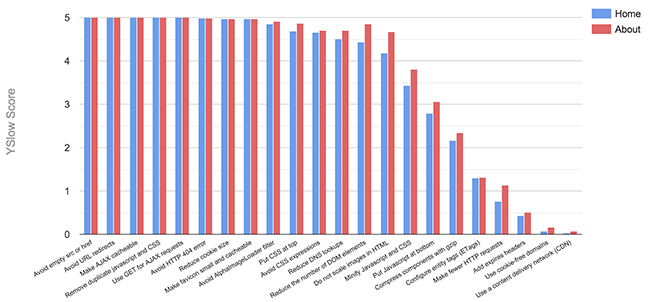

Performance Rules

To take a deeper dive into the results, I then looked at the individual performance rules of library website homepages and about pages as scored by both PageSpeed Insights (fig. 9) and YSlow (fig. 10). For the purposes of data analysis, I converted each tool’s rule scores to numerical scores. PageSpeed Insights scores were converted to a scale of 0 to 2, with 2 for “passed rules,” 1 for “consider fixing,” and 0 for “should fix.” YSlow scores were converted to a scale of 0 to 5, with 5 for an A, 4 for a B, 3 for a C, 2 for a D, 1 for an E, and 0 for an F. I aggregated the results for all institutions and calculated the mean rating for each performance rule analyzed by PageSpeed Insights and YSlow. While not directly comparable, these two scales effectively show performance relative to their respective rules and scoring systems.

With its granularity, this process helped identify strengths and weaknesses in the performance of the library websites included in the dataset. Results show that library website homepages and about pages scored widely across a range of performance rules.

The score distributions also allowed for a further categorization of results that informed the creation of a priority list of performance enhancements—the Library Web Performance Enhancement Plan. I formed three categories of performance rules according to the scores produced by PageSpeed Insights (Table 1) and YSlow (Table 2): High-Scoring, Medium-Scoring, and Low-Scoring. High-scoring rules scored an average between 1.5 and 2 from PageSpeed Insights and between 4 and 5 from YSlow; medium-scoring rules scored an average between 1 and 1.5 from PageSpeed Insights and between 2 and 4 from YSlow; low-scoring rules scored an average between 0 and 1 from PageSpeed Insights and between 0 and 2 from YSlow.
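The conversion and categorization described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the analysis script used for the study: the sample labels are invented, and because the category ranges share their endpoints, the sketch assumes that a mean falling exactly on a boundary belongs to the higher category.

```python
# Point values for each tool's rule scores, as described in the text.
PAGESPEED_POINTS = {"passed rules": 2, "consider fixing": 1, "should fix": 0}
YSLOW_POINTS = {"A": 5, "B": 4, "C": 3, "D": 2, "E": 1, "F": 0}


def mean_rating(labels, points):
    """Convert each label to its numeric value and average across sites."""
    values = [points[label] for label in labels]
    return sum(values) / len(values)


def categorize(avg, medium_floor, high_floor):
    """Assign a scoring category from a mean rating.

    Assumption: a mean exactly on a boundary falls in the higher category.
    """
    if avg >= high_floor:
        return "high"
    if avg >= medium_floor:
        return "medium"
    return "low"


# Invented results for one rule across four sites (not from the dataset).
pagespeed_labels = ["passed rules", "passed rules", "consider fixing", "should fix"]
yslow_grades = ["A", "A", "B", "C"]

ps_avg = mean_rating(pagespeed_labels, PAGESPEED_POINTS)  # (2 + 2 + 1 + 0) / 4
ys_avg = mean_rating(yslow_grades, YSLOW_POINTS)          # (5 + 5 + 4 + 3) / 4

print(ps_avg, categorize(ps_avg, medium_floor=1, high_floor=1.5))  # 1.25 medium
print(ys_avg, categorize(ys_avg, medium_floor=2, high_floor=4))    # 4.25 high
```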

Table 1. PageSpeed Insights

| High-Scoring Rules | Medium-Scoring Rules | Low-Scoring Rules |
|---|---|---|
| | | |

Table 2. YSlow

| High-Scoring Rules | Medium-Scoring Rules | Low-Scoring Rules |
|---|---|---|
| | | |

From these three categories, I identified the most and least urgent performance issues present in the dataset. I considered rules that scored within the “high scoring” category to be less urgent, and so these rules were not included in the Web Performance Enhancement Plan. I considered rules that scored within the “medium scoring” and “low scoring” categories to be more urgent, and so these rules were included in the Web Performance Enhancement Plan. Fifteen unique rules from PageSpeed Insights and YSlow were categorized as medium or low performing, but many rules are similar, such as PageSpeed Insights’ “Enable compression” and YSlow’s “Compress components with gzip.” This allowed for about half of the rules to be combined in the Web Performance Enhancement Plan. I considered two particular YSlow rules to be exceptions that were not included in the plan: “Use a content delivery network (CDN)” and “Use cookie-free domains.” These two rules’ low scores and potential urgency are outweighed by other factors. A CDN, a method for delivering web content from geographically distributed web servers (and typically an expensive third-party service), is not likely a justifiable purchase for many libraries that serve mostly local user communities. And while removing cookies from a web property will likely improve performance, it may not be feasible for libraries to follow the steps required to address this issue, such as creating multiple subdomains from which to serve static content. By curating a list of relevant performance rules, I aimed to create a high-impact and achievable Web Performance Enhancement Plan that would be applicable to the widest possible number of libraries.

And so, armed with the results of my analysis of 129 library websites, I drafted the Library Web Performance Enhancement Plan.

Web Performance Enhancement Plan

- Minify CSS and JavaScript

- Minify HTML

- Optimize images

- Reduce HTTP calls

- Enable compression

- Add expires headers/leverage browser caching

- Eliminate render-blocking JavaScript and CSS in above-the-fold content

Implementing a Web Performance Enhancement Plan

In order to test the efficacy and impact of the Web Performance Enhancement Plan, I applied it to the library website homepage of my own institution, Montana State University. Before beginning implementation, baseline performance measures of the website were established by following the same evaluation process described above. The results from this initial evaluation were included in the full performance evaluation dataset. At each subsequent step of the plan and at its completion, I remeasured the performance of the website, thereby tracking the impact and progress of the plan (Table 3). I maintained the same visual design and functionality of the homepage during the implementation of the plan so as to isolate each performance step in the evaluation testing.

| Step | PageSpeed Insights Score | YSlow Score | Page Weight (kB) | HTTP Requests |
|---|---|---|---|---|
| Initial evaluation | 76 | 73 | 720 | 30 |
| 1. Minify CSS/JS | 78 | 75 | 668 | 30 |
| 2. Minify HTML | 79 | 75 | 666 | 30 |
| 3. Optimize images | 80 | 77 | 611 | 30 |
| 4. Reduce HTTP requests | 82 | 81 | 435 | 16 |
| 5. Enable compression | 89 | 83 | 309 | 16 |
| 6. Add expires headers | 94 | 95 | 298 | 16 |
| 7. Eliminate render-blocking content | 96 | 96 | 238 | 15 |
| Final evaluation | 96 | 96 | 238 | 15 |

In the initial pre-enhancement evaluation, the homepage scored a 76 from PageSpeed Insights (76th percentile in the performance dataset) and a 73 from YSlow (46th percentile). The homepage weighed 720 kB (29th percentile) with 30 HTTP requests (11th percentile). After completing the Performance Enhancement Plan, the homepage scored a 96 from PageSpeed Insights (99th percentile equivalent) and a 96 from YSlow (99th percentile equivalent), with a page weight of 238 kB (1st percentile equivalent and a decrease of 67 percent) and 14 HTTP requests (1st percentile equivalent and a decrease of 53 percent). In short, the Performance Enhancement Plan worked.
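The page weight reductions reported here and in the sections that follow can be reproduced from the page weight column of Table 3. The short Python sketch below does only that arithmetic; the function name is my own, and the figures are copied from the table.

```python
def percent_decrease(before, after):
    """Percentage by which a value shrank from `before` to `after`."""
    return (before - after) / before * 100


# Page weight (kB) at the initial evaluation and after each of the seven steps,
# copied from Table 3.
page_weight_kb = [720, 668, 666, 611, 435, 309, 298, 238]

# Step-by-step reductions.
for before, after in zip(page_weight_kb, page_weight_kb[1:]):
    change = percent_decrease(before, after)
    print(f"{before} kB -> {after} kB: {change:.1f} percent lighter")

# Overall reduction from the initial to the final evaluation.
overall = percent_decrease(page_weight_kb[0], page_weight_kb[-1])
print(f"overall: {overall:.0f} percent")  # overall: 67 percent
```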

In the following sections, I address each point of the plan directly and detail the step-by-step implementation process that I followed for my library’s website. Each step of the plan contains a level of depth and detail that I am unable to address in the scope of this article, so I also include links to further readings and documentation from Google, Yahoo, and Designing for Performance. While certain steps individually affected performance metrics to a greater degree than others, all steps of the plan ultimately operated in concert to create a coherent and effective program for performance enhancement.

Minify CSS and JavaScript

Minifying stylesheets and scripts comes as the first step because it is among the most straightforward to implement. “Minification” entails the removal of spaces in markup and code. For a human reader, spaces between characters and words are necessary for interpretation, but for machine readers such as a web browser, spaces are unnecessary and even undesirable, as spaces can add weight to a resource. Many free web tools exist that automate minifying and unminifying resources.[6]

Before minifying the CSS for the homepage, the markup contained spaces unnecessary for a web browser:

.social-icons a.facebook:hover {
    background-color: #305fb3;
}

.social-icons a.twitter:hover {
    background-color: #2daae1;
}

.social-icons a.youtube:hover {
    background-color: #ff3333;
}

.social-icons a.pinterest:hover {
    background-color: #e1003a;
}

.social-icons a.tumblr:hover {
    background-color: #44546b;
}

After minification, the same CSS can be rendered on a single line and interpreted seamlessly by the browser:

.social-icons a.facebook:hover{background-color:#305fb3}.social-icons a.twitter:hover{background-color:#2daae1}.social-icons a.youtube:hover{background-color:#f33}.social-icons a.pinterest:hover{background-color:#e1003a}.social-icons a.tumblr:hover{background-color:#44546b}

Minifying the primary CSS file for the homepage reduced its size by 33 percent (24 kB to 16 kB). Minifying our 6 JavaScript resources and 3 CSS resources reduced page weight by 7 percent (720 kB to 668 kB). While this gain in itself was modest, it represented one gradual step in a process which builds towards a high-impact result. After this step, the PageSpeed Insights score increased from 76 to 78, and the YSlow score increased from 73 to 75.
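To make the idea concrete, the whitespace removal shown above can be approximated with a few regular expressions. This is a deliberately naive sketch in Python, not the tool used for the homepage: it assumes simple stylesheets like the sample, does not shorten color values such as `#ff3333`, and would mangle whitespace inside quoted strings or selectors that depend on a space before a colon. The function name `minify_css` is hypothetical.

```python
import re


def minify_css(css: str) -> str:
    """Naive whitespace-only CSS minifier (a sketch, not safe for every stylesheet)."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop comments
    css = re.sub(r"\s+", " ", css)                        # collapse runs of whitespace
    css = re.sub(r"\s*([{}:;,])\s*", r"\1", css)          # trim around punctuation
    css = css.replace(";}", "}")                          # final semicolon is optional
    return css.strip()


sample = """
.social-icons a.facebook:hover {
    background-color: #305fb3;
}

.social-icons a.twitter:hover {
    background-color: #2daae1;
}
"""

minified = minify_css(sample)
print(minified)
print(len(sample), "->", len(minified), "characters")
```

A maintained minifier handles the edge cases this sketch ignores, so the regular expressions here are best read as an explanation of what such tools remove.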

Further reading

Minify HTML

The same best practice principles of minification apply to markup. Prior to minifying our homepage HTML, the markup contained many spaces that are necessary for human interpretation but unnecessary for machine interpretation:

<div id="rightpane">
    <div id="aside">
        <ul class="nav-tabs">
            <li class="active"><a href="index.php">Hours</a></li>
            <li><a href="index.php?view=news">News</a></li> <li><a
                href="index.php?view=chat">Chat</a></li>
        </ul>
    </div>
</div>

To complete the minification step, I simply unindented each line of HTML:

<div id="rightpane">

<div id="aside">

<ul class="nav-tabs">

<li class="active"><a href="index.php">Hours</a></li>

<li><a href="index.php?view=news">News</a></li> <li><a href="index.php?view=chat">Chat</a></li>

</ul>

</div>

</div>

Minifying our one HTML resource reduced page weight by 0.3 percent (668 kB to 666 kB). After this step, the PageSpeed Insights score increased from 78 to 79, and the YSlow score remained 75.
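The unindenting step is simple enough to express in code. The Python sketch below strips leading and trailing whitespace from each line and drops blank lines; it is an illustration rather than the process I followed by hand, and it assumes markup with no whitespace-sensitive elements such as `pre` or `textarea`. The function name `unindent_html` is hypothetical.

```python
def unindent_html(markup: str) -> str:
    """Strip indentation from every line and drop lines that are empty.

    Assumption: the markup contains no whitespace-sensitive elements
    (for example <pre> or <textarea>), where this would change rendering.
    """
    stripped = (line.strip() for line in markup.splitlines())
    return "\n".join(line for line in stripped if line)


indented = """<div id="aside">
    <ul class="nav-tabs">
        <li class="active"><a href="index.php">Hours</a></li>
    </ul>
</div>
"""

flat = unindent_html(indented)
print(flat)
print(len(indented) - len(flat), "characters removed")
```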

Further reading

Optimize Images

The role of images in web performance has been well documented and much discussed. Trend analysis of data from the HTTP Archive shows that images have accounted for more than 50 percent of page weight since measurement began in November 2010, and as of November 2015, images account for 64 percent of average page weight. In Designing for Performance, Hogan notes, “optimizing images is arguably the easiest big win when it comes to improving your site’s page load time” (2014, p. 27). Images have been elsewhere described as “the biggest culprit for bloated web pages” (Avery, 2014). Images on library websites are no different. In my performance dataset, images on average accounted for 25 percent of total homepage weight and 11 percent of total about page weight. This data suggests that images on library websites can impact performance in a big way.

Optimizing existing images is an approach that can offer performance gains without requiring a redesign. “Optimization” in this case entails choosing an appropriate file format and then reducing file size with compression tools. For my library’s website, I converted nineteen images to JPEG, a reduced-size file format. I then further reduced file size with Kraken, a web tool that compresses image files. Other tools for Windows, Mac, and the web can be used to compress image files.[7]

Before optimization, images accounted for 80 percent of the total weight of our homepage. After optimization, images accounted for 77 percent of total page weight. In all, optimizing our nineteen image resources reduced page weight by 8 percent (666 kB to 611 kB). After this step, the PageSpeed Insights score increased from 79 to 80, and the YSlow score increased from 75 to 77. This modest improvement illuminated two aspects of web performance enhancement: optimizing images offers solid performance benefits, but greater gains can result from reducing the total number of images requested via HTTP. I explore this second aspect in the next section.

Further reading

Reduce HTTP Requests

This step currently offers a high potential for performance gains. YSlow’s FAQ on grading considers the rule “Make fewer HTTP requests” to be the most important aspect of web performance, and Hogan states, “Optimizing the size and number of requests that it takes to create your web page will make a tremendous impact on your site’s page load time” (2014, p. 14). For my library website’s homepage, reducing HTTP requests started with a critical review of each resource. Prior to addressing this step, the homepage comprised thirty requests: nineteen image files, six JavaScript files, three CSS files, one text file, and one HTML file. I reduced the overall number of requests to sixteen by combining or removing resources to build a page consisting of eleven images, three JavaScript files, one CSS file, and one HTML file. The highest-impact approach was simply to reduce the number of images on the page. While it was not feasible to entirely eliminate our homepage’s slideshow carousel, I reduced the number of slides by half, from six to three.[8] Prior to this step, our homepage used a JavaScript file to interact with the Instagram API, dynamically loading six Instagram images onto the page. In order to optimize this page element, I eliminated the JavaScript request and created a single image that presented a static grid-view of Instagram images, thereby reducing both our JavaScript requests and our image requests while maintaining the same basic experience of this design element. I took a more straightforward approach for our CSS by simply combining three separate files into one single file, which was rendered seamlessly by the browser. Our one text file was an out-of-date resource that was easily removed. In all, reducing our HTTP requests from 30 to 16 reduced page weight by 29 percent (611 kB to 435 kB). After this step, the PageSpeed Insights score increased from 80 to 82, and the YSlow score increased from 77 to 81.
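A request inventory like the one described above is easy to tally by resource type. The Python sketch below uses only the counts reported in this section; the dictionary layout and variable names are my own choices for illustration.

```python
# Request counts by resource type, as reported in this section.
before = {"image": 19, "javascript": 6, "css": 3, "text": 1, "html": 1}
after = {"image": 11, "javascript": 3, "css": 1, "html": 1}

total_before = sum(before.values())
total_after = sum(after.values())

# Show where the savings came from, type by type.
for kind, count in before.items():
    remaining = after.get(kind, 0)  # a type absent from `after` was removed entirely
    print(f"{kind}: {count} -> {remaining} ({count - remaining} fewer)")

print(f"total: {total_before} -> {total_after}")  # total: 30 -> 16
```

Keeping the inventory in this form makes it clear that images account for most of the removed requests (eight of fourteen).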

While keeping all of the above in mind, a recent development in the HTTP protocol promises to considerably change the performance enhancement technique of reducing HTTP requests. The technical architecture of HTTP/1.x currently limits the number of resources that can be loaded by the browser at a single time, thus significantly slowing page load time if a large number of resources are required. The architecture of HTTP/2, on the other hand, is designed for “multiplexing,” a new method for delivering resources concurrently to the browser.[9] While not yet widely adopted, HTTP/2 will likely become the norm and, from the perspective of web performance, will render HTTP request reduction unnecessary.[10]

Enable Compression

Compressing your page resources can offer instant performance gains. Gzip is the leading method for web resource compression, and it can be enabled for specific file types through server configuration settings or easy-to-install plug-ins and extensions for content management systems such as WordPress and Drupal. My library’s website runs on an Apache server, so our server configuration for enabling compression is a simple one-line directive:

AddOutputFilterByType DEFLATE text/html text/plain text/xml text/css text/javascript

This setting compresses all HTML, XML, plain text, JavaScript, and CSS files that pass from our web server to a web browser. Enabling compression reduced our homepage weight 29 percent (435 kB to 309 kB). After this step, the PageSpeed Insights score increased from 82 to 89, and the YSlow score increased from 81 to 83.
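To get a feel for why text resources respond so well to compression, the effect can be reproduced locally with Python's standard gzip module. This is a minimal sketch rather than a measurement of our homepage: the sample markup is a hypothetical stand-in, and real savings depend on the content of each file.

```python
import gzip

# Hypothetical stand-in for a text resource; repetitive markup compresses well.
sample_line = '<li class="nav-item"><a href="/hours">Library hours</a></li>\n'
html = (sample_line * 200).encode("utf-8")

compressed = gzip.compress(html)
restored = gzip.decompress(compressed)

print(f"original:   {len(html)} bytes")
print(f"compressed: {len(compressed)} bytes")
print(f"round trip intact: {restored == html}")
```

The browser performs the decompression step automatically, so the user receives the same content in fewer bytes.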

Add Expires Headers/Leverage Browser Caching

Caching page resources in the web browser can also offer instant performance gains. When a resource is saved and cached in the browser, it does not need to be requested again from the server on the next visit. With this setting enabled, your returning visitors will experience much faster page load times. Through this process, you can also set how long a page resource remains cached before it “expires” and must again be requested by the browser. For example, a CSS file that is edited infrequently can be given an expires setting of 30 days, thus allowing a web browser to cache this file for one month before requesting it again. During this one-month period, a returning visitor to your website will benefit from having this CSS file stored for quicker access and faster loading by the web browser. As with resource compression, the exact configuration setting for adding expires headers and enabling browser caching will depend on your local server environment. Since my library’s website runs on an Apache server, I used the following server syntax to complete this step:

<IfModule mod_expires.c>

#Enable expirations

ExpiresActive on

#Default directive

ExpiresDefault "access plus 30 days"

#favicon

ExpiresByType image/x-icon "access plus 1 year"

#cache

ExpiresByType text/cache-manifest "access plus 0 seconds"

#Data

ExpiresByType text/xml "access plus 15 days"

ExpiresByType application/xml "access plus 15 days"

ExpiresByType application/json "access plus 15 days"

#Images

ExpiresByType image/gif "access plus 30 days"

ExpiresByType image/png "access plus 30 days"

ExpiresByType image/jpg "access plus 30 days"

ExpiresByType image/jpeg "access plus 30 days"

#CSS

ExpiresByType text/css "access plus 30 days"

#Javascript

ExpiresByType application/javascript "access plus 1 year"

ExpiresByType text/javascript "access plus 1 year"

#HTML

ExpiresByType text/html "access plus 43200 seconds"

#Fonts

ExpiresByType application/vnd.ms-fontobject "access plus 1 year"

ExpiresByType application/x-font-ttf "access plus 1 year"

ExpiresByType application/x-font-opentype "access plus 1 year"

ExpiresByType application/x-font-woff "access plus 1 year"

ExpiresByType font/truetype "access plus 1 month"

ExpiresByType image/svg+xml "access plus 1 month"

</IfModule>

These settings add various expires timeframes for all HTML, XML, JSON, plain text, JavaScript, CSS, image, and font files that pass from our web server to a web browser. The web server and the web browser coordinate cached resources through a mechanism known as Entity Tags. Once you’ve enabled browser caching, you will also want to check that your server’s “ETags” are configured correctly. Leveraging browser caching reduced page weight 3.5 percent (309 kB to 298 kB). After this step, the PageSpeed Insights score increased from 89 to 94, and the YSlow score increased from 83 to 95.
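To make the effect of an “access plus” rule more concrete, the following Python sketch computes the kind of caching headers such a rule produces for a resource requested at a given moment. It is an approximation under stated assumptions, not a reimplementation of Apache: the lifetimes are copied from three of the directives above, while the function name, dictionary, and sample access time are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from email.utils import format_datetime

# Lifetimes copied from a few of the directives above (names are hypothetical).
LIFETIMES = {
    "text/css": timedelta(days=30),
    "text/html": timedelta(seconds=43200),
    "text/xml": timedelta(days=15),
}
DEFAULT_LIFETIME = timedelta(days=30)  # mirrors the ExpiresDefault directive


def caching_headers(content_type: str, accessed: datetime) -> dict:
    """Build Expires and Cache-Control values for a resource accessed at `accessed`."""
    lifetime = LIFETIMES.get(content_type, DEFAULT_LIFETIME)
    return {
        # `accessed` must be a timezone-aware UTC datetime for usegmt=True.
        "Expires": format_datetime(accessed + lifetime, usegmt=True),
        "Cache-Control": f"max-age={int(lifetime.total_seconds())}",
    }


# Hypothetical access time, chosen only for illustration.
accessed = datetime(2016, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
css_headers = caching_headers("text/css", accessed)
html_headers = caching_headers("text/html", accessed)
print(css_headers)
print(html_headers)
```

Until the expiry time passes, a browser that honors these headers can reuse its stored copy without contacting the server.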

Eliminate Render-Blocking JavaScript and CSS in Above-The-Fold Content

This step involves optimizing the delivery of JavaScript and CSS resources for page content that loads in the immediate view of a user (above the so-called page fold). In practical terms, this means loading CSS resources at the top of the page, and asynchronously loading JavaScript at the bottom. To complete this step for my library website’s homepage, I added the “async” HTML attribute to our primary JavaScript file:

<script async type="text/javascript" src="meta/scripts/scripts-all-min.js"></script>

I also moved all the styles from our single homepage CSS file, already quite small at 7.7 kB, into the HTML head of our homepage, thereby improving page load time by reducing our HTTP requests and removing the dependency on this potentially render-blocking external CSS file. Eliminating render-blocking JavaScript and CSS reduced page weight 20 percent (298 kB to 238 kB), and reduced HTTP requests from 16 to 15. After this step, the PageSpeed Insights score increased from 94 to 96, and the YSlow score increased from 95 to 96.
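Moving a small stylesheet into the HTML head can be done by hand, but the idea can also be sketched as a short script. The example below is a minimal illustration only: the page markup, the stylesheet path, and the function name are all hypothetical, and a production site would more likely rely on a build tool or a template include.

```python
def inline_stylesheet(html: str, link_tag: str, css: str) -> str:
    """Replace an external stylesheet <link> with an inline <style> block."""
    if link_tag not in html:
        raise ValueError("stylesheet link not found in page")
    # Replace only the first occurrence so other markup is left untouched.
    return html.replace(link_tag, f"<style>{css}</style>", 1)


# Hypothetical page and stylesheet contents, for illustration only.
link = '<link rel="stylesheet" href="meta/styles/home.css">'
page = f"<html><head>{link}</head><body><h1>Library</h1></body></html>"
css = "h1{margin:0}"

inlined = inline_stylesheet(page, link, css)
print(inlined)
```

The trade-off is that inlined styles are no longer cached separately by the browser, so this approach is best suited to small stylesheets.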

Further Reading

- https://developers.google.com/speed/docs/insights/OptimizeCSSDelivery

- https://developers.google.com/speed/docs/insights/BlockingJS

- https://developer.yahoo.com/performance/rules.html#css_top

- https://developer.yahoo.com/performance/rules.html#js_bottom

- http://designingforperformance.com/optimizing-markup-and-styles/#css-and-javascript-loading

Web Performance in Practice

The Web Performance Enhancement Plan proved to be a great success for the MSU Library website. From a visual standpoint, the homepage interface itself went mostly unchanged through this process. Only when looking under the hood is it possible to discern the differences. The performance enhancements detailed in this paper translated into a faster-loading library homepage and an overall improved experience for our users. Results from this research and walk-through can help inform the work of library web practitioners and user experience designers, with the Web Performance Enhancement Plan serving as one possible method for ensuring that your pages load quickly.

Beyond the immediate impact of the plan are issues of workflow integration and the long-term sustainability of performance optimization. To help introduce or reinforce performance-focused measures into existing workflows, I recommend “network checks,” which entail testing a website or web application under a variety of network speeds to ensure usability and functionality across a range of internet connection environments. Many users in advanced countries such as South Korea, Sweden, and Japan enjoy average broadband connections that near an ideal 30 Mbps, but many other users across the globe, in countries such as Vietnam, Indonesia, and Argentina, access the web over less-than-ideal mobile connections that average near 1 Mbps (Belson, 2015). Within the United States, network connection speeds can also vary greatly. In 2011, the average download speed in Dover, Delaware, 18.9 Mbps, was nearly three times that of Dallas, Texas, at 6.8 Mbps (Statista, 2011). Broadband adoption is another relevant website performance indicator that also shows a disparity among U.S. states, with Delaware recording a 97 percent adoption rate of 4 Mbps broadband in the first quarter of 2015 and West Virginia recording a 63 percent adoption rate (Belson, 2015). The reality of varying network connection speeds is especially relevant for libraries whose users access web content from a variety of geographic areas, mobile devices, and rural environments (Hogan, 2014).
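A rough back-of-the-envelope calculation shows why connection speed matters so much for heavier pages. The sketch below estimates idealized transfer time for two page weights reported in this article (611 kB before reducing HTTP requests, 238 kB after the final step) at the two connection speeds mentioned above. It assumes 1 kB equals 1,000 bytes and ignores latency, connection setup, and rendering, so actual load times will be longer; the function name is my own.

```python
def transfer_seconds(page_kb: float, mbps: float) -> float:
    """Idealized time to move `page_kb` kilobytes over a `mbps` link (latency ignored)."""
    bits = page_kb * 1000 * 8          # assumes 1 kB = 1,000 bytes
    return bits / (mbps * 1_000_000)   # 1 Mbps = 1,000,000 bits per second


pages = [("before reducing requests", 611), ("after final step", 238)]
for label, kb in pages:
    for mbps in (1, 30):
        seconds = transfer_seconds(kb, mbps)
        print(f"{label}: {kb} kB at {mbps} Mbps takes about {seconds:.2f} s")
```

Even under these generous assumptions, the difference in page weight is far more noticeable on the slower connection.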

To track and measure web performance, I also recommend “performance benchmarking,” which entails documenting page weight and other performance metrics using PageSpeed Insights, YSlow, and other widely available performance monitoring tools such as WebPageTest and Byte Check. To provide additional structure to performance monitoring, a “performance budget” can also be applied to a web page or a web application, which entails setting limits for key metrics such as page weight, HTTP requests, PageSpeed Insights score, YSlow score, speed index, and page render times.[11] Advanced CSS animations, large images, and complex JavaScript functions can dramatically impact the performance of a website, and such costly functionality should be weighed against the goals of the site, its users, and the potential impact on web performance. As more functionality is added to a website or web app, a performance budget can serve as a check against unnecessary or overly costly web page elements. Performance budgets can also be gamified, as demonstrated by the University of Minnesota Libraries Web Development Team, which has created a Speed Leader Board that publicizes up-to-date PageSpeed Insights scores for each Big Ten university library’s website homepage.
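A performance budget can be as simple as a short script that compares measured values against agreed limits. The following sketch is one possible shape for such a check. The budget thresholds are hypothetical examples, not recommendations, while the measured values are the final homepage figures reported in this article (238 kB, 15 requests, and scores of 96 and 96).

```python
# Hypothetical limits: ceilings for weight and requests, floors for scores.
MAXIMUMS = {"page_weight_kb": 300, "http_requests": 20}
MINIMUMS = {"pagespeed_score": 90, "yslow_score": 90}


def over_budget(measured: dict) -> list:
    """Return the names of metrics that fall outside the budget."""
    failures = [name for name, limit in MAXIMUMS.items() if measured[name] > limit]
    failures += [name for name, floor in MINIMUMS.items() if measured[name] < floor]
    return failures


# Final homepage figures reported in this article.
homepage = {
    "page_weight_kb": 238,
    "http_requests": 15,
    "pagespeed_score": 96,
    "yslow_score": 96,
}

failures = over_budget(homepage)
print(failures if failures else "within budget")
```

Running a check like this whenever new functionality is proposed gives a team a concrete prompt to discuss whether the added cost is justified.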

As part of a comprehensive web analytics program, practices such as performance benchmarking and performance budgeting can be used to understand, evaluate, and improve library websites. The role of web analytics overall has notably increased in recent years as libraries have begun incorporating analytics tools into web workflows and user experience research. Recent studies detail the benefits offered by web analytics to user-centered web design and development (Barba et al., 2013; Veregin & Wortley, 2014; Young, 2014; Tobias & Blair, 2015). Helpful library-focused analytics tutorials and practical walk-throughs have also appeared in recent years (Hess, 2012; Yang & Perrin, 2014). In reviewing the function of web analytics reporting for academic library websites, Hogan concludes that web analytics have the potential to be “a treasure trove of valuable decision-making information for libraries” (2014, p. 32). Indeed, web performance metrics can provide a valuable structure for shaping a library’s website, with page load time and its associated measures helping to form the core of a library’s website design and user experience evaluation.

Final Thoughts

Performance is a fundamental aspect of a user’s experience of the web. How fast or slow a page loads should be a matter of practical concern for those designing and developing the web. Through research into library website performance, I found that many library websites exhibit similar strengths and weaknesses across a range of performance rules. Using this research data as a foundation for improvement, I created a seven-step Library Web Performance Enhancement Plan that I implemented for my own library’s website. By following this plan, the performance of my library website’s homepage markedly improved, with gains across key performance metrics such as PageSpeed Insights score, YSlow score, page weight, and HTTP requests. This plan, which can be implemented with a modest commitment of time and resources, produced an achievable and effective pathway for performance enhancement. While the nuances of your local environment may generate greater or lesser gains, web performance enhancement and maintenance promise to save the time of the user, ultimately leading to an overall positive impact for your website’s users.

Data Availability

All research data is available from the author’s institutional repository, Montana State University ScholarWorks: http://doi.org/10.15788/m23w2g.

Acknowledgements

For their illuminating insight, I would like to thank the Skylight writing group: Ryer Banta, Sara Mannheimer, and Kirsten Ostergaard. My thanks also to Krista Godfrey for many valuable video chats along the way.

References

- Avery, J. (2014). Reducing image sizes. Retrieved from https://responsivedesign.is/articles/reducing-image-sizes

- Barba, I., Cassidy, R., De Leon, E., & Williams, B. J. (2013). Web analytics reveal user behavior: TTU Libraries’ experience with Google Analytics. Journal of Web Librarianship, 7(4), 389–400.

- Barford, P., & Crovella, M. (1999). Measuring web performance in the wide area. Performance Evaluation Review, 27(2), 37–48.

- Belson, D. (2015). State of the Internet, Q1 2015 report [PDF document]. Vol. 8 No. 1. Akamai. Retrieved from https://www.stateoftheinternet.com/downloads/pdfs/2015-q1-state-of-the-internet-report.pdf

- Bolot, J.-C. (1993). End-to-end packet delay and loss behavior in the Internet. In Conference Proceedings on Communications Architectures, Protocols and Applications (pp. 289–298). New York: Association for Computing Machinery.

- Brown, J. M., & Yunkin, M. (2014). Tracking changes: One library’s homepage over time—findings from usability testing and reflections on staffing. Journal of Web Librarianship, 8(1), 23–47.

- Brutlag, J. (2009). Speed matters. Retrieved from http://googleresearch.blogspot.com/2009/06/speed-matters.html

- Charzinski, J. (2001). Web performance in practice—why we are waiting. International Journal of Electronics and Communications, 55(1), 37–45.

- Chow, A. S., Bridges, M., & Commander, P. (2014). The website design and usability of U.S. academic and public libraries. Reference & User Services Quarterly, 53(3), 253–265.

- Cooper, P., & Bachorik, J. (2015). NPR.org now twice as fast. Retrieved from http://www.npr.org/sections/thisisnpr/2015/10/27/451147757/npr-org-now-twice-as-fast

- Cutler, B. (2010). Firefox & page load speed—part II. Retrieved from https://blog.mozilla.org/metrics/2010/04/05/firefox-page-load-speed-%E2%80%93-part-ii/

- Frost, B. (2013). Performance as design. Retrieved from http://bradfrost.com/blog/post/performance-as-design/

- Grigorik, I. (2012). Crash course on web performance [PDF document]. Retrieved from https://www.igvita.com/slides/2012/webperf-crash-course.pdf

- Hess, K. (2012). Discovering digital library user behavior with Google Analytics. Code4Lib Journal, (17).

- Hogan, L. C. (2014). Designing for performance. Cambridge: O’Reilly Media, Inc.

- Kim, Y.-M. (2011). Factors affecting university library website design. Information Technology and Libraries, 30(3), 99–107.

- Konigsburg, E. (2013). Web performance at the New York Times [Video file]. Retrieved from https://youtu.be/2dwBB2Xa_B0

- Krishnamurthy, B., & Wills, C. E. (2000). Analyzing factors that influence end-to-end web performance. Computer Networks, 33(1–6), 17–32.

- Moses, L. (2015). How GQ cut its webpage load time by 80 percent. Retrieved from http://digiday.com/publishers/gq-com-cut-page-load-time-80-percent/

- Palmer, J. W. (2002). Web site usability, design, and performance metrics. Information Systems Research, 13(2), 151–167.

- Paxson, V. (1997). End-to-end routing behavior in the Internet. IEEE/ACM Transactions on Networking, 5(5), 601–615.

- Pendell, K. D., & Bowman, M. S. (2012). Usability study of a library’s mobile website: An example from Portland State University. Information Technology and Libraries, 31(2), 45–62.

- Ranganathan, S. R. (1931). The five laws of library science. Madras: Madras Library Association.

- Raward, R. (2001). Academic library website design principles: Development of a checklist. Australian Academic & Research Libraries, 32(2), 123–136.

- Rush, K. (2012). Meet the Obama campaigns $250 million fundraising platform. Retrieved from http://kylerush.net/blog/meet-the-obama-campaigns-250-million-fundraising-platform/

- Schoen, R. (2015). Chrome improvements for a faster and more efficient web. Retrieved from http://chrome.blogspot.com/2015/09/chrome-improvements-for-faster-and-more.html

- Seeholzer, J., & Salem, J. A. (2011). Library on the go: A focus group study of the mobile web and the academic library. College & Research Libraries, 72(1), 9–20.

- Singhal, A., & Cutts, M. (2010). Using site speed in web search ranking. Retrieved from http://googlewebmastercentral.blogspot.com/2010/04/using-site-speed-in-web-search-ranking.html

- Statista. (2011). Average download speeds in selected U.S. cities in 2011 (in mbps). Retrieved from http://www.statista.com/statistics/210601/average-download-speeds-in-us-cities/

- Thorpe, A., & Lukes, R. (2015). A design analysis of Indiana public library homepages. Public Library Quarterly, 34(2), 134–161.

- Tobias, C., & Blair, A. (2015). Listen to what you cannot hear, observe what you cannot see: An introduction to evidence-based methods for evaluating and enhancing the user experience in distance library services. Journal of Library & Information Services in Distance Learning, 9(1-2), 148–156.

- Twitter. (2012). Improving performance on twitter.com. Retrieved from https://blog.twitter.com/2012/improving-performance-on-twittercom

- Veregin, H., & Wortley, A. J. (2014). Using web analytics to evaluate the effectiveness of online maps for community outreach. Journal of Web Librarianship, 8(2), 125–146.

- Web Performance, Inc. (2010). Microsoft affirms the importance of web performance. Retrieved from http://www.webperformance.com/load-testing/blog/2010/06/microsoft-affirms-the-importance-of-web-performance/

- Yang, L., & Perrin, J. M. (2014). Tutorials on Google Analytics: How to craft a web analytics report for a library web site. Journal of Web Librarianship, 8(4), 404–417.

- Young, S. W. (2014). Improving library user experience with A/B testing: Principles and process. Weave: Journal of Library User Experience, 1(1). Retrieved from http://dx.doi.org/10.3998/weave.12535642.0001.101

Notes:

[1] Also available on the web with a CC-BY-NC-ND license: http://designingforperformance.com/.

[2] Detailed discussion of this important and nuanced concept: https://developers.google.com/web/fundamentals/performance/critical-rendering-path/.

[3] See the full documentation for more detail: https://developers.google.com/speed/docs/insights/about; also see Google’s performance guidelines for detail regarding various PageSpeed Insights rules: https://developers.google.com/web/fundamentals/performance/.

[4] See the full documentation for more detail: http://yslow.org/ruleset-matrix/. I tested websites using YSlow V2, from which one rule was excluded by default (Make JavaScript and CSS external).

[5] Full dataset available at http://doi.org/10.15788/m23w2g.

[6] For example, http://cssminifier.com/ and http://unminify.com/.

[7] For example: RIOT for Windows, http://luci.criosweb.ro/riot/; ImageOptim for Mac/iOS devices, https://imageoptim.com/; Smush it on the web, http://www.imgopt.com/.

[8] For a more critical take on slideshow carousels, see http://shouldiuseacarousel.com/.

[9] For more information, see: https://http2.github.io/faq/#why-is-http2-multiplexed; http://rmurphey.com/blog/2015/11/25/building-for-http2.

[10] For further reading on HTTP/2, see Chapter 12 of High Performance Browser Networking by Ilya Grigorik: http://chimera.labs.oreilly.com/books/1230000000545/ch12.html.

[11] For more on performance budgeting, see https://timkadlec.com/2014/11/performance-budget-metrics/ and http://danielmall.com/articles/how-to-make-a-performance-budget/.