Educational Development Efforts Aligned With the Assessment Cycle


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Please contact [email protected] to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

Abstract

Using an assessment cycle as an organizing framework, this article illustrates how educational development and assessment complement each other. It describes an assessment study conducted to determine whether two colleges at a small university met their strategic goals to increase the adoption of learning-centered teaching. The study served the parallel function of assessing the impact of sustained educational development efforts by the Center for Teaching and Learning (CTL) to promote learning-centered teaching. The majority of interviewed faculty reported using learning-centered approaches. The data collection method itself also served as a teachable moment for faculty who do not attend CTL events. The data were then used to plan further development efforts, completing the assessment cycle. Subsequent events educated faculty about rarely used or poorly understood components of learning-centered teaching.

Keywords: accountability, assessment, development, faculty development, pedagogy

Promoting effective teaching practices is a core function for educational developers, and they have been advocating for learning-centered teaching as an effective practice for over a decade (Sorcinelli, Austin, Eddy, & Beach, 2006). With this approach to teaching, the focus shifts from what the instructor does, such as giving a clear lecture on the content, to how much and how well the students are learning the material. Instructors thus become facilitators of student learning who identify why content is learned, determine how it can be learned, offer continuous formative feedback, and help students take responsibility for their learning (Blumberg, 2009).

In addition to promoting effective teaching practices, educational developers are well positioned to lead or collaborate with faculty on the study of teaching practices (Kebaste & Sims, 2016; Sorcinelli, Gray, & Birch, 2011). When educational developers broaden their scope from working with individuals to collaborating with departments or colleges, they are poised to improve the overall educational quality of programs. Moving from looking at the impact of individual teachers to studying teaching practices in programs can be a manageable and appropriate next step for educational developers. This enlargement of purpose makes educational developers more central to institution-wide improvement efforts, and it can position them to influence more individuals than they might reach through one-on-one consultations (Schroeder, 2011). Because educational developers are often trusted by both the faculty and the administration, they can act as change agents who promote organizational development through formative assessment efforts. Because such assessment provides feedback for the purpose of improvement, it aligns with the core function of educational developers: the promotion of effective teaching.

Value of Teaching at This Institution

Good teaching is required for promotion at this small, private, tuition-driven university. Within this university, and especially within two colleges (arts and sciences and health professions), good teaching is defined by learning-centered practices. Many faculty members are eager for professional development and attend faculty development events or seek consultation with the Center for Teaching and Learning (CTL; University of the Sciences, Teaching and Learning Center, 2005). There is a strong tradition of sharing teaching practices through discussions and peer-to-peer presentations (Blumberg, 2004; Blumberg & Everett, 2005). By facilitating this kind of formative development, the CTL furthers its goal of supporting the kind of good teaching the institution values.

In support of such good teaching, but not as any part of summative faculty evaluation, the author, who has directed the CTL for over 15 years, conducted the assessment study reported here to identify current teaching practices. Specifically, she took an inventory of the use of learning-centered teaching practices, because increased use of these methods should ultimately increase student learning (Weimer, 2013). The study served two complementary purposes: to determine whether two colleges met their strategic goals of implementing learning-centered teaching and to determine the impact of the CTL’s sustained faculty development efforts toward similar goals.

Definitions and Distinctions

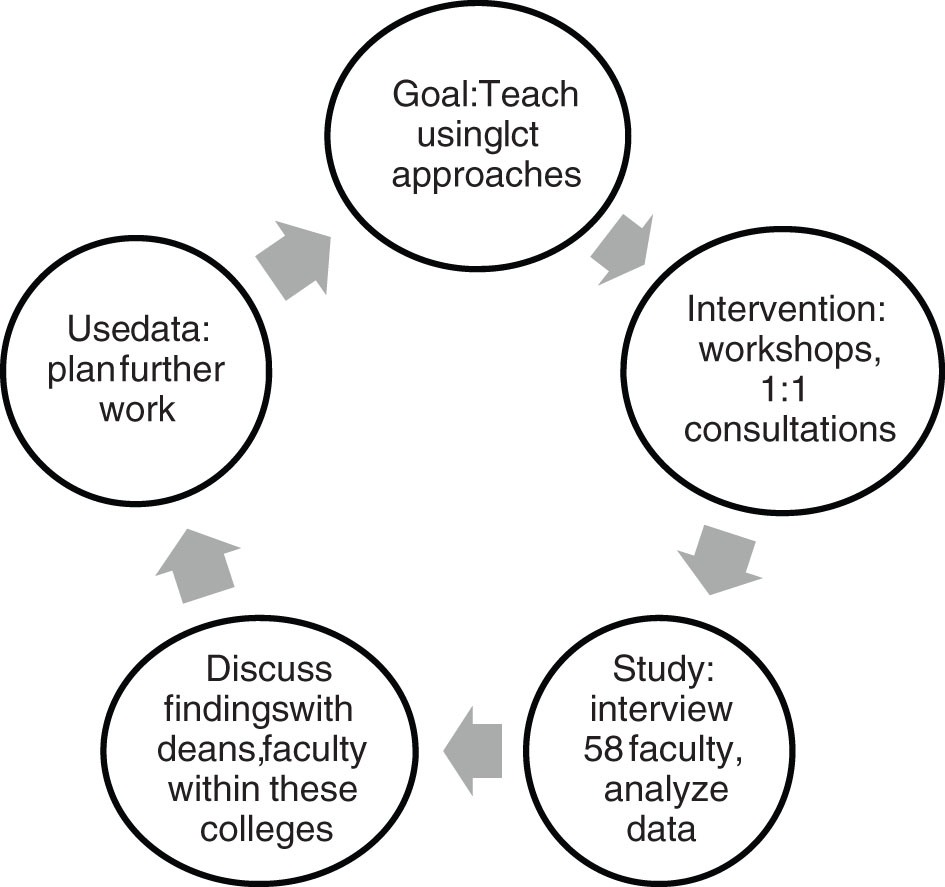

The author defines “assessment” and the “assessment cycle” according to classic assessment and evaluation literature. Assessment implies the systematic collection, analysis, and use of data and is conducted for the purpose of improvement (Palomba, 2001). To promote the use of data to plan future actions, Suskie (2009) popularized a heuristic called the assessment cycle (Figure 1). This assessment cycle focuses on interpreting and sharing results to enhance practices. Within this framework, data only become meaningful and therefore valuable when they are used to identify priorities for further actions or to make plans for improvements over what is currently done. When individuals make a commitment to ongoing improvement, assessment becomes an integral part of the teaching process (Maki, 2010). Furthermore, college-level assessments consider the alignment of faculty members’ actions with the college’s mission statement and strategic goals (Maki, 2010).

Just as definitions provide focus, distinctions from related terms clarify the direction and scope of this study. Although it occurs at the college level, this study is not an institutional evaluation, because it does not gauge the colleges’ broader effectiveness. Institutional effectiveness would look at more varied outcomes, such as meeting stakeholder needs or deploying resources effectively (Suskie, 2015). Because evaluation implies a judgment or a decision (Stark & Thomas, 1994), this study is not a program evaluation: it is not intended to draw conclusions about the worth of these programs or to provide judgments about continuing them. Nor is it a program review or self-study, because such studies are often mandated by a higher authority, such as an accreditation agency (Stark & Thomas, 1994). It is not faculty evaluation either, because it does not use the data for summative judgment of individual faculty performance (Banta & Palomba, 2015). Finally, while assessments often involve research processes, the focus here is on improvement and quality assurance rather than on the expansion of scientific knowledge (Suskie, 2015).

Culture of Assessment and the Role of the CTL

Historically, assessment at this institution was completed sporadically and for the purpose of accreditation. In fact, the regional accreditors noted in 2013 that while much data were collected, they were not used to make changes or improvements. Newer accreditation standards that focus on outcomes have led to much greater university-wide attention to assessment, and the new university president (inaugurated in 2013) is dedicated to assessment and accountability.

The two colleges involved in this study value assessment differently. All of the programs in the College of Health Professions are accredited by professional organizations. Thus, all of the faculty within this college are used to doing program reviews and completing periodic self-studies. In contrast, the educational programs within the College of Arts and Sciences are not accredited. Only the majors within one department are certified by a professional association, and these certifications are more concerned with curriculum than program review. Within the last few years, faculty in the College of Arts and Sciences have been encouraged by university-level administrators to engage in more assessment. Their college-wide assessment concentrates on whether the goals of their strategic plan have been met.

Accordingly, the provost has also recognized that an important part of assessment work involves educating faculty to accept and to prepare for doing assessments, and the director of the CTL was recruited to cochair the University Assessment Council. Under the director’s leadership, this university-wide council aims to change the culture to accept all aspects of the assessment cycle as essential for ongoing program improvement (Hines, 2009). The council assists faculty and administrators in their efforts to measure the quality of educational programs by showing them how to conduct assessments of their teaching using appropriate direct and indirect measures. Currently, data from assessment studies are stimulating rich discussions about teaching and learning and the educational environment at this university.

Organizational Framework for This Article

Because this article reports on an assessment study, it is logical to use Suskie’s (2009) assessment cycle as the organizational framework. The headings reflect the steps of this assessment cycle instead of the usual sections of a research paper (i.e., introduction, methods, results, discussion, and conclusion). Figure 2 applies the assessment cycle to the goal of implementing learning-centered teaching in two of the university’s colleges. Rather than explaining the results of using learning-centered practices in these two colleges, the discussion focuses on how the data can be used for improvement, further demonstrating how the assessment cycle is useful for educational development purposes.

Figure 2. Assessment Cycle as Applied to Learning-Centered Teaching (LCT) Educational Development Efforts

Goal: Teach Using Learning-Centered Teaching Practices

According to the assessment cycle, an effective process begins by setting a goal. While there has been a history of strategic plans at the university and college level, the faculty and administrators have rarely assessed whether they met their strategic goals. With the conscious shift to an assessment culture, there is a new focus on assessing how well strategic goals are met. The current strategic plans of the College of Arts and Sciences (approved in 2011) and the College of Health Professions (approved in 2012) both state that the faculty will use learning-centered teaching practices. Instead of encouraging many different effective teaching techniques, faculty at both colleges chose to embrace learning-centered teaching practices to promote more consistency in instruction. They did so because there is strong research evidence that learning-centered teaching increases student learning (Blumberg, 2009; Doyle, 2011; Weimer, 2002). The faculty of the College of Arts and Sciences adapted Blumberg’s (2009) components to define 21 literature-based learning-centered practices listed in their tactical plan, which became the primary focus of this assessment study. In contrast, the College of Health Professions did not elaborate on specific tactics for achieving its goals and stated only a general goal of teaching with learning-centered practices. In this study, the specific practices identified by the College of Arts and Sciences were also used to determine whether the College of Health Professions met its general strategic goal.

Criteria for Success

The educational development literature offers a way to define success on both colleges’ goals precisely. Blumberg (2009) developed a series of four-level rubrics that explain how faculty can implement learning-centered teaching incrementally. The four levels are instructor-centered (1), lower level of transition (2), higher level of transition (3), and learning-centered (4). The rubrics measure 32 different components of learning-centered teaching based on Weimer’s (2002) practices or dimensions. According to Weimer (2002, 2013), learning-centered teaching has five essential dimensions: the function of content, the role of the instructor, the responsibility for learning, the purposes and processes of assessment, and the balance of power. In addition to showing different ways to teach, these rubrics can assess the extent to which these specific learning-centered practices are implemented (Blumberg, 2009), and Blumberg and Pontiggia (2011) further described how to use the rubrics to measure learning-centered teaching. A score of either higher level of transition (3) or learning-centered (4) was defined as achieving the goal of using learning-centered teaching. As agreed by the deans of the two colleges, the overall criterion for success was that more than 50% of the study faculty in each college receive scores of 3 or 4 on a majority of the components.
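To make this two-step rule concrete, the Python sketch below applies it to invented ratings. It is an illustration under stated assumptions, not the procedure the director used: each faculty member’s ratings are assumed to be a list of integers from 1 to 4 (one per rated component), “a majority of the components” is read as strictly more than half, and the function names are hypothetical.

```python
from typing import Sequence

# Levels of Blumberg's (2009) four-level rubrics:
# 1 = instructor-centered, 2 = lower level of transition,
# 3 = higher level of transition, 4 = learning-centered.
LEARNING_CENTERED_MIN = 3  # scores of 3 or 4 count as learning-centered


def is_learning_centered(ratings: Sequence[int]) -> bool:
    """Return True if strictly more than half of a person's ratings are 3 or 4."""
    if not ratings:
        raise ValueError("need at least one rated component")
    hits = sum(1 for r in ratings if r >= LEARNING_CENTERED_MIN)
    return hits > len(ratings) / 2


def college_meets_criterion(faculty: Sequence[Sequence[int]]) -> bool:
    """Return True if more than 50% of the sampled faculty are learning-centered."""
    if not faculty:
        raise ValueError("need at least one faculty member")
    n_lc = sum(1 for ratings in faculty if is_learning_centered(ratings))
    return n_lc / len(faculty) > 0.5


# Hypothetical ratings on four components (not data from the study).
sample = [
    [3, 4, 2, 3],  # 3 of 4 components scored 3 or 4: learning-centered
    [1, 2, 3, 2],  # 1 of 4: instructor-centered
    [4, 3, 3, 1],  # 3 of 4: learning-centered
]
print(college_meets_criterion(sample))  # True: 2 of 3 faculty (about 67%)
```

Because the Results section describes faculty who used “at least half” of the practices in a learning-centered way, a reader reproducing those figures might replace the strict `>` in `is_learning_centered` with `>=`; the two readings differ only when a person scores 3 or 4 on exactly half of the components.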

Develop and Implement Educational Intervention Efforts to Promote Learning-Centered Teaching

The CTL of this university has championed learning-centered teaching throughout the campus for over 12 years (Blumberg, 2004). Among its initiatives, the CTL has (a) offered workshops given by internationally known experts on learning-centered teaching, (b) paid the registration for faculty to attend teaching and learning conferences, (c) sponsored awards for innovative teaching that used learning-centered practices, and (d) helped some faculty engage in the scholarship of teaching and learning (SoTL) about their learning-centered teaching. To meet the needs of different types of faculty, the center director has planned events both for faculty who consider teaching to be information dissemination and for faculty who teach using high-impact practices or in experiential settings. In her one-on-one consultations, the director has also consistently promoted the use of various learning-centered techniques.

Before the current strategic plans were developed, the CTL hosted a consensus conference, attended by over a third of the faculty from the College of Arts and Sciences and the College of Health Professions, to establish how faculty at this university could define and implement learning-centered teaching. Participants agreed that the crucial characteristics of such teaching were providing an environment that helps students learn and succeed by actively engaging them in their learning and by helping them develop the skills to take responsibility for their own learning. They saw their role as teachers as more about facilitating and coaching than didactic instruction (Blumberg & Everett, 2005).

Every year since 2003, all new faculty members have participated in a one-and-a-half-day workshop on how to teach using the consensually defined learning-centered approaches. The CTL has also offered short, practical workshops throughout each year that focus on different aspects of learning-centered teaching, and all of these workshops give faculty time to work on proposed changes to their teaching. Thus, all faculty within these two colleges have had opportunities to be trained in learning-centered approaches. Over the past five years, more than 70% of the faculty in the College of Arts and Sciences and more than 85% of the faculty in the College of Health Professions attended at least one such workshop each year.

Collect and Analyze Data to Determine If the Goal Was Met

To assess whether the colleges achieved their strategic plans, the director of the CTL gauged the extent to which the faculty in both colleges implemented learning-centered teaching. The study was approved by the university’s Institutional Review Board, and all full-time faculty members in both colleges were encouraged to participate. Faculty in the College of Arts and Sciences and the College of Health Professions were interviewed to determine the extent to which they had implemented learning-centered approaches.

To ensure a representative sample, the director of the CTL aimed to collect data from at least 50% of the full-time faculty in each college. She contacted all of them and asked each to schedule an hour-long interview in his or her office at a convenient time. Faculty members who rarely attend the Center’s events were asked more persistently so that the sample would not consist mainly of the most frequent consumers of faculty development. Interviews were scheduled through the end of the academic year until the sample goal was met, after which no further interviews were scheduled. Only one faculty member declined to participate. The director interviewed 58 of the 99 (59%) full-time faculty members in the Colleges of Arts and Sciences and Health Professions.

The director created and used a semistructured questionnaire that asked about the implementation of the practices outlined in the College of Arts and Sciences’ tactical plan. These practices reflected Blumberg’s (2009) components. The questions provided the structure for individual interviews in which faculty discussed the courses they taught during that academic year. During the interviews, components were explained and examples given when necessary. All of the interviews were transcribed. The Appendix lists the general information questions and selected questions from the questionnaire.

Because faculty do not always report their behaviors accurately, the director also asked the professors to support their descriptions of how they teach by sharing course artifacts, such as syllabi or assignment instructions. At the same time, research by Ravitz, Becker, and Wong (2000), funded by the National Science Foundation and the U.S. Department of Education, Office of Educational Research and Improvement, supports the validity of teachers’ survey-based self-reports of their roles. They found that teachers’ beliefs about their teaching practices (i.e., their personal pedagogies) do a reasonably good job of predicting practice: teachers who accept constructivist teaching practices, of which learning-centered teaching is an example, are likely to use them (Ravitz et al., 2000). This research also found no evidence of reporting bias due to the perceived social desirability of answers.

Given Ravitz et al.’s (2000) review of the literature and their own findings, together with the use of both interviews and course artifacts, the director is confident that the data in this study are sufficiently valid to measure the extent to which the colleges met their strategic goals. This confidence is further supported by the fact that the interviewer was not evaluating these faculty members, and participants knew that individual responses would never be disclosed. Although observing participants in the classroom would have triangulated data sources and perhaps increased the validity of the data, the director chose not to do so: observations of teaching would have looked more like evaluations of individual teachers, which was outside the purpose of this study.

After the interviews, the director rated all of the answers on the selected components of Blumberg’s (2009) rubrics, using the reliable and valid methods described by Blumberg and Pontiggia (2011). The complete set of rubrics can be found in Appendix B of Blumberg (2009). This rating produced a set of scored rubrics for every faculty member interviewed. The rubrics were treated like a series of Likert scales, creating four-point indices that summarized the data. The indices range from 1 to 4, with a higher value indicating a more learning-centered response (Blumberg & Pontiggia, 2011). Each faculty member was represented by a separate row on a spreadsheet indicating that person’s rating on each component. For each individual and for each component, the mean, standard deviation, median, and mode were calculated.
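The per-person (row) and per-component (column) summaries described above can be sketched with Python’s standard `statistics` module. This is a hypothetical illustration, not the author’s spreadsheet: the faculty labels and ratings are invented, and the sample standard deviation (`statistics.stdev`) is an assumption, since the text does not say whether the sample or population formula was used (`statistics.pstdev` gives the latter).

```python
import statistics
from typing import Dict, List


def summarize(values: List[int]) -> Dict[str, float]:
    """Mean, sample standard deviation, median, and mode of 1-4 rubric ratings."""
    return {
        "mean": statistics.mean(values),
        # stdev needs at least two values; report 0.0 for a single rating
        "sd": statistics.stdev(values) if len(values) > 1 else 0.0,
        "median": statistics.median(values),
        # with ties, Python 3.8+ returns the first mode encountered
        "mode": statistics.mode(values),
    }


# Hypothetical spreadsheet: one row per faculty member, one column per component.
ratings = {
    "faculty_A": [3, 4, 2, 3],
    "faculty_B": [1, 2, 3, 2],
    "faculty_C": [4, 3, 3, 1],
}

# Per-individual summaries: across each row.
by_person = {name: summarize(row) for name, row in ratings.items()}

# Per-component summaries: down each column (zip transposes the rows).
columns = list(zip(*ratings.values()))
by_component = {
    f"component_{i + 1}": summarize(list(col)) for i, col in enumerate(columns)
}

for name, stats in by_person.items():
    print(name, stats)
```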

Ratings on the four-point scale were grouped into two overall levels for ease of interpretation: instructor-centered or learning-centered. Ratings of instructor-centered or lower level of transition (1 or 2 on the rubric) were summarized as instructor-centered, and ratings of higher level of transition or learning-centered (3 or 4 on the rubric) were summarized as learning-centered. Next, the percent of faculty members rated instructor-centered or learning-centered was tabulated separately for each component. This grouping is consistent with the criterion for success identified for this study. It also follows Suskie’s (2009) recommendation that assessment studies group levels and report percentages rather than rely on more complex statistical analyses. Such simple summaries are easy to understand and interpret, which makes them meaningful to diverse stakeholders and aligns them with the additional formative goals of this study.
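The grouping and tabulation steps can likewise be sketched in Python. As before, the ratings and names are hypothetical: the sketch collapses scores of 1 or 2 to instructor-centered and 3 or 4 to learning-centered, reports the percent of faculty rated learning-centered on each component (the instructor-centered percent is 100 minus that value), and then computes the share of components used in a learning-centered way by at least half of the faculty, the kind of figure reported in the Results.

```python
from typing import Dict, List


def group(score: int) -> str:
    """Collapse the four rubric levels into the two reporting categories."""
    if score not in (1, 2, 3, 4):
        raise ValueError(f"rubric scores run from 1 to 4, got {score}")
    return "learning-centered" if score >= 3 else "instructor-centered"


def percent_learning_centered(ratings: Dict[str, List[int]]) -> List[float]:
    """Percent of faculty scored 3 or 4 on each component (one value per column)."""
    columns = list(zip(*ratings.values()))
    return [
        100 * sum(group(s) == "learning-centered" for s in col) / len(col)
        for col in columns
    ]


# Hypothetical ratings (not study data): one row per faculty member.
ratings = {
    "faculty_A": [3, 4, 2, 3],
    "faculty_B": [1, 2, 3, 2],
    "faculty_C": [4, 3, 3, 1],
    "faculty_D": [3, 3, 2, 2],
}

pct = percent_learning_centered(ratings)
# Share of components used in a learning-centered way by at least half the faculty.
share = 100 * sum(p >= 50 for p in pct) / len(pct)
print(pct, share)  # [75.0, 75.0, 50.0, 25.0] 75.0
```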

The data were interpreted in two ways: overall learning-centered scores and how frequently specific components were used. The overall learning-centered scores indicate the status of implementation of learning-centered approaches in these two colleges, while scores on specific components show which practices are used and which are not. Both interpretations can be meaningful for faculty development purposes.

Results of the Study

In the College of Arts and Sciences, 74% of the interviewed faculty, and in the College of Health Professions, 92% of the interviewed faculty, employed at least half of the practices in a learning-centered manner. Both colleges therefore met the criterion for successfully employing learning-centered teaching.

The highest overall score was 3.56, and the lowest was 1.75. The average score for all participants was 2.51 (a transition score between instructor-centered and learning-centered), and the median and modal scores were 3 (the higher level of transition). All of the faculty members using the most learning-centered approaches were either assistant professors or recently promoted associate professors, whereas four of the five faculty members with the lowest scores (those using instructor-centered approaches) were full professors. Overall use of learning-centered approaches varied widely (standard deviation = 0.97).

Learning-Centered Teaching Components

Overall, 62% of the components were used in a learning-centered way by at least half of the faculty. Table 1 shows that three components were used in learning-centered ways by about 90% of the faculty, and Table 2 shows three components used in learning-centered ways by fewer than 30% of the faculty. Table 3 shows the two components that participants frequently did not understand, as indicated by how often the interviewer had to explain them. Components not listed in these tables were used in learning-centered ways by about half of the faculty.

Table 1. Components Used in Learning-Centered Ways by About 90% of Faculty

| Dimension | Component | % of Faculty Using Instructor-Centered Approach (Scored 1 or 2) | % of Faculty Using Learning-Centered Approach (Scored 3 or 4) |
|---|---|---|---|
| The function of content | Promote students’ engagement with the content. This engagement occurs through reflection and personal meaning-making to facilitate mastery. | 7 | 93 |
| The role of the instructor | Create an environment for learning and student success to occur | 11 | 89 |
| The purposes and processes of assessment | Provide formative feedback that can help students improve | 11 | 89 |

Table 2. Components Used in Learning-Centered Ways by Fewer Than 30% of Faculty

| Dimension | Component | % of Faculty Using Instructor-Centered Approach (Scored 1 or 2) | % of Faculty Using Learning-Centered Approach (Scored 3 or 4) |
|---|---|---|---|
| The responsibility for learning | Help students engage in self-assessment of their learning and of their strengths and weaknesses | 80 | 20 |
| The purposes and processes of assessment | Use peer and self-assessment | 76 | 24 |
| The role of the instructor | Describe in the course syllabus the learning-centered teaching practices the instructor uses | 72 | 28 |

Table 3. Components Participants Frequently Did Not Understand

| Dimension | Component and Further Explanation as Given in the Interview (in Italics) | % of Faculty Using Instructor-Centered Approach (Scored 1 or 2) | % of Faculty Using Learning-Centered Approach (Scored 3 or 4) |
|---|---|---|---|
| The function of content | Use organizing schemes to tie together most of the content. *Experts use organizing schemes; novices do not use them. An organizing scheme goes well beyond a course outline or list of topics in terms of how a course is taught. Faculty will use organizing schemes throughout the course. Homeostasis is an organizing theme in physiology, and modernity is an organizing theme in history.* | 48 (Biology faculty: 37) | 52 (Biology faculty: 63) |
| The role of the instructor | Align the course components (objectives, teaching or learning methods, and assessment methods) for consistency of content and of students’ cognitive demand *(verbs on objectives, learning or practice activities, or assessments, e.g., evaluate, analyze)* | 54 | 46 |

Discussion of Learning-Centered Practices and the Data With Stakeholders

Faculty indicated that the data collection method itself was a value-added component of the study, as it was an effective educational development vehicle, especially for faculty who do not regularly attend events hosted by the CTL. Research-oriented faculty may be more inclined to participate in a study that involves an interview in their office than to attend an event that focuses on teaching techniques. While collecting data about teaching practices in the structured one-on-one interviews, the educational developer also disseminated information about learning-centered teaching (the Appendix shows how this was done). Upon hearing the explanations for various questions, especially the two practices that were frequently not understood, faculty members remarked that these concepts now made sense, and some said that they would consider using these practices in the future. The interviews thus created an immediate opportunity to motivate faculty to apply what they had learned to how they structure their teaching and how they explain their rationale to students.

Discussions of the results can stimulate changes to educational practices and pedagogy (Maki, 2010). However, as the preceding paragraph illustrates, this development actually began during the interviews, when the interviewer offered explanations of learning-centered practices. After the study was completed, the director discussed the results with the deans, department chairs, and faculty of these colleges. The tone of all of these discussions was information sharing, with a focus on improvement and never on judgment. Individual responses and ratings were never disclosed. These discussions yielded additional insights that promoted awareness of learning-centered practices and raised further questions about their implementation.

During these dialogues about the results of the study, the faculty considered students’ awareness of learning-centered practices and its possible effects. Faculty who use many learning-centered practices perceived that students may not be aware of, and may even be confused by, these practices. When students do not understand why a course differs from their expectations of more instructor-centered courses, they tend to perceive it as harder, resent the extra work, and may evaluate it negatively. These perceptions come from students’ questions, complaints, and statements on end-of-course evaluations. This led to a discussion about the need to be very explicit in explaining teaching methods. Referring to the learning-centered approaches in the syllabus is a good first step that more faculty could take, but merely listing them is not effective. The most learning-centered faculty suggested that good practice is to identify learning-centered practices in the syllabus and also to explain how and why they are used in the course’s learning management system. These explanations should also be repeated as students engage with the practices during the course.

These dialogues with faculty also revealed that informal discussions of teaching, even ones lasting only 10 to 15 minutes during department meetings, were an effective faculty development tool. In these sessions, faculty members informally discussed how they teach. For example, before this study was conducted, the faculty in the Department of Biological Sciences discussed how they implemented specific aspects of learning-centered teaching. Several professors who teach the introductory biology courses are proponents of using organizing schemes to help students understand the larger picture of their discipline. During these department meetings, they discussed how they described organizing schemes in their syllabi, used them to introduce new units, and even asked students to relate concepts to organizing schemes on examinations and papers. As a result of their persuasive arguments, a larger share of faculty members in this department use organizing schemes than in any other department (see Table 3). The results of this study validated these departmental development efforts, and the department plans to continue similar discussions of learning-centered teaching practices in the future.

Using the Results of This Study to Plan Further Efforts

As a result of this study and the college-wide discussions, the deans and executive committees of these colleges concluded that they are making good progress toward their strategic goal of using learning-centered teaching. The majority of the faculty in both colleges were using learning-centered techniques. However, a minority of the faculty were still not employing learning-centered approaches. The deans and executive committees are planning how to institutionalize learning-centered teaching practices in all of their courses and how to encourage the instructor-centered minority to become more learning-centered. The executive committee of the College of Arts and Sciences is discussing how learning-centered teaching can be incorporated into the next strategic plan.

Educational developers can help faculty members overcome the perception that adopting learning-centered teaching requires a total revision of their teaching. Instead, incremental changes that are easy to implement are good first steps toward becoming more learning-centered (Blumberg, 2009). Further analysis of the data in this study revealed why faculty members chose specific learning-centered practices. Among the reasons was that the practice fit their discipline or personal teaching philosophy; this was especially true of faculty members who used few learning-centered practices. Educational developers can therefore tailor their suggestions, recommending first the learning-centered practices that align naturally with the discipline a faculty member teaches. For example, health professions students often practice clinical skills on each other, so faculty members who teach these students may be encouraged to ask students to routinely provide feedback to each other (Blumberg, 2016).

In addition, the director of the CTL uses the results to plan center activities. She continues to offer the workshop on learning-centered teaching for new faculty, and she has developed a series of targeted workshops for more senior faculty that focus especially on the least understood and least used components. Here are two examples of how the data from the assessment study led to specific educational development workshops:

From the data of this study, the director discovered that faculty from the College of Arts and Sciences were not using student peer and self assessment, whereas the faculty from the College of Health Professions were using this practice. She organized a training session for the faculty in the College of Arts and Sciences on how to use student peer and self assessments to foster student professional development. Faculty from the College of Health Professions led the training session, showing how they use this practice, and gave out examples of the rubrics the students use to assess themselves and their peers. The formal training session led to a discussion about the purpose and value of peer and self assessments for all students.

The director also recognized, through the process of conducting the study, the value of having learning centered practices explained and exemplified by a colleague. To facilitate more of this kind of modeling, she organized a panel discussion and invited five of the most learning centered faculty to talk about their teaching. Before the panel discussion, she asked the participants to prepare remarks describing why and how they use learning centered teaching. In addition, the director told each panelist that she would ask them to describe how they implement a specific component. Each panelist spoke about a different infrequently used or poorly understood component. The panel discussion was part of the CTL's annual spring workshops, which are open to the entire university. A total of 22 faculty members attended this event; a third of them were newer faculty. The audience included both faculty who were implementing learning centered practices and faculty who were not. Every attendee noted the value of the session because they learned a new technique or how to use a learning centered component they had not used before. The session led to interesting discussions about the relevance of specific components, and we know from the literature on faculty coaching that settings offering "contextualized opportunities to reflect on teaching and learning" are a crucial component of professional development (Huston & Weaver, 2008).

Keeping the Study Separate From Individual Faculty Evaluation

Banta and Palomba (2015) identified a common misconception among faculty: although assessment and faculty evaluation are different processes, faculty fear that assessment results can be used to evaluate them. To alleviate this concern, the director followed assessment guidelines developed by experts such as Suskie (2015) and Banta and Palomba (2015). The information gained from this assessment determined whether two colleges were meeting their strategic goals; it will never be used to evaluate individual faculty. Care was taken to ensure that the identities of individual faculty were never disclosed in any discussion of the results or in the internal, written summary of the study. Results were always reported as aggregate data by college or department, and the names of the participants were not disclosed. Findings from this assessment study cannot be used to judge individuals or to make critical decisions about faculty members. Thus, there was no breach of trust between the faculty and the director as an educational developer.

Moving forward from this study, the colleges may use these aggregate data as a benchmark against which to measure individual faculty members' use of learning centered teaching. In fact, the dean of the College of Health Professions wants to include the use of learning centered teaching among the criteria for good teaching on annual faculty evaluations, so that such teaching becomes the accepted standard for all faculty members in the future. This evaluation would most likely rely on self-report, as is done now, such as listing the learning centered practices used and explaining how they are used through examples of syllabi or assessment instruments. While such use of the results is within the purview of the dean, the director would never agree to be the agent of, or provide data for, such faculty evaluations.

Conclusion

This study served two mutually complementary purposes: to determine whether two colleges met their strategic goals of implementing learning centered teaching and to determine the impact of the work of the CTL. This article illustrates why educational developers should become involved in ongoing college-wide or campus-wide assessment projects. The data collection method used here can be a teachable moment for faculty who do not attend educational development programs. Data from assessment studies can lead to rich conversations with faculty and administrators about teaching and learning. In this case, the study promoted discussions about teaching methods and the varied ways that faculty can implement the same learning centered teaching components. Such data provide strong evidence that faculty and administrators can use to plan further changes that improve program quality.

Going through all of the steps of the assessment cycle can take multiple years, as this study shows. The strategic plans had been in place for at least four years before the faculty and administration decided to assess whether the goal had been met, and the CTL had been promoting learning centered teaching and educating faculty about it for over a decade. It is unrealistic to expect a quick turnaround in teaching practices. If educational developers tried to complete the assessment cycle in a year or two, they might be able to examine only indicators, such as the number of people participating in events, rather than substantive outcomes, such as changes in teaching.

This study shows how the assessment cycle was applied to determine whether an intervention was successful. The data indicate that long-term, consistent educational development efforts influence faculty members' teaching behaviors. The results of this study also show that at least half of the faculty are using a wide variety of learning centered practices, largely as promoted by the CTL. In addition to assessing the impact of past efforts, the findings served as a needs assessment to guide planning for future educational development initiatives and continued efforts within the colleges. The faculty and administrators used the data to complete the assessment cycle by planning further actions, and the director of the CTL used the data to plan targeted events on specific components of learning centered teaching. Given the encouraging results of this study, she will continue her long-standing efforts to promote learning centered practices with renewed dedication, and she hopes that these results will inspire others to do the same.

The author acknowledges helpful comments made by Claudia Stanny of the University of West Florida on an earlier version of this paper. Phyllis Blumberg is Assistant Provost for Faculty and Leadership Development, Director of the Teaching and Learning Center, and professor of social sciences and education at the University of the Sciences. She is the author of more than sixty articles on learner centered teaching, faculty development, and assessment.

References

- Banta, T. W., & Palomba, C. A. (2015). Assessment essentials (2nd ed.). San Francisco, CA: Jossey Bass.

- Blumberg, P. (2004). Beginning journey toward a culture of learning centered teaching. Journal of Student Centered Learning, 2(1), 68–80.

- Blumberg, P. (2009). Developing learner centered teaching: A practical guide for faculty. San Francisco, CA: Jossey Bass.

- Blumberg, P. (2016). Factors that influence faculty adoption of learning centered approaches. Innovative Higher Education, 41(2). doi:10.1007/s10755-015-9346-3.

- Blumberg, P., & Everett, J. (2005). Achieving a campus consensus on learning centered teaching: Process and outcomes. To Improve the Academy, 23, 191–210.

- Blumberg, P., & Pontiggia, L. (2011). Benchmarking the learner centered status of courses. Innovative Higher Education, 36(3), 189–202.

- Doyle, T. (2011). Learner centered teaching: Putting the research on learning into practice. Sterling, VA: Stylus.

- Hines, S. (2009). Investigating faculty development program assessment practices: What’s being done and how can it be improved? Journal of Faculty Development, 23(3), 5–19.

- Huston, T., & Weaver, C. L. (2008). Peer coaching: Professional development for experienced faculty. Innovative Higher Education, 33(1), 5–20.

- Kebaste, M., & Sims, R. (2016). Using instructional consultation to support faculty in learner centered teaching. Journal of Faculty Development, 30(3), 31–40.

- Maki, P. (2010). Assessing for learning (2nd ed.). Sterling, VA: Stylus.

- Palomba, C. (2001). Implementing effective assessment. In C. A. Palomba & T. W. Banta (Eds.), Assessing student competence in accredited disciplines (pp. 13–28). Sterling, VA: Stylus.

- Ravitz, J., Becker, H., & Wong, Y. (2000). Constructivist compatible beliefs and practices among US teachers. Retrieved July 1, 2015, from http://www.crito.uci.edu/TLC/findings/report4/body_startpage.html

- Schroeder, C. (Ed.) (2011). Coming in from the margins: Faculty development’s emerging organizational development role in institutional change. Sterling, VA: Stylus.

- Sorcinelli, M. D., Austin, A. E., Eddy, P. L., & Beach, A. L. (2006). Creating the future of faculty development. Bolton, MA: Anker.

- Sorcinelli, M. D., Gray, T., & Birch, A. (2011). Faculty development beyond instructional development. To Improve the Academy, 30, 247–261.

- Stark, J. S., & Thomas, A. M. (1994). Introduction. In J. S. Stark & A. M. Thomas (Eds.), Assessment and program evaluation (pp. xvii–xxii). Needham Heights, MA: Simon and Schuster.

- Suskie, L. (2009). Assessing student learning (2nd ed.). San Francisco, CA: Jossey Bass.

- Suskie, L. (2015). Five dimensions of quality: A common sense guide to accreditation and accountability. San Francisco, CA: Jossey Bass.

- University of the Sciences, Teaching and Learning Center (2005). Self study of the teaching and learning center of the University of the Sciences (internal document). Philadelphia, PA: University of the Sciences.

- Weimer, M. (2002). Learner centered teaching. San Francisco, CA: Jossey Bass.

- Weimer, M. (2013). Learner centered teaching: Five key changes to practice (2nd ed.). San Francisco, CA: Jossey Bass.

Appendix 1 Selected Interview Questions


In addition to general information questions, this appendix lists the questions asked about the three learning centered teaching components that faculty used infrequently, as well as the questions asked and explanations given about the two components that faculty did not understand.

A. General Questions

1. What courses are you teaching or taught this academic year? Can you please give me a copy of your syllabus for each course?

2. What happens on a typical day in your classes?

Each of the following questions was preceded by a general yes-or-no question asking whether the faculty member used the practice. If the answer was yes, follow-up questions were asked. If faculty asked for more explanation, the item was explained to them.

B. Questions That Were Asked Relating to the Learning Centered Teaching Components That Were Used Infrequently

1. Students engage in self assessment of their learning and of their strengths and weaknesses.

How do your students acquire self assessment skills? Self assessment might include assessment of their learning processes and of the strengths and weaknesses of their abilities to learn.

How much time is devoted to the acquisition and practicing of these self assessment skills?

2. Peer and self assessment.

Does anyone besides you provide formative feedback? Do the students provide feedback to each other?

3. Learning centered teaching practices described on the course syllabus.

Do you mention the use of learning centered teaching practices on your course syllabi or in your orientation to the course? If so, please indicate what you write on your syllabi or explain what you say.

C. Questions That Were Asked Relating to the Learning Centered Teaching Components That Were Not Understood by the Faculty

1. Organizing schemes to tie together most of the content.

A discipline specific conceptual framework or organizing theme helps experts integrate much of the material, and experts use these themes to learn new material. Novices have not organized the content well enough to use such themes unless the themes are explicitly taught. An organizing theme goes well beyond a course outline or a list of topics in shaping how a course is taught. Teaching/learning activities and assessments should explicitly use these frameworks.

The structure–function relationship is an organizing theme of biology; homeostasis is an organizing theme in physiology; the drive to modernity is such a theme in history.

How do you explicitly use discipline specific frameworks or organizing themes that pull together much of the course content?

Please provide a brief explanation of what you do for each course, and indicate where additional material or explanation can be found.

How much time is devoted to giving students exposure to these discipline specific frameworks or organizing themes?

2. Course alignment.

Objectives, teaching/learning activities, and assessment methods should be at a consistent cognitive process level or verb on a learning taxonomy hierarchy (e.g., Bloom's taxonomy, which goes from remember through apply to create). This concept is called alignment. For example, if the objectives call for analysis, the students should learn how to analyze and get opportunities to practice such analysis, and the assessments should evaluate whether the students can perform these analyses.

Describe how the students' cognitive process requirements or verbs are consistent across the teaching/learning activities, assessment methods, and course objectives.

How do you align your courses? Provide material from syllabi.

How do the students realize that their course is aligned?