4 The Knowledge Survey: A Tool for All Reasons


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Please contact [email protected] to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

Knowledge surveys provide a means to assess changes in specific content learning and intellectual development. More important, they promote student learning by improving course organization and planning. For instructors, the tool establishes a high degree of instructional alignment, and, if properly used, can ensure employment of all seven best practices during the enactment of the course. Beyond increasing success of individual courses, knowledge surveys inform curriculum development to better achieve, improve, and document program success.

INTRODUCTION

An increasing number of students in diverse disciplines at our institutions now take knowledge surveys at the beginning and end of each course. A survey consists of course learning objectives framed as questions that test mastery of particular objectives. Table 4.1 displays an excerpt from a knowledge survey for an introductory course in environmental geology: six survey items that represent a unit lesson on asbestos, together with the header that provides directions for responding to the survey. These six items, taken from a 200-item knowledge survey, range from simple knowledge to evaluation of substantial open-ended questions. Students do not actually answer the questions; instead, they rate, on a three-point scale, their confidence that they could answer each query competently (see “Instructions” in Table 4.1). Knowledge surveys differ from pre-test/post-test evaluations because tests, by their nature, can address only a limited sampling of a course, whereas knowledge surveys cover an entire course in depth. No student could allocate the time to answer every question on a thorough knowledge survey in a single exam sitting, but students can rate their confidence to answer an extensive survey of items in a very short time. The items in the survey follow the sequence in which the instructor presents the course material.

A well-designed survey will contain clear, unifying concepts that are fleshed out with the more detailed content needed to develop conceptual learning. The content items in Table 4.1 also develop central unifying concepts about the nature of science and scientific methods (see Table 4.3). Knowledge surveys can present the most complex open-ended problems or issues, and they can assess skills as well as content knowledge.

| Item # | Bloom Level | Content topic: asbestos hazards |
|---|---|---|
| 20 | 1 | What is asbestos? |
| 22 | 2 | Explain how the characteristics of amphibole asbestos make it more conducive to producing lung damage than other fibrous minerals. |
| 23 | 3 | Given the formula Mg₃Si₂O₅(OH)₄, calculate the weight percent of magnesium in chrysotile. |
| 24 | 4 | Two controversies surround the asbestos hazard: (1) it’s nothing more than a very expensive bureaucratic creation, or (2) it is a hazard that accounts for tens of thousands of deaths annually. What is the basis for each argument? |
| 25 | 5 | Develop a plan for the kind of study needed to prove that asbestos poses a danger to the general populace. |
| 26 | 6 | Which of the two controversies expressed in item 24 above has the better current scientific support? |

Table 4.1 Excerpt from a Knowledge Survey

INSTRUCTIONS: This is a knowledge survey, not a test. The purposes of this survey are to (1) provide a study guide that discloses the organization, content, and levels of thinking expected in this course and (2) help you to monitor your own growth as you proceed through the term. Use the accompanying Form Number 16504 answer sheet. Be sure to fill in your name and student ID number on the front left of the form. This requires both writing your name in the spaces in the top row and filling in the bubbles corresponding to the appropriate letters. You may use pen or pencil to mark your responses, so long as the pen or pencil is not one with red ink or lead.

In this knowledge survey, don’t actually try to answer any of the questions provided. Instead rate (on a three-point scale) your confidence to answer the questions with your present knowledge. Read each question and then fill in an A, B or C in the A through E response row that corresponds to the question number in accord with the following instructions:

Mark an “A” as your response if you feel confident that you can now answer the question sufficiently for graded test purposes.

Mark a “B” as your response if you can now answer at least 50% of the question or if you know precisely where you could quickly get the information needed and could return here in 20 minutes or less to provide a complete answer for graded test purposes.

Mark a “C” as your response if you are not confident that you could adequately answer the question for graded test purposes at this time.

Do your best to provide a totally honest assessment of your present knowledge. When you mark an “A” or a “B,” you are stating that you have significant background to address an item, and you should consider it fair if your professor asks you to demonstrate that capability by actually answering the question. This survey will be given again during the last week of the semester. Keep this survey and refer to the items to monitor your increasing mastery of the material through the semester.

Note: Students respond in accord with instructions for either a bubble sheet (as above) or a web-based format. The instructions for completion are generic for most courses; the actual items shown here are from a lower division course in environmental geology.

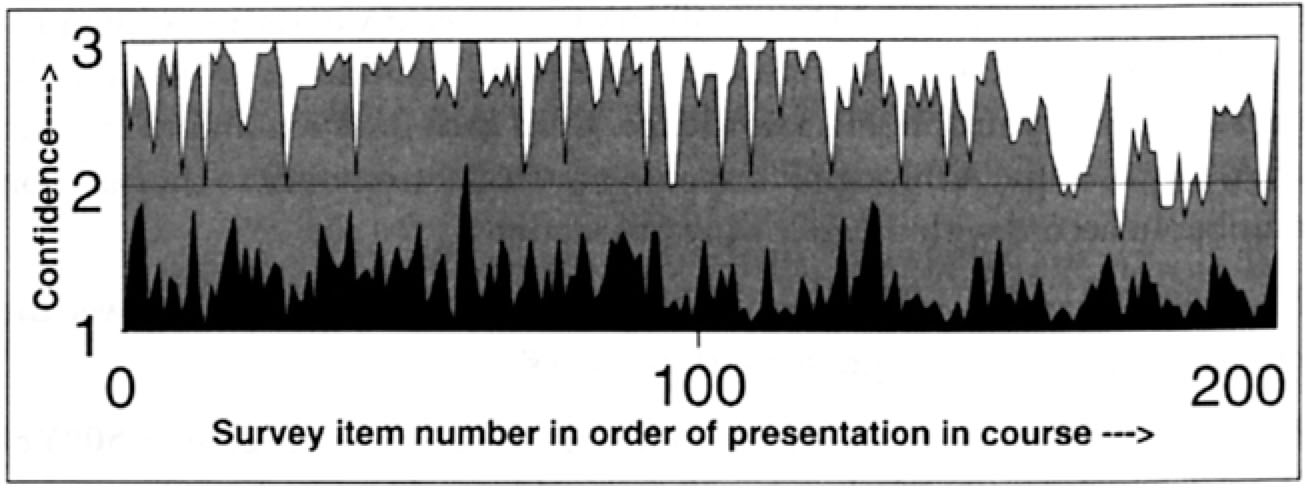

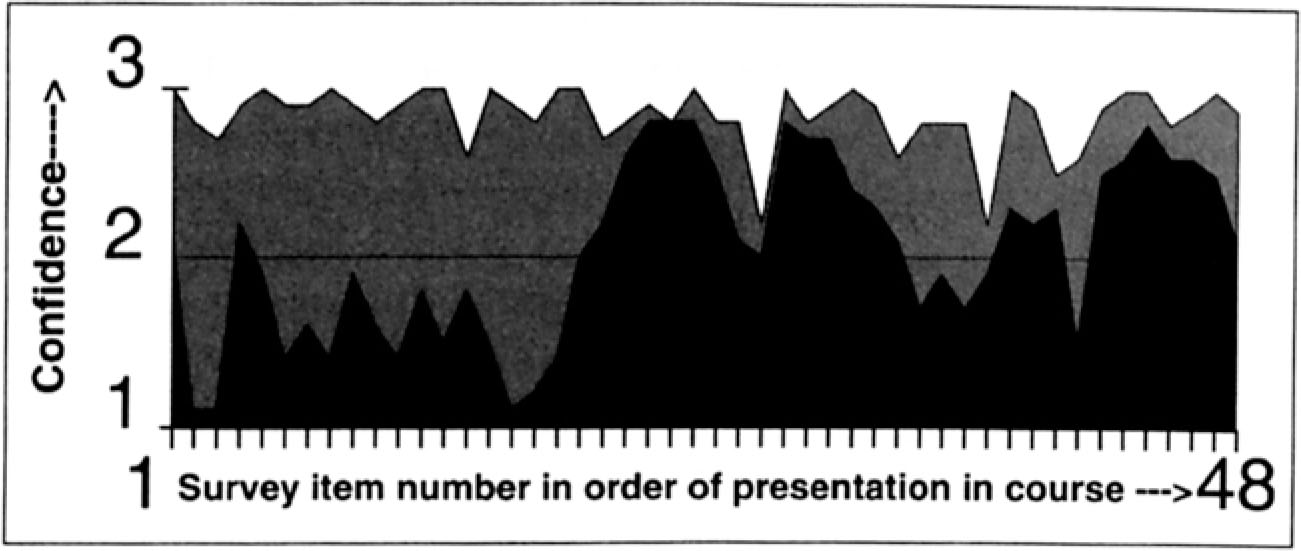

Figure 4.1 shows the pre- and post-course results from the 200 items in the same semester-long course. The values plotted are the class averages of responses to each item, and only the students who completed both the pre- and post-course surveys are included. When needed, the instructor can call up the data for each individual student. The figure provides insights that global summative student evaluations would never reveal, such as the need for better pacing (see caption) to improve learning the next time the instructor teaches the course.
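The per-item averaging behind a plot like Figure 4.1 can be sketched in a few lines. This is a minimal sketch, not the authors’ software: it assumes responses are stored as lists of the letters A, B, and C (one per item, in course order), maps them onto the 1 (low) to 3 (high) confidence scale of Figure 4.1, and keeps only students present in both surveys. The function names and the `min_gain` threshold for flagging possible learning gaps are hypothetical.

```python
from statistics import mean

# Assumed mapping of survey letters onto the 1 (low) to 3 (high) confidence
# scale used on the ordinate of Figure 4.1.
SCORE = {"A": 3, "B": 2, "C": 1}


def item_averages(responses, students):
    """Class-average confidence for each item, over the listed students only.

    responses: dict mapping a student ID to a list of letters, one per item.
    """
    n_items = len(responses[students[0]])
    return [mean(SCORE[responses[s][i]] for s in students) for i in range(n_items)]


def pre_post_averages(pre, post):
    """Per-item pre and post averages for students who completed both surveys."""
    matched = sorted(set(pre) & set(post))
    if not matched:
        raise ValueError("no student completed both surveys")
    return item_averages(pre, matched), item_averages(post, matched)


def low_gain_items(pre_avg, post_avg, min_gain=0.5):
    """1-based item numbers whose class-average gain is below min_gain
    (a hypothetical cutoff for spotting gaps like items 170-200)."""
    return [i + 1 for i, (a, b) in enumerate(zip(pre_avg, post_avg)) if b - a < min_gain]


# Tiny hypothetical example: three items; student s3 skipped the post-survey.
pre = {"s1": ["C", "C", "B"], "s2": ["C", "B", "C"], "s3": ["A", "A", "A"]}
post = {"s1": ["A", "A", "C"], "s2": ["A", "B", "C"]}
pre_avg, post_avg = pre_post_averages(pre, post)
print(pre_avg, post_avg, low_gain_items(pre_avg, post_avg))
```

Here student s3 is dropped because there is no post-course record, and item 3 is flagged because its class average fell rather than rose. Plotting `pre_avg` and `post_avg` against item number gives the two layers shown in Figure 4.1.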

Figure 4.1 Pre- and Post-Course Results of a Knowledge Survey (From Nuhfer, 1996). Note: Ordinate scales are from 1 (low confidence) to 3 (high confidence). The survey elicited confidence ratings to 200 items (abscissa) in the order in which students encountered items in the course. The sample items 20–26 provided in Table 4.1 are from this same course. The lower darker area (on this and all similar figures in this chapter) reveals the class averages on confidence to address each item at the start of class; the upper shaded area displays the ratings to the same items at the end of class. The course, instructor, and learning experience all received “A” ratings from students on summative evaluations, but lower learning did occur in the final two weeks of classes when the final topic on coastal hazards was hurriedly covered. The right side of the graph (approximately items 170–200) reveals this learning gap. Better pacing eliminated this gap when the instructor next taught the course.

The Need for a More Direct Assessment of Learning

Knowledge surveys address learning in specific detail, as opposed to the common global summative item: “Evaluate this course as a learning experience.” Summative student ratings are frequently used to evaluate professors for rank, salary, and tenure. Summative ratings measure students’ general satisfaction, which results from a mix of content learning, cognitive development, and affective factors. The most thorough research on the relationship between content learning and summative ratings produced, by meta-analysis, a correlation coefficient of r = 0.43 (Cohen, 1981). That relationship is strong enough to show that, in general, students learn more from professors whom they rate highly, but it is too weak to allow learning in any single class to be inferred from student ratings of the class, of the individual professors who taught it, or of the overall learning experience. Researchers such as Feldman (1998) have shown that the educational practices that produce the most learning are not exactly the same as those that produce the highest student ratings. Because student ratings alone cannot reliably reveal the learning outcomes produced by individual instructors or courses, good assessment requires a more direct measure of learning. We believe knowledge surveys, properly used, serve this purpose. Knowledge surveys were created to allow instructors to prove that their courses produced specific changes in students’ learning and to disclose the detailed content of a course to students (Nuhfer, 1993, 1996). Tobias and Raphael (1997) grasped the usefulness of the tool and included it as a best practice in their book. Knowledge surveys do achieve their original purposes, but using this tool also encourages further instructional improvements, which this chapter discusses.
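Squaring the correlation makes the “too weak” judgment concrete. Under the usual reading of r² as the proportion of variance two measures share in a linear relationship, a correlation of 0.43 leaves most of the variation in learning unaccounted for by ratings:

```latex
r = 0.43 \quad\Longrightarrow\quad r^{2} = (0.43)^{2} \approx 0.18, \qquad 1 - r^{2} \approx 0.82
```

That is, ratings share roughly 18% of their variance with measured learning, and roughly 82% of the variation in learning is not linearly accounted for by them, which is why one class’s ratings are a poor stand-in for what that class learned.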

DISCUSSION

Knowledge Surveys that Enhance High-Level Thinking

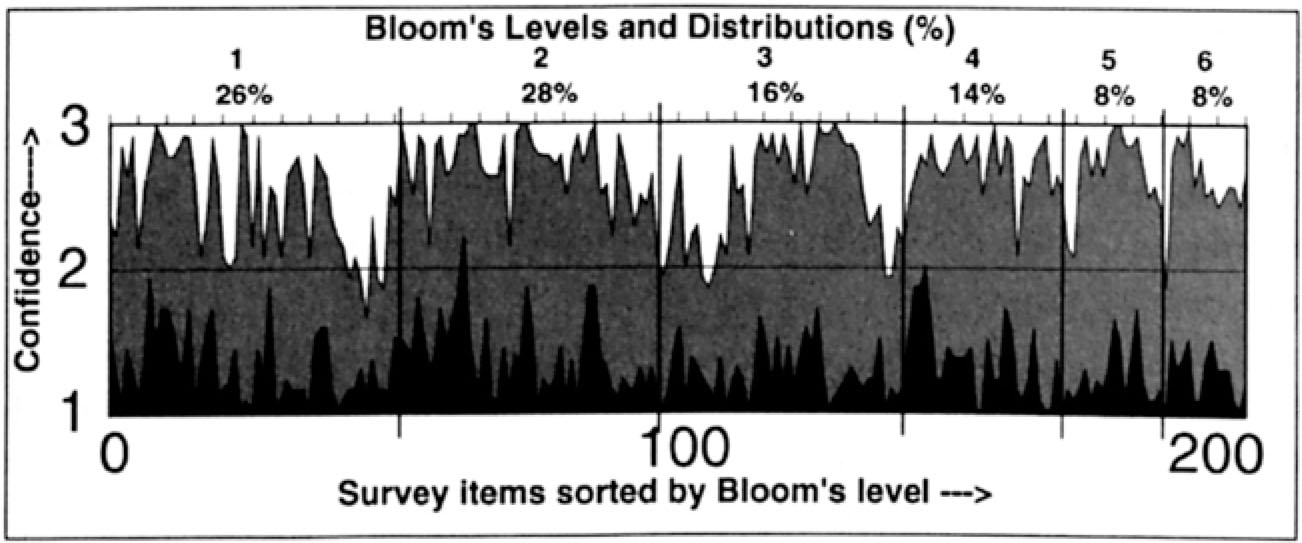

When instructors develop sophistication with knowledge surveys, they can code each item according to levels of reasoning, such as those of Bloom (1956) (see Tables 4.1 and 4.2). This coding allows an instructor to see the course as a profile of the levels of inquiry addressed (Figure 4.2) and to verify that the course plan accords with the original intentions of the instructor and the purposes of the course. As noted by Gardiner (1994), most testing targets low-level content knowledge, even though most instructors aspire to teach some higher-level critical thinking. If an instructor includes critical thinking as her or his course objective, and the detailed knowledge survey reveals overt emphasis on mere memorization, then recognizing this discrepancy is empowering. Such a revelation allows the instructor to redesign the course to provide the desired critical thinking experiences.
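The profile in Figure 4.2 amounts to counting survey items by their Bloom code. The helper below is a hypothetical sketch, not the authors’ tool; it assumes each item has already been coded with an integer level from 1 (recall) to 6 (evaluation), as in Table 4.1. The percentages it reports describe the share of items, not the share of class time.

```python
from collections import Counter

# Level names as in the chapter's Bloom-level table (Bloom, 1956).
LEVELS = {1: "Recall", 2: "Comprehension", 3: "Application",
          4: "Analytical", 5: "Synthesis", 6: "Evaluation"}


def bloom_profile(item_levels):
    """Return {level name: (item count, percent of all items)} for levels 1-6.

    item_levels: one integer Bloom code per survey item, in course order.
    """
    counts = Counter(item_levels)  # missing levels count as 0
    total = len(item_levels)
    return {
        LEVELS[level]: (counts[level], round(100 * counts[level] / total, 1))
        for level in sorted(LEVELS)
    }


# The six asbestos items in Table 4.1 are coded 1 through 6, one per level.
for name, (count, pct) in bloom_profile([1, 2, 3, 4, 5, 6]).items():
    print(f"{name:<14}{count:>3}{pct:>7}%")
```

Running the same function over all 200 item codes of a full survey would yield the counts and percentages that a bar chart like Figure 4.2 displays.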

Interpretations of graphs like Figure 4.2 require care. Although 54% of knowledge survey items for this lower division course addressed the lowest Bloom levels, this does not imply that 54% of course time was spent addressing lower levels. In fact, high-level open-ended questions require disproportionately more time to confront than do simple recall items. Figure 4.2 verifies that students encountered at least 28 analytical, 16 synthesis, and 16 evaluation challenges in this course (they actually encountered more). Yet students can do synthesis and evaluation poorly (see Nuhfer and Pavelich, 2001), thus operating at the lower levels of the Perry (1999) model or the reflective judgment model (King & Kitchener, 1994). If one wants to use a presentation like Figure 4.2 to prove that students truly mastered some critical thinking in a course, then one must disclose the rubrics used to evaluate students’ answers to representative high-level items. (Rubrics are the disclosed criteria that instructors use to evaluate a test question or project.) When instructors present rubrics along with evidence like Figure 4.2, the assertion that students addressed high-level challenges with high levels of sophistication is hard to refute. Table 4.4 near the end of this chapter provides rubrics for a few such questions.

| This Bloom reasoning level is probably addressed . . . | . . . if the query sounds like: |
|---|---|
| 1. Recall (remember terms, facts) | “Who . . . ?” or “What . . . ?” |
| 2. Comprehension (understand meanings) | “Explain.” “Predict.” “Interpret.” “Give an example.” “Paraphrase . . .” |
| 3. Application (use information in new situations) | “Calculate.” “Solve.” “Apply.” “Demonstrate.” “Given ___. Use this information to . . .” |
| 4. Analytical (see organization and patterns) | “Distinguish . . .” “Compare” or “Contrast” “How does ___ relate to ___?” “Why does ___?” |
| 5. Synthesis (generalize/create new ideas from old sources) | “Design . . .” “Construct . . .” “Develop.” “Formulate.” “What if . . .” “Write a poem.” “Write a short story . . .” |
| 6. Evaluation (discriminate and assess value of evidence) | “Evaluate.” “Appraise.” “Justify which is better.” “Evaluate ___ argument, based on established criteria.” |

Figure 4.2 Levels of Thinking Represented in a Knowledge Survey. Note: Data are from the same environmental geology class and knowledge survey shown in Figure 4.1, but here have been rearranged to present the course outcomes as a profile of levels of educational objectives (Bloom, 1956) encountered in the course. The graph reveals that reduced learning in the final two weeks (Figure 4.1) occurred in material typified by the lower Bloom’s levels.

Does Increased Confidence Reveal Increased Learning?

The video Thinking Together: Collaborative Learning in Science (Derek Bok Center, 1993), from Harvard University, shows a brief paired-learning exercise in Dr. Eric Mazur’s introductory physics class. The students confront a problem, answer a multiple-choice question about the problem, and rate their confidence that their own answer is correct. The students then engage in paired discussion, each trying to convince a partner that his or her own answer is correct. Thereafter, the entire class debriefs and summarizes results. In that video, a bar graph displays a positive relationship between confidence and correctness. Mazur (personal communication, November 29, 2001) revealed that initially there is no strong correlation between correctness and confidence, but overall class confidence rises significantly as discussion of the problem proceeds and greater understanding results.

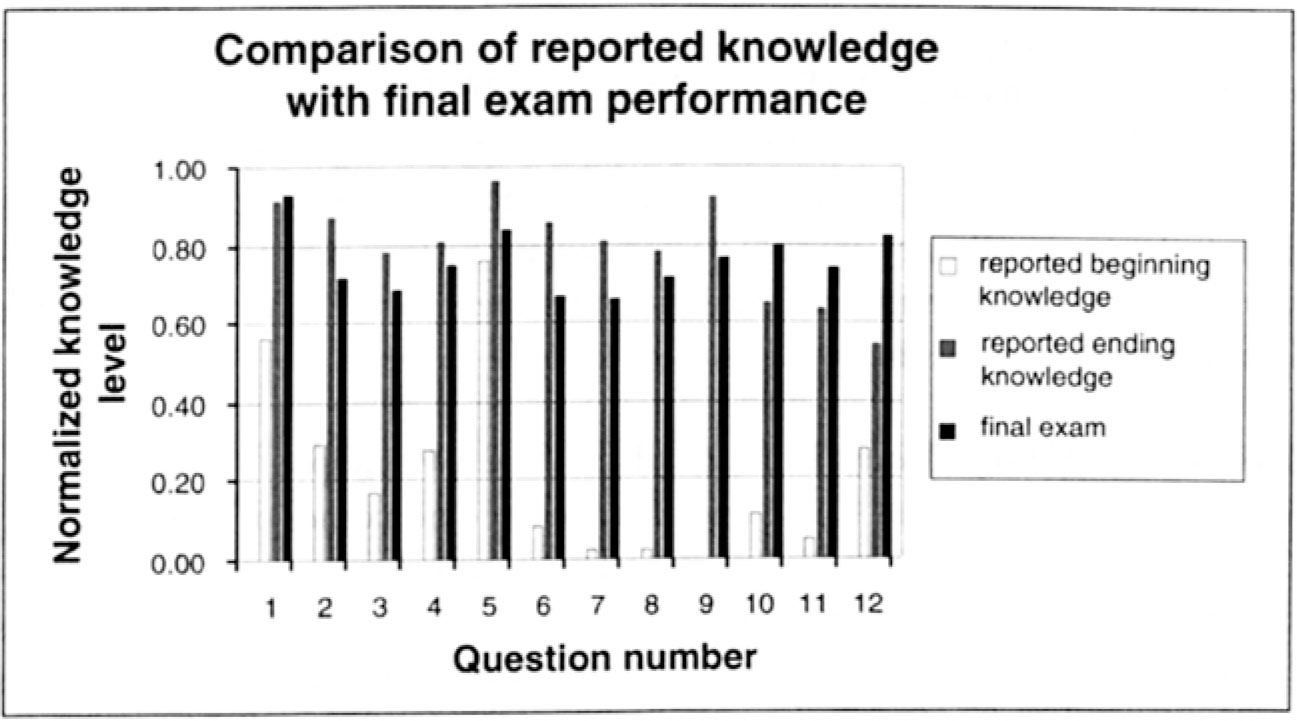

In about 10% of the courses we have examined, one or two students expressed overt overconfidence on knowledge surveys given at the start of the class. Such students may appear in a course at any level, but they are more frequently freshmen with undeveloped self-assessment skills. However, the aberrations contributed by occasional individuals have never affected a class average in a significant way. To date, every class average we have examined has been a very good representation of that class’s knowledge and abilities. The confidence rating to address content does indeed parallel ability to address it in an exam situation (Figure 4.3), especially when a teacher designs effective teaching/learning experiences for the topic. Learning gaps revealed in post-course surveys reflect strong agreement among nearly all students that they have little confidence to address an item. Interviews with professors who use knowledge surveys show that they nearly always know what produced such a gap. Often a gap reveals a topic not covered or one that was inadequately addressed (see caption, Figure 4.1).
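One simple way to check whether class-average confidence parallels exam performance, item by item, is a Pearson correlation across items. This is a sketch under stated assumptions: the rescaling of the 1 to 3 confidence scale onto 0 to 1 is our assumption (the normalization behind Figure 4.3 is not described here), the exam results are assumed to be the fraction of students answering the matching question correctly, and the function names and data are hypothetical.

```python
from math import sqrt


def normalize_confidence(avg):
    """Assumed rescaling of a class-average confidence from 1-3 onto 0-1."""
    return (avg - 1) / 2


def pearson_r(xs, ys):
    """Pearson correlation coefficient; both series must vary."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


def confidence_vs_exam(conf_avgs, exam_fraction_correct):
    """Pair each item's normalized confidence with its exam fraction correct.

    Returns the (confidence, exam) pairs and Pearson's r across items.
    Both inputs must list the same items in the same order.
    """
    if len(conf_avgs) != len(exam_fraction_correct):
        raise ValueError("confidence and exam data must cover the same items")
    norm = [normalize_confidence(c) for c in conf_avgs]
    return list(zip(norm, exam_fraction_correct)), pearson_r(norm, exam_fraction_correct)


# Hypothetical post-course averages and exam results for four items.
pairs, r = confidence_vs_exam([2.8, 2.4, 1.9, 2.6], [0.85, 0.70, 0.45, 0.80])
print(pairs, round(r, 2))
```

Because Pearson’s r is unchanged by a linear rescaling, the normalization matters only for plotting the two series on a common axis, as in Figure 4.3; the items where the pairs diverge most are the ones worth discussing with students.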

The best results occur when survey items clearly frame specific content and students take the survey home to complete it with plenty of time for self-reflection. Instructors should refer frequently to the survey throughout the course and remind all students to monitor their progress. As with any tool, a user’s effectiveness with it increases with practice and experience. Based on our work with individual faculty, the first knowledge survey an instructor creates tends to consist almost entirely of recall and comprehension items, even though the instructor aspires to instill critical thinking skills in the course. Some items may not be stated clearly enough to elicit students’ focused reflection upon the intended knowledge or skill. In fact, lack of clarity or direction may contribute to the isolated incidents of overt overconfidence noted above. Initial surveys also tend to be constructed without deep reflection on central unifying concepts, global outcomes, or the function of the course in a larger curriculum. A faculty developer can help an instructor improve a course by asking about the course’s purpose and the instructor’s intended outcomes. Such questions help the instructor to frame these outcomes as survey items and to organize lower-level items as a way to develop larger general outcomes.

Once the content and learning goals have been clearly laid out and organized in writing, the instructor can more clearly concentrate on what kinds of pedagogies, learning experiences, and rubrics (Table 4.4) will best ensure content learning and intellectual development. The overall result of deliberation and practice that begins with knowledge surveys is a course with increasingly sophisticated organization, good development of learning outcomes, and clear conveyance of a plan for learning to students.

Figure 4.3 Comparison of Normalized Reported Knowledge and Final Examination Results from an Astronomy Class (after Knipp, 2001). Note: Students were slightly overconfident about their knowledge on several of the questions from the first part of the course and less confident about their knowledge level of material toward the latter part of the course. The latter portion of the course covered material that was conceptually and mathematically new to the students.

Knowledge Surveys Promote Preparation and Organization

Feldman (1998) used meta-analyses to tease apart the instructional practices that produce learning from those that produce high ratings of student satisfaction. He found that the most important instructional contribution to learning was the instructor’s preparation and organization of the course. However, this practice ranked only sixth in importance in gaining high ratings of satisfaction, which depended much more strongly on the professor’s enthusiasm and stimulation of interest. The National Study of Student Learning (Pascarella, 2001) strongly supports Feldman’s findings about student learning. The use of knowledge surveys as a best practice can be justified on the basis of the dominant evidence: nothing a teacher can do to produce learning matters more than the effort put into course preparation and organization.

Making a knowledge survey requires laying out the course in its entirety and considering the concepts, the content, the levels of thinking, and the questions suitable for testing learning of chosen outcomes; this process is extremely conducive to organization and preparation. Further, disclosing the course in its entirety is akin to providing students with a detailed road map to the course. Students know the content, the sequence in which it will come, and the levels of challenge demanded. Erdle and Murray (1986) confirmed that students perceive disclosure as moderately important in physical science and humanities courses, and more important in social science courses. Simply put, the research shows that if one carefully decides what one is going to teach and conveys this clearly to students, then students are more likely to achieve the desired learning outcomes.

Knowledge Surveys Boost Practice of the Seven Principles

Chickering and Gamson (1987) summarized the outcomes of a Wingspread Conference at which attendees expressed consensus by drafting “Seven Principles of Good Practice” for succeeding with undergraduates. Developers know well that getting some faculty to adopt progressive pedagogical practices can be difficult. Most faculty relate better to content learning than to the practices that might better produce learning. Because knowledge surveys reach faculty where they are, with an obvious relationship to the content they value, they are a good tool developers can use to introduce faculty to thinking about improved practice. Knowledge surveys predispose a class to making use of all seven principles.

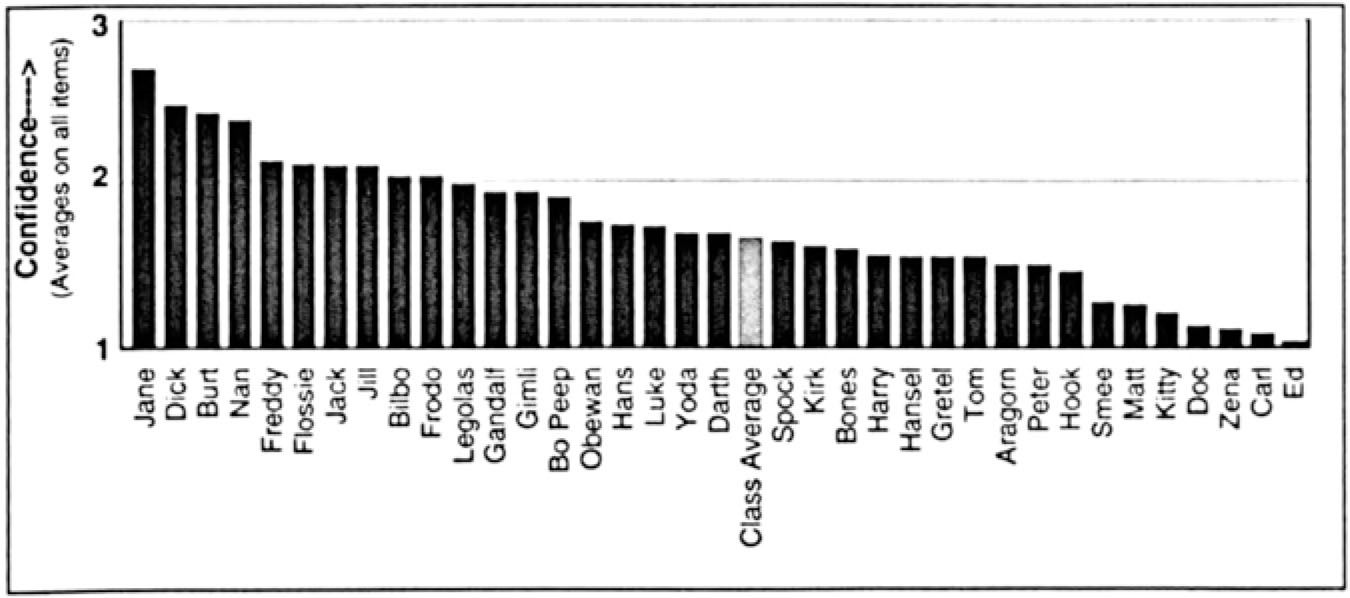

1) Good practice encourages student-faculty contact. One reason that few students ever come to a professor’s office for help is that they are often unaware of what they do not know or understand. Once students confront a knowledge survey and understand its use, they can more clearly see their need to seek help. A pre-course survey can also reveal which students have the most confidence with the material and which do not (Figure 4.4). Such insights let faculty learn something about each student and alert them to each student’s possible need for extra assistance before the class is even underway. Knowledge surveys also indicate which individual students really have the prerequisites needed to engage the challenges forthcoming in the course.

Figure 4.4 A Pre-Course Knowledge Survey from a Music Theory Class. Note: Here the data are used to identify students (names have been changed) by confidence level at the start of the course. Data here are averages of responses to a 50-item survey plus a separately calculated class average (lighter bar).

2) Good practice encourages cooperation among students. Knowledge surveys help to impart several of the five basic elements of cooperative learning (Johnson, Johnson, & Smith, 1991; Millis & Cottell, 1998). Individual accountability includes the critical ability of individuals to assess accurately their own level of preparedness or lack thereof. When a course has detailed disclosure, students more readily know when they have deficiencies, which makes them more receptive to engaging in positive interdependence, promotive interaction, and group processing to overcome those deficiencies. Pre-course knowledge survey results of the kind shown in Figure 4.4 provide the information needed to form heterogeneous cooperative groups composed of members with known, varied abilities.
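Forming such groups from pre-course data can be sketched as follows. This is a hypothetical illustration, not a procedure from the chapter: it assumes the letters A, B, and C map to confidence values 3, 2, and 1, averages each student’s responses as in Figure 4.4, ranks students by that average, and deals them into groups in “snake” order (1, 2, ..., n, then n, ..., 1) so that each group mixes higher- and lower-confidence members. The snake assignment is our choice; other heterogeneous grouping schemes would serve as well.

```python
from statistics import mean

# Assumed mapping of survey letters to confidence (3 = high, 1 = low).
SCORE = {"A": 3, "B": 2, "C": 1}


def student_means(responses):
    """Average confidence per student; responses maps name -> list of letters."""
    return {name: mean(SCORE[r] for r in answers) for name, answers in responses.items()}


def heterogeneous_groups(means, n_groups):
    """Deal students, ranked from most to least confident, into n_groups
    in snake order so every group gets a spread of confidence levels."""
    ranked = sorted(means, key=means.get, reverse=True)
    groups = [[] for _ in range(n_groups)]
    for i, name in enumerate(ranked):
        rnd, pos = divmod(i, n_groups)
        # Even rounds fill groups left to right, odd rounds right to left.
        index = pos if rnd % 2 == 0 else n_groups - 1 - pos
        groups[index].append(name)
    return groups


# Hypothetical student means (names invented, as in Figure 4.4).
means = {"Ann": 2.8, "Ben": 2.5, "Cal": 2.1, "Dee": 1.9, "Eve": 1.4, "Fay": 1.2}
print(heterogeneous_groups(means, n_groups=2))
```

With two groups, the most confident student (Ann) is balanced by lower-ranked members, so both groups end up with similar average confidence while each still spans the class’s range.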

3) Good practice encourages active learning. Knowledge surveys can be a powerful prompt for addressing high-level thinking. When students receive both good example items and a copy of Bloom’s taxonomy, they can make up their own new test questions for a unit, and such questions can address the material at appropriately high Bloom levels. A simple assignment could be:

You already have seven questions on this unit in your knowledge survey. Address the material in the unit, use Bloom’s taxonomy, and see if you can produce seven good questions that are even more challenging. If yours are better, I may use them on the knowledge survey for this course next term.

When students know the important concepts and outcomes desired in a unit lesson, groups can become resources, thus structuring peer teaching into a course while assuring quality outcomes.

4) Good practice gives prompt feedback. When a detailed knowledge survey is furnished, it allows students to monitor their progress through the course. One of the first signs that an instructor has produced a survey of good quality is a query from a student: “Will I really be able to learn all these new things?” The prompt feedback a survey delivers comes from the students’ own continuous tracking of knowledge gains as the course unfolds. When students can create their own original test questions that address the material at a respectable level of thinking, they have probably reached adequate preparation and understanding. If any student must be absent, the survey immediately discloses the material missed and reveals, to some extent, the work required to master it.

5) Good practice emphasizes time on task. Full disclosure at the start of a course allows timely planning and study. A review sheet given out before an exam will not reveal to students in a timely manner what they do not know, and it will promote mere cramming rather than planned learning. Faculty who plan courses well and disclose them at the detail of a knowledge survey quickly discover that the survey keeps them honest. A student who is following his or her progress will invariably say, “Excuse me, Dr.———, but it seems like we didn’t address that item #——— about———.” Perhaps the class launched into a discussion that carried more value than the skipped item, in which case a new item expressing that more valuable content now replaces the original. On the other hand, perhaps the class launched into a digression and the instructor forgot to address a particularly important point that he or she must yet address. When a class inadvertently strays off track, knowledge surveys reveal whether straying resulted in any important omissions. Surveys also require students to engage material repeatedly. Some of the earliest research on cognition established the benefits of repetition for learning. The use of knowledge surveys ensures at least two additional structured engagements with the entire course material.

6) Good practice communicates high expectations. Students sometimes complain that instructors teach at one level and test at a more challenging level. Knowledge surveys offer the opportunity to detail, in a timely manner, the level of challenge that students should expect. The materials needed to build a rudimentary knowledge survey already reside in most professors’ computers—in the past examinations, quizzes, and review sheets they provided the last time they taught the course. The only work required is to arrange items from these in the order of course presentation. Students appreciate the focus that a knowledge survey brings to the study process, and they will rise to expectations conveyed in a survey, particularly if instructors assert that some of the higher-level items will likely appear on a final exam.

7) Good practice respects diverse talents and ways of learning. One of the best ways to address diverse learners is to present and engage materials in a variety of ways, in particular ways that make sense in terms of how the brain operates in the learning process (Leamnson, 1999). When one actually has a blueprint of the content and levels of thinking one wants to present, it quickly prompts the question “What is the best way to present this item, and then the following item?” Without such a plan, one can too easily end up lecturing through the entire course, even when the desired outcomes clearly call for alternative methods. A detailed plan of outcomes calls for a corresponding plan for reaching them (see “Pedagogies” in Table 4.4).

APPLICATIONS AT THE UNIT LEVEL

The tools applied to a single course (Figures 4.1 and 4.2) extend easily to a four-year curriculum, with the desired content outcomes and levels of thinking plotted on the abscissa from freshman through senior year. We authors have only begun to scratch the surface of using knowledge surveys in unit-level development, but the work is exciting, and we hope readers will extend this kind of thinking to units at their own institutions.

When professors have detailed blueprints of their own courses, a larger unit can sit down and have the necessary conversations about what content should be taught and when, what levels of thinking should be stressed and when, and what pedagogies and experiences should occur and when. One department at the senior author’s institution has now committed to posting syllabi and knowledge surveys for all courses on the web. A senior comprehensive exit exam will draw its questions from the knowledge surveys of courses taken by students. A student can thus see every course in detail and will have relevant study guides for any exit examination. A college at the same institution is now using knowledge surveys to plan and assess the curricula of various departments in that college. Both authors’ institutions have discovered that when adjunct faculty are recruited or an institution has high turnover of instructors, knowledge surveys convey expected outcomes to new or part-time professors and help maintain course continuity and standards.

Table 4.3 shows 11 learning outcomes for a university-level general education requirement. These resulted after eight science professors gathered to answer the question: Why are we requiring students to take a science course, and what outcomes justify their costs in time and tuition dollars? Together, these items constitute a model for science literacy—understanding what science is, how it works, and how it fits into a broader plan for education. The institution regularly assesses these items by knowledge survey in each required core science course.

| CORE Questions for Science Literacy |
|---|
| 1. What specifically distinguishes science from other endeavors or areas of knowledge such as art, philosophy, or religion? |
| 2. Provide two examples of science and two of technology and use them to explain a central concept by which one can distinguish between science and technology. |
| 3. It is important to know not only ideas but also where these ideas came from. Pick a single theory from the science represented by this course (biology, chemistry, environmental science, geology, or physics) and explain its historical development. |
| 4. Provide at least two specific examples of methods that employ hypothesis and observation to develop testable knowledge of the physical world. |
| 5. Provide two specific examples that illustrate why it is important to the everyday life of an educated person to be able to understand science. |
| 6. Many factors determine public policy. Use an example to explain how you would analyze one of these determining factors to ascertain if it was truly scientific. |
| 7. Provide two examples that illustrate how quantitative reasoning is used in science. |
| 8. Contrast “scientific theory” with “observed fact.” |
| 9. Provide two examples of testable hypotheses. |
| 10. “Modeling” is a term often used in science. What does it mean to “model a physical system”? |
| 11. What is “natural and physical science”? |

Figure 4.5 shows a less positive outcome in a course offered by a department that had not held these conversations. The survey results show that the course tied poorly into preceding courses: students initially engaged new material but then spent a substantial part of the course on material already covered elsewhere. Having recognized the duplication, the instructor revised the course and achieved much better results the following year.

Figure 4.5 Knowledge Survey Revealing Curriculum Design Deficiency

Note: This 48-item survey of a course in graphic arts revealed that a significant amount of the material taught had already been learned by students in some previous course(s). Duplication is minimal when a department uses knowledge surveys to inform curriculum design and thereby prevents such unplanned duplication of content.
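The comparison behind a result like Figure 4.5 can be sketched in a few lines. Assuming the survey data are per-student, per-item confidence ratings collected at the start and end of the course, items on which the class already reports high confidence before instruction are the likely sites of unplanned duplication. The Python sketch below illustrates that comparison; the numeric coding of the three-point scale, the cut-off of 2.5, the function names, and the sample ratings are all hypothetical and are not taken from the authors' own analysis.

```python
from statistics import mean

# Assumed coding of the three-point confidence scale (hypothetical, not the
# chapter's own scheme): 3 = can answer fully, 2 = can answer partly,
# 1 = cannot answer yet.
ALREADY_KNOWN = 2.5  # hypothetical cut-off for "students already know this"


def item_means(ratings):
    """Class mean per item; ratings[s][i] is student s's rating of item i."""
    return [mean(item) for item in zip(*ratings)]


def likely_duplicates(pre, post, cutoff=ALREADY_KNOWN):
    """Return (item number, pre-course mean, gain) for every item whose
    pre-course mean already reaches the cut-off: candidates for content
    that some earlier course has already covered."""
    report = []
    pairs = zip(item_means(pre), item_means(post))
    for number, (before, after) in enumerate(pairs, start=1):
        if before >= cutoff:
            report.append((number, round(before, 2), round(after - before, 2)))
    return report


# Made-up ratings for three students on four items (illustration only).
pre = [[1, 3, 3, 1], [1, 3, 2, 2], [2, 3, 3, 1]]
post = [[3, 3, 3, 3], [3, 3, 3, 2], [3, 3, 3, 3]]
print(likely_duplicates(pre, post))  # flags items 2 and 3
```

A high pre-course mean with little gain is the pattern duplication would produce, whereas a low pre-course mean followed by a large gain is the pattern an instructor hopes to see. Where to set the cut-off remains a judgment call for the department.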

CHOSEN OUTCOMES: (1) Apply the definition of science to a real problem, and use the framework of the methods of science to recognize the basis for evidence and the difficulty of arriving at a sound conclusion. (2) Understand the asbestos hazard: what the material is and how it came to be identified as a hazard. (3) Evaluate the true risks posed to the general populace on the basis of the currently strongest scientific argument.

CONTENT LEARNING and LEVELS of THINKING (Bloom taxonomy chosen)

| Item # | Bloom level | Content topic: asbestos hazards |
|---|---|---|
| 20 | 1 | What is asbestos? |
| 22 | 2 | Explain how the characteristics of amphibole asbestos make it more conducive to producing lung damage than other fibrous minerals. |
| 23 | 3 | Given the formula Mg₃Si₂O₅(OH)₄, calculate the weight percent of magnesium in chrysotile. |
| 24 | 4 | Two controversies surround the asbestos hazard: (1) it is nothing more than a very expensive bureaucratic creation, or (2) it is a hazard that accounts for tens of thousands of deaths annually. What is the basis for each argument? |
| 25 | 5 | Develop a plan for the kind of study needed to prove that asbestos poses a danger to the general populace. |
| 26 | 6 | Which of the two controversies expressed in item 24 above has the best current scientific support? |

PEDAGOGIES (numbers correspond to the content items above): (20) lecture with illustrations, crossword, and short-answer drill; (22) guided discussion with formative quiz; (23) demonstration calculation, handout, and in-class problems followed by homework; (24) paired (jigsaw) work with directed homework on the web; (25) using data from item 24, teams of two reflect on two scientific methods and the relative strengths and weaknesses of each in this case; (26) personal evaluation of conflicting evidence, submitted as a short abstract (250 words maximum).

CRITERIA FOR ASSESSMENT (rubric): Recognize the basis for distinguishing between types of asbestos. Understand the nature of the chemical formulae that describe minerals. Clearly separate testable hypotheses from the advocacy of proponents as a basis for evidence. Clearly distinguish the method of repeated experiments from the historical method in the kinds of evidence each provides. Use science as a basis to recognize evidence, and formulate and state an informed decision about the risks posed to oneself.

SELF-ASSESSMENT: What do you now know about asbestos as a hazard that you did not know before this lesson? You have investigated two competing hypotheses about the degree of hazard posed to the general populace, and you now know the scientific basis for each argument. Do you feel differently about the asbestos hazard now than you did before this lesson? Whether your answer is “yes” or “no,” explain why. Describe some possible non-scientific factors that could affect the arguments presented by each side. How do you now feel about the risks posed to yourself, and what questions do you still have? (See Loacker, 2000.)

Note: Stating the content learning outcomes in detail makes clear choices emerge for engaging learners in diverse experiences. The rubrics and self-assessments relate to both the course content and the global core learning outcomes (Table 4.3). Such an approach epitomizes the “instructional alignment” concept of Cohen (1987), wherein aligning intended outcomes with instructional processes and assessments produces learning outcomes about two standard deviations beyond what is possible without such organization.
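Item 23 above is a standard formula-weight calculation. As an illustrative worked answer only (it is not part of the original lesson plan), assuming standard atomic weights rounded to three decimals (Mg 24.305, Si 28.086, O 15.999, H 1.008 g/mol) and counting nine oxygen and four hydrogen atoms in Mg₃Si₂O₅(OH)₄:

$$
\begin{aligned}
M(\mathrm{Mg_3Si_2O_5(OH)_4}) &= 3(24.305) + 2(28.086) + 9(15.999) + 4(1.008) \\
&= 72.915 + 56.172 + 143.991 + 4.032 = 277.110\ \mathrm{g\,mol^{-1}} \\
w_{\mathrm{Mg}} &= \frac{72.915}{277.110} \times 100\% \approx 26.3\%
\end{aligned}
$$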

CONCLUSION

Knowledge surveys offer a powerful way to achieve superb course organization and to enact instructional alignment. They also serve as assessment tools that provide a direct, detailed measure of content learning and levels of thinking. The time invested in producing a knowledge survey is repaid by the enhanced learning that results from its use. Faculty appreciate that knowledge surveys require no class time to administer, that they improve planning and preparation, that they validate student learning much better than summative student ratings do, and that they can be constructed mostly from material already on faculty members’ office computers.

ACKNOWLEDGMENTS

The authors thank colleagues Barbara Millis (USAF Academy) and Carl Pletsch (CU-Denver) for their pre-reviews and helpful suggestions, and Mitch Handelsman (CU-Denver) for help with APA styling.

REFERENCES

- Bloom, B. S. (1956). Taxonomy of educational objectives—The classification of educational goals: Handbook I—cognitive domain. New York, NY: David McKay.

- Chickering, A. W., & Gamson, Z. (1987). Seven principles for good practice in undergraduate education. AAHE Bulletin, 39, 3–7.

- Cohen, P. A. (1981). Student ratings of instruction and student achievement: A meta-analysis of multisection validity studies. Review of Educational Research, 51, 281–309.

- Cohen, S. A. (1987). Instructional alignment: Searching for a magic bullet. Educational Researcher, 16(8), 16–20.

- Derek Bok Center for Teaching and Learning, Harvard University (Producer). (1993). Thinking Together: Collaborative Learning in Science [Videotape]. Boston, MA: Derek Bok Center for Teaching and Learning, Harvard University.

- Erdle, S., & Murray, H. G. (1986). Interfaculty differences in classroom teaching behaviors and their relationship to student instructional ratings. Research in Higher Education, 24(2), 115–127.

- Feldman, K. A. (1998). Identifying exemplary teachers and teaching: Evidence from student ratings. In K. A. Feldman & M. B. Paulsen (Eds.), Teaching and learning in the college classroom (2nd ed., pp. 391–414). Needham Heights, MA: Simon & Schuster.

- Gardiner, L. F. (1994). Redesigning higher education: Producing dramatic gains in student learning (Higher Education Report No. 7). Washington, DC: ASHE-ERIC. (ERIC Document Reproduction Service No. ED 394 442)

- Johnson, D. W., Johnson, R. T., & Smith, K. A. (1991). Active learning: Cooperation in the college classroom. Edina, MN: Interaction Book Co.

- King, P. M., & Kitchener, K. S. (1994). Developing reflective judgment. San Francisco, CA: Jossey-Bass.

- Knipp, D. (2001, Spring). Knowledge surveys: What do students bring to and take from a class? United States Air Force Academy Educator. Retrieved March 18, 2002, from http://www.usafa.af.mil/dfe/educator/S01/knipp040l.htm

- Leamnson, R. (1999). Thinking about teaching and learning: Developing habits of learning with first year college and university students. Sterling, VA: Stylus.

- Loacker, G. (Ed.). (2000). Self assessment at Alverno College. Milwaukee, WI: Alverno College.

- Millis, B. J., & Cottell, P. G. (1998). Cooperative learning for higher education faculty. Westport, CT: Greenwood.

- Nuhfer, E. B. (1993). Bottom-line disclosure and assessment. Teaching Professor, 7(7), 8.

- Nuhfer, E. B. (1996). The place of formative evaluations in assessment and ways to reap their benefits. Journal of Geoscience Education, 44(4), 385–394.

- Nuhfer, E. B., & Pavelich, M. (2001). Levels of thinking and educational outcomes. National Teaching and Learning Forum, 11(1), 5–8.

- Pascarella, E. T. (2001). Cognitive growth in college. Change, 33(1), 21–27.

- Perry, W. G., Jr. (1999). Forms of ethical and intellectual development in the college years. San Francisco, CA: Jossey-Bass (a reprint of the original 1968 work with minor updating).

- Tobias, S., & Raphael, J. (1997). The hidden curriculum: Faculty-made tests in science, Part I lower-division courses. New York, NY: Plenum.

Contact:

Edward Nuhfer

Director, Teaching Effectiveness and Faculty Development

University of Colorado, Denver

PO Box 173364, Campus Box 137

Denver, CO 80217

Voice (303) 556-4915

Fax (303) 556-5855

Email [email protected]

Delores J. Knipp (Lt Col Ret)

Professor of Physics

Suite 2A25 Fairchild Hall

USAF Academy, CO 80840

Voice (719) 333-2560

Fax (719) 333-3182

Email [email protected]

Geology professor Edward Nuhfer describes himself as “an absolutely incurable teacher.” In 1990 he founded the Teaching Excellence Center at the University of Wisconsin, Platteville, and in 1992 he was hired as the first director of the Office of Teaching Effectiveness at the University of Colorado at Denver, which the evaluation team for the North Central Association of Colleges and Schools recently (2001) described as “an exemplary program and an outstanding resource.” He directs “Boot Camp for Professors,” authors the one-page newsletter Nutshell Notes, and writes occasional columns for the National Teaching and Learning Forum. After July 1, 2002, he will assume the directorship of the Center for Teaching and Learning at Idaho State University.

Delores Knipp has been a resident physics instructor at the United States Air Force Academy since 1989. She earned her bachelor’s degree in atmospheric science from the University of Missouri in 1976 and entered the Air Force as a weather officer shortly thereafter. She was a NORAD Command weather briefer before earning an MS in atmospheric science and joining the US Air Force Academy physics faculty. In 1989 she earned a PhD in Space and Atmospheric Physics at the University of California, Los Angeles. Since her return from UCLA she has been engaged in teaching introductory physics, meteorology, space physics, and astronomy and in researching the effects of solar activity on the near-earth environment. She is the recipient of both NASA and NSF grants and is currently the director of the Solar Terrestrial Interactions Group (STING) in the Physics Department. She became interested in knowledge assessment and knowledge surveys after she attended the 2000 Boot Camp for Professors at Leadville, Colorado.