Exploring Faculty Perspectives on Community Engaged Scholarship: The Case for Q Methodology


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 3.0 License. Please contact [email protected] to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

Over the past 25 years, community engaged scholarship has grown in popularity, practice, and scholarship. A review of the literature suggests that a wide range of personal, professional, institutional, and communal factors (Demb & Wade, 2012) interact in ways that shape faculty members’ perspectives on, conceptualizations of, and means of conducting community engaged work. To make sense of the potential number of factor combinations and inform more customized support for community engaged faculty, the authors discuss the merits and utility of faculty typologies. Q Methodology offers a way to create a typology that is capable of not only managing the complexity of faculty engagement, but also providing rich descriptions of varied points of view that do not oversimplify the phenomenon. The techniques and foundational assumptions of Q Methodology are described, making the case for Q as a good fit for developing a typology of community engaged faculty that more fully reflects multiple points of view.

Recognizing the criticism that higher education was growing more disconnected from and irrelevant to society by no longer addressing the heart of the nation’s work (Delve, Mintz, & Stewart, 1990; Newman, 1985), Boyer (1990) issued a clarion call to institutions of higher education to remember their missions and to reconsider how scholarship is conceptualized. Colleges and universities around the country began heeding this call to broaden their notions of scholarship and to take seriously their responsibility to serve their wider communities (Fitzgerald, Bruns, Sonka, Furco, & Swanson, 2016; Kezar, Chambers, & Burkhardt, 2005). These efforts entailed critically reflecting on the role of community involvement in their institutions, especially with regard to the nature of faculty work (Bringle, Hatcher, & Holland, 2007; Saltmarsh, 2011; Stanton, 2008; Zlotkowski, 2011), and sparked the growth of the scholarship of engagement (SOE) movement.

Since Boyer’s landmark work, the scholarship on the scholarship of engagement has blossomed. Research on faculty engagement has focused on defining engagement (Boyer, 1990, 1996; Giles, 2008; O’Meara, 2002), examining dimensions of faculty life (Demb & Wade, 2012; O’Meara, 2008; Wade & Demb, 2009), exploring the impact of engagement on faculty (Rice, 2002; Rice, Sorcinelli, & Austin, 2000), and identifying activities that constitute faculty engagement (Glass, Doberneck, & Schweitzer, 2011; O’Meara, Sandmann, Saltmarsh, & Giles, 2010). Due to the range of engagement activities in which faculty and staff members participate, scholars have faced the challenge of determining which activities to emphasize (O’Meara et al., 2010) and how to ensure that quality work (Glassick, Huber, & Maeroff, 1997) is made visible (Driscoll & Lynton, 1999). Expanding the scope of research from faculty to institutions, scholars have also examined the institutional context (Demb & Wade, 2012; Holland, 1997; O’Meara, 2005; Stanton, 2008; Wade & Demb, 2009), identified ways to integrate institutional research and learning within the broader context of their communities (Boyte & Hollander, 1996; Buzinski et al., 2013), and established key components to advance and institutionalize engagement efforts (Fitzgerald et al., 2016; Furco, 2010; Janke, Medlin, & Holland, 2015). Central to these research trajectories is the conscious effort to bring greater clarity and rigor to community engagement efforts (Barker, 2004; Glassick et al., 1997).

Given the range of research emerging from Scholarship Reconsidered (Boyer, 1990), it is not surprising that the SOE movement has evolved into a “multifaceted field of responses” (Sandmann, 2008, p. 91) that includes a range of community engaged practices such as service-learning, community-based participatory research, outreach, participatory action research, and public scholarship (Bringle, Games, & Malloy, 1999; Burawoy, 2005; Colbeck & Wharton-Michael, 2006; Fear & Sandmann, 1995; Glass et al., 2011; O’Meara & Rice, 2005; Sandmann, 2008; Strand, Marullo, Cutforth, Stoecker, & Donohue, 2003), as well as varying conceptualizations, terminology, and definitions (Barker, 2004; Bringle et al., 2007; Janke & Colbeck, 2008; O’Meara & Niehaus, 2009; Pearl, 2015; Sandmann, 2008; Wade & Demb, 2009). The study and practice of Community Engaged Scholarship (CES) is complicated further by different individual dimensions, academic disciplines, institutional types, and communal dimensions (Buzinski et al., 2013; Colbeck & Wharton-Michael, 2006; Demb & Wade, 2012; Holland, 1997; Townson, 2009; Wade & Demb, 2009).

Writ large, the wide range of practices, conceptualizations, definitions, and influencing factors affecting CES among faculty warrants closer attention, especially as faculty and administrators strive to make informed decisions about how to invest time, talent, and resources in order to cultivate and sustain meaningful CES that is central to the academy (Fitzgerald et al., 2016). Hence, given what is known and not known about faculty involvement in CES, how can we make sense of it and articulate the complexity of CES among faculty without being unnecessarily reductionistic?

The creation and use of empirically based classifications, such as typologies, are one way to balance complexity and simplicity by generating heuristics that offer parameters of understanding that then invite closer examination (Bailey, 1994). Within the CES literature, several classification systems exist (Barker, 2004; Pearl, 2015). Thus, the purpose of this article is twofold: (a) to review a conceptual framework and typologies of community engaged faculty with a critical eye toward how the framework and typologies emerged and how they affect subsequent understanding, and (b) to describe Q Methodology, which offers a new way to extend and potentially challenge current understandings of community engaged faculty. To this end, we present the findings from a purposeful literature review that addresses a conceptual framework, typologies, and limitations to understanding CES among faculty; detail how Q Methodology may help refine that understanding; and explore potential contributions from Q Methodology to understanding faculty engagement.

Conceptual Framework, Typologies, and Emerging Questions about Faculty CES

To thrive, 21st century higher education needs to ensure that community engagement is at the heart of its work (Fitzgerald et al., 2016), which means “anchor[ing] engagement firmly on the desk of our institutions and faculties as community-engaged scholarship” (Sandmann, 2009, p. 8). Given the critical role faculty play in this process, the literature on faculty engagement is extensive and examines a broad range of topics including, but not limited to, faculty motivations, supports and hindrances to engagement, disciplinary perspectives, and institutional context. Rather than discuss each of these streams in the literature, we focus on reviewing the major conceptual framework and key typologies that have emerged from that literature and on building on these collective scholarly efforts.

Conceptual Framework of Faculty Engagement

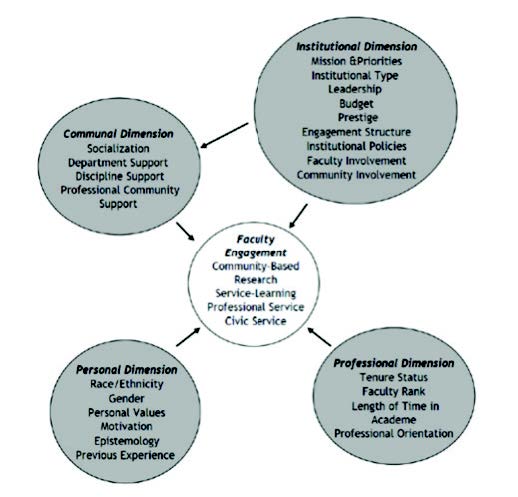

Several scholars have developed conceptual frameworks over the years to make sense of the myriad approaches, conceptualizations, and understandings of factors that affect faculty members’ scholarly engagement in and with the community. To synthesize the literature, Wade and Demb (2009) developed a comprehensive framework of faculty engagement based on theoretical and empirical evidence. Drawing heavily on Holland’s (1997, 2005) matrix of ten organizational factors, the Kellogg Commission’s (1999) seven-part test for engaged campuses, and Colbeck and Wharton-Michael’s (2006) model of individual and organizational characteristics that influence faculty members’ motivation and engagement in public scholarship, Wade and Demb proposed the “Faculty Engagement Model (FEM),” explicating the dimensions and factors at play and the relationships among those factors.

In its initial form, the FEM described faculty engagement as an interaction, and a degree of balance, among personal, professional, and institutional dimensions (Wade & Demb, 2009). Personal dimensions include race/ethnicity, gender, personal values, motivation, epistemology, and previous experience. Professional dimensions include tenure status, faculty rank, length of time in academe, and professional orientation. Institutional dimensions include mission and priorities, institutional type, leadership, budget, prestige, engagement structure, institutional policies, faculty involvement, and community involvement. (See Wade & Demb, 2009, for a full description of and evidence for each factor.) In 2012, Demb and Wade revised the framework, adding a communal dimension. The communal dimension (factors include socialization, department support, discipline support, and professional community support) can either affect faculty engagement directly or mediate the effect of the institutional dimension on faculty engagement (see Figure 1).

Taken together, the four dimensions and 24 factors offer numerous combinations of factors influencing faculty engagement. While the original and revised FEM appear to have discrete factors and dimensions, the research to develop the model

revealed a spectrum of definitions whose complexity could undermine further research until those definitions are made specific and explicit. The model further demonstrates the need for a far more multi-dimensional, dynamic and holistic description of the factors that affect faculty proclivities to value, or become active with, engagement-related activities. (Wade & Demb, 2009, p. 14)

Typologies of Faculty Engagement

Classifying many cases into a few meaningful groups is one way to reduce complexity such as that encountered in the FEM, and it offers social scientists one of the most useful descriptive tools available for analysis, research, and theory-building (Bailey, 1994).

Although we cannot focus upon all persons and all of their characteristics at once, by classifying persons according to salient underlying dimensions such as race, social class… political party, religion, and so forth, we can simplify our complex reality sufficiently to allow us to analyze it. (Bailey, p. 12)

Classifications shed light on the similarities and differences within and across groups and surface the dimensions on which those groups are based. Considering the relationships, comparisons, and contrasts among types facilitates a better understanding of the cases in each class and the issues that are most relevant to them. Typologies are one form of classification; they represent a conceptual framework (Bailey). Unlike other forms of classification, typologies do not create criteria for classifying a construct into a type, but rather provide a rich description of the attributes that distinguish groups from each other (Doty & Glick, 1994).

The CES literature includes several examples of the use of typologies for deepening understanding of engaged faculty. One such example is Pearl’s (2015) typology, which used latent class analysis on a selection of items from the 2010 HERI Faculty Survey to identify five classes of faculty, which he described as community engaged scholars, aspirational engagers, passive engagers, generational engagers, and traditional scholars. Little information is provided to explain how the labels and brief descriptions of each type were developed; however, the survey items collected information about how faculty engage in the community and about their beliefs regarding the role of colleges in their local communities and in shaping students’ civic beliefs.

Another classification structure is offered by Barker (2004), who reviewed the SOE academic literature and the publications of civic engagement centers and higher education institutions, and who interviewed SOE practitioners. From this inquiry, Barker created a classification system that describes five practices of engaged scholarship: public scholarship, participatory research, community partnership, public information networks, and civic literacy scholarship. Although the five practices appear distinct, they are not mutually exclusive. “Indeed, almost all of these practices overlap with one another, and indeed they are often practiced simultaneously by the same scholars and institutions” (p. 133).

Emerging Questions About Faculty Engagement

Because each of the 24 factors and four dimensions presented in the FEM (Demb & Wade, 2012) can affect faculty engagement, it is difficult to discern which factors have the most salience for individual scholars, the nature of the relationships between and among the factors and dimensions, and whether the more salient factors emerge in any sort of pattern within and across the dimensions. The sheer number of possible factor combinations is difficult for scholars and practitioners to comprehend at once, let alone to use in making informed, efficient decisions about the best way to allocate resources and support a diverse faculty. So, how can the complexity of faculty engagement be meaningfully classified?
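A back-of-the-envelope calculation suggests how quickly the combinations multiply. Assuming, purely for illustration, that each of the 24 factors is treated as simply present or absent for a given faculty member, there are 276 possible pairs of factors, 2,024 possible triples, and more than 16 million possible non-empty subsets:

```latex
\binom{24}{2} = \frac{24 \times 23}{2} = 276, \qquad
\binom{24}{3} = \frac{24 \times 23 \times 22}{3!} = 2{,}024, \qquad
2^{24} - 1 = 16{,}777{,}215
```

Many of the factors (tenure status, faculty rank, or institutional type, for example) take more than two values, so under less simplified assumptions the number of possible configurations is larger still.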

Classifications such as those by Pearl (2015) and Barker (2004) offer ways to organize and simplify the complexity of approaches by providing distinct groups and noting their similarities and differences, which allows for the generation of heuristics. While heuristics can expedite decision-making for scholars and practitioners, they may be misguided if the classifications are based on the researcher’s conceptualization of the most salient issues or are unintentionally influenced by the researcher’s (un)conscious assumptions (Morrison, 2015). In the case of Barker, SOE was conceptualized as forms of practice, while Pearl’s typology was based on the researcher’s selection of the salient issues from data already collected by the 2010 HERI Faculty Survey. Therefore, these classifications may be more representative of the researcher’s view of community engaged faculty than the faculty’s own views of CES.

Additionally, classifications are often developed based on demographic or other researcher-chosen variables (e.g., discipline, rank, time in academia). In some instances, these variables may provide meaningful insight into a particular phenomenon; at other times, however, using these variables from the outset may obscure, if not inadvertently minimize, subtle but important variations in the data. Rather than assume similarity within a particular variable (e.g., race, age, discipline) when examining a complex phenomenon, it is important to find ways to examine within groups as well as across groups. For example, a physicist, whose discipline is generally classified as a “pure hard” science, may have more in common than one might assume with a social worker, whose discipline is generally classified as a “soft applied” science (Becher, 1987). Thus, it is important to avoid classifying responses and data prematurely. Research shows that there are some disciplinary differences; however, it may be presumptuous to claim that any particular classification is the only meaningful one.

While there are distinct approaches, conceptualizations, and language regarding engagement, these specialized insights are not always apparent in practice. Despite research to inform the type of support campuses provide to community engaged faculty, many campuses, given limited staff time and resources, continue to take a “one size fits all” approach (Buzinski et al., 2013; Glass et al., 2011). However, as Pearl (2015) notes, a typology may allow for customization that is simultaneously more individualized and more systemic.

The question then remains, if the goal is to more deeply understand how community engaged faculty make meaning of their work, how would the faculty themselves select the salient issues and frame them? What different typology might emerge if the classes were based on patterns of similarity and difference in the scholars’ overall perspectives on the scholarship of engagement rather than on their reaction to the issues the researcher poses as salient? To achieve that goal, researchers need a way to classify faculty based on the study participants’ own internal perceptions and their overall perspective rather than their responses to the researcher’s specifically defined and operationalized variables.

Q Methodology

Q Methodology (Q) is a research approach that classifies research study participants into groups based on their shared overall viewpoints on a particular subject. Q was developed by psychologist William Stephenson in the early 1930s (Brown, 1980) and is described as “qualiquantological” (Watts & Stenner, 2005, p. 69) because it integrates qualitative and quantitative analyses (McKeown & Thomas, 1988), allowing it to address some of the weaknesses of each respective methodology (Peterson, Owens, & Martorana, 1999). As comfort with social constructivist and other non-positivist epistemologies has increased, so has interest in Q, and examples of Q studies are available across a wide variety of disciplines in the social sciences (Brown, 1986, 2006; Jay, 1969; McKeown & Thomas; Watts & Stenner).

Both Q and the more traditional approaches to classification reduce the complexity of a construct by identifying groups that share common meaning. Traditional classification methods classify variables into groups, reflecting some shared commonalities such as a latent variable. Q analysis classifies the study participants into groups, reflecting their shared perspectives or a common way of thinking about the construct under study (Brown, 1980; McKeown & Thomas, 1988).
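A minimal Python sketch can make this contrast concrete. It assumes a hypothetical study in which four participants (labeled A to D) each sorted the same nine statements onto a forced distribution running from -2 to +2; the scores are invented for illustration. Rather than correlating statements with one another across people, as a variable-centered analysis would, the sketch correlates people with one another across all statements, which is the starting point for grouping participants in Q.

```python
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / len(x)
    return cov / (pstdev(x) * pstdev(y))


def person_correlations(sorts):
    """Correlate every pair of participants across all statements.

    `sorts` maps a participant label to that person's scores, listed in
    the same statement order for everyone. Returns {(p, q): r}.
    """
    people = list(sorts)
    return {(p, q): pearson(sorts[p], sorts[q]) for p in people for q in people}


# Hypothetical sorts: each list is one person's scores for statements 1..9,
# placed on a forced distribution of 1, 2, 3, 2, 1 cards from -2 to +2.
sorts = {
    "A": [2, 1, 1, 0, 0, 0, -1, -1, -2],
    "B": [1, 2, 0, 1, 0, 0, -1, -2, -1],
    "C": [-2, -1, -1, 0, 0, 0, 1, 1, 2],
    "D": [0, -1, 1, 2, -2, 1, 0, -1, 0],
}

corr = person_correlations(sorts)
for pair in [("A", "B"), ("A", "C"), ("A", "D")]:
    print(pair, round(corr[pair], 2))
# ('A', 'B') 0.75
# ('A', 'C') -1.0
# ('A', 'D') 0.08
```

In this invented example, A and B hold broadly similar views, C holds the mirror image of A's view, and D is largely unrelated to A. In an actual study, the person-by-person correlation matrix would then be factor analyzed to identify groups of participants rather than inspected by eye.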

Foundational Assumptions

Q Methodology is grounded in several important assumptions that influence the study design and interpretation of results. Each serves as an indicator of the usefulness of Q for understanding the complexity that exists in the variety of ways that community engaged faculty make meaning of their work. The first assumption of Q is the goal of operant subjectivity. In typical quantitative research, the researcher’s aim is to be objective, and research instruments are therefore designed to operationalize the variables so that each item will be interpreted by all participants in the same way (Brown, 1980). In Q, the goal is to capture the participants’ subjective perspectives and their unique points of view instead of their responses to an objective definition that has been carefully operationalized to have one narrow, universal meaning. Rather than using objective measures based on operationalized variables, the researcher uses items that participants interpret in their own way. The researcher does not qualitatively interpret the meaning of the items until after the data is collected. Participants who later fall into different classification groups frequently interpret the same item differently, but researchers are able to interpret the findings by examining how participants responded to other items and identifying patterns within the responses.

Q Methodology is a good fit for a construct like the SOE, which is difficult to define or for which the definition/operationalization of the construct is a subject of debate (Barker, 2004; Bringle et al., 2007; Sandmann, 2008). The path toward a shared definition for the field would be informed by the use of a research method that can collect participants’ perspectives on their SOE without imposing a researcher’s operationalized definition of it.

The second assumption of Q is that participants’ perspectives are based on their own internal frames of reference (Brown, 1986, 1997). It is the study participants, not the researcher, who decide which items are meaningful, which issues are most significant, and which issues do not matter when conceptualizing the topic of study from their respective points of view, given their context (Watts & Stenner, 2005). The data reflects participants’ own internal frameworks for understanding the construct, not their reaction to the researcher’s framework. This aspect of Q makes it a particularly useful research method at this point in the field’s understanding of SOE. Current attempts to address faculty needs through administrative supports or policies are based on external, observable categories such as academic discipline, institution type, and contract type (tenure track, term, adjunct, etc.). Some research, such as the Pearl (2015) and Barker (2004) studies described earlier, has resulted in more sophisticated typologies of faculty; however, these typologies are also based on externally observed variables rather than emerging from the internal frameworks of the faculty themselves. What is truly needed at this time is an understanding of these internal perspectives, that is, of how faculty define and make meaning of CES for themselves, in order to make sense of the complex list of factors influencing how faculty engage, their reasons for doing so, and how institutions can support them.

Brown (2006) described Q Methodology as a particularly useful approach for gathering perspectives from marginalized populations, as the process allows participants to construct meaning from their own self-reference rather than simply responding to the meaning held by the majority or dominant group. On some campuses, community engaged faculty find that their perspectives on scholarly work and the aims of higher education may differ from those reinforced by established promotion processes and reward structures (Boyer, 1990; O’Meara, 2002; O’Meara et al., 2010). Q Methodology may also give voice to faculty who might otherwise not feel comfortable pushing against established frameworks that define scholarly productivity solely as research journal publications. This is a useful method, then, for gathering and including their points of view.

The third assumption is that the researcher’s goal is to examine a holistic viewpoint. Rather than breaking a construct into component parts and designing the study with controls to hold other conditions or influences constant, Q Methodology is able to compare each participant’s overall viewpoint to that of the other participants. The data analysis process does not examine the responses to any single item; it examines correlations in the overarching patterns that emerge (Brown, 1980). It is because of this aspect that Q Methodology studies tend to have high reliability. While participants’ responses to specific items might shift from test one to test two, the pattern representing their overarching perspective does not typically change (Brown, 1980; D’Agostino, 1984; Thomas & Baas, 1993).

Data Collection to Maintain Subjective Self-reference

The foundational assumptions are met through each aspect of study design. Data collection involves three steps (McKeown & Thomas, 1988): (a) develop a set of statements that represent a diversity of perspectives on the subject being studied (Q Set); (b) identify participants whose points of view are representative of the contexts relevant to the study (Person Set); and (c) gather the data through a card-sort process in which the research participants sort the statements in the Q Set in a way that represents their overall perspective (Q Sort).

The Q Set. The Q Set is a series of statements that represent as wide a variety of perspectives on the topic under investigation as possible. The development of these statements is analogous to population sampling: the “population” is the innumerable statements that could potentially relate to the topic of study, and “sampling” is the selection of items from that population of potential statements. The primary objective of item selection is to provide enough breadth and variety of items in the sample that participants can convey their unique point of view without being constrained by the researcher’s perspective or framework (Brown, 1980, 1986; McKeown & Thomas, 1988). For example, statements in the Q Set for the topic of faculty engagement might include, “My involvement in the community has influenced the direction of my research,” “My teaching is more current and relevant because I am engaged in the community,” or “Our students should work directly with community members, addressing their immediate needs.”

The Person Set. The study participants who will be classified into groups are referred to as the Person Set. Participant selection in Q is analogous to the way traditional quantitative research selects variables to test. The Person Set is carefully selected to represent the perspectives the researcher is seeking to understand; it is neither a random sample nor a large number of participants. In Q, the items represent the sample and the subjects represent the measure, so it is typical to have more items than participants (Brown, 1980). The appropriate number of participants to include in the Person Set is a debated issue. Several Q Methodology researchers argue that too many participants can be problematic because complexity and subtle nuances can be missed (Brown, 1980; Watts & Stenner, 2005). Most Q Methodology researchers agree that the clearest indicator of the validity of the participant groups is having at least 5–6 participants in each resulting factor, that is, in each group of participants that emerges from the analysis (Brown, 1980).

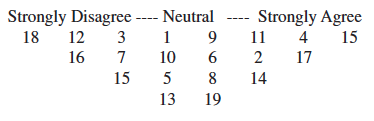

The Q Sort. To collect the data in Q Methodology, participants in the Person Set are presented with a set of index cards, with one statement from the Q Set printed on each card. The participants’ task is to sort the statements into stacks along a continuum to represent their degree of agreement with each statement relative to the other statements. Each stack can hold only a fixed number of cards, with more cards allowed in the stacks at the center of the continuum, creating a normal or bell-shaped curve. Each card has a unique, randomly assigned number that is used to record the finished sort, and the results are recorded as illustrated in Figure 2. In this example, the study participant has indicated that the statement on card #15 is the one with which she feels the strongest agreement. She agrees with the statements on cards #4 and #17, but these statements are not as important to her as #15. The data analysis process, typically using Principal Components Analysis, can then identify groupings of people based on patterns in the Q Sorts that indicate comparable ways in which the participants made sense of the Q Set items (Watts & Stenner, 2005).
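The recording step can be sketched in Python. The sketch assumes a hypothetical nine-statement Q Set and a forced distribution from -2 (strongest disagreement) to +2 (strongest agreement) holding 1, 2, 3, 2, and 1 cards; actual studies use many more statements and a wider range, and the layout in Figure 2 may differ. It checks that a recorded sort respects the forced distribution and then converts the column-by-column record into one score per card number, the form in which sorts are compared during analysis. The names `DISTRIBUTION`, `validate_q_sort`, and `grid_to_scores` are illustrative and not taken from any Q Methodology software.

```python
# Hypothetical forced distribution: column value -> number of cards allowed.
DISTRIBUTION = {-2: 1, -1: 2, 0: 3, 1: 2, 2: 1}


def validate_q_sort(grid, distribution, n_cards):
    """Check a recorded sort against the forced distribution.

    `grid` maps each column value to the list of card numbers placed there.
    Raises ValueError if the columns differ from the distribution, a column
    holds the wrong number of cards, or any card is missing or repeated.
    """
    if set(grid) != set(distribution):
        raise ValueError("grid columns do not match the distribution")
    for column, capacity in distribution.items():
        if len(grid[column]) != capacity:
            raise ValueError(
                f"column {column:+d} holds {len(grid[column])} cards, expected {capacity}"
            )
    placed = sorted(card for cards in grid.values() for card in cards)
    if placed != list(range(1, n_cards + 1)):
        raise ValueError("each card must be placed exactly once")


def grid_to_scores(grid):
    """Convert the column-by-column record into {card number: score}."""
    return {card: column for column, cards in grid.items() for card in cards}


# One hypothetical participant's finished sort, recorded column by column.
record = {2: [5], 1: [3, 8], 0: [1, 6, 9], -1: [2, 7], -2: [4]}

validate_q_sort(record, DISTRIBUTION, n_cards=9)
scores = grid_to_scores(record)
print(scores[5], scores[4])  # 2 -2
```

Because every column's capacity is fixed, every valid sort has the same mean and spread; what differs between participants is only which statements land in which columns, and that arrangement is the pattern the analysis compares across people.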

Through the sorting process, participants do not assign a discrete value to each statement in the Q Set; rather, they assign a level of agreement in relation to the other items. Participants sort the Q Set such that each item is ranked within the context of the other items (i.e., I agree with this item more than these items and less than those items). It is this feature that allows the data analysis to correlate overall patterns and holistic perspectives rather than rely on responses to any one specific item.

The sorting process also facilitates an important dynamic described by Brown (1980) as “psychological significance” (p. 198). Participants not only indicate their agreement or disagreement, but also which items are most salient to forming their point of view. In theory, it is possible that a participant might agree with every statement in the Q Set, but the Q Sort process will still identify which issues play a larger role in representing the participant’s overall point of view.

The sorting process addresses the assumption of internal self-reference because, unlike conventional standardized scales, it does not insert the researcher’s frames of reference. Through the sort, the participant (rather than the researcher) does the work of item reduction, with items sorted toward the middle being less important in the construction of the participant’s perspective. “It is one thing to ‘put’ something to a subject, as in the form of scale items; it is quite another to allow the subject to speak for himself” (Brown, pp. 44–45).

Limitations of Q Methodology

There are several aspects of Q that limit its usefulness for some research goals. Q studies, with small Person Sets compared to the number of Q Set statements, bear more resemblance to qualitative research when it comes to issues like generalization and prediction (Krathwohl, 2004). For example, if a Q study of community engaged faculty grouped the study participants into five types, it would be appropriate to generalize that those five points of view do exist in the general population, and that the faculty in those groups hold the subjective perspectives described by the study. However, it would not be appropriate to use the study results to generalize that a similar proportion of people in the population would classify into each group as did participants in the study (Brown, 1980; Thomas & Watson, 2002). As with most qualitative research, the purpose of Q Methodology is to provide rich descriptions that deepen understanding of the participants’ perspectives (Stephenson, 1953).

Other classification methods, such as cluster analysis, ultimately produce a set of exhaustive categories into which every participant is classified. The categories are also distinct, meaning they have high within-group homogeneity and high between-group heterogeneity (Hair, Anderson, Tatham, & Black, 1998; Morf, Miller, & Syrotuik, 1976; Thomas & Watson, 2002). Q makes no claim to produce either exhaustive or distinct categories (Stephenson, 1953). Since Q Methodology classifications are based on participants’ overall points of view rather than responses to specific variables, the resulting groups are more nuanced, allowing for the possibility that some participants might be moderately associated with more than one group (Morf et al.). As is true for most typologies, Q also does not provide a way to classify people in the population into the groups identified in the study (Bailey, 1994; McKeown & Thomas, 1988; Stephenson, 1953). Instead, Q offers rich descriptions that synthesize the issues that shape the perspective of each type, such that faculty in the general population can identify the type that aligns with their views. Q findings can, however, inform the creation of measurement scales that can determine a person’s type.
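One way the moderate association with more than one group shows up in practice is through factor loadings. A common convention in Q studies treats the standard error of a participant's loading on a factor as roughly 1/√N, where N is the number of statements, so a loading larger in magnitude than about 1.96/√N is taken as significant at p < .05. The Python sketch below applies that rule to hypothetical loadings: a participant may load significantly on one factor (a defining sort), on none, or on more than one (often called a confounded sort). The 40-statement Q Set, the loadings, and the function names are assumptions for illustration; published studies often add further criteria, so this is a sketch rather than a complete flagging procedure.

```python
import math


def significance_threshold(n_statements, z=1.96):
    """Loading magnitude needed for significance, using SE = 1 / sqrt(N)."""
    return z / math.sqrt(n_statements)


def flag_sorts(loadings, n_statements, z=1.96):
    """List, for each participant, the factors they load on significantly.

    `loadings` maps a participant to one loading per factor. Returns
    participant -> list of 0-based factor indices whose |loading| exceeds
    the threshold. A significant negative loading indicates the mirror
    image of that factor's viewpoint rather than agreement with it.
    """
    threshold = significance_threshold(n_statements, z)
    return {
        person: [i for i, value in enumerate(row) if abs(value) > threshold]
        for person, row in loadings.items()
    }


# Hypothetical loadings on two factors for a 40-statement Q Set.
loadings = {
    "P1": [0.72, 0.10],   # significant on factor 0 only: defining sort
    "P2": [0.05, 0.64],   # significant on factor 1 only: defining sort
    "P3": [0.45, 0.41],   # significant on both: confounded sort
    "P4": [0.12, -0.20],  # significant on neither
}

print(round(significance_threshold(40), 3))  # 0.31
print(flag_sorts(loadings, 40))
# {'P1': [0], 'P2': [1], 'P3': [0, 1], 'P4': []}
```

Rather than forcing P3 into a single group, as an exhaustive classification would, the sort is simply reported as associated with both viewpoints.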

Potential Contributions from Q Methodology to Understanding Faculty Engagement

Given the list of factors and dimensions that affect faculty engagement (Demb & Wade, 2012), a classification system developed with Q Methodology and grounded in engaged faculty members’ holistic, internal frameworks may offer new insights into engagement. Current literature relies on externally observed variables like discipline, contract type, or engaged practices; however, this reliance may unintentionally limit understanding. For example, as with the physicist and social worker mentioned earlier, a tenured faculty member in sociology who conducts community-based research and an adjunct faculty member in mathematics who uses service-learning pedagogy may, because of their shared epistemology, beliefs about student learning, and personal connections to the community, have much more in common with each other in how they connect their scholarly work with the community than they do with others in their respective departments.

If patterns emerge across the complex combination of influencing factors for faculty engagement (Demb & Wade, 2012), those patterns may help explain why it can be difficult to describe CES and why the field has struggled to find a common definition of engaged scholarship. They might also help prevent misunderstandings among colleagues who represent different perspectives, or foster more open dialogue about not only what “counts” as engaged work but also why it counts. Moreover, a classification system based on shared patterns in an overall internal framework might make it possible for administrators and colleagues to more quickly understand differing points of view, offer more customized supports and policies for engaged work, and invest resources in ways that deliver the best return.

The potential benefit of building a supportive community of engaged faculty cannot be overstated. Calls for higher education faculty to be more collaborative, integrating knowledge across disciplines to address community concerns, have been consistent (Boyer, 1990; Fitzgerald et al., 2016; Pearl, 2015). Such a community is not created simply by gathering scholars in a room; it requires fostering relationships built on mutual understanding and appreciation of one another’s contributions. As Boyer (1990) noted, the scholarship of integration speaks to an entire process of collaboration throughout a particular project. A typology of community engaged faculty with rich descriptions of the various points of view could help faculty from different fields recognize the perspectives they share. It could also help faculty appreciate the benefits of working with colleagues whose perspectives on the work differ greatly from their own, creating opportunities for complementarity, innovation, and genuinely interdisciplinary work.

Conclusion

We began this article with Boyer’s (1990) call to strengthen institutional diversity so that notions of faculty work can be redefined in ways that allow faculty to use their talents to meet the needs of society and uphold the purpose of higher education. Institutional diversity includes recognizing the variety of ways that faculty partner with the community and collaboratively generate new knowledge that informs our collective theories, practice, and research. Given the myriad combinations of factors and dimensions that affect faculty engagement, as well as the strengths and limitations of existing typologies, it is worth reconsidering how we can manage the complexities surrounding faculty engagement while still honoring unique faculty perspectives. Q Methodology offers a way to examine faculty engagement from faculty members’ own perspectives rather than through researchers’ interpretations of those perspectives. This approach opens a new way to explore whether there are patterns of faculty engagement that more traditional or reductive approaches might miss. The results of such an inquiry may complement, refine, or even challenge existing conceptualizations of the factors that affect faculty engagement, which in turn can inform practice and strengthen higher education’s ability to embody its civic aims.

References

- Bailey, K. D. (1994). Typologies and taxonomies: An introduction to classification techniques (Sage University Paper series on Quantitative Applications in the Social Sciences, series no. 07–102). Thousand Oaks, CA: Sage. https://doi.org/10.4135/9781412986397

- Barker, D. (2004). The scholarship of engagement: A taxonomy of five emerging practices. Journal of Higher Education Outreach and Engagement, 9(2), 123–137.

- Becher, T. (1987). The disciplinary shaping of the profession. In B. R. Clark (Ed.), The academic profession: National, disciplinary, and institutional settings (pp. 271–303). Berkeley, CA: University of California Press.

- Boyer, E. L. (1990). Scholarship reconsidered: Priorities of the professoriate. Stanford, CA: The Carnegie Foundation for the Advancement of Teaching.

- Boyer, E. L. (1996). The scholarship of engagement. Bulletin of the American Academy of Arts and Sciences, 49(7), 18–33. https://doi.org/10.2307/3824459

- Bringle, R. G., Games, R., & Malloy, E. A. (1999). Colleges and universities as citizens. Boston: Allyn & Bacon.

- Bringle, R. G., Hatcher, J. A., & Holland, B. (2007). Conceptualizing civic engagement: Orchestrating change at a metropolitan university. Metropolitan Universities, 18(3), 57–74.

- Brown, S. R. (1980). Political subjectivity: Applications of Q Methodology in political science. New Haven, CT: Yale University Press.

- Brown, S. R. (1986). Q technique and method: Principles and procedures. In W. D. Berry & M. S. Lewis-Beck (Eds.), New tools for social scientists: Advances and applications in research methods (pp. 57–76). London, UK: Sage.

- Brown, S. R. (1997). The history and principles of Q Methodology in psychology and the social sciences. Retrieved from http://facstaff.uww.edu/cottlec/QArchive/Bps.htm

- Brown, S. R. (2006). A match made in heaven: A marginalized methodology for studying the marginalized. Quality & Quantity, 40(3), 361–382. https://doi.org/10.1007/s11135-005-8828-2

- Burawoy, M. (2005). For public sociology. American Sociological Review, 70(1), 4–28. https://doi.org/10.1177/000312240507000102

- Buzinski, S. G., Dean, P., Donofrio, T. A., Fox, A., Berger, A. T., Heighton, L. P., et al. (2013). Faculty and administrative partnerships: Disciplinary differences in perceptions of civic engagement and service-learning at a large, research-extensive university. Partnerships: A Journal of Service-Learning & Civic Engagement, 4(1), 45–75.

- Colbeck, C. L., & Wharton-Michael, P. (2006). Individual and organizational influences on faculty members’ engagement in public scholarship. New Directions for Teaching and Learning, 105, 17–26. https://doi.org/10.1002/tl.221

- D'Agostino, B. (1984). Replicability of results with theoretical rotation. Operant Subjectivity, 7, 81–87.

- Delve, C. I., Mintz, S. D., & Stewart, G. M. (1990). Promoting values development through community service: A design. In C. I. Delve, S. D. Mintz, & G. M. Stewart (Eds.), Community service as values education. New Directions for Student Services #50 (pp. 7–29). San Francisco: Jossey-Bass. https://doi.org/10.1002/ss.37119905003

- Demb, A., & Wade, A. (2012). Reality check: Faculty involvement in outreach and engagement. The Journal of Higher Education, 83(3), 337–366. https://doi.org/10.1353/jhe.2012.0019

- Doty, H. D., & Glick, W. H. (1994). Typologies as a unique form of theory building: Toward improved understanding and modeling. Academy of Management Review, 19(2), 230–251.

- Driscoll, A., & Lynton, E. A. (1999). Making outreach visible: A guide to documenting professional service and outreach. Presented at the AAHE Forum on Faculty Roles and Rewards, Washington, D.C.: American Association for Higher Education.

- Fear, F., & Sandmann, L. R. (1995). Unpacking the service category: Reconceptualizing university outreach for the 21st century. Continuing Higher Education Review, 59(3), 110–122.

- Fitzgerald, H. E., Bruns, K., Sonka, S., Furco, A., & Swanson, L. (2016). The centrality of engagement in higher education. Journal of Higher Education Outreach and Engagement, 20(1), 223–243.

- Furco, A. (2010). The engaged campus: Toward a comprehensive approach to public engagement. British Journal of Educational Studies, 58(4), 375–390. https://doi.org/10.1080/00071005.2010.527656

- Giles, D. E. (2008). Understanding an emerging field of scholarship: Toward a research agenda for engaged, public scholarship. Journal of Higher Education Outreach and Engagement, 12(2), 97–106.

- Glass, C., Doberneck, D., & Schweitzer, J. (2011). Unpacking faculty engagement: The types of activities faculty members report as publicly engaged scholarship during promotion and tenure. Journal of Higher Education Outreach and Engagement, 15(1), 7–30.

- Glassick, C. E., Huber, M. T., & Maeroff, G. I. (1997). Scholarship assessed: Evaluation of the professoriate. San Francisco: Jossey-Bass.

- Hair, J. F., Anderson, R. E., Tatham, R. L., & Black, W. C. (1998). Multivariate data analysis (5th ed.). Delhi, India: Pearson Education.

- Janke, E. M., & Colbeck, C. L. (2008). An exploration of the influence of public scholarship on faculty work. Journal of Higher Education Outreach and Engagement, 12(1), 31–46.

- Janke, E., Medlin, K., & Holland, B. (2015, November). Collecting scattered institutional identities into a unified vision for community engagement and public service. Paper presented at the meeting of the International Association for Research on Service-Learning and Community Engagement Conference, Boston.

- Jay, R. L. (1969). Q technique factor analysis of the Rokeach dogmatism scale. Educational and Psychological Measurement, 29(1), 453–459. https://doi.org/10.1177/001316446902900223

- Kellogg Commission. (1999). Returning to our roots: The engaged institution. Kellogg Commission on the Future of State and Land-Grant Universities, National Association of State Universities and Land-Grant Colleges.

- Kezar, A. J., Chambers, A. C., & Burkhardt, J. C. (Eds.). (2005). Higher education for the public good: Emerging voices from a national movement. San Francisco: Jossey-Bass.

- Krathwohl, D. R. (2004). Methods of educational and social science research: An integrated approach. Long Grove, IL: Waveland Press.

- McKeown, B., & Thomas, D. (1988). Q Methodology. Newbury Park, CA: Sage. https://doi.org/10.4135/9781412985512

- Morf, M. E., Miller, C. M., & Syrotuik, J. M. (1976). A comparison of cluster analysis and Q factor analysis. Journal of Clinical Psychology, 32(1), 59–64. https://doi.org/10.1002/1097-4679(197601)32:1<59::AID-JCLP2270320116>3.0.CO;2-L

- Newman, F. (1985). Higher education and the American resurgence. Princeton, NJ: Carnegie Foundation for the Advancement of Teaching.

- O’Meara, K. (2002). Uncovering the values in faculty evaluation of service as scholarship. The Review of Higher Education, 26(1), 57–80. https://doi.org/10.1353/rhe.2002.0028

- O’Meara, K. (2005). Effects of encouraging multiple forms of scholarship nationwide and across institutional types. In K. O’Meara & R. E. Rice (Eds.), Faculty priorities reconsidered: Rewarding multiple forms of scholarship (pp. 255–289). San Francisco: Jossey-Bass.

- O'Meara, K. (2008). Graduate education and community engagement. New Directions for Teaching and Learning, 113, 27–42.

- O’Meara, K., & Niehaus, E. (2009). Service-learning is... How faculty explain their practice. Michigan Journal of Community Service Learning, 16(1), 17–32.

- O’Meara, K., & Rice, R. E. (2005). Faculty priorities reconsidered: Rewarding multiple forms of scholarship. San Francisco: Jossey-Bass.

- O'Meara, K., Sandmann, L. R., Saltmarsh, J., & Giles, D. E. (2010). Studying the professional lives and work of faculty involved in community engagement. Innovative Higher Education, 36, 83–96. https://doi.org/10.1007/s10755-010-9159-3

- Peterson, R. S., Owens, P. D., & Martorana, P. F. (1999). The group dynamics Q Sort in organizational research: A new method for studying familiar problems. Organizational Research Methods, 2, 107–139. https://doi.org/10.1177/109442819922001

- Post, M. A., Ward, E., Longo, N. V., & Saltmarsh, J. A. (Eds.). (2016). Publicly engaged scholars: Next generation engagement and the future of higher education. Sterling, VA: Stylus Publishing.

- Rice, R. E. (2002). Beyond scholarship reconsidered: Toward an enlarged vision of the scholarly work of faculty members. New Directions for Teaching and Learning, 90, 7–17.

- Rice, R. E., Sorcinelli, M. D., & Austin, A. E. (2000). Heeding new voices: Academic careers for a new generation. Washington, DC: American Association for Higher Education.

- Saltmarsh, J. (2011). Engagement and epistemology. In J. Saltmarsh & E. Zlotkowski (Eds.), Higher education and democracy: Essays on service-learning and civic engagement (pp. 342–353). Philadelphia: Temple University Press.

- Sandmann, L. R. (2008). Conceptualization of the scholarship of engagement in higher education: A strategic review, 1996–2006. Journal of Higher Education Outreach and Engagement, 12(1), 91–104.

- Sandmann, L. R. (2009). Placing scholarly engagement “on the desk.” Research University Engaged Scholarship Toolkit. Boston: Campus Compact.

- Stanton, T. K. (2008). New times demand new scholarship: Opportunities and challenges for civic engagement at research universities. Education, Citizenship, and Social Justice, 3(1), 19–42. https://doi.org/10.1177/1746197907086716

- Stephenson, W. (1953). The study of behavior: Q technique and its methodology. Chicago: The University of Chicago Press.

- Strand, K., Marullo, S., Cutforth, N., Stoecker, R., & Donohue, P. (2003). Community based research and higher education: Principles and practices. San Francisco: Jossey-Bass.

- Thomas, D. B., & Baas, L. R. (1993). The issue of generalization in Q Methodology: "Reliable schematics" revisited. Operant Subjectivity, 16(1/2), 18–36.

- Thomas, D. M., & Watson, R. T. (2002). Q Sorting and MIS Research: A primer. Communications of the Association for Information Systems, 8(1), 141–156.

- Townson, L. (2009). Engaged scholarship at land-grant institutions: Factors affecting faculty participation. (Doctoral dissertation). Retrieved from Dissertations and Theses database. (UMI No. 3363733)

- Wade, A., & Demb, A. (2009). A conceptual model to explore faculty community engagement. Michigan Journal of Community Service Learning, 15(2), 5–16.

- Watts, S., & Stenner, P. (2005). Doing Q Methodology: Theory, method and interpretation. Qualitative Research in Psychology, 2(1), 67–91. https://doi.org/10.1191/1478088705qp022oa

- Zlotkowski, E. (2011). Social crises and the faculty response. In J. Saltmarsh & E. Zlotkowski (Eds.), Higher education and democracy: Essays on service-learning and civic engagement (pp. 13–27). Philadelphia: Temple University Press.

Authors

EMILY MORRISON ([email protected]) is the director of the Human Services and Social Justice Program and an assistant professor of Sociology at The George Washington University in Washington, DC. Before joining the faculty, she directed volunteer and service-learning programs at both the undergraduate and graduate levels and founded a 501(c)(3) nonprofit organization focused on health education. Morrison received a B.S. in Psychology from Kansas State University, an M.A. in College Student Personnel from the University of Maryland, and her Ed.D. in Human and Organizational Learning from The George Washington University.

WENDY WAGNER ([email protected]) is the Honey W. Nashman Faculty Fellow at the Honey W. Nashman Center for Civic Engagement and Public Service, as well as a visiting assistant professor in Human Services and Social Justice at The George Washington University in Washington, DC. Prior to her current roles, she was the Director of the Center for Leadership and Community Engagement at George Mason University. Wagner received a B.A. in Communication Studies from the University of Nebraska-Lincoln, an M.A. in College Student Personnel from Bowling Green State University, and her Ph.D. in College Student Development from the University of Maryland-College Park.