Revisiting Request for Adminship (RfA) within Wikipedia: How Do User Contributions Instill Community Trust?


This work is licensed under a Creative Commons Attribution 3.0 License. Please contact [email protected] to use this work in a way not covered by the license.


Abstract

Research into successful Request for Adminship (RfA) within Wikipedia is primarily focused on the impact of the relationship between adminship candidates and voters on RfA success. Very few studies, however, have investigated how candidates’ contributions may predict their success in the RfA process. In this study, we examine the impact of content and social contributions as well as total contributions made by adminship candidates on the community's overall decision as to whether to promote the candidate to administrator. We also assess the influence of clarity of contribution on RfA success. To do so, we collected data on 754 RfA cases and used logistic regression to test four hypotheses. Our results highlight the important role that user contribution behaviors and activity history have on the user’s success in the RfA process. The results also suggest that tenure and number of RfA attempts play a role in the RfA process. Our findings have implications for theory and practice.

Keywords

Wikipedia, Request for Adminship (RfA), social contribution, content contribution, administrators, trust

Introduction

Wikipedia, self-billed as The Free Encyclopedia, is an online collaborative project that provides a wide range of freely available encyclopedic articles on different topics from medicine and health to history and psychology (Burke and Kraut, 2008b; Mesgari, Okoli, Mehdi, Nielsen, and Lanamäki, 2015). As of October 25, 2015, this website contained more than 37 million articles, out of which nearly 5 million articles were written in English ("Size of Wikipedia," 2015). The widespread use of Wikipedia has also revolutionized the encyclopedia section of the publishing industry. For instance, Britannica stopped publishing their popular encyclopedia in 2010 after 244 years (Nazaryan, 2012). The fact that people are increasingly depending on Wikipedia as an online encyclopedia highlights the importance of paying special attention to content quality assurance on that website.

Wikipedia is made possible by a vast network of independent volunteers located around the world. Volunteers, also known as editors or users, perform a variety of tasks such as article creation and maintenance as well as engage in online conversations regarding the articles (Kaplan and Haenlein, 2014; Viegas, Wattenberg, Kriss, and Van Ham, 2007; Welser et al., 2011). While anybody can register for an account on Wikipedia and contribute to the project, certain tasks are limited to those whom the community has deemed trustworthy. Activities such as modifying the main page, deleting inappropriate content, blocking users, and changing user login credentials are restricted to a class of volunteers known as administrators. As of October 25, 2015, Wikipedia had nearly 27 million users, out of which 1,331 users were administrators ("Size of Wikipedia," 2015). Administrators ensure the quality of the content generated on the Wiki pages (aka, articles). This quality control is a key factor in the success of Wikipedia as with any open source project (Benkler, 2002).

Wikipedia administrators are elected through a step-by-step election process. This process starts with a week-long Request for Adminship (RfA) period, during which a registered editor desiring to become an administrator submits themselves to the community for assessment (Burke and Kraut, 2008a; Derthick et al., 2011). Then, the community decides on whether or not to promote the candidate to an administrator position. RfA success in Wikipedia is important because the outcomes of this peer-review process determine what types of users with what characteristics are typically given policing rights and privileges in the community. Understanding the factors behind RfA success can enable the community providers to provide adminship candidates with information about how they can enhance the likelihood of their success in the promotion process. The candidates can accordingly align their activities within the community, as much as possible, with what the community expects from a successful adminship candidate. This will make the entire RfA process more transparent to the candidates and will allow them to make a more informed decision on when and how to become a candidate for adminship within Wikipedia. Accordingly, in this study, we aim to shed light on the factors that can significantly impact RfA success in Wikipedia. In particular, we investigate the role of user contributions to the community on the community’s trust in the user to become an administrator. Thus, the research question that we seek to address in this study is:

- What aspects of one’s contributions to Wikipedia can determine one’s success in the RfA process?

The results of this study will provide insights into RfA and similar voting processes within online communities for community developers, providers, administrators, and managers. The findings will also offer contributions to research in areas such as virtual communities and social processes within online social networks, online communities of practice, and open source projects.

The remainder of this paper is organized as follows. The second section provides a background on content quality on Wikipedia, community trust, and RfA success. The third section presents the research framework and hypotheses. The fourth section describes the method. The fifth section discusses the results of our analysis. Finally, the last two sections provide a discussion and conclusion.

Background

Content Quality on Wikipedia

Wikipedia administrators are in charge of ensuring the quality of content generated on that website. Content quality in the context of Wikipedia pertains to the comprehensiveness, reliability, readability, and currency of Wikipedia articles (Mesgari et al., 2015). Comprehensiveness refers to an article's breadth and depth of coverage and the amount of detail it includes (Lewandowski and Spree, 2011). This aspect of content quality is crucial because it determines the extent to which human knowledge is represented in the encyclopedia. More comprehensive articles increase the overall content readership and viability of the community (Mesgari et al., 2015). Wikipedia administrators can help enhance the comprehensiveness of articles by ensuring that important content and details are not intentionally or unintentionally deleted by users. If this happens, administrators can use their automated revision tool to quickly restore deleted pages or revert those pages to an earlier, more comprehensive version (“Administrators/Tools,” 2015).

The second aspect of quality in Wikipedia, namely reliability, accuracy, or freedom of errors, has always been a major concern for Wikipedia users due to the user-generated nature of content on the website (Mesgari et al., 2015). Interestingly, prior studies have found Wikipedia articles to be reasonably accurate (Kim, Gudewicz, Dighe, and Gilbertson, 2010; West and Williamson, 2009), even in comparison with other credible encyclopedias such as Britannica (Fallis, 2008; Giles, 2005). The acceptable level of accuracy and reliability of content in Wikipedia is, to a great extent, due to the fact that administrators monitor the content generated on that website and delete the pages that do not meet specific reliability criteria. Administrators can also see the pages that have been deleted by other users and restore those pages, if necessary. Therefore, reliability is perhaps the most significant aspect of contributions made by administrators to the quality of content in Wikipedia.

Readability is the third aspect of content quality in Wikipedia. It measures how well the articles are written, composed, structured, and presented to the users (Ehmann, Large, and Beheshti, 2008; Purdy, 2009). Although Wikipedia administrators are not in charge of ensuring the quality of writing, they have exclusive privileges to edit the style of the interface and presentation on the website by changing Cascading Style Sheets (CSS) or editing JavaScript code (“Administrators/Tools,” 2015). This may contribute to the overall readability of the articles on Wikipedia.

Currency (aka, up-to-dateness) is the fourth dimension of content quality on Wikipedia; it needs to be ensured primarily by the users rather than the administrators. Thus, administrators may play a less significant role in improving the currency of content generated on that website. All in all, the role of Wikipedia administrators in ensuring the quality of content, in particular the reliability, comprehensiveness, and readability of articles, stresses the importance of electing committed, highly engaged, and trustworthy users as administrators through the RfA process.

Online Community Trust in Administrators

Trustworthiness of Wikipedia administrators has its roots in the general concept of one-to-one trust in online communities, which is cited as an important factor in enhancing the quality of user communications and increasing the reliability and performance of virtual environments (Sabater and Sierra, 2005). Trust is conceptualized differently in different domains. In a seminal work, Jøsang, Ismail, and Boyd (2007) distinguished two forms of trust: reliability trust and decision trust. Reliability trust “is the subjective probability by which an individual, A, expects that another individual, B, performs a given action on which its welfare depends” (p.3). Decision trust, in contrast, accounts for the possible negative consequences of depending on an individual and is defined as “the extent to which one party is willing to depend on something or somebody in a given situation with a feeling of relative security, even though negative consequences are possible” (p.4). The authors also argued that trust, particularly in social networks, is determined by one’s reputation in that network. Reputation can therefore be considered “a collective measure of trustworthiness (in the sense of reliability) based on the referrals or ratings from members in a community” (p.5). Similarly, other studies suggest that one-to-one trust in virtual settings is formed and maintained through the reputation that community members develop (Maloney-Krichmar and Preece, 2005; Preece, 2000). In online communities, members may trust one another based on their direct interactions or indirect observations of each other’s activities on those websites. Consequently, members who are deemed trustworthy by a majority of users may be given special roles that permit them to perform tasks associated with those roles.

In Wikipedia, administrators are elected through a peer-review process. Success in this process is determined by the community’s collective trust in the candidates as to whether they can accomplish their duties properly. This collective trust is a major decision factor in the election process because administrators are granted additional power, special privileges, and policing rights within the website (Hergueux, Algan, Benkler, and Morell, 2014). To help voters recognize trustworthy users within Wikipedia and to make the RfA process more objective, historical records of users’ activities and contributions to the community are normally provided to the public. Those records, if positive, could help form a member’s reputation in the community, which can ultimately lead to the community’s trust in that member and to electing him or her as an administrator. From that perspective, a candidate’s success in the RfA process can be considered an indication of the community’s trust in them. In this study, we adopt that perspective to understand the determinants of RfA success in Wikipedia.

RfA Success

Prior studies have adopted different perspectives to investigate RfA success and user promotion within Wikipedia. Some researchers believe that RfA success does not merely depend on the candidate’s contributions, but on the relationship between the candidate and each single voter. Leskovec, Huttenlocher, and Kleinberg (2010) discussed that a voter is more likely to support a candidate in an RfA process if there is a relationship between their characteristics, such as the total number of edits they have made, and the awards that they have received from other members of the community. Similarly, Jankowski-Lorek, Ostrowski, Turek, and Wierzbicki (2013) showed that similarity of experience between a voter and a candidate as well as the number of common topics in which they have edited on Wikis can increase the likelihood of the voter’s support for that candidate.

Other studies have adopted a social network perspective to examine the RfA voting process within Wikipedia and predict candidates’ success in that process. Cabunducan, Castillo, and Lee (2011), for example, viewed the RfA process through a social network lens and found that voters’ decisions on an RfA were significantly influenced by the actions of their contacts. Their results also revealed that candidates who could attract an influential coalition’s support in the RfA process would more likely succeed in the election process. Desai, Liu, and Mullings (2014) analyzed the social network structure of the Wikipedia community and discussed how the votes made by a small subset of influential voters within that community can, to a great extent, predict the community’s collective decision on a candidate’s RfA.

The aforementioned studies, however, have primarily focused on the factors that are not solely dependent on the candidate’s characteristics and actions within Wikipedia, but on the factors that are partially dependent on the relationship between the candidate and other members of the community, or the social network features of the community itself. Nonetheless, very few studies have specifically focused on the impact of one’s contribution behaviors and history of activities on Wikipedia on one’s success in the RfA process. This is important because edit histories are among the first and most accessible information that voters can use and rely on to determine if they should trust one to become an administrator in the community. One of the respondents in Derthick et al. (2011) who was asked how they make sure that a candidate has merit to become an administrator indicated that:

“I check their edits. I see what they’ve edited, where they’ve edited. Like if you’ve only got a few hundred edits, you’re likely not completely familiar with Wikipedia enough to become admin” (p. 7).

Among those few studies that have examined what candidate characteristics and contribution behaviors are reliable determinants of a successful promotion, Burke and Kraut (2008a) found that strong edit history and varied experience in terms of breadth score, user interaction, and edit summaries could significantly impact a candidate’s success in the RfA process. However, the authors of that study did not divide candidates’ contributions into different forms such as content and social contributions. Content contribution in the context of Wikipedia can be defined as users' participation in the creation and maintenance of Wikipedia articles. Social contribution, in contrast, refers to engaging in one-to-one or many-to-many discussions revolving around Wikipedia articles. Those discussions are a key factor in improving the articles and achieving consensus on their content.

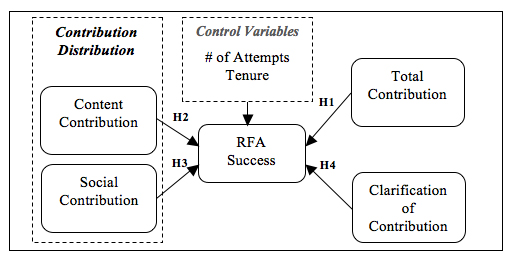

To extend Burke and Kraut’s (2008a) work, this study examines how content contribution and social contribution can each influence one’s success in the RfA process. We also examine the role of total contribution (content and social) as well as clarification of contribution in terms of a user’s explanations on what edits they have made on a Wiki page in the RfA success. It is worth mentioning that social contribution in this study is completely different from prior studies’ take on social factors in the RfA process. As discussed earlier, in the prior studies, researchers mainly focused on one-to-one relationships between adminship candidates and voters or the features of one’s social network in the community, whereas the social factor under examination in this study is related to the extent to which one contributes to discussion threads on Wikipedia.

Research Framework and Hypotheses

Various statistics on user contribution and edit histories are provided to the Wikipedia users who will evaluate one’s request for promotion in the community. This information contains different values, each of which belongs to a specific facet of the candidate's contribution that can be used by the voters as a basis for supporting or opposing a candidate. Total contribution refers to all the activities one performs in the community including offering content and social contributions to the community. A high level of total contribution can indicate that a user has overall been highly engaged and highly active in the community and has made efforts to help the community achieve its missions. This can provide a reason for the voters to trust that user in performing administrative tasks effectively and committedly, as Wikipedia administrators are in fact defined as “a particular class of highly engaged Wikipedia contributors” (Algan, Benkler, Morell, and Hergueux, 2013; p.4). As a result, a higher level of collective trust in a candidate can lead to a higher likelihood of their success in the RfA process. Thus, we hypothesize:

H1: There is a positive relationship between total contribution and success in the RfA process.

Although total contribution can demonstrate the candidate's commitment and trustworthiness, a particular area of concern is the perception of editcountitis and entitlement (Collier, Burke, Kittur, and Kraut, 2008). That is, those who have exceptionally large numbers of edits on articles, or have been members of the project for lengthy periods of time, may feel entitled to be elected as administrators. As a result, editors desiring to become administrators may engage in editing behavior that inflates their edit counts. These activities include making many trivial edits (e.g., changing capitalization of a letter on thousands of pages), and making a few insignificant edits daily for lengthy periods of time (e.g., making a few minor changes each day for a year). Therefore, the voters may not rely on total contribution as the only indicator of the candidate's merit. Instead, they may take into account how the candidate's contribution is distributed across each type of collaborative effort, including content contribution and social contribution, and make their final decision accordingly.

Content contribution measures the total number of times a user has created or edited an article. Writing freely-available articles has been the main purpose of creating Wikipedia. Thus, contributing to articles implies that one feels determined to engage in writing and adding articles to the encyclopedia and enhancing the quality of existing articles by making edits on them. Highly active users in terms of content contribution can be seen by the community as committed users who can lead the Wikipedia open source project and take the role of administrator effectively. Thus, we hypothesize:

H2: There is a positive relationship between content contribution and RfA success.

As discussed earlier, social contribution pertains to editor discussions that revolve around improving the content of articles within Wikipedia. Those computer-mediated conversations can improve the overall quality of articles because the more people discuss what they think should and should not be included in the articles, and the more they provide reasoning for what they think is correct, the more reliable the content of the articles will become. Although social contribution is not the major mission of the Wikipedia community, it can complement the activities one performs in terms of content contribution. Therefore, active social contributors can receive a higher level of support from the community in the RfA process. We hypothesize:

H3: There is a positive relationship between social contribution and RfA success.

The research conducted by Burke and Kraut (2008a) identified edit summary usage as a factor that significantly influences RfA success. Edit summary usage measures the information users provide about each contribution they make. Users with low edit summary usage generally provide no summary of their actions, decreasing the transparency of the project's work and making it more difficult for other users to summarize their work. This lack of activity transparency may work against candidates in two ways. First, by not providing enough explanations, users miss a key opportunity to improve their recognition by emphasizing what they do and how they contribute to the community. Second, lack of transparency may imply that one is not committed enough to put adequate time and effort into providing clear explanations for their activities. This may be perceived as laziness. Therefore, those who clarify what they do can hypothetically have a higher chance of being elected by the community as an administrator. In this study, we conceptualize clarification of contribution as providing explanations on contributions to articles. We hypothesize:

H4: There is a positive relationship between clarification of contribution and RfA success.

Prior studies suggest that number of attempts and tenure (days since a user has registered on Wikipedia) may play a role in one’s success in the RfA process (Burke and Kraut, 2008a; Jankowski-Lorek, Ostrowski, Turek, and Wierzbicki, 2013). Burke and Kraut (2008a), for example, found that every eight additional months of experience on Wikipedia could increase the chance of a candidate’s promotion by 3%. The results of that study also revealed that each additional attempt in the RfA process would decrease one’s likelihood of success by 11.8%. Accordingly, we include tenure and number of attempts as control variables in our model. However, we do not propose any hypotheses related to those variables as they are not the main focus of this study. Figure 1 presents the research framework and hypotheses.

Method

In order to better understand how we operationalize the constructs and collect data to test the hypotheses, we first describe the RfA process in more detail.

RfA Process

Each RfA process starts with a week-long RfA period, during which a registered editor desiring to become an administrator submits themselves to the community for assessment (Burke and Kraut, 2008a; Derthick et al., 2011). To do so, the candidate creates a request page and answers a set of predefined questions agreed upon by the community. After an RfA has been presented to the community, a community member posts a set of descriptive statistics on the candidate’s history with the project, providing an easily accessible, high-level summary of how the candidate has contributed. Those summary statistics show how long the candidate has been registered (tenure), the number of contributions he or she has made to various areas of the project, and how consistently the candidate follows measurable desirable behaviors, such as providing a descriptive summary of every change. The same statistics are provided to all community members for every user who starts the RfA process. To assess whether a candidate is suitable for promotion, community members are encouraged to review the candidate’s edit history and the cumulative contribution that the candidate has made to the project. Voters can then use this information as a basis for voting either Support or Oppose on each RfA. At the end of the process, a member of a very limited, highly trusted, and well-established group of volunteers analyzes the outcome to determine community consensus. Candidates who receive an appropriate level of support from the community (usually greater than 70% support) are promoted to administrators.
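The support threshold mentioned above can be illustrated with a short sketch. It assumes that the support level is computed as the share of Support votes among Support and Oppose votes, which the text does not spell out, and the function names and vote tallies are hypothetical. Because the final outcome rests on a human reading of community consensus, the 70% figure is treated here only as a rough guide.

```python
def support_ratio(support: int, oppose: int) -> float:
    """Share of Support votes among Support + Oppose votes (assumed definition)."""
    total = support + oppose
    if total == 0:
        raise ValueError("no Support or Oppose votes recorded")
    return support / total


def likely_promoted(support: int, oppose: int, threshold: float = 0.70) -> bool:
    """Rough heuristic only: the actual decision is a judgment of consensus."""
    return support_ratio(support, oppose) > threshold


# Hypothetical vote tallies, not taken from the study's data.
print(likely_promoted(85, 15))  # True  (85% support)
print(likely_promoted(60, 40))  # False (60% support)
```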

At the core of the RfA evaluation process is the permanent record of every change that a volunteer makes, called an edit. An edit represents any change made to any editable page within the project (Zhao and Bishop, 2011). For example, the removal of an extra comma and the writing of a new article could both be considered a single edit. Furthermore, contributions to coordinative and social aspects of the project also count as edits. The total edit count is the sum of all edits a volunteer has made to the project, regardless of size, quality, and currency, and is usually the first summary statistic posted once a candidate has started their RfA. Some Wikipedia users (aka, Wikipedians) have made more than one million edits on Wikipedia articles ("List of Wikipedians by number of edits," 2011). The total edit count has obvious problems as a summary statistic, chief among them that it says nothing about the quality of the contribution. To illustrate, a volunteer could trivially perform 10,000 edits that each add a single space to the end of an article. The magnitude of that volunteer’s contribution would then appear large according to the total edit count, even though the volunteer has made no actual value-adding contribution to the project. A remedy to this issue is to consider contribution to namespaces as a supplemental measure of contributions.

Namespaces are divisions of contributions to the Wikipedia project and are used for maintaining specific tasks and housing specific content (Kriplean, Beschastnikh, and McDonald, 2008; Viegas et al., 2007). For instance, Main namespace “contains all encyclopedia articles, lists, disambiguation pages, and encyclopedia redirects” ("Namespaces," 2015), whereas User namespace “contains user pages and other pages created by individual users for their own personal use” ("Namespaces," 2015) and Wikipedia namespace “contains many types of pages connected with the Wikipedia project itself: information, policy, essays, processes, discussion, etc.” ("Namespaces," 2015). In fact, Main, User, and Wikipedia are three of the major namespaces commonly used in the Wikipedia project (Aaltonen and Lanzara, 2015; Panciera, Halfaker, and Terveen, 2009).

Each namespace (e.g., Main and User) is associated with two pages: a subject namespace/page, which contains the article itself, and a Talk namespace/page, which is an area dedicated to coordination between asynchronous editors and discussions on the content of the associated subject page (Viegas et al., 2007). For instance, the talk page for discussing improvements to the article Australia is named Talk:Australia and is used by editors for discussing how to enhance the quality of that article in terms of different quality dimensions. Thus, Main, User, and Wikipedia namespaces are associated with Main Talk, User Talk, and Wikipedia Talk pages, respectively. These six namespaces, which include subject namespaces and their associated talk namespaces, and the content within them reflect a major portion of editors’ contributions to the Wikipedia project. When a candidate submits an RfA, one of the community members provides summary statistics such as those seen in Table 1. Those summary statistics can be used by the community to assess an RfA and make a decision on promoting a user in the community.

| Scope | Edit counts |
|---|---|
| Mainspace | 3933 |
| Talk | 107 |
| User talk | 3203 |
| User | 631 |
| Wikipedia talk | 4 |
| Wikipedia | 539 |
| Average edits per article | 1.81 |
| Total Edits | 8417 |
| 2014/4 | 1 |
| 2014/7 | 0 |
| 2014/8 | 123 |
| 2014/9 | 65 |
| 2014/10 | 3416 |
| 2014/11 | 4812 |
| Earliest edit | 17:38, 24 April 2014 |
| Number of unique articles | 4686 |

Construct Operationalization

In this study, we operationalize content contribution in terms of the number of total edits one makes on the three major subject namespaces including Main, User, and Wikipedia. We also operationalize social contribution in terms of the number of times one engages in discussions on Main Talk, User Talk, or Wikipedia Talk pages. Moreover, total contribution is operationalized as the total edit counts on the aforementioned six namespaces (Main, User, and Wikipedia, Main Talk, User Talk, and Wikipedia Talk). All those statistics are available on editors’ edit history pages (similar to Table 1). We also operationalize clarification of contribution in terms of edit summary usage, which measures on how many occasions the candidate has provided explanations on his or her edits on Wikipedia articles. Finally, we use the officially documented decisions on the RfAs, which are all publicly visible on the record of all past RfAs, to determine whether an RfA was a success or a failure.
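As an illustration of these definitions, the following sketch derives the three contribution measures from the namespace counts shown in Table 1. The dictionary keys and the function name are illustrative assumptions, and the Mainspace and Talk rows of Table 1 are taken to correspond to the Main and Main Talk namespaces.

```python
# Namespace edit counts copied from Table 1.
TABLE_1 = {
    "Main": 3933,
    "Main talk": 107,
    "User": 631,
    "User talk": 3203,
    "Wikipedia": 539,
    "Wikipedia talk": 4,
}

SUBJECT_NAMESPACES = ("Main", "User", "Wikipedia")
TALK_NAMESPACES = ("Main talk", "User talk", "Wikipedia talk")


def contribution_measures(counts):
    """Return content, social, and total contribution as edit counts."""
    content = sum(counts[ns] for ns in SUBJECT_NAMESPACES)
    social = sum(counts[ns] for ns in TALK_NAMESPACES)
    return {"content": content, "social": social, "total": content + social}


print(contribution_measures(TABLE_1))
# {'content': 5103, 'social': 3314, 'total': 8417}
```

The total of 8417 agrees with the Total Edits row of Table 1.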

Data Collection

In order to address the hypotheses in this study, we directly collected data from Wikipedia.com on successful and unsuccessful RfAs over a period of two years (N = 954), along with summary statistics on the editors who were being voted on. We eliminated 200 abnormal attempts that had no votes counted or no edits outside of the RfA process, which left a final sample of N = 754. Edit counts and the number of support and oppose votes for our final sample were collected through a custom-written edit counter program, developed in Java. Due to the nature of Wikipedia, past information is available for analysis with detailed date and time stamps indicating when the action occurred (Priedhorsky et al., 2007). A random selection of the RfAs was manually inspected to verify that the data was being properly collected, and all manually gathered values matched those collected automatically.
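The exclusion rule can be sketched as a simple filter. This is not the Java program used in the study; the record layout, field names, and sample records below are hypothetical, and "no votes counted" is interpreted here as zero Support plus Oppose votes.

```python
from dataclasses import dataclass


@dataclass
class RfaRecord:
    # Hypothetical fields; the study's actual data layout is not described.
    candidate: str
    support_votes: int
    oppose_votes: int
    edits_outside_rfa: int


def is_abnormal(record: RfaRecord) -> bool:
    """Flag attempts with no votes counted or no edits outside the RfA process."""
    no_votes = record.support_votes + record.oppose_votes == 0
    no_edits = record.edits_outside_rfa == 0
    return no_votes or no_edits


def clean_sample(records):
    """Keep only the RfA attempts that are not flagged as abnormal."""
    return [r for r in records if not is_abnormal(r)]


# Made-up records for illustration only.
sample = [
    RfaRecord("A", 40, 10, 5200),
    RfaRecord("B", 0, 0, 300),   # no votes counted
    RfaRecord("C", 3, 12, 0),    # no edits outside the RfA process
]
print([r.candidate for r in clean_sample(sample)])  # ['A']
```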

Analysis and Results

Each RfA has a dichotomous outcome, promotion or no promotion (Desai et al., 2014). Hence, binomial logistic regression was chosen for our analysis (Hosmer Jr and Lemeshow, 2004). The regression model has ten potential predictors. The first three variables are logarithm of days since registration (aka, tenure), logarithm of total edit counts since registration, and number of attempts (e.g., an editor that failed their first attempt may try again at a later date). Due to the skewness and wide distribution of days and total edits, we chose to take the log transformation of these measures to make their values more linear (Lattin, Carroll, and Green, 2003; Neter, Kutner, Nachtsheim, and Wasserman, 1996). The model also initially included six variables representing edit count within each of the six namespaces, divided by total edit count. These variables show what percentage of one’s contribution is devoted to each of the six namespaces. In addition, the model included edit summary usage, which measures the information users provide about each contribution they make. As discussed earlier, edit summary usage is a measure of clarification of contribution. Among those ten potential predictors, log(tenure) and number of attempts are considered control variables since they are not directly related to the proposed hypotheses in this study.
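The ten candidate predictors described above can be assembled as in the sketch below. The base of the logarithm is not stated in the text; natural logarithms are assumed here, and a different base would only rescale the corresponding coefficients. The function name, key names, and the tenure and edit summary values in the example are hypothetical, while the namespace counts are those of Table 1.

```python
import math

NAMESPACES = ("Main", "Main talk", "User", "User talk", "Wikipedia", "Wikipedia talk")


def build_predictors(tenure_days, namespace_counts, num_attempts, edit_summary_usage):
    """Assemble the ten candidate predictors for one RfA attempt."""
    total_edits = sum(namespace_counts[ns] for ns in NAMESPACES)
    if tenure_days <= 0 or total_edits <= 0:
        raise ValueError("log transform needs positive tenure and edit count")

    predictors = {
        "log_tenure": math.log(tenure_days),        # assumed natural log
        "log_total_edits": math.log(total_edits),   # assumed natural log
        "num_attempts": num_attempts,
        "edit_summary_usage": edit_summary_usage,
    }
    # Share of the candidate's edits that falls in each of the six namespaces.
    for ns in NAMESPACES:
        key = "share_" + ns.lower().replace(" ", "_")
        predictors[key] = namespace_counts[ns] / total_edits
    return predictors


# Namespace counts from Table 1; the other three inputs are made up.
counts = {"Main": 3933, "Main talk": 107, "User": 631,
          "User talk": 3203, "Wikipedia": 539, "Wikipedia talk": 4}
features = build_predictors(tenure_days=220, namespace_counts=counts,
                            num_attempts=1, edit_summary_usage=0.95)
for name, value in features.items():
    print(f"{name}: {value:.4f}")
```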

| Construct/Control Variable | Variable Name in the Regression Model | B | S.E. | Wald | Df | Sig. | 95% C.I. for EXP(B): Lower | 95% C.I. for EXP(B): Upper |
|---|---|---|---|---|---|---|---|---|
| Control Variable | TENURE | 1.470 | .352 | 17.430 | 1 | .000 | 2.181 | 8.670 |
| Control Variable | NUM_ATTEMPTS | -.681 | .137 | 24.654 | 1 | .000 | .387 | .662 |
| Total Contribution | TOTAL_EDITS | 1.170 | .247 | 22.474 | 1 | .000 | 1.987 | 5.229 |
| Clarification of Contribution | EDIT_SUM_USAGE | 8.262 | 1.384 | 35.628 | 1 | .000 | 257.080 | 58427.962 |
| Social Contribution | USER_TALK | -2.315 | .777 | 8.879 | 1 | .003 | .022 | .453 |
| Social Contribution | WIKIPEDIA_TALK | 9.926 | 3.813 | 6.777 | 1 | .009 | 11.624 | 3.600E7 |
| | CONSTANT | -15.610 | 1.702 | 84.154 | 1 | .000 | | |

Cox & Snell R-Square = 0.31
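
The confidence limits and Wald statistics in the table follow from B and S.E. through standard logistic regression formulas: the odds ratio is exp(B), the 95% limits are exp(B ± 1.96 × S.E.), and the Wald statistic is (B / S.E.)². The short sketch below recomputes them for two rows as a check. The function name is our own, and small discrepancies from the published values are expected because the table reports B and S.E. rounded to three decimals.

```python
import math


def odds_ratio_summary(b, se, z=1.96):
    """Odds ratio, 95% confidence limits for EXP(B), and Wald statistic."""
    return {
        "odds_ratio": math.exp(b),
        "ci_lower": math.exp(b - z * se),
        "ci_upper": math.exp(b + z * se),
        "wald": (b / se) ** 2,
    }


# B and S.E. copied from the TENURE and NUM_ATTEMPTS rows of the table.
tenure = odds_ratio_summary(1.470, 0.352)
attempts = odds_ratio_summary(-0.681, 0.137)

for label, row in (("TENURE", tenure), ("NUM_ATTEMPTS", attempts)):
    print(label, {k: round(v, 3) for k, v in row.items()})
```

For TENURE this gives limits of roughly 2.18 and 8.67, in line with the table. An odds ratio below 1, as for NUM_ATTEMPTS, indicates that each additional attempt lowers the odds of promotion.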

To perform the regression analysis, we used a forward selection approach. With that approach, the statistical package (IBM SPSS) determines which predictor variables have a significant relationship with the outcome and hence should be included in the final model. As a result of this process, six predictor variables showed a significant impact on the outcome and were included in the model (Table 2). Those six variables are total edits, edit summary usage, contribution to User Talk, contribution to Wikipedia Talk, days since registration, and number of attempts.
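
To illustrate how the final model turns a candidate's profile into a predicted probability of promotion, the sketch below plugs the Table 2 coefficients into the logistic function p = 1 / (1 + exp(-(constant + Σ Bᵢxᵢ))). It rests on assumptions the paper does not state: that TENURE and TOTAL_EDITS are base-10 logarithms, that the namespace and edit summary variables are proportions between 0 and 1, and that 0.5 is the classification cutoff. The candidate profile is invented.

```python
import math

# Coefficients (B) and constant copied from Table 2.
COEFFICIENTS = {
    "TENURE": 1.470,
    "NUM_ATTEMPTS": -0.681,
    "TOTAL_EDITS": 1.170,
    "EDIT_SUM_USAGE": 8.262,
    "USER_TALK": -2.315,
    "WIKIPEDIA_TALK": 9.926,
}
CONSTANT = -15.610


def predicted_probability(predictors):
    """Predicted probability of promotion under the logistic model."""
    logit = CONSTANT + sum(COEFFICIENTS[name] * predictors[name]
                           for name in COEFFICIENTS)
    return 1.0 / (1.0 + math.exp(-logit))


def predicted_outcome(predictors, cutoff=0.5):
    """Classify as 'Pass' when the probability reaches the (assumed) cutoff."""
    return "Pass" if predicted_probability(predictors) >= cutoff else "Fail"


# Hypothetical candidate: 1,000 days of tenure, 10,000 edits, first attempt.
candidate = {
    "TENURE": math.log10(1000),       # assumed base-10 logarithm
    "TOTAL_EDITS": math.log10(10000), # assumed base-10 logarithm
    "NUM_ATTEMPTS": 1,
    "EDIT_SUM_USAGE": 0.95,           # assumed to be a proportion
    "USER_TALK": 0.10,
    "WIKIPEDIA_TALK": 0.02,
}
print(round(predicted_probability(candidate), 3), predicted_outcome(candidate))

# The same profile on a third attempt, to show the effect of NUM_ATTEMPTS.
third_attempt = dict(candidate, NUM_ATTEMPTS=3)
print(round(predicted_probability(third_attempt), 3), predicted_outcome(third_attempt))
```

Because the scaling of the inputs is assumed, the printed probabilities are illustrative only. The direction of each effect, however, follows directly from the signs of the coefficients.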

We further analyzed the predictive power of the final model using a classification table that reports the percentage of cases classified correctly. In this procedure, we applied the empirically collected data to the model to determine whether the model could predict the decision to promote or not to promote. The outcome predicted by the model was then compared to the observed outcome to determine how accurately the model predicted the true outcome. Overall, the outcomes of 76.6% of the cases were predicted correctly by the regression model (Table 3).

| Observed RfA Outcome | Predicted: Fail | Predicted: Pass | Percentage Correct |
|---|---|---|---|
| Fail | 444 | 79 | 84.9 |
| Pass | 97 | 133 | 57.8 |
| Overall Percentage | | | 76.6 |
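
The percentages in the classification table can be recomputed from its four cell counts. The sketch below does so, with function and key names of our own choosing.

```python
def classification_summary(fail_as_fail, fail_as_pass, pass_as_fail, pass_as_pass):
    """Percentage of correct predictions per observed outcome and overall."""
    observed_fail = fail_as_fail + fail_as_pass
    observed_pass = pass_as_fail + pass_as_pass
    total = observed_fail + observed_pass
    return {
        "fail_correct_pct": 100.0 * fail_as_fail / observed_fail,
        "pass_correct_pct": 100.0 * pass_as_pass / observed_pass,
        "overall_correct_pct": 100.0 * (fail_as_fail + pass_as_pass) / total,
        "total_cases": total,
    }


# Cell counts copied from the classification table.
summary = classification_summary(444, 79, 97, 133)
for name, value in summary.items():
    print(name, round(value, 1))
```

The model identifies unsuccessful RfAs (84.9% correct) far more reliably than successful ones (57.8% correct), which the overall figure of 76.6% alone does not reveal.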

Hypothesis Testing

According to the results, total edits has a significant relationship with RfA success (p-value < 0.001), indicating that H1 was supported. None of the subject namespaces (Main, User, and Wikipedia) showed a significant relationship with RfA success. Therefore, H2 was not supported. The results for the relationship between social contribution and RfA success, however, were mixed. Among the Main Talk, User Talk, and Wikipedia Talk namespaces, only User Talk and Wikipedia Talk showed significant relationships with RfA success (p-value < 0.01), whereas the relationship between Main Talk and RfA success was not supported. The relationship between User Talk and RfA success, however, ran in the opposite direction from what we expected. In other words, the results showed that those who contributed more to User Talk pages were less likely to be successful in their RfA. Thus, H3 was partially supported. According to the results, there was also a significant relationship between edit summary usage and RfA success (p-value < 0.001). Thus, H4 was supported.

Regarding the control variables, both tenure (number of days since registration) and number of attempts showed significant relationships with RfA success. The positive relationship between tenure and RfA success implies the overall community's trust in senior members. The negative relationship between the number of attempts and RfA success suggests that those who tried to become administrators several times were less likely to be supported by the community.

In summary, two of the four hypotheses in our study were supported (H1 and H4), while one hypothesis was partially supported (H3) and the other hypothesis was not supported (H2).

Discussion

The results of this study demonstrate that the readily available information on users’ contributions to the Wikipedia project can be used to predict promotion decisions satisfactorily. This information includes total contribution to the community as well as specific forms of contribution, such as those related to Wikipedia Talk pages. Moreover, the results revealed that more senior users and those who had made fewer attempts at becoming administrators were more likely to be successful in their RfA process. These findings are consistent with the results of prior studies (e.g., Burke and Kraut, 2008a, 2008b). Additionally, the negative coefficient for the percentage of contributions to the User Talk namespace (B = -2.315) may reflect the community’s awareness of wasted resources, as User Talk pages are supposed to be used for coordination among users, not for general socialization. It could also be that users who are frequently in trouble, as indicated by the number of times they have been warned by other editors or had their accounts blocked, have a higher number of edits to User Talk pages because they are required to explain their actions. This increased number of edits may be related to the community's Assume Good Faith (AGF) policy, which requires users to be warned multiple times before adverse actions are taken against them. In this situation, problematic users spend time and edits responding to these warnings instead of contributing positively to the project. Accordingly, users who ultimately want to become candidates for adminship should be aware that excessive posting on Talk pages, in particular User Talk pages, may work against them in the RfA process, and they should therefore refrain from it.

Furthermore, our results imply that edit summary usage is valued because it shows the community that the user is not lazy (i.e., entering edit summaries requires effort) and that the user supports transparency.

A plausible explanation for the negative relationship between the number of attempts and successful promotion is that a candidate's failure in several attempts may give voters the impression that the candidate was unable to convince voters in previous rounds of the RfA process. Thus, voters may conclude that the candidate is still not good enough to become an administrator. An implication of this result for the Wikipedia community is that registered users who want to nominate themselves for adminship should not see their first attempt as a free trial because, if they fail, they may have less chance of promotion in subsequent attempts.

The results of this study contribute further to research and practice. From a theoretical standpoint, our results extend the results of prior studies, such as Burke and Kraut’s (2008a) findings, on what factors may determine the likelihood of success in the RfA process. More specifically, our results highlight the role of one’s contribution behaviors in one’s RfA success. From a practical perspective, our findings may be used by managers and providers of Wikipedia and other open source projects. For example, our results indicated that users’ contributions to User Talk and Wikipedia Talk had negative and positive impacts, respectively, on the community’s decisions on RfAs. Thus, community providers can make users aware of this and, accordingly, encourage them to contribute more to Wikipedia Talk pages and less to User Talk pages to increase the likelihood of success in their requests for promotion within the community. Moreover, our results showed that clarification of contribution may significantly impact users’ success in RfAs. Therefore, users should be informed of this and be encouraged by the community managers to provide sufficient and clear summaries of the edits they make to Wikipedia pages.

The results of the study are also applicable to other contexts such as open source software (OSS) development. OSS development is a process through which software with publicly available code (e.g., Linux, the Firefox web browser, and Apache OpenOffice) is typically developed by a group of volunteer developers (Mockus, Fielding, and Herbsleb, 2002; Stewart and Gosain, 2006). Those volunteers contribute to the project by writing code, fixing bugs, and adding new features to the software. In the OSS development process, once developers collectively make significant contributions to the project, a new version of the software is released. However, determining which changes to the software should be included in the next release and which should be reverted, as well as identifying developers who are not making legitimate changes to the software, are among the responsibilities of project administrators (Von Hippel and Von Krogh, 2003). Those administrators may also be elected through a peer-review process similar to the RfA process within Wikipedia. Therefore, the similarities between Wikipedia and OSS projects in their contribution processes and social mechanisms, such as election by the community, make the findings of this study applicable and useful in the context of OSS development.

Limitations of this study include its reliance on a narrow view of readily available information as opposed to a more in-depth analysis of edit history. In fact, contributions to each of the namespaces could be further broken down by the type of activity performed for a better analysis of the factors leading to a successful promotion. Furthermore, this study may not be used for inferences of causality, as the measures are not experimental. It is possible and even likely that successful promotion is due not to the number of edits made but to the characteristics of users that may be evident in these measures.

Future research may perform a factor analysis to determine what underlying factors are important to the community and correlate those factors with objective measures. Moreover, future studies may explore the relation between lower-level summary factors and RfA success. Examples of these measures may include the number of featured articles, good articles, or other potential measures of quality. These measures, while not as easy to gather, may provide valuable insight into the quality and content of the contributions an editor has made. We hypothesize that these measures may also have a relationship, but are unsure if voters in the RfA process will go to the effort to utilize this more-difficult-to-acquire information, or instead, prefer the high-level summary data.

Conclusion

Wikipedia, as the largest open-source online encyclopedia, encompasses a community of individuals who contribute to the project by creating articles, editing articles created by others, and engaging in discussions related to the articles. Like other online and offline communities, the Wikipedia community revolves around various social processes. RfA is one of those social processes through which users with special privileges are selected. In this study, we examined several factors, mostly contributions-related, that can predict a candidate’s success in the RfA process.

Our results demonstrated that total contribution can significantly predict one’s success in the RfA process. We suspect that this relationship is due to the fact that community members are more likely to trust users who have a sizeable demonstrated history of contributing to the project without having any adverse actions taken against them. While it is possible for an editor to review every edit in a candidate’s history, this process can be extremely time-consuming, and it may be more efficient to view and make decisions based on the high-level summary data we explored in this study, such as the total number of contributions.

A potential problem with using high-level summary data to make promotion decisions is that it fails to take into account the quality and content of the contributions. An editor may make a large number of trivial edits, and without thorough review of their contribution, may be granted privileged access without substantially contributing to the community’s core goals and objectives. Similarly, users who have a smaller number of high quality contributions may also be passed up for administrative position, potentially leading to a lower number of qualified administrators, something notable considering the small percentage of community members who are promoted to this position. Thus, the fact that quantity of contributions is important in the RfA process may encourage users who want to become administrator candidates to focus on the number of edits rather than their actual role, which is quality control. In the long run, this may negatively affect the overall quality of articles on Wikipedia.

Because we expected the distribution of contributions across the various namespaces to shed some light on the content and quality of an editor’s contribution to the project, we were particularly surprised by the lack of support for the role of content contribution in RfA success. We suspect that this may be because there are many different paths to becoming an administrator: different editors may specialize in contributions to specific areas of the project, and all of the areas represented are important to the project’s success.

Moreover, our results revealed that those who contributed more frequently to the User Talk pages were less likely to be successful in the promotion process. We believe that this relationship is due to the fact that users who spend a large amount of their effort in areas of personal communication may be wasting precious community resources while failing to contribute to the core goals of the project, building Wikipedia, The Free Encyclopedia.

This study is important because it examined how privileges are granted to users in open source communities. Additionally, the findings of this study confirmed and extended prior research in the area of Wikipedia by exploring the data over a larger time period. Specifically, these results confirmed and extended Burke and Kraut’s (2008a, 2008b) findings on how user contributions and edit histories could predict users’ promotion success in the RfA process.

References

- Aaltonen, A. and Lanzara, G. F. (2015) Building Governance Capability in Online Social Production: Insights from Wikipedia. Organization Studies, 16, 12, 1649-1673.

- Administrators/Tools. (2015) https://en.wikipedia.org/wiki/Wikipedia:Administrators/Tools. Retrieved December 2015.

- Algan, Y., Benkler, Y., Morell, M. F., and Hergueux, J. (2013) Cooperation in a peer production economy experimental evidence from wikipedia. Paper presented at the Workshop on Information Systems and Economics, 1-31.

- Benkler, Y. (2002) Coase's Penguin, or, Linux and "The Nature of the Firm". Yale Law Journal, 112, 3, 369-446.

- Burke, M., and Kraut, R. (2008a) Mopping up: modeling wikipedia promotion decisions. Proceedings of the 2008 ACM conference on Computer Supported Cooperative Work, November 8-12, San Diego, CA, USA, 27-36.

- Burke, M., and Kraut, R. (2008b) Taking up the mop: identifying future wikipedia administrators. Proceedings of the CHI'08 extended abstracts on Human Factors in Computing Systems, April 5-10, Florence, Italy, 3441-3446.

- Cabunducan, G., Castillo, R., and Lee, J. B. (2011) Voting behavior analysis in the election of Wikipedia admins. Proceedings of the 2011 International Conference on Advances in Social Networks Analysis and Mining (ASONAM), 25-27 July, Kaohsiung City, Taiwan, 545-547.

- Collier, B., Burke, M., Kittur, N., and Kraut, R. (2008) Retrospective versus prospective evidence for promotion: The case of Wikipedia. Proceedings of the 2008 Annual Meeting of the Academy of Management, August 8-13, Anaheim, CA, USA.

- Derthick, K., Tsao, P., Kriplean, T., Borning, A., Zachry, M., and McDonald, D. W. (2011) Collaborative sensemaking during admin permission granting in Wikipedia, in Ant Ozok and Panayiotis Zaphiris (Eds.) Online Communities and Social Computing, 100-109, Springer.

- Desai, N., Liu, R., and Mullings, C. (2014) Result Prediction of Wikipedia Administrator Elections based on Network Features. Retrieved October 2015 from http://cs229.stanford.edu/proj2014/Nikhil%20Desai,%20Raymond%20Liu,%20Catherine%20Mullings,%20Result%20Prediction%20of%20Wikipedia%20Administrator%20Elections%20based%20ondNetwork%20Features.pdf

- Ehmann, K., Large, A., and Beheshti, J. (2008) Collaboration in context: Comparing article evolution among subject disciplines in Wikipedia. First Monday, 13, 10.

- Fallis, D. (2008) Toward an epistemology of Wikipedia. Journal of the American Society for Information Science and Technology, 59, 10, 1662-1674.

- Hara, N., Shachaf, P., and Stoerger, S. (2009) Online communities of practice typology revisited. Journal of Information Science, 35, 6, 740-757.

- Giles, J. (2005). Internet encyclopaedias go head to head. Nature, 438, 900-901.

- Hergueux, J., Algan, Y., Benkler, Y., and Morell, M. F. (2014). Cooperation in Peer Production Economy: Experimental Evidence from Wikipedia. Paper presented at the Lyon Meeting, March 18.

- Hosmer Jr, D. W., and Lemeshow, S. (2004) Applied logistic regression: John Wiley & Sons.

- Jankowski-Lorek, M., Ostrowski, L., Turek, P., and Wierzbicki, A. (2013) Modeling Wikipedia admin elections using multidimensional behavioral social networks. Social Network Analysis and Mining, 3, 4, 787-801.

- Jøsang, A., Ismail, R., and Boyd, C. (2007) A survey of trust and reputation systems for online service provision. Decision Support Systems, 43, 2, 618-644.

- Kaplan, A., and Haenlein, M. (2014) Collaborative projects (social media application): About Wikipedia, the free encyclopedia. Business Horizons, 57, 5, 617-626.

- Kim, J. Y., Gudewicz, T. M., Dighe, A. S., and Gilbertson, J. R. (2010) The pathology informatics curriculum wiki: Harnessing the power of user-generated content. Journal of Pathology Informatics, 1, 1, 10.

- Kriplean, T., Beschastnikh, I., and McDonald, D. W. (2008) Articulations of wikiwork: uncovering valued work in wikipedia through barnstars. Paper presented at the 2008 ACM conference on Computer Supported Cooperative Work, November 8-12, San Diego, CA, USA, 47-56.

- Lattin, J. M., Carroll, J. D., and Green, P. E. (2003) Analyzing Multivariate Data: Thomson Brooks/Cole Pacific Grove, CA.

- Leskovec, J., Huttenlocher, D. P., and Kleinberg, J. M. (2010) Governance in social media: A case study of the wikipedia promotion process. Proceedings of the Fourth International AAAI Conference on Weblogs and Social Media, May 23-26, Washington, DC, USA.

- Lewandowski, D., and Spree, U. (2011) Ranking of Wikipedia articles in search engines revisited: Fair ranking for reasonable quality? Journal of the American Society for Information Science and Technology, 62, 1, 117-132.

- List of Wikipedians by number of edits. (2011) https://en.wikipedia.org/wiki/Wikipedia:List_of_Wikipedians_by_number_of_edits, Retrieved October 2015.

- Maloney-Krichmar, D., and Preece, J. (2005) A multilevel analysis of sociability, usability, and community dynamics in an online health community. ACM Transactions on Computer-Human Interaction (TOCHI), 12, 2, 201-232.

- Mesgari, M., Okoli, C., Mehdi, M., Nielsen, F. Å., and Lanamäki, A. (2015) The sum of all human knowledge: A systematic review of scholarly research on the content of Wikipedia. Journal of the Association for Information Science and Technology, 66, 2, 219-245.

- Namespaces. (2015). https://en.wikipedia.org/wiki/Wikipedia:Namespace, Retrieved October 2015.

- Mockus, A., Fielding, R. T., and Herbsleb, J. D. (2002) Two case studies of open source software development: Apache and Mozilla. ACM Transactions on Software Engineering and Methodology (TOSEM), 11, 3, 309-346.

- Nazaryan, A. (2012). Britannica No More: Wikipedia Wins. http://www.nydailynews.com/blogs/pageviews/britannica-no-wikipedia-wins-blog-entry 1.1637871, Retrieved October 2015.

- Neter, J., Kutner, M. H., Nachtsheim, C. J., and Wasserman, W. (1996). Applied Linear Statistical Models, Irwin Chicago.

- Panciera, K., Halfaker, A., and Terveen, L. (2009) Wikipedians are born, not made: a study of power editors on Wikipedia. Proceedings of the ACM Conference on Supporting Group Work, May 10-13, Sanibel Island, FL, USA, 51-60.

- Preece, J. (2000) Online communities: designing usability, supporting sociability. Industrial Management & Data Systems, 100, 9, 459-460.

- Priedhorsky, R., Chen, J., Lam, S. T. K., Panciera, K., Terveen, L., and Riedl, J. (2007) Creating, destroying, and restoring value in Wikipedia. Proceedings of the ACM Conference on Supporting Group Work, November 4-7, Sanibel Island, FL, USA, 259-268.

- Purdy, J. P. (2009) When the tenets of composition go public: A study of writing in Wikipedia. College Composition and Communication, 61, 2, W351.

- Rahman, M. M. (2009) An analysis of Wikipedia. Journal of Information Technology Theory and Application (JITTA), 9, 3, 81-98.

- Rullani, F., and Haefliger, S. (2013) The periphery on stage: The intra-organizational dynamics in online communities of creation. Research Policy, 42, 4, 941-953.

- Sabater, J., and Sierra, C. (2005) Review on computational trust and reputation models. Artificial Intelligence Review, 24, 1, 33-60.

- Size of Wikipedia. (2015). https://en.wikipedia.org/wiki/Wikipedia:Size_of_Wikipedia, Retrieved October 2015.

- Stewart, K. J., and Gosain, S. (2006) The impact of ideology on effectiveness in open source software development teams. MIS Quarterly, 30, 2, 291-314.

- Turek, P., Spychała, J., Wierzbicki, A., and Gackowski, P. (2011) Social mechanism of granting trust basing on polish Wikipedia requests for adminship, in Anwitaman Datta, Stuart Shulman, Baihua Zheng, Shou-De Lin, Aixin Sun, Ee-Peng Lim (Eds.), Proceedings of the Third International Conference on Social Informatics, October 6-8, Singapore, 212-225, Springer Berlin Heidelberg.

- Viegas, F. B., Wattenberg, M., Kriss, J., and Van Ham, F. (2007) Talk before you type: Coordination in Wikipedia, Proceedings of the 40th Annual Hawaii International Conference on System Sciences (HICSS), January 3-6, Waikoloa, HI, USA, 78.

- Von Hippel, E., and Von Krogh, G. (2003) Open source software and the "private-collective" innovation model: Issues for organization science. Organization Science, 14, 2, 209-223.

- Welser, H. T., Cosley, D., Kossinets, G., Lin, A., Dokshin, F., Gay, G., and Smith, M. (2011) Finding social roles in Wikipedia, Proceedings of the 2011 iConference, February 8-11, Seattle, WA, USA, 122-129.

- West, K., and Williamson, J. (2009) Wikipedia: Friend or foe? Reference Services Review, 37, 3, 260-271.

- Zhao, X., and Bishop, M. (2011) Understanding and supporting online communities of practice: lessons learned from wikipedia. Educational Technology Research and Development, 59, 5, 711-735.