Peer Review Personas


This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 United States License. Please contact mpub-help@umich.edu to use this work in a way not covered by the license.


Abstract

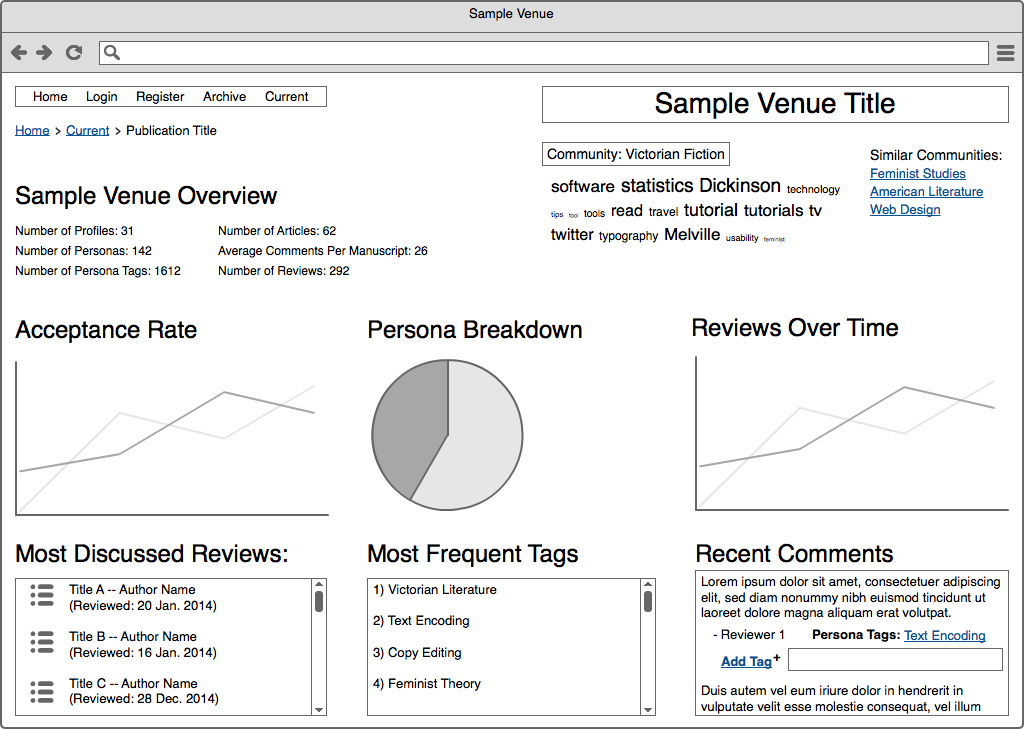

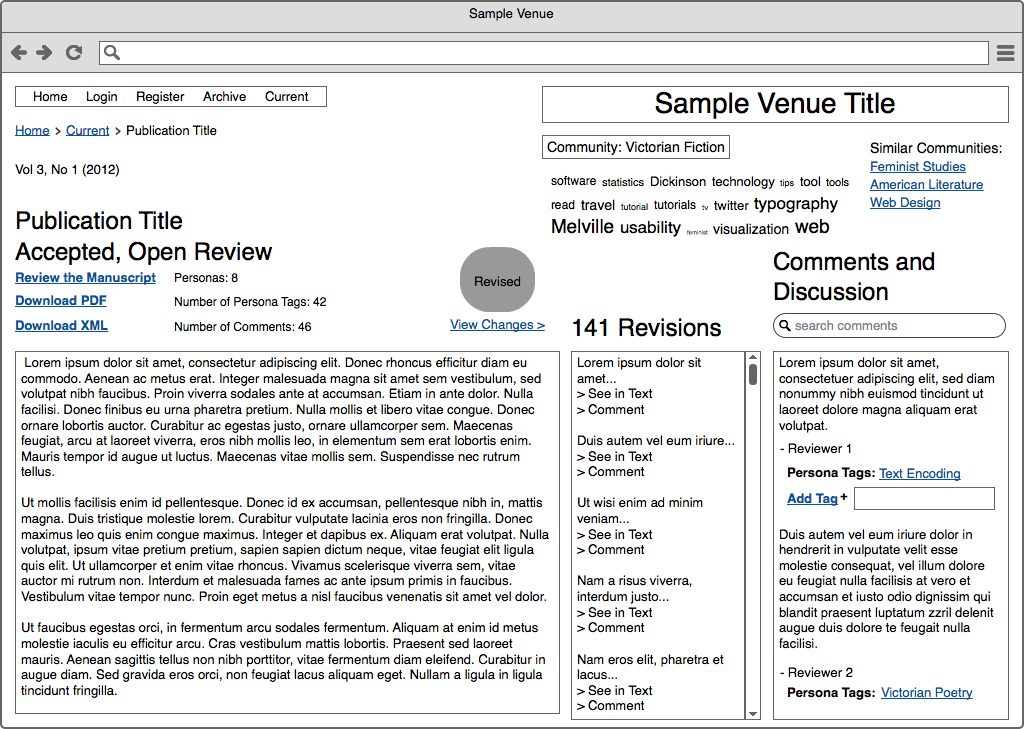

Arguing for the relevance of speculative prototyping to the development of any technology, this essay presents a “Peer Review Personas” prototype intended primarily for authoring and publication platforms. It walks audiences through various aspects of the prototype while also conjecturing about its cultural and social implications. Rather than situating digital scholarly communication and digital technologies in opposition to legacy procedures for review and publication, the prototype attempts to meaningfully integrate those procedures into networked environments, affording practitioners a range of choices and applications. The essay concludes with a series of considerations for further developing the prototype.

Prototyping a Plugin for Peer Review

Web–based, networked knowledge production is an increasingly relevant scholarly practice, sparking an array of responses, from the understandably enthusiastic to the justifiably skeptical. From one popular perspective, a networking mechanism such as Twitter fosters social relations, academic exchange, and knowledge mobilization. For scholarly organizations, it frequently functions as “a conference platform” that allows a “community to expand communication and participation in events amongst its members.”[1] It is also a “backchannel and professional grapevine for hundreds of people who...use it daily to share information, establish contacts, ask and answer questions, bullshit, banter, rant, vent, kid, and carry on.”[2] Yet, from other perspectives, Twitter is difficult to follow, reference, or archive: “conversations...are hopelessly dispersed and sometimes even impossible to reconstitute only a few months after they have taken place.”[3] It can also enable exclusion, as conversations within tightly knit communities may appear intimidating or closed to outside observers.[4] What’s more, it can be a vehicle for norming, harassment, prejudice, and bullying.[5] And it risks reducing the complexity of social relations to the vulgarity of social capital—to the retweets, favorites, followers, and Klout of attention economics.[6] With these tensions in mind, we are particularly interested in the recursions between networked knowledge production and peer review. 
After all, amidst social media trends, scholars now routinely call for the reform of scholarly communication, often with an interest in translating existing mechanisms (e.g., Twitter, Facebook, and Disqus) into dynamic modes of scholarly review, curation, and community building.[7] Responding to such calls, we prototyped a plugin that could enrich the affordances of authoring and publication platforms (e.g., Open Journal Systems, WordPress, and Scalar), expand peer review, and further contextualize the practices of networked knowledge making. As we argue in the following paragraphs, the prototype—which we call “Peer Review Personas”[8]—enacts strategies to transform individuated feedback into peer–to–peer networks for scholarly communication. Inspired especially by CommentPress, Digress.it, Hypothes.is, and Commons in a Box, it also highlights the various, often divergent roles practitioners play on a daily basis, without the assumption that peer review is performed uniformly across situations and settings.

But the prototype is not without its problems. In fact, we are rather ambivalent about it, largely because the affordances of technologies are always culturally embedded and thus difficult to generalize. As such, our aim in this essay is to avoid evangelization and instrumentalist advocacy—to refrain from promoting our prototype and its implementation. Building upon the design work of Kari Kraus,[9] Jonathan Lukens, Carl DiSalvo,[10] Alan Galey, and Stan Ruecker,[11] we propose “Peer Review Personas” as an opportunity to stage, better understand, and even speculate about the potential relations, mechanics, iterations, consequences, and values of networked peer review prior to its engineering.[12] While skeptics may contend that such speculation is merely conducive to vaporware or thought pieces divorced from the material particulars of programming and infrastructure, we argue that thorough, iterative prototyping prompts deliberation and self–reflexivity during the development process. True, it may brush against the grain of efficiency; nevertheless, it ultimately lends itself to the robust yet flexible frameworks that persuasive scholarly communication demands.

Importantly, the prototype is predicated on the belief that web–based, digital scholarship does not—somehow inherently—relate antagonistically with print culture or legacy procedures for review. As an argument, it acknowledges the value of established practices while adding layers of engagement to them. As a plugin, it could work within, rather than against, existing scholarly infrastructures, meaning it could be integrated into a platform without drastically affecting core themes or base code. As a protocol, it could be intertwined with the procedures of blind peer review without abandoning practitioners’ longstanding investments in them. As a feature, it could be activated as a supplement to, or in lieu of, legacy processes. And as a form of mediation, it constructs the openness of peer review across a spectrum; feedback and revision are not simply visible or invisible, but expressed through degrees of access. In short, the prototype promotes negotiations over binaries in digital environments, while encouraging practitioners to archive, connect, exhibit, and share the review work they regularly conduct.

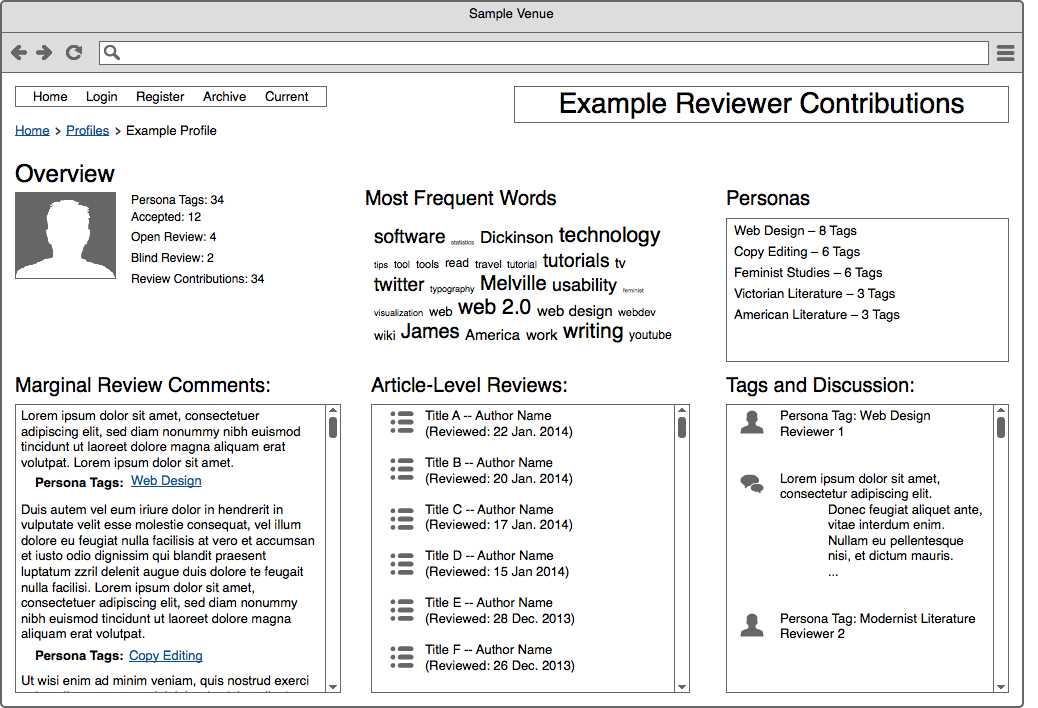

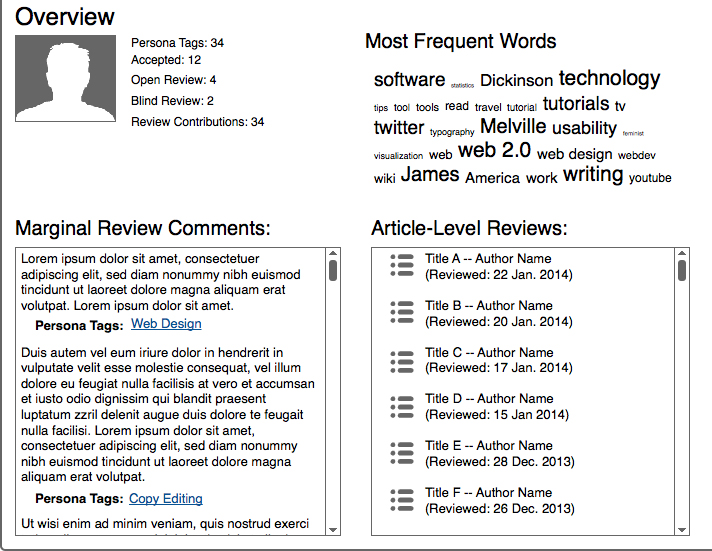

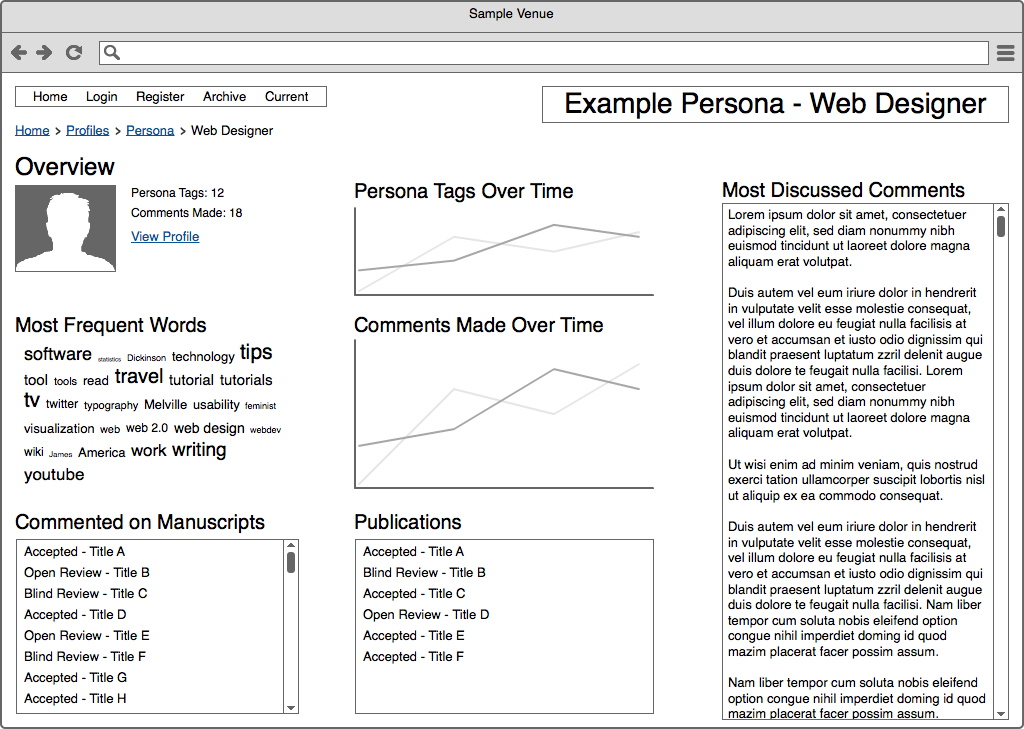

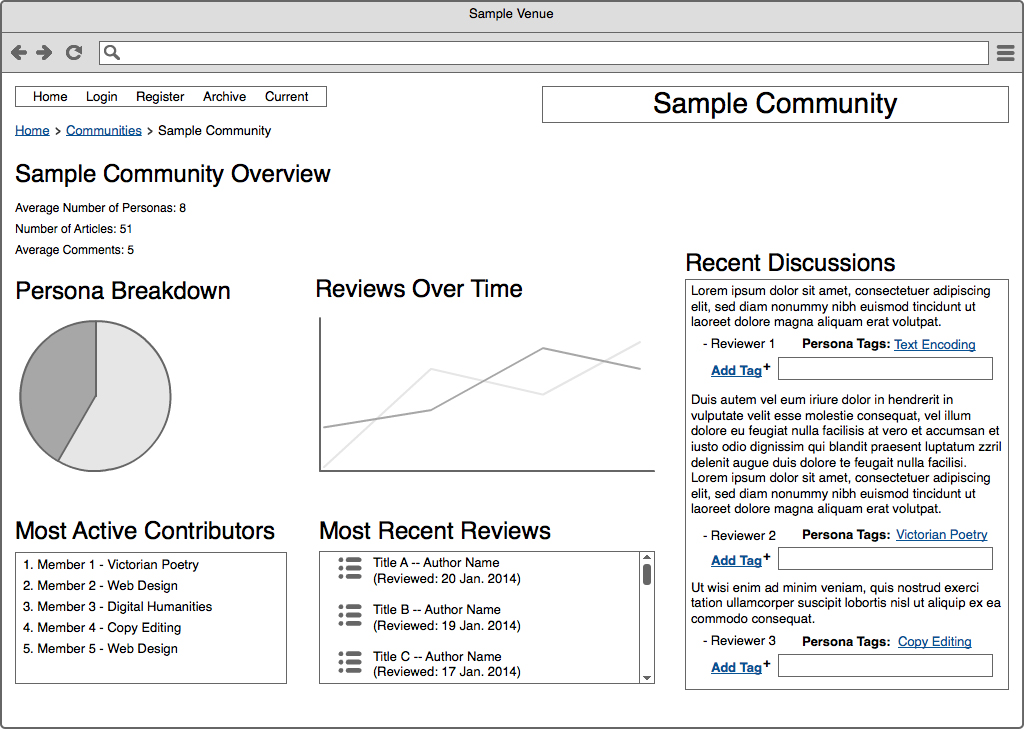

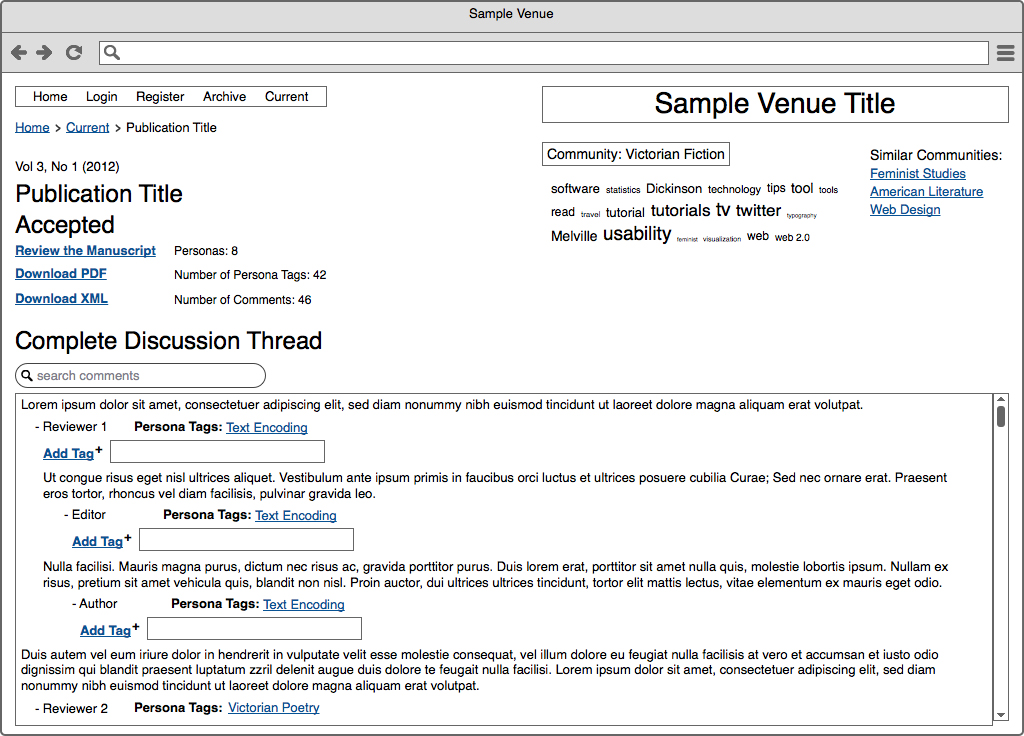

Here, the notion of a “persona”—or a specific social role performed, in this context, by a member of a scholarly community—is central to the prototype’s ethos and design. In the prototype, peer review personas help practitioners: 1) provide a range of commentary on scholarly communications, 2) document otherwise ephemeral or overlooked labor, 3) express activity with granularity over time and across venues, 4) develop reputations in their domains of expertise as well as authority in their communities of practice, 5) collaborate with a collective of practitioners, and 6) assess the tendencies and biases of peer review in the aggregate. Reviewers maintain a profile exhibiting multiple personas, which correspond with metadata, or “tags,” produced by them as well as their scholarly communities. These personas exhibit the diverse contributions made by reviewers, and—in consultation with their editorial boards and review teams—publication venues can individually decide how persona data is shared (e.g., render the data open access, restrict it to a venue’s editorial team, or distribute it only to an invited group).

Such decisions are anchored in one of the prototype’s fundamental premises: publications can—or even should—circulate with designations indicating how, or to what degree, they have been reviewed. As it complicates what “publish” and “review” mean in the first place, this premise encourages practitioners to iteratively push and pull versions of manuscripts, generating and visualizing feedback along the way. Motivated by projects such as HASTAC, PressForward, MediaCommons, Hybrid Pedagogy, and Digital Humanities Now, the prototype facilitates, rather than dissuades, publication. At its core, it is a mechanism for dialogue, not gatekeeping. And it prompts all involved to rethink the very metrics we use to measure the value of publishing and review, starting with the ways we measure how this manuscript becomes that one.
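As a way of staging the sharing decisions described above, the following Python sketch models a reviewer profile whose personas are visible only to readers that a venue's policy permits. It is a speculative illustration, not an implementation of the prototype: the names `Sharing`, `Venue`, `Profile`, `can_view_personas`, and `visible_personas` are hypothetical, and the three policy values simply mirror the examples given in the essay (open access, editorial team only, invited group only).

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Sharing(Enum):
    """Hypothetical sharing policies, mirroring the essay's three examples."""
    OPEN_ACCESS = "open access"
    EDITORIAL_TEAM = "editorial team only"
    INVITED_GROUP = "invited group only"


@dataclass
class Venue:
    name: str
    sharing: Sharing
    editorial_team: set[str] = field(default_factory=set)
    invited: set[str] = field(default_factory=set)

    def can_view_personas(self, reader: str) -> bool:
        """Decide whether a reader may see persona data held by this venue."""
        if self.sharing is Sharing.OPEN_ACCESS:
            return True
        if self.sharing is Sharing.EDITORIAL_TEAM:
            return reader in self.editorial_team
        return reader in self.invited


@dataclass
class Profile:
    reviewer: str
    # Maps a persona tag (e.g., "Copy Editing") to the venues where it was performed.
    personas: dict[str, set[str]] = field(default_factory=dict)

    def add_persona(self, tag: str, venue: Venue) -> None:
        self.personas.setdefault(tag, set()).add(venue.name)

    def visible_personas(self, reader: str, venues: dict[str, Venue]) -> list[str]:
        """Return the persona tags that at least one venue lets this reader see."""
        return sorted(
            tag
            for tag, venue_names in self.personas.items()
            if any(venues[name].can_view_personas(reader) for name in venue_names)
        )
```

In this sketch, each venue decides visibility independently, so a single profile can look different to an editor, an invited reader, and the general public. That behavior is one assumption about how "degrees of access" might be expressed; other designs are equally plausible.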

Review Designations for Scholarly Communication

In the interests of broadening scholarly communication, the prototype gives a manuscript at least one of three “review designations,” which do not assume a particular publication sequence.

“Accepted”: These manuscripts have been accepted for publication by a scholarly venue and are circulated via its publication platform. They can be rendered discoverable by Internet search engines, hidden behind a passcode–protected wall (for subscribers only), or located somewhere in between (e.g., neither discoverable by search engines nor passcode–protected). Based on criteria expressed by the publication venue, they are deemed of interest to a scholarly community. While the venue’s editorial team has read, formatted, and copy–edited them, they have not undergone any formal review. This designation is comparable to how many practitioners and projects currently use blogging platforms, or even Twitter, to circulate their work. However, in this case, the publication venue curates and maintains the content, which, depending on the preferences of the venue and author, can be open for commentary and feedback. Authors may choose to have only this designation for their publications, or they can have their publications reviewed.

“Open Review”: These manuscripts have been accepted by a publication venue, formatted, copy–edited, circulated via its platform, and reviewed by the public and/or an identified group of readers (say, members of a venue’s editorial team). The degree to which these manuscripts are open (e.g., discoverable by readers and search engines) and reviewed is determined by the publication venue. Perhaps anyone can comment on a given manuscript, or maybe only the venue’s subscribers provide feedback. Authors may choose to have only this designation for their publications, to bypass open review altogether, or to conduct open review before or after blind review.

“Blind Review”: These manuscripts have been accepted by a publication venue, formatted, copy–edited, circulated via its platform, and reviewed by practitioners whose identity is concealed. During the process, the author’s identity may also be concealed (“double blind”). The degree to which these publications are open (e.g., discoverable by readers and search engines) and reviewed is determined by the publication venue. Blind review could follow open review, precede it, or not involve open review at all. Authors and publication venues could make these decisions on a case–by–case basis, if they so desired. This designation is comparable to how many peer–reviewed journals currently function.
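The three designations above can be sketched as a small data structure. The Python below is a minimal illustration under stated assumptions: `Designation`, `Manuscript`, `designate`, and `label` are hypothetical names, and the only rules encoded are the two the essay states, namely that a manuscript carries at least one designation and that designations do not assume a particular sequence.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Designation(Enum):
    ACCEPTED = "Accepted"
    OPEN_REVIEW = "Open Review"
    BLIND_REVIEW = "Blind Review"


@dataclass
class Manuscript:
    title: str
    # Designations are stored in the order they were earned; no order is enforced.
    designations: list[Designation] = field(default_factory=list)

    def designate(self, designation: Designation) -> None:
        """Record a designation once, preserving the order in which it was earned."""
        if designation not in self.designations:
            self.designations.append(designation)

    def label(self) -> str:
        """Return a reader-facing label suitable for publication metadata."""
        if not self.designations:
            raise ValueError("a circulating manuscript needs at least one designation")
        return " + ".join(d.value for d in self.designations)
```

Because the list preserves order without constraining it, a manuscript that underwent blind review before open review would be labeled "Blind Review + Open Review", while the reverse sequence would produce the reverse label.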

Some Possible Implications of Review Designations

To be sure, this approach to review designations has a number of possible implications, which are neatly tied to specific assumptions. First, following Clay Shirky’s “publish, then filter” model,[13] more manuscripts could enter into circulation than in the past, especially if practitioners submitted materials they would typically publish on their own websites. By extension, the costs and labor of publishing could increase. As such, venues would need to decide whether, for instance, the “Accepted” designation warrants a venue’s investment in it. Additionally, the designations are not merely technical matters. For many readers, there would be a learning curve when discerning one designation from the next, and practitioners would need to decide how designations inform their reference and citation practices, not to mention their curricula vitae. For example, practitioners may be more inclined to cite publications with the “Blind Review” designation than publications with only the “Accepted” designation. On the other hand, given the time that blind review can consume, publications with the “Accepted” designation may be made available rather quickly and represent more current work on a pressing topic. This emphasis on speed and relevance often motivates claims that citations via Twitter and scholarly blogs have greater immediacy, with ex nunc commentary, than formally reviewed, ex post publications.[14] Whatever the preference, a publication venue’s designation(s) would need to be clear to audiences, included in publication metadata, and accompanied by a succinct explanation of each designation (e.g., on a venue’s “about,” “policies,” “review,” or “submit” page).

If a given publication were revised—for instance, across its “Accepted” and “Open Review” designations—then it could also be versioned. Fortunately, platforms such as WordPress and Scalar have built–in mechanisms for versioning texts, allowing authors and audiences to compare differences between iterations or witnesses of manuscripts. In this case, versioning would add detail and depth to the designations, extending them beyond their status as labels and into the particulars of how a manuscript morphed over time, across states of revision. Here, Matthew Kirschenbaum’s extensive research on versioning electronic media, including the “Save As” chapter in Mechanisms, is deeply informative.[15] We might also borrow from the language of Git—a version control system that is popular in software development communities—to suggest that authors, reviewers, and even audiences would be able to see specific “snapshots” of a manuscript and then examine when, by whom, and to what effects feedback and revisions were “committed” to it. Such practices would help practitioners and venues better contextualize when and where review happens.

Put this way, review designations underscore the social character of manuscripts by highlighting their change and feedback histories. As Kathleen Fitzpatrick argues in “CommentPress: New (Social) Structures for New (Networked) Texts,” there is a growing “need to situate the text within a social network, within the community of readers who wish to interact with that text, and with one another through and around that text.”[16] To be sure, CommentPress, Digress.it, and publications such as Debates in the Digital Humanities exhibit this community–based impulse. In concert with these projects as well as Fitzpatrick’s claim, our prototype suggests that specific mechanisms for peer review could facilitate how texts are situated and expressed within a social network, without alienating those texts or rendering them static. It also implies that such mechanisms could help us better understand and share the labor that goes into review and editing processes—to not only invite revision and interaction around texts but also treat review and editing as central to the work we do, and thus worth studying, archiving, and even sharing.
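The Git analogy raised earlier, in which readers see “snapshots” of a manuscript and examine when and by whom revisions were “committed,” can be sketched with Python's standard library. This is an illustrative toy, not how WordPress, Scalar, or Git store versions: `Snapshot`, `ManuscriptHistory`, `commit`, and `diff` are hypothetical names, and the comparison relies on `difflib.unified_diff`.

```python
from __future__ import annotations

import difflib
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class Snapshot:
    text: str
    author: str          # who committed the revision
    message: str         # why the revision was made (e.g., a response to feedback)
    designation: str     # review designation in force when the snapshot was taken
    committed_at: datetime


@dataclass
class ManuscriptHistory:
    snapshots: list[Snapshot] = field(default_factory=list)

    def commit(self, text: str, author: str, message: str,
               designation: str, when: datetime | None = None) -> int:
        """Store a new snapshot and return its version number."""
        stamp = when or datetime.now(timezone.utc)
        self.snapshots.append(Snapshot(text, author, message, designation, stamp))
        return len(self.snapshots) - 1

    def diff(self, old: int, new: int) -> list[str]:
        """Return a line-based unified diff between two versions."""
        before = self.snapshots[old].text.splitlines()
        after = self.snapshots[new].text.splitlines()
        return list(difflib.unified_diff(
            before, after, fromfile=f"v{old}", tofile=f"v{new}", lineterm=""))
```

Each snapshot here records a designation alongside the text, so a diff between two versions can also show a reader which stage of review a change belongs to. Whether a venue would want that coupling is an open design question.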

Foregrounding these issues and others, below we detail six key aspects of our prototype and their practical relation to the notion of personas for peer review. (Again, in this context, personas are specific social roles performed by a member of a scholarly community, and many of them can be exhibited by a single profile.) As we hope readers will notice, these six aspects understand technologies as social and cultural objects, not value–neutral instruments for hammering metrics deterministically onto scholarly communication. Put differently, peer review personas enact an investment in thinking through metrics in order to prompt self–reflexive alternatives to, say, the vulgar quantification of scholarship in digital environments. These six aspects also help us speculate about the possible consequences and values of networking peer review by staging scenarios before any plugins are built or any significant amount of code, labor, and resources is committed.

Aspect 1: Provide a Range of Commentary on Scholarly Communications

By now, many scholars have recognized the various ways in which, to echo Fitzpatrick in “Beyond Metrics,” there is “a profound mismatch between conventional peer review as it has been practiced since the mid–twentieth century and the structures of intellectual engagement on the Internet.”[17] However, few scholars have actually constructed alternatives that better correspond with everyday intellectual engagements on the web. Among the few is Fitzpatrick, who, aside from publishing Planned Obsolescence, has contributed to environments such as CommentPress and MediaCommons, which offer community–based commentary in addition to, or in place of, publisher–derived selections.[18] She effectively describes the strengths of such systems when she explains how “crowdsourced review can improve on traditional review practices not just by adding more readers but by placing those readers into conversation with one another and with the author, deepening the relationship between the text and its audience.”[19] Importantly, Fitzpatrick’s research does not suggest, in a reactionary fashion, that “traditional” review should be discarded or abandoned entirely.[20] Her argument also refrains from instrumentalist, determinist, or positivist interpretations, and her publications on the topic of metrics, not to mention the mechanisms she helped develop, support hybrid approaches to scholarly communication. Beyond conceptual critiques of peer review, a significant amount of her work can be traced across venues, media, and formats, from a draft in CommentPress to its eventual publication online (in The Journal of Electronic Publishing) and/or in print (with New York University Press).

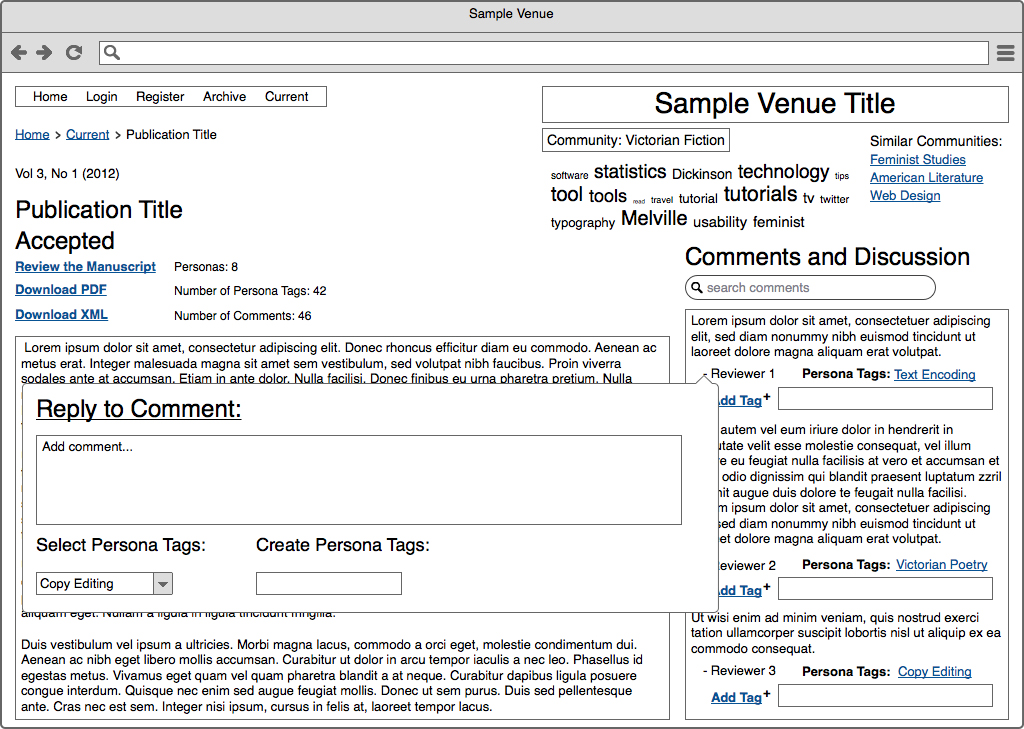

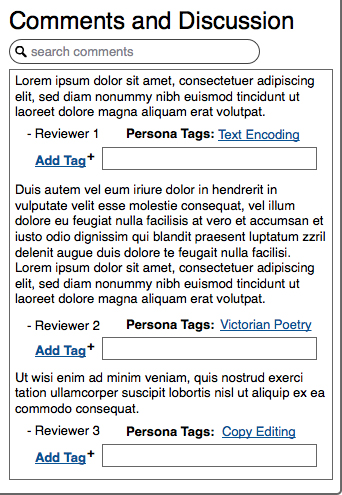

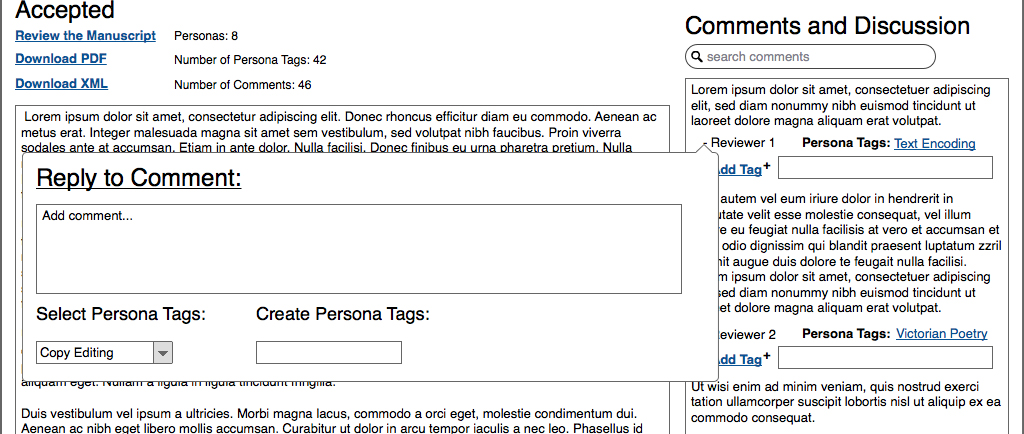

Inspired by Fitzpatrick’s research, our personas prototype stresses the often alienated social dynamics routinely at work in scholarly communication. It articulates peer review relationally—across individual chunks, commits, and layers—to enrich legacy processes through mechanisms typically found in social media platforms. For instance, authors pursuing the “Open Review” designation can receive feedback online in at least two ways: via both end and margin comments.

If these review fields are accessible by either a broad public or a specific audience (e.g., subscribers who comment behind a passcode–protected wall), then they can be designed dialogically, allowing authors to see how groups of reviewers are responding incrementally to a particular manuscript over time.

Here, the networked review process for Debates in the Digital Humanities is an incredibly compelling example. In the introduction to that University of Minnesota Press collection, editor Matthew Gold writes:

For Debates in the Digital Humanities, we chose to go with a semipublic option, meaning that the site was password protected and accessible only to the scholars involved in its production. Draft essays were placed on the site along with a list of review assignments; each contributor was responsible for adding comments to at least one other text. The process was not blind: reviewers knew who had written the text they were reading, and their comments were published under their own names. Often, debates between contributors broke out in the margins of the text.[21]

In the case of Debates, we have an instance of not only crowdsourced feedback (though the “crowd” was small, known, and assigned) but also the implementation of margin comments in a semipublic setting via the CommentPress theme for WordPress. Importantly, Gold observes how peer review in this setting also sparked debates in the margins. Rather than facilitating review solely through individuation (e.g., using Microsoft Word to provide individual feedback on individual articles), Debates constructed a space that highlighted the social relations—and thus the disagreements, concurrences, queries, and clarifications—fundamental to persuasive review. This approach complicates established, one–to–one relations between author and editor (or author and venue), even if today those one–to–one relations remain important to many venues. That said, the Debates approach to peer review should not be relegated to a reactionary, whiz–bang tactic. Quite the contrary, it builds upon peer review in sophisticated ways that clearly understand the social and cultural dimensions of technologies. And it does so in a hybrid fashion. In 2012, it was first released as a print volume. Then later, with assistance from Cast Iron Coding, it was published online and rendered open access at dhdebates.gc.cuny.edu, with a rich interface impossible to replicate in print. Among other features, that interface expresses feedback data for each paragraph of Debates. Yet it also extends the debates publicly, beyond the 2012 release date, and gives authors a discrete sense of how others are interpreting their work over time. As a novel approach to review and publishing, Debates in the Digital Humanities thus expands scholarly communication, makes it more accessible (by human and machine readers), and encourages the robustness of argumentation through both old and new media.

Learning from Debates, our prototype suggests that hybrid approaches to peer review demand flexible forms of feedback: feedback that can be expressed as a margin comment, an end comment, a response to existing comments, or an ongoing dialogue between reviewers and even authors across the review designations of a given manuscript. As we explain later in this essay, through the use of personas, this array of commentary could gradually help publication venues, their boards, and their contributors better understand their own review cultures (e.g., who is participating, when, how, and with what tendencies), without reducing review to matters of accountability, surveillance, or quantification.

Aspect 2: Document Otherwise Ephemeral or Overlooked Labor

One of the primary obstacles—perhaps the most significant obstacle—to networked modes of review is incentive. Just because you build a whiz–bang review mechanism does not mean people will use it, or that it warrants use. For many reviewers, new layers of review may also seem like more tasks and labor added to the already elaborate procedures of publication. Additionally, as Dan Cohen observes, “When the phrase academia is best known for is ‘publish or perish,’ it should come as no surprise that like most human beings, professors are highly attentive to the incentives for validation and advancement. Unfortunately, those incentives often involve publishing in gated journals.”[22] Indeed, due to persistent and all too often faulty assumptions that open access scholarship is not rigorously reviewed or edited, many authors need clear incentives to submit to web–based venues without gates. What’s more, they need substantial incentive to participate in experimental approaches to review, which—truth be told—may involve a learning curve, demand significant time and labor, or fail to deliver on their promises. (And a miasma of positivist promises frequently envelops the word, “digital.”) That said, as an incentive of sorts, our prototype invests in techniques that make the review work practitioners do visible to themselves and, if they so choose, to others. After all, the labor of review can seem ephemeral. Across venues, it is difficult to track, and it is usually distributed across machines. Feedback can be found in Word documents, email clients, and databases, not to mention on pieces of paper. Building up visible review profiles could thus help practitioners aggregate review content, search it, and present it as a core part of their scholarly labor—not a service somehow divorced from research and teaching but rather a critical practice essential to scholarly communication.

Through the articulation of peer review personas, aggregated review content could also be organized into clusters of activity, based on reviewer interest areas and domains of expertise. For example, a given practitioner might want to categorize review content based on, say, a literary or cultural period (e.g., “Victorian literature”), a technique (e.g., “Text Encoding”), a critical perspective (e.g., “Feminist Studies”), or an editorial practice (e.g., “Copy Editing”). Categories could be generated manually as well as computationally (e.g., based on text analysis that identifies word frequencies in review content). Of course, they would inevitably overlap, but the main intent is for venues and practitioners to increase self–reflexivity toward their own review cultures while sharing (again, if they are so willing) their review practices with others, including other venues and reviewers as well as interested authors and review committees. In so doing, they could exhibit the diverse roles scholars perform while reviewing and editing material. They could also underscore how, in practice, review highly depends on what a given venue and manuscript ask of a reviewer.

For instance, consider how reviewing for journals such as Kairos: A Journal of Rhetoric, Technology, and Pedagogy or Vectors: Journal of Culture and Technology in a Dynamic Vernacular might differ from text–centric journals intended solely for print. In “Speaking with Students”[23] and “Assessing Scholarly Multimedia,”[24] Virginia Kuhn and Cheryl Ball, respectively, stress how multimedia scholarship demands assessment strategies that legacies of text– or print–centric review may not afford. As an editor of Kairos, Ball notes: “authors compose the equivalent of a peer–reviewed article for Kairos, but instead of relying only on words (and maybe a few figures), they use whatever media and modes of production they need, such that the media and modes complement, if not create, the point the author wants to make.”[25] And as Tara McPherson (editor of Vectors) elaborates elsewhere, temporalities and editorial functions are gradually changing alongside scholarly multimedia and digital platforms: “[r]eview and editing will likely need to happen in incremental stages with more ongoing connection between scholars and editors.”[26] Taken together, these two remarks foreground how the review of digital materials corresponds (or should correspond) with the modes, media, and platforms through which they are made. In the case of journals such as Kairos and Vectors, together with presses such as Computers and Composition Digital Press, reviewers need to account for the scholarly contributions of an argument as well as how it is expressed through, say, a browser, a video, an encoded text, an algorithm, or a map. Since so many multimedia projects are collaboratively authored, reviewers must also frequently reconsider legacy assumptions about authorial voice, linearity, citation, and standards in scholarly arguments. 
On the whole, such review demands not only ongoing connections between scholars and editors but also a tremendous scope of expertise, which is typically represented by a range of practitioners, vocabularies, roles, and styles. By scholars who are unfamiliar with its intricacies, this review work can be easily misunderstood or overlooked; by practitioners who are deeply immersed in it, the same work can be difficult to explain or exhibit to the unfamiliar. Consequently, our prototype aims to render the scholarly labor of peer review visible while also communicating how heterogeneous and multifaceted it is. Embedded in setting and situation, peer review—and perhaps especially the review of scholarly multimedia—cannot be expressed solely at scale, only through abstraction, or simply through quantification. It must be understood socially, as a multilayered engagement.
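Earlier in this aspect, we noted that persona categories could be generated computationally, for example through text analysis that identifies word frequencies in review content. The Python sketch below shows one very simple way that might work. Everything specific in it is an assumption made for illustration: the stopword list, the keyword sets attached to “Text Encoding” and “Copy Editing,” the `min_hits` threshold, and the function names `word_frequencies` and `suggest_categories`. A real venue would need to negotiate such vocabularies with its reviewers.

```python
import re
from collections import Counter

# Hypothetical stopwords and keyword sets; a venue would curate its own.
STOPWORDS = {"the", "a", "an", "and", "is", "of", "to", "in", "please", "this"}
CATEGORY_KEYWORDS = {
    "Text Encoding": {"tei", "markup", "encoding", "xml"},
    "Copy Editing": {"typo", "comma", "citation", "spelling"},
}


def word_frequencies(text: str) -> Counter:
    """Count lowercase alphabetic words in a review comment, ignoring stopwords."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(word for word in words if word not in STOPWORDS)


def suggest_categories(text: str, min_hits: int = 2) -> list[str]:
    """Suggest persona categories whose keywords appear at least min_hits times."""
    freqs = word_frequencies(text)
    scores = {
        category: sum(freqs[keyword] for keyword in keywords)
        for category, keywords in CATEGORY_KEYWORDS.items()
    }
    return sorted(category for category, score in scores.items() if score >= min_hits)
```

A sketch like this only proposes tags; consistent with the argument above, the reviewer and venue would still decide which suggestions meaningfully describe the labor involved, since frequency counts alone cannot capture the setting and situation of a review.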

Aspect 3: Express Activity with Granularity over Time and across Venues

In “Beyond Metrics,” Fitzpatrick observes that “[d]igital scholarly publishing will require rethinking the ways that [web] traffic is measured and assessed, moving from a focus on conversion to a focus on engagement—and engagement can be quite difficult to measure.”[27] To be sure, the work taking place in any authoring and publication environment is ultimately impossible to translate seamlessly, without loss, into documents for assessment by committees, boards, readers, and other review groups. This problem is not new or at all unique to digital technologies. But it persists. In the context of web–based scholarly communications, a page view says little about how an article was read, and—while it is easy to measure—a click hardly indicates interest or endorsement. Here, we have plenty to learn from the history of, say, Nielsen ratings and box office numbers, which measure audience size and demographics but cannot capture the complexities of embodied attention, interpretation, and social engagement. As Fitzpatrick implies, the profit–driven, marketing–devised benchmark of conversions (e.g., the conversion of a visitor into a customer, or a click into capital) in private or industry sectors may not be especially relevant to scholarly communications. In academia, engagement will likely be defined and formulated uniquely by publication venues and authors, and it will repeatedly resist reduction to numbers and impact factors.

That said, our prototype responds to recent advocacy for article–level metrics, decoupled journals, and altmetrics, including Priem et al.’s “Altmetrics: A Manifesto.”[28] This work frequently endorses the use of computational mechanisms, meta–venues, and social media platforms to reconstruct how we evaluate the ostensible impact of scholarship, and it can—or ultimately could—be adopted to better reflect the actual economies and contours of scholarly communication. It could also allow practitioners to better examine the particulars of circulation and citation, with more detail than journal– or book–level analytics.

Yet article–level metrics and altmetrics generally privilege the reception, not the revision or review, of scholarly communications. For instance, even if it encourages consideration of both the “how and why” and the “how many” of scholarly communications, the altmetrics manifesto generally privileges the latter. At one point, it declares the following:

The speed of altmetrics presents the opportunity to create real–time recommendation and collaborative filtering systems: instead of subscribing to dozens of tables–of–contents, a researcher could get a feed of this week’s most significant work in her field. This becomes especially powerful when combined with quick “alt–publications” like blogs or preprint servers, shrinking the communication cycle from years to weeks or days. Faster, broader impact metrics could also play a role in funding and promotion decisions.[29]

While these overarching investments in impact, speed, circulation, and filtering no doubt shape and even resituate the conversations we have about scholarly communication, they should not lose track of everyday, scholarly labor or differences across disciplines. Again, the altmetrics manifesto does make this gesture toward balancing the abstract with the particular—to “correlate between altmetrics and existing measures, predict citations from altmetrics, and compare altmetrics with expert evaluation” (n. pag.). Nonetheless, its business–like emphasis is clearly breadth and rapidity. In our prototype, we temper such emphasis with thorough attention to the iterative and granular expression of review. These expressions include sentence–level edits, brief remarks made in the margins, lengthy end comments, and routine responses to authors and other reviewers. As expressions accumulate, they are exhibited on each profile, and they can be tagged with descriptive metadata that corresponds with particular categories or practices. Personas are thus constructed based on labor committed over time.

To some, attention to granularity may seem counterintuitive to shrinking the cycles of scholarly communication. For instance, it could generate too much data, over–incrementalize scholarly production, bloat authoring and publication platforms, exaggerate the meaningfulness of banal activities, or be easily exploited. All of these criticisms are fair, and they each warrant serious consideration. That said, the other end of this spectrum should concern scholars as well: an extreme focus on the personalization and real–time buzz afforded by social networking mechanisms could be quite conducive to groupthink, hive minds, echo chambers, or popularity contests. Indeed, scholars and publication venues should speculate seriously about the effects of shrunken cycles, including what labor, materials, and points of view could be easily ignored amidst the viral hype and rapid pace of technological changes. Recalling Wendy Hui Kyong Chun in “The Enduring Ephemeral, or the Future Is a Memory,” “we need to get beyond speed as the defining feature of digital media or global networked communications.”[30] Comprehensive peer review remains relevant amidst the network fury,[31] even if it apparently plods behind trending topics and real–time responses.

As we develop authoring and publishing platforms with these concerns about metrics and labor in mind, we might encourage what John Maxwell calls a “research–rich counterpoint.” In “Publishing Education in the 21st Century and the Role of the University,” Maxwell argues that “[p]ublishing cannot be reduced to its industrial manifestation, especially in the 21st century, when new publishing environments and models push at traditional boundaries. University–based programs can provide a constructive, research-rich counterpoint that both strengthens and challenges the industrial context by simultaneously engaging and transcending it.”[32] In the case of our prototype, we understand “industrial manifestation” as a tendency to privilege vulgar quantification and efficiency over numerous research–rich counterpoints: thorough deliberation, constructive review, iteration and revision, and balancing multiple—often conflicting—perspectives and sources. As our prototype suggests, there’s no need to polarize here; without being reactionary or instrumentalist, aspects of social networks can certainly be blended with legacy procedures for review.

Still, we are reminded of Alexander Galloway’s recent remark in Excommunication: “The network form has eclipsed all others as the new master signifier.”[33] Which is to say: given the current ubiquity of networks (as rhetorical, cultural, and technical devices), we might determine what other forms or signifiers can help us better understand the intricate processes of peer review, beyond counting, diagramming, filtering, and visualizing them.[34] Through our prototype, we suggest that granularity—especially the ways it can detail the messy, iterative development of scholarly communication over time and across contributors—is one way to demystify the current allure of networks and strategically balance interests in “how many” with the research–rich counterpoints of “how and why.” But to be sure, a significant question is how that granularity is actually expressed by publishing venues through scholarly design.

Aspect 4: Developing Reputations and Authority

Across the web, reputation engines such as Stack Exchange translate the granularity of activity into contributor ratings or scores. These engines often combine the question–and–answer format of bulletin board systems with ranking mechanisms, which let community members indicate—with a single click—whether member contributions are “useful” or “informative” (adjectives that are highly dependent upon context, member interest, and community needs). Through these engines, social networks algorithmically push popular content to the top of pages, allowing visitors to ignore or skim the balance. Echoing Jeff Atwood (co–designer of Stack Exchange and Stack Overflow), such mechanisms ostensibly prompt people to “play,” or at least participate more frequently, in networks, “collecting reputation and badges and rank and upvotes” as they go.[35] Yet, aside from Fitzpatrick’s claim that profit–driven, marketing–devised techniques will not translate neatly (if at all) into scholarly communication environments, scholars will likely have significant concerns about integrating reputation engines into peer review. Those concerns might be justifiably informed by Ian Bogost’s now popular critique of exploitationware:[36] Would reputation engines gamify scholarly production? Would they cheapen the labor of review? Would they flatten the complexity of social relations? Would they merely patch an issue that really demands significant research and debate? Would anyone use them, who might resist them, and—in practice—who would they actually benefit?

All of these questions, especially the last one, engage the cultural and social biases known to proliferate throughout reputation engines (in particular) and technocultures (in general).[37] As many practitioners of science, technology, media, and cultural studies have claimed for decades, technologies are not innocent, and the Internet is not inherently democratic. In the specific case of reputation engines, any given algocratic measure—what A. Aneesh defines as “rule of the algorithm, or rule of the code”[38]—is by no means passive. Operating in the background, it may only appear as such. By extension, its effects (e.g., the reputation scores generated by Stack Exchange) should be interpreted with a healthy dose of skepticism, even if the system is framed around an ethos of play, collaboration, or community. To borrow an example from Lisa Nakamura’s compelling article on Goodreads and socially networked reading: “a folksonomic, vernacular platform for literary criticism and conversation” can also be a site for “the operationalization and commodification of reading as an algocratic practice.”[39] To be sure, Nakamura’s observations about networked reading are applicable to networked peer review. If we are to even consider reputation engines for the review of scholarly communication, then we must also acknowledge that they not only are value–laden; they also favor norming, privilege, and industrialization. We must also be willing to analyze how they are culturally or socially embedded, and then—if we are still curious about their applications for peer review—construct or rewire them with a deep awareness of the values and exchanges they support. Here, Tara McPherson’s blending of design studies with feminist studies, including her inquiry in “Designing for Difference,” is incredibly informative.[40] So, too, are indie videogames built by Anna Anthropy, merritt kopas, Paolo Pedercini, and Robert Yang.

In our prototype, profiles and personas help practitioners develop reputations and authority without defaulting to voting techniques, ranking mechanisms, or exploitationware that might “[push] processes of negotiability into the background.”[41] One way they avoid this push is through the use of persona tags, which are used, keyword–like, to identify and describe practitioners’ areas of expertise.

Reiterating two points made previously in this essay: to highlight difference, a single profile can express multiple personas, and people can categorize their work based on, say, a literary or cultural period (e.g., “Victorian Literature”), a technique (e.g., “Text Encoding”), a critical perspective (e.g., “Feminist Studies”), or an editorial practice (e.g., “Copy Editing”). Through persona tags, practitioners can apply these descriptions to their own profiles. Or, in a more social fashion, other practitioners (e.g., members of a venue’s editorial team) can submit or apply them. Of course, publication venues may wish to implement tag moderation to let practitioners approve, reject, or suspend a tag suggested by another person prior to the tag’s appearance on a profile. Additionally, controlled vocabularies or predictive text could be used in tandem with folksonomies to foster consistency in data entry. But perhaps most importantly, in the prototype these tags can be attached to profiles as well as to discrete instances of activity (e.g., an end comment or margin comment).
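To make the moderation of persona tags more concrete, here is a minimal sketch in Python. It is not the prototype's code: the names `TagStatus`, `PersonaTag`, and `Profile`, the status values, and the rule that self-applied tags skip moderation are all assumptions introduced for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class TagStatus(Enum):
    """Hypothetical moderation states for a persona tag."""
    PENDING = "pending"      # suggested by someone else, awaiting a decision
    APPROVED = "approved"    # visible on the profile
    REJECTED = "rejected"    # declined by the practitioner
    SUSPENDED = "suspended"  # hidden for now, may be restored later


@dataclass
class PersonaTag:
    label: str       # e.g., "Copy Editing"
    applied_by: str  # the practitioner who submitted the tag
    target: str      # "profile", or an activity id such as "end-comment-3"
    status: TagStatus = TagStatus.PENDING


@dataclass
class Profile:
    owner: str
    tags: list[PersonaTag] = field(default_factory=list)

    def suggest_tag(self, label: str, applied_by: str, target: str = "profile") -> PersonaTag:
        # Assumption: tags applied by the owner need no moderation;
        # tags suggested by others wait for the owner's decision.
        status = TagStatus.APPROVED if applied_by == self.owner else TagStatus.PENDING
        tag = PersonaTag(label, applied_by, target, status)
        self.tags.append(tag)
        return tag

    def moderate(self, tag: PersonaTag, decision: TagStatus) -> None:
        # Only tags attached to this profile can be moderated here.
        if not any(t is tag for t in self.tags):
            raise ValueError("tag does not belong to this profile")
        tag.status = decision

    def visible_personas(self) -> list[str]:
        # Personas shown publicly are the labels of approved tags.
        return sorted({t.label for t in self.tags if t.status is TagStatus.APPROVED})
```

In this sketch, a tag suggested by an editor for a specific end comment stays hidden until the practitioner approves it, and a suspended tag drops out of `visible_personas()` without being deleted from the record.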

Put this way, personas grow over time through the accumulation of encoded activities, which exemplify how exactly practitioners perform different areas of expertise during review. As ways to organize information, they are simultaneously abstract and concrete.[42] If practitioners are willing to let others describe their review work through descriptive encoding (i.e., tagging), then personas might also foster self–reflexivity. That is, they could afford practitioners a sense of how others perceive their contributions to scholarly communities. In fact, the social application of persona tags could even tell practitioners where their peers consider them authorities. True, WorldCat Identities already generates similar data about authors, allowing people to view publication timelines, information about audience level, and—most relevant here—the subject areas with which an author’s publications are associated. However, the production of this data is largely automated, without a recognizable social component. And its expression feels rather cold and decontextualized. Nonetheless, WorldCat Identities, not to mention Google Scholar Citations, provides us with an example of how review profiles and reputations could be constructed within scholarly publication platforms. There, they will no doubt require some sort of social element—perhaps persona tags, perhaps something else entirely—that prompts perspective and feedback from practitioners. Otherwise, their future may resemble the current footer of every Google Scholar profile: “Dates and citation counts are estimated and are determined automatically by a computer program.”

Aspect 5: Collaborate with a Collective of Practitioners

As we argue earlier in this essay, shifting peer review from one–to–one to many–to–many exchanges is fundamental to our personas prototype. The prototype’s mechanics of engagement as well as its approaches to description and attribution—including profiles, personas, and persona tags—allow practitioners to demonstrate how otherwise individuated activities and contributors function relationally.

More specifically, a many–to–many paradigm for peer review recognizes that practitioners typically author multiple publications, and publications typically have multiple reviewers. It also acknowledges that publications and reviews need not be treated as whole or complete texts. Instead, they can be treated in parts (e.g., sentences, annotations, or lexia), which can be lumped or clustered together (e.g., through titles, paragraphs, or dates) and navigated accordingly. The key, then, is articulating these various components, allowing each of them to have multiple values (e.g., a single article with forty unique paragraphs or a reviewer with twenty unique comments for a specific author), and persuasively representing how they are related. This way, review can be expressed and encoded dialogically. And a group can collectively review the content at hand across a spectrum of openness (e.g., behind a passcode–protected wall, with a URL but not discoverable, or with a URL and discoverable).
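One way to picture this many-to-many arrangement is as an index of comments that can be read by reviewer, by publication, or by part. The sketch below is illustrative only; `Openness`, `Comment`, and `ReviewIndex` are hypothetical names, and the three openness levels simply mirror the examples given above.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Openness(Enum):
    """Hypothetical labels for the spectrum of openness described above."""
    PASSCODE_PROTECTED = "passcode"
    UNLISTED_URL = "url-not-discoverable"
    DISCOVERABLE_URL = "url-discoverable"


@dataclass(frozen=True)
class Comment:
    reviewer: str
    author: str
    publication: str
    part: str  # e.g., "paragraph-7"
    text: str


class ReviewIndex:
    """Stores each comment once and exposes it through several relations."""

    def __init__(self, openness: Openness):
        self.openness = openness
        self._by_reviewer = defaultdict(list)
        self._by_part = defaultdict(list)

    def add(self, comment: Comment) -> None:
        self._by_reviewer[comment.reviewer].append(comment)
        self._by_part[(comment.publication, comment.part)].append(comment)

    def thread(self, publication: str, part: str) -> list:
        # All comments attached to one part, in the order they were added.
        return list(self._by_part.get((publication, part), []))

    def reviewers_of(self, publication: str) -> list:
        # One publication, many reviewers.
        return sorted({
            c.reviewer
            for (pub, _part), comments in self._by_part.items()
            if pub == publication
            for c in comments
        })

    def publications_reviewed_by(self, reviewer: str) -> list:
        # One reviewer, many publications.
        return sorted({c.publication for c in self._by_reviewer.get(reviewer, [])})
```

Because no single text or person anchors the index, the same comment can surface in a paragraph-level thread, on a reviewer's profile, or in a venue-wide view.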

In this scenario, authors do not receive (by email or the like) a series of contiguous reviews from individual reviewers. Instead, they view their manuscripts as opportunities for staging scholarly conversations, with reviewers gathering on that stage to agree, disagree, reply, clarify, question, and so on. Depending on a venue’s preference, this approach could be understood or labeled as “social,” “collaborative,” or “networked,” and it could be conducted collectively and dialogically over a period of time. Perhaps, too, authors could participate in the conversation as it emerges or after a certain milestone in the review process. Again, Debates in the Digital Humanities and its semipublic review comprise a convincing example here: “Often, debates between contributors broke out in the margins of the text.”[43] In situations where authors, venues, or reviewers prefer blind review to something semipublic, identifiers could be hidden entirely or masked with generic handles (e.g., “Reviewer 1”). Through its recent adoption of Hypothes.is, The Journal of Electronic Publishing (JEP) is already experimenting with this mode of review. As Maria Bonn and Jonathan McGlone note in Volume 17, Issue 2 of the journal, Hypothes.is is “an open platform for the collaborative evaluation of knowledge.”[44] It is also “a tool for community peer–review.”[45] Even though it is in alpha, Hypothes.is already allows people to create accounts through which granular activity (e.g., annotations) can be clustered, archived, and time–stamped. That activity can also be tagged (e.g., “JEP”) and read accordingly. As with platforms such as Scalar and other initiatives inspired by the W3C’s Open Annotation Data Model, Hypothes.is annotations have unique addresses. They are also searchable, viewable in condensed or detailed forms, and persistent throughout revisions made to the primary text. In fact, practitioners can compare differences across versions, and the interaction design is rather dialogic in character, stressing engagement over vulgar quantification. (Of note, as of this writing, Hypothes.is is not employing a voting tool, reputation engine, or any exploitationware.) Practitioners can reply to each other’s comments, create threads, and reference each other. Like the Open Video Annotation Project at Harvard, the Annotation Studio at MIT, and AustESE (developed across numerous universities), Hypothes.is uses annotator.js, an open–source JavaScript library that allows it to function as an agile plugin (similar to Disqus) across venues and browsers. In fact, the founder of Hypothes.is, Dan Whaley, argues that “[a]nnotation eventually belongs in the browser,”[46] as a kind of overlay. But, in order for it to be more conducive to scholarly review, Hypothes.is may need to balance its focus on primary texts with a focus on profiles and practitioner histories. As our prototype suggests, review environments informed by networking mechanisms may benefit from views that allow contributors to see and examine relations between authors, reviewers, and texts, without primary texts always acting as the privileged anchor points.

That is, while primary texts serve as stages for scholarly conversation, they are one among many means to ground peer review and analyze it as a social process. Robust profiles and rich personas matter for review networks, too, especially if publication venues are interested in building complex review histories.

Aspect 6: Assess the Tendencies and Biases of Peer Review in the Aggregate

As part of this sixth and final aspect of the personas prototype, we underscore the relevance of networking mechanisms to assessing how peer review unfolds in specific publication venues. Echoing a point made earlier in this essay, review and editing are central to scholarly work, and thus warrant study, archiving, and even sharing. Through that study, perhaps publication venues can use authoring and publishing platforms to learn more about how they review material, who submits that material, who participates in the review process, how, and with what (often unacknowledged) tendencies and biases over time. Of course, these issues are not new or unique to digital technologies. And they remain a matter of representation: examining the demographics of scholarly communication in order to better understand who participates and to what effects. Yet they are also about scholarly communication cultures: how—consciously or not—venues privilege particular methods, develop their own styles and language practices, appeal to and ignore certain groups, reference what they consider foundational histories and publications, and even foster worldviews or ideologies. When scholarly communication is approached with an investment primarily in business-like impact, citation, or quantification, these types of social and cultural factors can be easily overlooked. After all, they are largely about everyday processes that frequently escape the transduction of practice into product.[47]

For the purposes of our prototype, then, a key question is how and through what conditions a publication happens. As a matter of emphasis, this question is significantly different from asking how a publication is received or where it is cited. Although these two questions are important, too much attention to them can overshadow or “black box” the iterative production of scholarly communication.[48] What’s more, attention to impact factors may not adequately address now ubiquitous remarks that the peer review process is opaque, “unhelpful,” “broken,” and “generally awful.”[49]

By pushing publication venues to build and assess review histories, profiles, and personas through web–based mechanisms, our prototype endorses the treatment of peer review as an object of scholarly inquiry. In order for that treatment to occur, venues need archives with electronic submissions and review content accompanied by, say, Dublin Core metadata (e.g., the title, description, date, creator, relation, and coverage elements). Aside from being able to search these archives, practitioners should also be able to use text analysis tools, such as those found through the Text Analysis Portal for Research (TAPoR), to study the most common words mentioned during peer review, and they should be able to use data visualization tools, such as the D3 JavaScript library, to graph social relationships between profiles, submissions, and reviews. Many platforms, including Scalar, already have visualization mechanisms integrated into their authoring and publishing workflows, and this multimodal approach encourages practitioners to develop various perspectives on their scholarship and its expression (as text, graph, image, or dynamic media). It also prompts them to consider how other practitioners may read as well as repurpose their research (as data). Such consideration can foster awareness within scholarly communities about the long–term storage, curation, and reuse of digital projects, including publication venues and their review histories. That said, learning from the Internet Archive’s Wayback Machine, it is crucial for archives to include more than the most recent state of digital resources. As our prototype implies, how resources such as manuscripts change—through revision and in response to peer review—can say a tremendous amount about the development of social and cultural biases over time.
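As a rough illustration of what such inquiry could look like, the following Python sketch counts common words across archived reviews and derives reviewer-to-submission pairs that a visualization library could graph. The records, their values, and the stopword list are invented for the example; only the Dublin Core element names (title, description, date, creator, relation, coverage) come from the discussion above, and tools such as TAPoR or D3 are not used here.

```python
import re
from collections import Counter

# Hypothetical archive records keyed by Dublin Core element names.
# Assumption: the review text is stored in "description" and the
# reviewed submission is referenced in "relation".
REVIEWS = [
    {"title": "Review of submission 001", "creator": "Reviewer 1",
     "date": "2014-07-01", "relation": "submission-001", "coverage": "Issue 17.2",
     "description": "The argument about networks needs more evidence."},
    {"title": "Review of submission 001", "creator": "Reviewer 2",
     "date": "2014-07-03", "relation": "submission-001", "coverage": "Issue 17.2",
     "description": "Strong evidence, but the networks section is too brief."},
    {"title": "Review of submission 002", "creator": "Reviewer 1",
     "date": "2014-07-09", "relation": "submission-002", "coverage": "Issue 17.2",
     "description": "Consider adding evidence from review histories."},
]

# A deliberately tiny stopword list, for illustration only.
STOPWORDS = {"the", "about", "but", "is", "too", "from", "a", "an", "of", "and"}


def common_words(records, n=2):
    """Return the n most frequent non-stopwords across review descriptions."""
    counts = Counter()
    for record in records:
        words = re.findall(r"[a-z']+", record["description"].lower())
        counts.update(w for w in words if w not in STOPWORDS)
    return counts.most_common(n)


def relation_edges(records):
    """Return unique (reviewer, submission) pairs, suitable for graphing."""
    return sorted({(r["creator"], r["relation"]) for r in records})
```

The edge list could be exported (for instance, as JSON) for a visualization library, while the word counts offer one crude view of a venue's review vocabulary. Neither replaces close reading of the reviews themselves.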

Similar to services such as GitHub, our prototype lets practitioners see what has been added, deleted, and annotated, by whom, and when during the course of review. In principle, these change histories prompt venues to ask how, why, and under what assumptions revision is happening in their communities. They also prompt those venues to blend human and computer vision in their inquiries. Part of this analysis could include questions about not just who is contributing to publications but also through what personas they are contributing. That is, the articulation of personas stresses the importance that specific roles—including author, editor, moderator, reader, domain expert, and designer—play in the review process. Since practitioners do not always assume the same roles, they do not always write or review in the same way. Their biases are therefore social and cultural in character, difficult to detach from the settings in which they emerge. This aspect of peer review is important to recall as we examine how this manuscript becomes that one.
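A change history of this kind can be sketched with Python's standard `difflib` module. This is only an illustration under stated assumptions: the `Revision` structure and its fields are hypothetical, a manuscript is treated as a list of sentences, and the prototype itself may track changes quite differently.

```python
import difflib
from dataclasses import dataclass


@dataclass
class Revision:
    contributor: str  # who made the change
    timestamp: str    # when it was made, e.g., "2014-07-15"
    lines: list       # the manuscript at this point, one sentence per item


def describe_change(old: Revision, new: Revision) -> dict:
    """Summarize what was added and deleted between two revisions."""
    added, deleted = [], []
    for entry in difflib.ndiff(old.lines, new.lines):
        if entry.startswith("+ "):
            added.append(entry[2:])
        elif entry.startswith("- "):
            deleted.append(entry[2:])
        # Entries starting with "  " (unchanged) or "? " (hints) are skipped.
    return {
        "by": new.contributor,
        "when": new.timestamp,
        "added": added,
        "deleted": deleted,
    }
```

Pairing each summary with a contributor's persona (author, editor, domain expert, and so on) would let a venue ask not only what changed but also in what role the change was made.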

Next Steps, Including Some Concerns and Limitations

The most obvious next steps for the “Peer Review Personas” prototype are to further develop its code and have several communities test it (e.g., through special journal issues) prior to implementation. Here, software such as Open Journal Systems appears ideal for testing. It is used widely across disciplines, and it is familiar to many authors, editors, reviewers, and readers. Until this testing is conducted, it is difficult to fully understand the implications of the prototype, hence the ambivalence we express in this essay’s introduction. For now, then, we will conclude with a few concerns as well as some additional observations about the prototype’s limitations: 1) developing a plugin to function consistently across multiple platforms or even across several instances of the same software is no small task, especially where encoding and programming are concerned; 2) by extension, practitioners need to avoid reinventing the wheel, or building new authoring, review, annotation, and publication mechanisms when similar, open source tools already exist (in this case, Hypothes.is); 3) author disambiguation, such as the persistent digital identifiers provided by ORCID, will be essential to the lifespan, effectiveness, and appeal of the prototype; 4) collaboration toward the prototype’s implementation would benefit greatly from teams with diverse expertise, from theorists and historians of technology to programmers, librarians, and designers; 5) like all prototypes, this one will change during testing and implementation (if it is ever implemented); 6) social, cultural, and methodological differences across disciplines (including differences between the arts, humanities, and sciences) dramatically affect how material is reviewed, suggesting that the prototype cannot be implemented uniformly; 7) technologies should not be perceived as solutions to cultural issues, and peer review is deeply embedded in culture; and 8) finally, to produce a metric (or a tool for standardized measurement) is also to subject it to exploitation. For this reason, we have taken speculation quite seriously, despite our own quibbles with vaporware. As Wendy Hui Kyong Chun, with Lisa Rhody, claims in “Working the Digital Humanities”: “To live by the rhetoric of usefulness and practicality—of technological efficiency—is also to die by it.”[50] And culture often thrives precisely when it resists efficiency, no matter how necessary efficiency may appear.

Acknowledgements

With funding from the Social Sciences and Humanities Research Council of Canada, this research was conducted as part of a partnership between Implementing New Knowledge Environments (INKE) and the Modernist Versions Project (MVP). We would like to thank the INKE and MVP research teams, especially Jon Bath, Stephen Ross, Jon Saklofske, and Ray Siemens, for their support. We would also like to thank everyone at the Electronic Textual Cultures Laboratory and the Maker Lab in the Humanities at the University of Victoria, where this research was conducted.

Nina Belojevic is Assistant Director of the Maker Lab in the Humanities and a Research Developer and Information Architect with the Electronic Textual Cultures Laboratory at the University of Victoria.

Jentery Sayers is Assistant Professor of English; Member of the Cultural, Social, and Political Thought Faculty; and Director of the Maker Lab in the Humanities at the University of Victoria.

Implementing New Knowledge Environments (INKE) is a collaborative group of researchers and graduate research assistants working with other organizations and partners to explore the digital humanities, electronic scholarly communication, and the affordances of electronic text.

The Modernist Versions Project (MVP) aims to advance the potential for comparative interpretations of modernist texts that exist in multiple forms by digitizing, collating, versioning, and visualizing them individually and in combination.

Notes

Ross, Claire, Melissa Terras, Claire Warwick, and Anne Welsh. “Enabled Backchannel: Conference Twitter Use by Digital Humanists.” Journal of Documentation 67.2 (2011): 214.

Kirschenbaum, Matthew. “Digital Humanities As/Is a Tactical Term.” Debates in the Digital Humanities. Ed. Matthew K. Gold. (Minneapolis: University of Minnesota Press, 2012), 417.

Gold, Matthew K. “The Digital Humanities Moment.” Debates in the Digital Humanities. Ed. Matthew K. Gold. (Minneapolis: University of Minnesota Press, 2012), x.

Awan, Imran. “Islamophobia and Twitter: A Typology of Online Hate Against Muslims on Social Media.” Policy & Internet 6.2 (2014): 134-38.

Beller, Jonathan. The Cinematic Mode of Production: Attention Economy and the Society of the Spectacle. (Hanover, NH: Dartmouth College Press, 2006), 303-312.

See Mandavilli, Apoorva. “Peer Review: Trial by Twitter.” Nature 469 (2011): 287. Also see Priem, Jason, and Bradley M. Hemminger. “Decoupling the Scholarly Journal.” Frontiers in Computational Neuroscience 6 (2012): 10-12.

Given its prominence in information, design, and publishing studies, we use “personas” instead of “personae” throughout this essay.

Kraus, Kari. “Conjectural Criticism: Computing Past and Future Texts.” Digital Humanities Quarterly 3.4 (2009).

Lukens, Jonathan, and Carl DiSalvo. “Speculative Design and Technological Fluency.” International Journal of Learning and Media 3.4 (2012): 23-40.

Galey, Alan, and Stan Ruecker. “How a Prototype Argues.” Literary and Linguistic Computing 25.4 (2010): 405-424.

Shirky, Clay. Here Comes Everybody: The Power of Organizing Without Organizations. (New York: Penguin Press, 2008), 81.

See Groth, Paul, and Thomas Gurney. “Studying Scientific Discourse on the Web Using Bibliometrics: A Chemistry Blogging Case Study.” Proceedings of the WebSci10: Extending Frontiers of Society On-Line. (Raleigh, NC. 26-27 April 2010), 4. Also see Priem, Jason, and Kaitlin Light Costello. “How and Why Scholars Cite on Twitter,” Proceedings of the American Society for Information Science and Technology 47.1 (2010): 3.

Kirschenbaum, Matthew G. Mechanisms: New Media and the Forensic Imagination. (Cambridge, MA: MIT Press, 2008).

Fitzpatrick, Kathleen. “CommentPress: New (Social) Structures for New (Networked) Texts.” Journal of Electronic Publishing 10.3 (2007): n. pag.

Fitzpatrick, Kathleen. “Beyond Metrics: Community Authorization and Open Peer Review.” Debates in the Digital Humanities. Ed. Matthew K. Gold. (Minneapolis: University of Minnesota Press, 2012), 452.

Fitzpatrick, Kathleen. Planned Obsolescence: Publishing, Technology, and the Future of the Academy. (New York: New York University Press, 2011).

Indeed, despite common tendencies to associate all things digital with revolution, facilitating knowledge production in networked environments need not involve the absolute revocation of old media. To reiterate a gesture made earlier in this essay: digital, networked, or web-based media do not in and of themselves relate antagonistically with print, analog, or offline artifacts. The relations between new and old are recursive, intertwined, blurred, and often subtle. Among many others, scholars such as Jay David Bolter, Richard Grusin, N. Katherine Hayles, Alan Liu, Nick Montfort, Rita Raley, and Jonathan Sterne have argued and demonstrated this point quite thoroughly since the 1990s.

Gold. “The Digital Humanities Moment,” xiii, emphasis added.

Cohen, Dan. “To Make Open Access Work, We Need to Do More Than Liberate Journal Articles.” Wired, 15 January 2013, n. pag.

Kuhn, Virginia. “Speaking with Students: Profiles in Digital Pedagogy.” Kairos: A Journal of Rhetoric, Technology, and Pedagogy 14.2 (2010).

Ball, Cheryl. “Assessing Scholarly Multimedia: A Rhetorical Genre Studies Approach.” Technical Communication Quarterly 21.1 (2012).

McPherson, Tara. “Scaling Vectors: Thoughts on the Future of Scholarly Communication.” The Journal of Electronic Publishing 13.2 (2010): n. pag.

Priem, Jason, Dario Taraborelli, Paul Groth, and Cameron Neylon. “Altmetrics: A Manifesto.” Altmetrics.org. 28 September 2011.

Chun, Wendy Hui Kyong. “The Enduring Ephemeral, or the Future Is a Memory.” Critical Inquiry 35.1 (2008): 170.

Galloway, Alexander R. “Love of the Middle,” in Excommunication: Three Inquiries in Media and Mediation, Alexander R. Galloway, Eugene Thacker, and McKenzie Wark. (Chicago: University of Chicago Press, 2014), 61.

Maxwell, John W. “Publishing Education in the 21st Century and the Role of the University.” The Journal of Electronic Publishing 17.2 (2014): n. pag., emphasis added.

Atwood, Jeff. “The Gamification.” Coding Horror: Programming and Human Factors. 12 October 2011.

Bogost, Ian. “Persuasive Games: Exploitationware.” Gamasutra. 3 May 2011.

For instance, research by Bogdan Vasilescu et al. found that “the percentage of women engaged in SO is greatly unbalanced, and men represent the vast majority of contributors....Moreover, women are not only a minority in SO, but their levels of participation are significantly different from men’s: men participate more, earn more reputation, and engage in the ‘game’ more than women do” (6-7). As many readers will quickly note, this research is premised on a gender binary as well as various slippery assumptions about how researchers should identify someone’s gender on- or offline. Vasilescu et al. acknowledge these flaws in the conclusion of their paper, mentioning (among other things) that future research should expand gender beyond the binary.

Aneesh, A. Virtual Migration: The Programming of Globalization. (Durham: Duke University Press, 2006), 5.

Nakamura, Lisa. “‘Words with Friends’: Socially Networked Reading on Goodreads.” PMLA 128.1 (2013): 242.

McPherson, Tara. “Designing for Difference.” Differences: A Journal of Feminist Cultural Studies 25.1 (2014).

Bowker, Geoffrey C., and Susan Leigh Star. Sorting Things Out. (Cambridge, MA: MIT Press, 1999), 297.

Gold. “The Digital Humanities Moment,” xiii, emphasis added.

Bonn, Maria, and Jonathan McGlone. “New Feature: Article Annotation with Hypothes.is.” The Journal of Electronic Publishing 17.2 (2014): n. pag.

Whaley, Dan. “An Open Letter to Marc Andreessen and Rap Genius.” Hypothes.is. 3 October 2012. n. pag.

Blanchot, Maurice, and Susan Hanson. “Everyday Speech.” Yale French Studies 73 (1987): 15.

Latour, Bruno. Science in Action: How to Follow Scientists and Engineers Through Society. (Cambridge, MA: Harvard University Press, 1987), 2-3.

Schuman, Rebecca. “Revise and Resubmit!” Slate. 15 July 2014. n. pag.

Chun, Wendy, and Lisa Marie Rhody. “Working the Digital Humanities: Uncovering Shadows between the Dark and the Light.” Differences: A Journal of Feminist Cultural Studies 25.1 (2014): 21.