Measuring Openness and Evaluating Digital Academic Publishing Models: Not Quite the Same Business


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 3.0 License. Please contact mpub-help@umich.edu to use this work in a way not covered by the license.

For more information, read Michigan Publishing's access and usage policy.

This paper was refereed by the Journal of Electronic Publishing’s peer reviewers.

1. Introduction

In this article we raise a problem, and we offer two practical contributions to its solution. The problem is that academic communities interested in digital publishing lack adequate tools to help them choose a publishing model that suits their needs. We believe that an excessive focus on Open Access (OA) has obscured some important issues; moreover, an exclusive emphasis on increasing openness has contributed to an agenda and to policies with clear practical shortcomings. We believe that academic communities have different needs and priorities; therefore there cannot be a single ranking of publishing models that fits all communities and rests on only one criterion or value. We thus believe that two things are needed. First, communities need help in working out what they want from their digital publications. Their needs and desiderata should be made explicit and their relative importance estimated. This exercise leads to the formulation and ordering of their objectives. Second, available publishing models should be assessed against these objectives, so that one that satisfies them well can be chosen. Accordingly, we have developed a framework that assists communities through these two steps. The framework can be used informally, as a guide to collecting and systematically organizing the information needed to make an informed choice of publishing model. To this end it maps the values that should be weighed and the technical features that embed them.

Building on our framework, we also offer a method to produce ordinal and cardinal scores of publishing models. When these techniques are applied, the framework becomes a formal decision–making tool.

Finally, the framework stresses that, while the OA movement tackles important issues in digital publishing, it cannot incorporate the whole range of values and interests at the core of academic publishing. The framework therefore suggests a broader agenda, relevant to making better policy decisions on academic publishing and OA.

2. Our experience with Open Access

Our engagement with OA originates in our experience of working within the Agora project.[1] What caught our attention and prompted us to inquire into the reasons driving OA was the experience of the Nordic Wittgenstein Review (NWR), a new journal launched by the Nordic Wittgenstein Society under the auspices of the Agora project.[2] The focus of our project was to explore new business models for public–private partnerships in philosophical publishing. The journal adopted a delayed dual–mode (print and electronic) model, consisting of a subscription–based print version and a free online version released after a three–month embargo. The copyright license adopted for published material was CC–BY–NC–SA, which requires attribution to the author, forbids commercial use, and requires that any derivative work be distributed under the same license as the original.[3] The delayed dual–mode model and the license type were chosen in an attempt to meet the needs and preferences of the communities that the journal addresses (Wittgenstein scholars and analytical philosophers) while taking advantage of a partnership with a small publisher (Ontos Verlag).[4] Furthermore, both the publishing model and the license remove considerable barriers compared to the ‘traditional’ mode of publication for academic journals. Free availability on the internet three months after print publication, together with the right to use, copy, store, reprint, circulate, build on, etc., certainly represents a major increase in openness over this benchmark.
Yet both the delayed dual–mode model and the license type caused problems with the institutions that are supposed to assist and promote OA: the Directory of Open Access Journals (DOAJ) refused to index the NWR because of the delay to OA, while the Open Access Scholarly Publishers Association (OASPA) denied membership to the journal on the grounds that it uses a license more restrictive than the minimal CC–BY.[5] These refusals obviously affect the journal negatively: it loses visibility and access to useful services. We felt that these closures (barriers, if we may call them so) suffered by the NWR were a symptom that something was wrong and in need of further analysis.

3. From OA to a publishing values analysis

Digital technologies offer many interesting opportunities in scientific and scholarly publishing and are currently driving important changes in publishing culture and practices. So far the discourse around digital academic publishing has been dominated by the theme of OA.[6] The OA agenda no doubt addresses some fundamental issues associated with the new opportunities offered by digital media. While we acknowledge the importance of the issues tackled by the OA movement, we believe that it is wrong to frame the choices faced by journal promoters exclusively in terms of OA. There are important opportunities in digital publishing that are not captured by the ideas of open access and open use.[7] For instance, journals published in digital format only (i.e. with no print version) make it possible to publish a greater number of articles and to publish them much more quickly. Since long waiting times between acceptance and publication are a problem for authors, this is undoubtedly an important possibility afforded by digital publishing models.

Furthermore, there is a regrettable tendency to adopt a simplistic opposition between ‘open’ and ‘closed’ and to equate ‘open’ with ‘good’ and ‘closed’ with ‘bad’. A moment of reflection on the concept of barrier (i.e. what the quest for openness aims to remove) is enough to show why that value judgment is unwarranted. Barriers as such are neither good nor bad. Barriers protect and exclude. The desirability or justifiability of barriers depends on who or what they protect, on who or what they exclude, and on the conditions required for lifting them. Unfortunately, this complexity has been lost in most visions of OA. An assumption that barriers are hindrances and limitations of freedom is often at work, but it is not discussed explicitly. Symmetrically, the idea that openness is good and progressive is assumed just as uncritically. We will see that some barriers are desirable and justifiable, and hence the evaluative opposition between open and closed is misleading.[8] Nevertheless, advocates of OA are right in claiming that there are barriers to the circulation and use of knowledge that do not serve any value and that these can and should now be removed. But trying to remove these specific and unjustified barriers is different from a vague attempt to maximize openness.[9]

Another problem with the OA movement is that definitions of OA are not merely theoretical contributions; they are features of a practical agenda, and in fact they create reality. Definitions of OA shape two types of reality: 1) actual OA publications, which are often designed so as to conform to a given definition, and 2) services and infrastructures for OA, which typically operationalize a given definition. As mentioned in the previous section, we have learned through our experience that the latter can generate barriers and exclude publication models that serve some academic communities and their public well.

We have thus come to the conclusion that, instead of thinking in terms of openness, we need to map the values and interests that can be promoted or thwarted by the adoption of different publishing models. This value analysis can be useful in different contexts. For instance, we think it should help current discussions about the advisability of OA mandates by placing the issue within the broader context of academic publishing and its values. It can also provide the background for normative arguments and policy recommendations. For instance, it helps explain why the influential Budapest OA definition is too demanding and blind to important considerations, as we discovered in our experience with the Agora project. To give another example, our value analysis helps to explain the discontent, widespread in the humanities, with Article Processing Charges, for it shows that they go against what we call fair access to publishing.[10]

However, in this paper we consider the problem mainly from the point of view of the group of people involved in launching a new journal or in moving an existing journal to a different publishing model. We will call the group of people involved in founding or steering the journal the promoters. We hope to offer promoters a framework that helps them make their publishing objectives explicit and assess which available publishing models satisfy them. We aim to help journal promoters find the right balance between the various desiderata pursued by academic publishing, some of which are overlooked in the OA discourse.

4. The choice faced by journal promoters

Typically the promoters are a group of scholars or scientists, but they may include other stakeholders. Several disciplines have journals that are not addressed to an exclusively academic audience, for instance engineering, medicine, business, planning and architecture, organization theory and public administration science. Representatives of the professions that access and use these kinds of knowledge may very well be among the promoters of academic journals. We do not include in our definition of promoters those who have an exclusively economic interest in the profit that the journal may generate. This qualification is meant to include the interests of learned societies, which also have an interest in generating some revenue, while excluding the interests of so–called predatory publishers and of publishers that see journals only in terms of profit.[11]

Promoters may have many different reasons for wanting a new journal or a new publication model. Promoters of a new journal may want to fill a gap in the existing range of journals and launch one that serves a new discipline or field of study. Or they may simply want to provide another publication option to a scholarly community that is growing or that does not currently find enough publication outlets. But they may also be interested in journals that meet the needs of a broader audience of professionals or educated readers. Promoters of a new publication model may be trying to save a journal that is struggling to survive, or they may want to offer better service to their authors and readers. Whatever their reasons, all promoters need to face three key questions:

| How can we find the financial resources for running the journal regularly? | How can we gain the support of authors, reviewers, readers etc.? | How can we know which publishing options best suit our objectives? |
|---|---|---|
| ⬇ | ⬇ | ⬇ |
| **Financial sustainability** | **Community sustainability** | **Worthiness**[12] |

In order for a journal to be successful, all three questions need to find a satisfying answer. That means that they all point to conditions that have to be satisfied for the journal to be successful—they are necessary conditions, but none is in itself sufficient. The task is therefore to help promoters to single out the publishing model(s) that meet all three conditions (stated in bold at the bottom of the table). Of course it may well be the case that no available publishing model meets this objective. When this is the case, the promoters’ plan needs either to be abandoned or to be revised in more realistic terms.

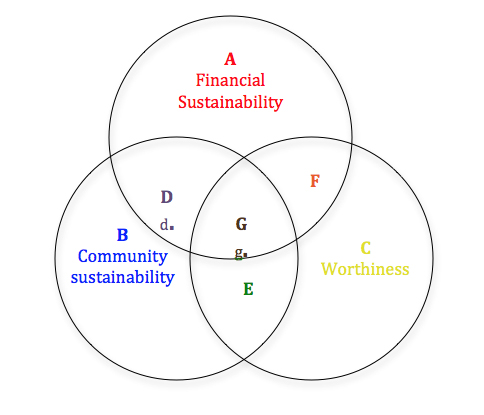

The following diagram illustrates the set of necessary conditions and the way they may overlap.

All the subsets in the figure (D, E, F and G) are also part of the larger sets. So for instance every member d of D is at the same time a member of A and B, and every member g of G is at the same time a member of A, B, C, D, E and F.

Table 2 illustrates the point we have made above: we want to help promoters find out which publishing models (if any) populate set G. Set G includes the publishing options that satisfy the conditions of financial sustainability, community sustainability and worthiness. If G has at least one member, the effort of the promoters has a point; if it is empty, their current wishes and needs cannot be met. If more than one model populates G, then the promoters have an interest in understanding in which respects these models differ and whether any of them is significantly better than the others.
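The relations among the sets described above can be sketched with ordinary Python set operations. This is a minimal illustration under stated assumptions: we read A as the financially sustainable options, B as the community sustainable options and C as the worthy options, with D = A ∩ B, E = B ∩ C, F = A ∩ C and G = A ∩ B ∩ C; that reading is inferred from the text, and the model names X, Y, Z and the contents of each set are hypothetical.

```python
# Minimal sketch: the three necessary conditions as Python sets.
# Model names X, Y, Z and the set contents are hypothetical.
A = {"X", "Y", "Z"}   # financially sustainable options (assumed)
B = {"Y", "Z"}        # community sustainable options (assumed)
C = {"X", "Z"}        # worthy options (assumed)

# Overlaps, following our reading of the figure.
D = A & B             # financially and community sustainable
E = B & C             # community sustainable and worthy
F = A & C             # financially sustainable and worthy
G = A & B & C         # options meeting all three necessary conditions

if G:
    print("Eligible publishing models:", sorted(G))
else:
    print("No available model meets all three conditions.")
```

With these assumed contents only Z survives; an empty G would correspond to the case in which the promoters' plan has to be revised or abandoned.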

5. Defining the aim of the framework for evaluating publishing models

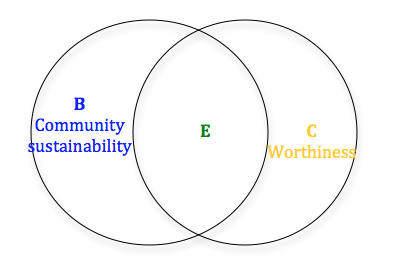

Our framework will focus on two of the three necessary conditions that we have highlighted, namely community sustainability and worthiness. We assume that the tools and know–how to make a financial sustainability assessment already exist, and we therefore focus on the less familiar tasks of assessing community sustainability and worthiness. We will treat the two issues together, because many factors that contribute to the satisfaction of one condition contribute to the satisfaction of the other as well. This happens because the promoters are typically a subset of the community whose support is needed, and hence there is a substantial overlap in purposes and needs.

We can define as key stakeholders those groups whose support is needed for the journal to exist: for instance authors, reviewers, editors, readers, funders etc. If the journal does not consider and satisfy the needs, interests and values of key stakeholders, it will lose their support and be unable to survive. This is the idea captured by the concept of community sustainability. By contrast, we define worthiness as the ability to satisfy the promoters’ objectives (is the journal bringing enough added value to the current publishing situation to be worth the effort of promoting it?).[13] On closer inspection, promoters will typically also be authors, reviewers, editors and readers of the journal, so they are not an altogether different group of people. To be sure, as promoters they play a more specific role and can therefore be analytically distinguished, but they continue to share many needs, aspirations and values with the members of the scholarly community that they serve.

In short, we believe that there is enough convergence between the community’s needs and the promoters’ objectives to justify treating them together in constructing our list of desiderata that publishing models should satisfy.[14]

6. Mapping the values to be built into the framework

| Type of value | Value | Specification |
|---|---|---|
| Core values | Advancement of knowledge | Scholarly virtues (accuracy, originality, intellectual honesty etc.) |
| | | Efficiency (in the production and dissemination of knowledge) |
| | Fairness | Fair equality of opportunity |
| | | Desert rewarded |
| Additional values (whose relevance and applicability need to be assessed for each research domain) | Philanthropic values | Human development |
| | | Human well–being |
| | Democratic values | Democratic participation |
| | | Democratic accountability |
| | | Democratic empowerment |

There are two main ways of mapping the values involved in academic publishing: 1) a functional analysis of its purposes, and 2) a stakeholder analysis. Here we focus mainly on the former, because it proved to be the one that points to the core values of academic publishing. Stakeholder analysis has produced important positive results when focused on scientists and scholars, and on public opinion and humanity. When directed at other stakeholders (namely publishers, librarians and funders), it has not revealed any further value (see Appendix).

6.1 Functional analysis

Academic publishing nowadays performs two different functions:

- it promotes the advancement and sharing of knowledge;[15]

- it provides the information necessary to assess the performance and merit of researchers.

Arguably the second function is subsidiary, because academic publishing provides a reasonably reliable indication of the quality of researchers only if it is actually organized around the purpose of promoting knowledge efficiently; it could not perform this function if, say, its aim were to make a profit or to boost the self–esteem of all researchers. But even if we acknowledge that one function is conditional on the successful performance of the other, it is nonetheless very important not to dismiss the second function and its implications.

The first function implies two values: knowledge and efficiency. The value of knowledge is too well established to require a justification here.[16] Efficiency is necessary in order to achieve as much knowledge as possible and to disseminate it as widely as possible. If we accept that knowledge is valuable and that the more of it the better, then efficiency in its pursuit and circulation is an effective way of having more of the desired goal. The assumption that having more knowledge is better need not hold for individuals (it is perfectly plausible to believe that pursuing knowledge beyond a certain point produces an unbalanced personality); it needs to hold only from a collective point of view: the advancement of learning provides a pool from which people can draw if and when they have a need or desire for knowledge.

There is a second argument in support of efficiency in the advancement of knowledge. If we pursue knowledge efficiently we can minimize the opportunity costs of our quest for knowledge. If we admit that there are other valuable things that can be pursued and that research needs resources, then efficiency is always a way of minimizing opportunity costs, i.e. the things that we have to give up or sacrifice in order to have something we have chosen (in this case knowledge). This expresses not only a commitment to knowledge but also a sense of social responsibility and of respect for other values. Indeed, the case for efficiency becomes stronger if we see it as a rejection of wastefulness out of respect for valuable things.

Although knowledge and efficiency are two analytically distinct values, for our present purpose we can lump them together under the label of efficiency in the generation and sharing of knowledge.

Now we need to turn to the second function that we have attributed to academic publishing: providing an indicator of the productivity of scholars and scientists, and hence of their professional achievements (i.e. merit). Academic careers are not open to everyone; rather, they can be considered ‘merit’ careers, i.e. careers in limited supply and reserved for those able to contribute to excellence. Accordingly, there are restrictions on access and advancement, as well as hierarchical structures that determine not only the success of researchers’ careers, but also their chances of obtaining the resources necessary to carry out first–class research and of disseminating their findings. Let us assume that, in light of the value of efficiency and of some plausible assumptions about limited resources, competition and hierarchy are necessary. This leads to a situation in which scientists and scholars are in a relation of both collaboration and competition. This creates a tension, which can be handled and reconciled (at least to a degree that guarantees a reasonably stable equilibrium) only through the operation of another important value: fairness. Fairness here has to be understood both as fair equality of opportunity and as proportionality between merit and rewards.[17] These are the conditions that make competition acceptable and that allow collaboration among scientists and scholars who are also in a competitive relationship. As in sport, fair play is necessary in science and scholarship. Therefore, on account of its fundamental role as an indicator of researchers’ merit and productivity, academic publishing needs to incorporate and express the value of fairness. Without fairness in access to publishing opportunities and fairness in evaluating publications, publishing could not be a reliable indicator of desert, and it would hence undermine the reliability of the whole mechanism of recruitment and career advancement. This in turn would have an enormous detrimental effect on efficiency in the production of knowledge. So here we have a first argument asserting the value of fairness as a necessary means to pursue knowledge efficiently.

While academic publishing is an activity with its own goals and functions, it is not exempt from more general moral principles—whether these are universal or relative to a specific society does not matter for our present purpose. Such broader ethical notions provide the boundaries within which the ethical norms of any particular professional activity (like academic research and publishing) have to operate. These wider social norms include a principle of fairness, which is often derived from a basic value—the dignity of the individual—and from the principle of respect for persons—which is often assumed as a normative axiom. When this and other ethical constraints are satisfied, the pursuit of the goals of the activity and of the goods associated with success in such a pursuit are perfectly legitimate individual interests. We can thus construct the following argument starting from the legitimate interests of researchers and a general principle of fairness.

Normative premises:

- Researchers are entitled to pursue success and recognition in their field.

- Researchers have a legitimate demand that success and recognition are allocated on the basis of actual individual desert.

Factual premise:

- Success and recognition in knowledge production are measured by the quality and quantity of academic publications.

From these premises two conclusions follow:

- the quantity of academic publications ought to be determined by scientific and scholarly criteria alone (namely efficiency in the production of knowledge)—this is equivalent to fair equality of opportunities;

- the quality of academic publications should be measured as accurately as possible—this is a condition for achieving proportionality between merit and rewards.

The principles of fairness justified as instrumental in the efficient pursuit of knowledge are thus confirmed by the argument derived from the legitimate individual interests of researchers.

6.2 Stakeholder analysis

Let us now look at the stakeholders involved in the process of academic publishing. We can first look at them as a broad group: scientists and scholars. Taken collectively we can assume that they have (or at least that they should have) a commitment to the value of knowledge and of efficiency in its pursuit. This commitment brings with it some interesting implications: namely the further commitment to the ethos and values required by the effective performance of their tasks. Being devoted to knowledge and truth means to endorse values like accuracy, sincerity, honesty, trustworthiness, originality and a certain degree of collaborative spirit. Such values (or more detailed lists of discipline–specific values) constitute the scholarly ethos and are embodied in scholarly virtues.

Let us now look at the impact of research on the larger public, or, if you prefer, on humankind. Two kinds of arguments seem to us to have some relevance and validity. The first claims that access to knowledge promotes human development.[18] The argument has two versions: one considers the impact of knowledge on societies and the other its impact on individuals. The first is based on the assumption that knowledge has great instrumental value and that its growth has positive effects on society: it promotes economic and social innovation, good health, education, intelligence, creativity, productivity and competitiveness. In short, knowledge promotes the growth of society.[19] The second version maintains that knowledge enriches the lives of individuals and makes them fuller and more enjoyable.[20]

The second argument is that knowledge, like information, is necessary in order to empower a democratic citizenship to assess government and public policies, as well as in order to enable a full development of public discussion and public deliberation.[21]

We believe that these arguments have some plausibility and force. It would be hard to understand why societies invest so much in research if such claims were ungrounded. However, we also think that it is very important to consider these values in relation to specific kinds of knowledge, for there can be little doubt that different kinds of knowledge have quite different impacts on human well–being and on democratic life. Immunology is more likely than glottology to have an impact on human well–being, and the social sciences are more likely than crystallography to have an impact on democratic life. Furthermore, while we fully accept that knowledge is valuable from the point of view of human development and of democracy, we also believe that it is important to realize that the impact of knowledge depends on causal chains that can be rather long and complex and that often produce their results only in the medium or long term. Both qualifications are meant as an invitation to assess the marginal contribution of different disciplines realistically and in view of the broader context that mediates their translation into results effectively available to the public that can benefit from them. So we recommend that the magnitude, the conditions and the time–scale of the impact of various types of knowledge on human development or on democracy be carefully considered in order to achieve a sensible weighing of these values. In order to highlight the need for such a contingent evaluation of relevance, in table 4 we have put philanthropic and democratic values in grey.

It is now time to offer our readers a practical tool. On the basis of our ideas of community sustainability and worthiness, and of our analysis of the values relevant to academic publishing, we have constructed a table of technical desiderata. These desiderata are the features of a publishing model relevant to achieving both community sustainability and worthiness. They will not all be relevant in every case, but they represent a checklist of the characteristics that promoters need to consider. Their relative importance and weight will depend on the practices and needs of the research area covered by the journal, and on the objectives of the promoters. We therefore cannot offer any general ranking: this is a task that needs to be performed in context, with knowledge of the particular realities and aspirations of the community and of the promoters.

These desiderata are the specific features through which the general values indicated above can be implemented and become operational. They thus represent the point where abstract values can be translated into realities. Moreover, they are functional parts of a working publishing model, and as such they also need to satisfy requirements of functionality and viability that are determined by the working of the publishing process as a whole. In short, they are the points where aspirations (desiderata) become functional requirements (technical specifications). We believe that it is a fairly comprehensive list; however, we invite users to modify it (by expanding or shrinking it) according to the circumstances under which they operate. It is offered more as a useful example than as a rigid grid.

| Publishing model | TD–A. Quality control barriers | TD–B. OA to publications | TD–C. Fair access to publishing: absence of financial barriers | TD–C: absence of quality–independent caps to publication | TD–C: acceptance–publication gap | TD–D. Visibility: indexing | TD–D: archiving stability | TD–E. Content usability | TD–F. Authorship and work protection | TD–G. Adequacy and specificity of evaluation metrics |
|---|---|---|---|---|---|---|---|---|---|---|
| X | | | | | | | | | | |
| Y | | | | | | | | | | |
| Z | | | | | | | | | | |

Goals and values expressed by the various parameters:

TD–A: Goal: selecting what is worth publishing. Values: efficiency (reduction of noise), scholarly values

TD–B: Goal: promoting the broadest circulation of knowledge. Values: efficiency, fairness, philanthropic values, democratic values

TD–C: Goal: ensuring that everything worth publishing is published promptly. Values: fairness, efficiency (quick impact)

TD–D: Goal: ensuring that the target audience can reach the publications it needs. Values: efficiency, fairness

TD–E: Goal: promoting the further production and impact of knowledge. Values: efficiency, philanthropic values

TD–F: Goal: preventing abuses and undeserved rewards; protecting the integrity of works and ensuring that their meaning is not distorted. Values: scholarly virtues, fairness

TD–G: Goal: enabling an accurate and article–specific evaluation of quality and impact. Values: fairness (more accurate evaluation of merit), efficiency (more precise selection of most valuable contributions)
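Promoters who want to keep this checklist in machine-readable form could encode the goals and values above as a simple mapping, for example to list every desideratum that bears on a value their community weighs heavily. The sketch below is a hypothetical representation of our own; the dictionary and function names are illustrative, and only the value assignments come from the list above.

```python
# Hypothetical machine-readable encoding of the values listed for TD-A to TD-G.
DESIDERATA_VALUES = {
    "TD-A": {"efficiency", "scholarly virtues"},
    "TD-B": {"efficiency", "fairness", "philanthropic values", "democratic values"},
    "TD-C": {"fairness", "efficiency"},
    "TD-D": {"efficiency", "fairness"},
    "TD-E": {"efficiency", "philanthropic values"},
    "TD-F": {"scholarly virtues", "fairness"},
    "TD-G": {"fairness", "efficiency"},
}


def desiderata_serving(value):
    """Return, in sorted order, the desiderata whose listed values include `value`."""
    return sorted(td for td, values in DESIDERATA_VALUES.items() if value in values)


print(desiderata_serving("philanthropic values"))
```

Querying a value whose weight has been assessed as low for a given research domain (for instance democratic values) shows which desiderata would lose part of their justification.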

Notice that the focus on OA is directly concerned only with B and E, although A is often discussed as well in response to worries about the compatibility between OA and the peer review process.

Notice that parameters E and F are the only two in a straightforwardly antagonistic relation: one can be promoted only by limiting the other, so that they are inversely related. However, that does not mean that the use rights and the copyright protections that truly matter are incompatible. It is therefore advisable to consider E and F together with the aim of finding the best balance, since we cannot improve the score of one without worsening that of the other (but we may well find a compromise that allows the uses we deem important while protecting authors and their work in the respects that really count).
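Because E and F trade off against each other, one way to narrow the search for the best balance is to discard every option that is dominated, i.e. matched or beaten on both parameters by some other option (a Pareto-frontier filter). This is a technique we suggest, not part of the framework as stated; the option names and the 0 to 5 scores are hypothetical illustrations, not assessments of real licenses.

```python
# Hypothetical licensing options scored 0-5 on (TD-E content usability, TD-F protection).
OPTIONS = {
    "opt1": (5, 1),
    "opt2": (4, 3),
    "opt3": (3, 3),
    "opt4": (2, 4),
    "opt5": (1, 5),
    "opt6": (1, 2),
}


def dominates(a, b):
    """True if score pair `a` is at least as good as `b` on both parameters and better on one."""
    return a[0] >= b[0] and a[1] >= b[1] and a != b


def pareto_front(options):
    """Keep only the options that no other option dominates."""
    return sorted(
        name for name, score in options.items()
        if not any(dominates(other, score) for other in options.values())
    )


print(pareto_front(OPTIONS))
```

The frontier does not pick a winner: choosing among its members still requires the promoters to decide which uses and which protections really count.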

7. How to use the framework

The table of technical desiderata is the keystone of our framework and can be used in many different ways. We will illustrate some possible uses of the table, from the simplest and most informal to the most formalized and sophisticated. Here again we expect promoters to have quite different needs and opportunities, as well as quite different resources and time. Promoters can therefore work out the most appropriate use of the framework according to how much choice they have, how much is at stake, and how much time and resources they are prepared to invest in selecting a publishing model.

7.1 The framework as an informal checklist

The simplest and most informal use of the framework consists in listing the publishing models available to the journal and considering how they fare on the various parameters listed in table 5. Going through this exercise may be enough to make explicit all the relevant differences between the available options and to appreciate their strengths and weaknesses. It certainly helps to show the trade–offs between desiderata that are involved in choosing one model over the alternatives. For small journals with limited options, this may be enough to make an informed choice. In these cases it is advisable to run a financial sustainability check before starting this exercise, in order to narrow down the options to evaluate.
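Even in this informal use, writing the comparison down can make the trade–offs explicit. A minimal sketch, assuming promoters rate each model on a coarse ordinal scale (0 = poor, 1 = fair, 2 = good) for a few parameters of table 5; the models X and Y, the ratings and the function name are all hypothetical.

```python
# Hypothetical ordinal ratings (0 = poor, 1 = fair, 2 = good) on a few parameters of table 5.
RATINGS = {
    "X": {"TD-A": 2, "TD-B": 0, "TD-C": 1, "TD-D": 2},
    "Y": {"TD-A": 2, "TD-B": 2, "TD-C": 0, "TD-D": 1},
}


def trade_offs(ratings, first, second):
    """Return (parameters where `first` is better, parameters where `second` is better)."""
    params = ratings[first]
    first_better = [p for p in params if ratings[first][p] > ratings[second][p]]
    second_better = [p for p in params if ratings[second][p] > ratings[first][p]]
    return first_better, second_better


x_better, y_better = trade_offs(RATINGS, "X", "Y")
print("X is stronger on:", x_better)  # ties (here TD-A) appear in neither list
print("Y is stronger on:", y_better)
```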

7.2 The framework as a guide towards a set of thresholds

A more systematic step towards a decision than the informal approach suggested above consists in looking at the framework and singling out the parameters that are particularly relevant for the promoters. It is quite likely that the reasons for launching a journal or for changing its publishing model find expression in some of the parameters in table 5. Suppose, then, that the promoters single out three or four parameters as crucial for achieving their objectives; they then have to establish, for each of them, the minimal level that a publishing model must satisfy in order to be an eligible option. The threshold can be described in natural language or through its ‘natural’ metric. By natural metrics we mean units of measure such as money for financial barriers, months for acceptance–publication gaps, the number of years an archive can guarantee to host a publication, etc. Examples of descriptions in natural language are ‘we do not want anything less than blind peer review by two referees for each article’ and ‘articles should be freely available on the journal website no later than one year from publication’.

Once the relevant thresholds have been set, promoters should make a list of the available publishing models and check which of them, if any, pass the thresholds. In terms of table 2, this means determining set C (worthy options). Once this is done, promoters can move on to check which worthy options pass a financial sustainability test and a community sustainability test. This last test poses more problems than the worthiness test: the information necessary to establish worthiness is available to the promoters (it is information about their objectives, which they must know or be able to work out), while information about key stakeholders is not immediately available to them. However, as we noted above, the promoters are typically a subset of the key stakeholders. So the simplest solution would be for them to consider themselves a representative sample of the key stakeholders and work out the relevant information through reflection, so as to establish community acceptance thresholds for the parameters in table 5. The most demanding solution would be to carry out a proper survey among key stakeholders. (Of course, between these extremes there is a host of intermediate possibilities, for instance an informal survey among colleagues.)
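As a minimal sketch of the worthiness test, the Python below encodes each threshold in its natural metric and keeps the models that pass all of them, i.e. set C. The parameter names, limits and the three candidate models are hypothetical, chosen only to mirror the natural-language examples above; they are not taken from table 5.

```python
# Hypothetical thresholds in their natural metrics: ("max", x) means the
# model's value must not exceed x; ("min", x) means it must reach at least x.
THRESHOLDS = {
    "apc_eur": ("max", 0),                          # no Article Processing Charge
    "acceptance_to_publication_months": ("max", 6),
    "free_on_website_after_months": ("max", 12),    # free within one year
    "blind_referees_per_article": ("min", 2),       # at least 2 blind referees
}


def passes(model: dict, thresholds: dict) -> bool:
    """Worthiness test: True only if the model meets every threshold."""
    return all(
        model[param] <= limit if kind == "max" else model[param] >= limit
        for param, (kind, limit) in thresholds.items()
    )


# Hypothetical candidate publishing models described in the same metrics.
models = {
    "X": {"apc_eur": 0, "acceptance_to_publication_months": 4,
          "free_on_website_after_months": 0, "blind_referees_per_article": 2},
    "Y": {"apc_eur": 1500, "acceptance_to_publication_months": 2,
          "free_on_website_after_months": 0, "blind_referees_per_article": 2},
    "Z": {"apc_eur": 0, "acceptance_to_publication_months": 10,
          "free_on_website_after_months": 12, "blind_referees_per_article": 3},
}

worthy = {name for name, m in models.items() if passes(m, THRESHOLDS)}  # set C
print(sorted(worthy))  # ['X']
```

Y fails on the charge and Z on the publication gap, so only X is a worthy option here; the same `passes` function can be reused later with community acceptance thresholds.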

The procedure illustrated so far has been to work out C (see table 2), then move to determining F or E, and finally G. The order depends on the cost of getting the necessary information. It is advisable always to start with the least demanding tests, so as to save time and effort in case the options (possibly all of them) fail the tests that are more easily carried out. The principle is always to move along the line of least resistance, where resistance is understood as cost broadly construed: not only money but also time and work. When the information needed for establishing thresholds for community sustainability is easily accessible, it is advisable not to split the process but to construct an aggregate table of thresholds that includes both worthiness and community sustainability thresholds. Such a comprehensive table will allow us to determine E (see table 2) if we have not yet performed the financial sustainability analysis; if we have, and have restricted the options accordingly (i.e. if we are working within set A), it will take us straight to G.

To summarize, table 2 will help us decide in which order to proceed and which subset to aim at first, while table 5 will guide us in identifying the population of B, C, D, E, F and G. (Note that we will not need to identify the population of every subset: which subsets we need to identify depends on the order in which we proceed. E.g. if we start by identifying A and then use an aggregate table of thresholds, we move directly to G.)
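The order-of-tests principle can be sketched as a sequence of set filters. In this hypothetical example the membership of the financially sustainable (A), community sustainable (B) and worthy (C) sets, and the cost attached to each test, are invented; the sketch only relies on what the text states, namely that E is the overlap of B and C and that G contains the options passing all three tests.

```python
from typing import Callable

# A test is (name, hypothetical cost, predicate on an option).
Test = tuple[str, float, Callable[[str], bool]]


def eliminate(options: set[str], tests: list[Test]) -> tuple[set[str], list[str]]:
    """Apply the tests cheapest first, stopping once no option survives."""
    remaining = set(options)
    performed: list[str] = []
    for name, _cost, passes_test in sorted(tests, key=lambda t: t[1]):
        if not remaining:  # everything already failed a cheaper test
            break
        remaining = {o for o in remaining if passes_test(o)}
        performed.append(name)
    return remaining, performed


options = {"W", "X", "Y", "Z"}
A = {"X", "Y", "W"}   # financially sustainable (hypothetical)
B = {"X", "Z", "W"}   # community sustainable (hypothetical)
C = {"X", "Y", "Z"}   # worthy (hypothetical)

E = B & C             # worthy and community sustainable
G = A & B & C         # satisfying options

tests = [
    ("community sustainability", 5.0, lambda o: o in B),
    ("worthiness", 1.0, lambda o: o in C),
    ("financial sustainability", 2.0, lambda o: o in A),
]
result, performed = eliminate(options, tests)
print(sorted(result), performed)
```

The order of the tests does not change which options end up in G; it only determines how much work is done before reaching it, and the costlier tests are skipped entirely when nothing survives the cheaper ones.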

This approach can be described as proceeding by eliminating options that fail any of the 3 fundamental criteria of evaluation (those stated in table 1), and it leads us to the set G of satisfying options. In most cases we believe that this is all that is needed and that it can be achieved at a reasonable cost (in terms of time and effort).

7.3 The framework as a guide to parameter–specific ordinal rankings

We believe that ordinal and cardinal rankings may be worth performing only after the set of thresholds has been constructed and G has been found to contain 2 or more publishing models. Here we explore ordinal rankings, which are much simpler than cardinal ones, although they do not guarantee a clear and determinate result.

On the basis of our assumptions, all publishing models in G are considered viable and satisfying options, but it is not clear which one is the best choice. The first step towards making a decision is to rank the parameters of table 5. It is essential to be clear that here we are not interested in an abstract ranking: we need to rank how important it is to improve on each parameter once the threshold has been reached. The exercise of establishing thresholds is based on the assumption that all parameters are important, indeed that reaching the threshold is a necessary condition for either the viability or the desirability of the journal: it is as if, on a 0–1 scale of importance, they all count as 1 until the threshold has been reached.[22] But once the threshold has been reached, it makes sense to ask: how important is it to improve above the required minimum? A second important question must also be considered: do the publishing models in G differ on all parameters or only on some? If there are parameters on which they do not differ, we obviously do not have to rank those parameters, and our task is simplified. Suppose that all our models in G have the same quality control and the same type of licensing, so that there are no differences in TD–A, TD–E, and TD–F. It follows that we need to rank only TD–B, TD–C, TD–D, and TD–G. At this point we need to consider two different questions in ranking them.

- We begin with a factual and contingent question. Remember that we are now concerned only with parameters on which there is variability in G; let us call them VG–parameters. For each VG–parameter we ask: how much variability is there among the publishing models we are considering? That is, we look at how large the difference is between the 2 publishing models that differ most and ask: how significant is this difference? We can use a very simple scale: a) moderately significant; b) fairly significant; and c) very significant.

- We then move to a more abstract and subjective question. For each VG–parameter we ask: how important is it for us? Here again we can use a very simple scale: a) of limited importance; b) fairly important; and c) very important.

Now let us give a numerical value to a), b), and c) according to the following table:

Table 6: scores for weighing parameters

| Score | 1 (range of variability) | 2 (value) |
|---|---|---|
| a) | 0.33 | 0.33 |
| b) | 0.66 | 0.66 |
| c) | 1 | 1 |

Each VG–parameter thus has two scores, and multiplying them gives its weight. For instance, suppose that the VG–parameters are TD–B, TD–C, and TD–D and that we obtain the following results (where the first letter indicates the variability score and the second the value score): TD–B (b, b) = 0.435; TD–C (c, b) = 0.66; and TD–D (b, a) = 0.217.

So our ranking will be: 1st TD–C, 2nd TD–B, and 3rd TD–D. (Notice that we have not only a ranking but also a numerical interval that gives us an idea of how much the parameters differ in importance. Having used a very simple scale with only 3 possible scores, we capture only differences of a certain magnitude; if one wants a finer–grained measure of the interval, one needs a more finely graded scale of scores.)[23]
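The weighting step can be written out directly. This sketch assumes only the scores of table 6 and the example ratings above; the parameter labels use plain hyphens. The exact products are 0.4356, 0.66 and 0.2178, which the text truncates to three decimals.

```python
# Table 6: the same three-point scale is used for variability and for value.
SCORE = {"a": 0.33, "b": 0.66, "c": 1.0}

# Example ratings from the text: (variability letter, value letter).
ratings = {"TD-B": ("b", "b"), "TD-C": ("c", "b"), "TD-D": ("b", "a")}

# Importance coefficient = variability score x value score.
weights = {p: SCORE[var] * SCORE[val] for p, (var, val) in ratings.items()}
ranking = sorted(weights, key=weights.get, reverse=True)
print(ranking)  # ['TD-C', 'TD-B', 'TD-D']
```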

Now we need to rank how the publishing models (let us call them X, Y and Z) perform on each VG–parameter, in our example TD–B, TD–C, and TD–D. So suppose that we rank them as follows:

Table 7a

| | TD–C | TD–B | TD–D |
|---|---|---|---|
| 1st | Z | X | Y |
| 2nd | X | Z | X |
| 3rd | Y | Y | Z |

This table helps us see how well the publishing models perform relative to each other, but there is no guarantee that it will provide a clear answer to our question: which of the models in G is actually the best for us?

In our example it shows that one of the 3 is the least preferable (Y), but the question remains open whether Z or X is best.

The most interesting question is whether the result of this ranking procedure is worth the effort of producing it. First, let us not be too influenced by our example, and remember that we might have obtained either a clearer result (e.g. if X and Z swapped positions in TD–C, see table 7b below) or an even more ambiguous one (if Y had been 2nd in TD–C and TD–B, see table 7c below). How helpful would such results be?

Table 7b

| | TD–C | TD–B | TD–D |
|---|---|---|---|
| 1st | X | X | Y |
| 2nd | Z | Z | X |
| 3rd | Y | Y | Z |

Table 7c

| | TD–C | TD–B | TD–D |
|---|---|---|---|
| 1st | Z | X | Y |
| 2nd | Y | Y | X |
| 3rd | X | Z | Z |

In every case they give us some interesting information: 7a tells us that Y should not be our choice and that X and Z are roughly equivalent. 7b gives us a winner (X), while 7c tells us that overall our 3 options are roughly equally desirable: no option will be significantly worse overall.
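The text reads tables 7a–7c by judgment rather than by a formula. Purely as one illustrative way to make that reading explicit, the sketch below swaps in a weighted Borda count (a standard positional voting rule, not the procedure proposed here): each model gets 2, 1 or 0 points for 1st, 2nd or 3rd place on a parameter, multiplied by that parameter's importance coefficient from the example above.

```python
# Importance coefficients from the example above (TD-C > TD-B > TD-D).
WEIGHTS = {"TD-C": 0.66, "TD-B": 0.435, "TD-D": 0.217}

# Tables 7a-7c: for each VG-parameter, the models listed from 1st to 3rd.
TABLES = {
    "7a": {"TD-C": ["Z", "X", "Y"], "TD-B": ["X", "Z", "Y"], "TD-D": ["Y", "X", "Z"]},
    "7b": {"TD-C": ["X", "Z", "Y"], "TD-B": ["X", "Z", "Y"], "TD-D": ["Y", "X", "Z"]},
    "7c": {"TD-C": ["Z", "Y", "X"], "TD-B": ["X", "Y", "Z"], "TD-D": ["Y", "X", "Z"]},
}


def weighted_borda(table, weights):
    """Weighted Borda count: n-1 points for 1st place down to 0 for last,
    multiplied by the parameter's weight and summed per model."""
    totals = {}
    for param, order in table.items():
        n = len(order)
        for position, model in enumerate(order):
            points = n - 1 - position
            totals[model] = totals.get(model, 0.0) + weights[param] * points
    return totals


results = {name: weighted_borda(t, WEIGHTS) for name, t in TABLES.items()}
for name, totals in results.items():
    print(name, {m: round(s, 3) for m, s in sorted(totals.items())})
```

Under this aggregation X and Z come out almost level in 7a (about 1.75 each) with Y far behind at about 0.43; X is clearly ahead in 7b (about 2.41); and in 7c the three models fall within a much narrower band (about 1.09 to 1.53), which matches the qualitative reading given above.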

That said, we think that whether it is worth constructing an ordinal ranking table depends very much on two considerations. First, we should consider whether, after having constructed the thresholds (as described in section 7.2), the promoters have strongly conflicting preferences about the models in G. Second, we should consider how much effort it would require for them to construct the ranking. The latter depends on how many options there are in G, on how many parameters they differ on, and on how large a sample of the promoters is needed to represent their preferences fairly accurately. It may also be helpful to keep in mind that the ranking exercise may provide enough information even before it is completed. For instance, suppose that during the first stage, that of ranking the parameters, we realize that the range of variability is only moderately significant for all the parameters (or for all but one, which has low importance). Then we may conclude that not much is at stake in our choice, that it is not worthwhile to proceed any further, and make a random decision instead. Or suppose that we realize that only one parameter has a high score (say 1 or 0.66) and all the others have very low scores (0.217 at most). Then we may decide that it is enough to make the ranking for that one high–scoring parameter.
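The two shortcuts just described can be turned into a rough decision helper. The cutoffs are our reading of the text, not rules stated by it: variability score a counts as "moderately significant", value score a as "low importance", 0.66 or more as a high weight, and 0.66 × 0.33 as the "0.217 at most" ceiling. The function name and its returned labels are hypothetical.

```python
SCORE = {"a": 0.33, "b": 0.66, "c": 1.0}   # table 6
HIGH = 0.66                                # "a high score (say 1 or 0.66)"
LOW = SCORE["b"] * SCORE["a"]              # "0.217 at most" (0.2178 unrounded)


def ranking_shortcut(ratings):
    """ratings: parameter -> (variability letter, value letter).

    Returns a hypothetical label for how far to take the ranking exercise,
    together with the parameters that still need ranking.
    """
    weights = {p: SCORE[var] * SCORE[val] for p, (var, val) in ratings.items()}
    # Parameters whose variability is more than moderately significant.
    varying = [p for p, (var, _) in ratings.items() if var != "a"]
    if not varying or (len(varying) == 1 and ratings[varying[0]][1] == "a"):
        return "random choice", []
    high = [p for p, w in weights.items() if w >= HIGH]
    rest = [w for p, w in weights.items() if p not in high]
    if len(high) == 1 and all(w <= LOW + 1e-9 for w in rest):
        return "rank one parameter", high
    return "full ranking", sorted(weights, key=weights.get, reverse=True)


example = {"TD-B": ("b", "b"), "TD-C": ("c", "b"), "TD-D": ("b", "a")}
print(ranking_shortcut(example))  # ('full ranking', ['TD-C', 'TD-B', 'TD-D'])
```

With the example ratings of this section neither shortcut applies, because TD–B (0.435) is above the low ceiling, so the full ranking is still needed.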

In short, when promoters have several satisfying options, but they feel that the choice is not indifferent and something important may be at stake, it is advisable at least to begin the ranking exercise, although it may not turn out to be necessary to complete it.

7.4 The framework as the basis for weighted parameters and cardinal standardized evaluations

Suppose that we have carried out our ordinal ranking, that we have reached a situation like that illustrated in table 7c, and that there is persistent disagreement among the promoters about the choice of the best publishing model. Is there a way of helping them find out which is the rational choice? To do so we need to help them construct cardinal rather than ordinal evaluations of each model. This means constructing a table in which each model receives a numerical score for every VG–parameter, with the scores adjusted for importance and magnitude of variability. Can this be done in a reasonably simple way? In the rest of this section we describe what seems to us the simplest way to construct reasonably reliable cardinal evaluations. Whether it is simple and reliable enough we leave to readers and promoters to judge. We are not committed to the idea that a formal decision making procedure based on numerical scores is superior to an informal but well–informed choice. The kind of choice we are tackling is not based on physical features that can be measured objectively; it is instead based on attempts to give numerical scores to preferences (or, if you prefer, to values), and this is by no means as precise or as impartial a procedure as we would like. Whether such methods provide any genuine advantage over informal deliberation depends on how acceptable and significant the simplifications and idealizations involved in the process are, and this cannot be decided by a formal procedure, only by an informal evaluation.
In practice, we suggest that if promoters have carried out a parameter–specific ordinal ranking that has not clearly indicated the best option, they need to ask themselves whether they want to make their decision on the basis of the general information provided by the framework (as described in section 7.1) or whether they want to embark on the construction of the cardinal evaluations explained below. We do not believe that it is irrational to opt for the former method.[24]

So let us go back to the problem of constructing cardinal evaluations. Our task is considerably simplified if the models that populate G do not differ on all parameters, i.e. if there are fewer VG–parameters than the seven parameters considered in table 5 (technical desiderata). So we start by constructing a table that includes only the VG–parameters. The other piece of good news is that we have already established minimal thresholds for every parameter. In fact we have established more than one minimal threshold: a worthiness threshold (the threshold to be passed in order to be part of set C in table 2) and a community sustainability threshold (the threshold to be passed in order to be part of set B in table 2). What we need to use now is the threshold to be passed in order to be part of set E (defined by the overlap between B and C). This E threshold is simply the higher, i.e. the more demanding, of the 2 thresholds mentioned. These E thresholds are our bottom lines, and (as in section 7.3 above) we are interested in assessing the significance of improvements above this bottom line.

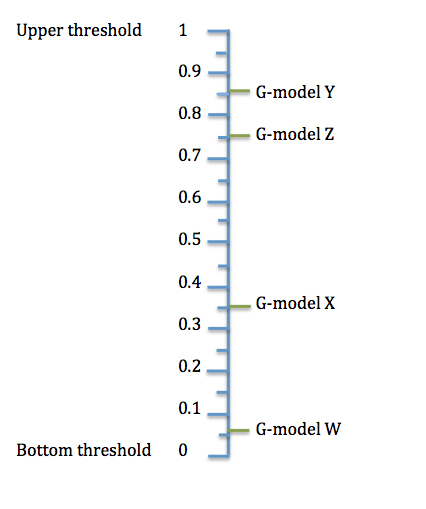

Our next task is to establish an upper threshold for every VG–parameter as well. The upper threshold marks the point at which we are no longer interested in improving on that parameter; in technical terms, it is the point where the utility curve for that parameter becomes flat. For instance, suppose that for both promoters and key stakeholders double double–blind peer review (plus, if needed, a third double–blind peer review) represents as good a quality control as one may wish, and that any further quality control is not perceived to add anything significant. And suppose that full OA to the best version of the article at the time of publication is all that can be asked in terms of OA to publications. Then these would be our upper thresholds for parameters TD–A and TD–B. Clearly it is possible for the lower and the upper threshold to coincide: it is perfectly conceivable that double double–blind peer review and full OA to the best version at the moment of publication are also considered minimum thresholds. However, this will not be the case for VG–parameters, since by definition these are parameters on which there is significant variation above the threshold. Once we have established a lower and an upper threshold that do not coincide, we have determined what we call the range of relevant variability (henceforth RRV). This is what we need to establish for all our VG–parameters. At this point we give a score of 0 to the bottom threshold and a score of 1 to the top threshold.
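For parameters that have a natural metric, one simple way to place a model on the 0–1 RRV scale is linear interpolation between the bottom (E) threshold and the upper threshold. Linearity is an assumption of this sketch, not a claim of the text: the utility curve inside the RRV may well be curved, which is one reason to use the visual analogue scale described next. The helper names and the example figures are hypothetical.

```python
def e_threshold(worthiness: float, community: float, higher_is_better: bool) -> float:
    """Return the more demanding of the worthiness and community thresholds."""
    if higher_is_better:
        return max(worthiness, community)
    return min(worthiness, community)


def rrv_score(value: float, bottom: float, top: float) -> float:
    """Place a value on the 0-1 RRV scale (0 = bottom threshold, 1 = top).

    Assumes (hypothetically) linear utility inside the range and flat utility
    beyond the top threshold, so values past either end are clipped. Works
    whether higher or lower raw values are better, since the sign of
    (top - bottom) encodes the direction.
    """
    if bottom == top:
        raise ValueError("thresholds coincide: no range of relevant variability")
    score = (value - bottom) / (top - bottom)
    return min(max(score, 0.0), 1.0)


# Hypothetical acceptance-to-publication gap in months (lower is better).
bottom = e_threshold(worthiness=9, community=6, higher_is_better=False)  # 6 months
top = 1  # a gap shorter than one month is assumed to add nothing
print(rrv_score(4, bottom, top))  # 0.4
```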

Since we have already ranked the publishing models in G (let us call them G–models for brevity) on each VG–parameter, we now only need to place them on the 0–1 scale delimited by the bottom and top thresholds. What matters here is to establish the correct intervals. How do we move from a simple ranking to establishing the intervals? A fairly simple way is to use a visual analogue scale. For each VG–parameter we need to single out the G–models that present between them the shortest and the longest intervals, and then place them on a visual scale (e.g. a graded vertical line 10 cm long). See figure 1.

At this point we need to add the remaining G–models (if any) and place them according to our ranking. We now have a provisional interval scaling, which we need to refine by comparing each interval with all the others until we reach a scale that we believe faithfully reflects the desirability gaps between the options. When we have achieved this, we can convert the spatial distances into numerical intervals, and this gives us cardinal scores for each G–model on a given parameter.
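Converting the visual analogue scale into numbers amounts to dividing each placement by the length of the line. The sketch below assumes a 10 cm line with the bottom threshold at 0 cm and the top threshold at 10 cm, and checks that the placements respect the ordinal ranking already made; the placements themselves are hypothetical, using the TD–C ranking of table 7c.

```python
def vas_to_scores(positions_cm, ranking, line_cm=10.0):
    """Convert placements on a visual analogue scale into 0-1 cardinal scores.

    positions_cm: model -> distance (cm) from the bottom threshold.
    ranking: models from best to worst on this parameter (the ordinal ranking).
    """
    scores = {}
    for model, pos in positions_cm.items():
        if not 0.0 <= pos <= line_cm:
            raise ValueError(f"{model} is placed outside the scale")
        scores[model] = pos / line_cm
    # A better-ranked model must not sit below a worse-ranked one.
    ordered = [scores[m] for m in ranking]
    if any(better < worse for better, worse in zip(ordered, ordered[1:])):
        raise ValueError("placements contradict the ordinal ranking")
    return scores


# Hypothetical placements for TD-C, ranked Z > Y > X as in table 7c.
td_c = vas_to_scores({"Z": 9.0, "Y": 5.0, "X": 2.0}, ranking=["Z", "Y", "X"])
print(td_c)  # {'Z': 0.9, 'Y': 0.5, 'X': 0.2}
```

The ordering check is a cheap safeguard during the refinement step: if moving a mark on the line would reverse the ordinal ranking, either the mark or the ranking needs to be reconsidered.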

We now have to construct these scores for every VG–parameter. Once we have done this, we only have to multiply these scores by the importance coefficient of each VG–parameter. Remember that we have attributed to each VG–parameter a variability score and a value score (see table 6 above and footnote 23), and by multiplying one by the other we have worked out the importance coefficient of each VG–parameter.

Once we have multiplied parameter–specific scores by the coefficient of importance we have standardized them, i.e. we have made them commensurable. So now we only have to sum the standardized parameter–specific scores of each G–model in order to calculate their overall score. The G–model with the highest overall score is the best available option.
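The final aggregation is a weighted sum. This sketch reuses the importance coefficients of the example in section 7.3; the 0–1 cardinal scores are hypothetical, chosen only to be consistent with the rankings of table 7c, which is the situation that motivates the cardinal evaluation.

```python
# Importance coefficients from the example in section 7.3.
WEIGHTS = {"TD-C": 0.66, "TD-B": 0.435, "TD-D": 0.217}

# Hypothetical 0-1 cardinal scores per VG-parameter, consistent with table 7c.
SCORES = {
    "TD-C": {"Z": 0.9, "Y": 0.5, "X": 0.2},
    "TD-B": {"X": 0.8, "Y": 0.6, "Z": 0.1},
    "TD-D": {"Y": 0.7, "X": 0.6, "Z": 0.3},
}


def overall(scores, weights):
    """Sum each G-model's parameter scores after multiplying by importance."""
    totals = {}
    for param, per_model in scores.items():
        for model, s in per_model.items():
            totals[model] = totals.get(model, 0.0) + weights[param] * s
    return totals


totals = overall(SCORES, WEIGHTS)
best = max(totals, key=totals.get)
print(best)  # Y
```

With these invented scores Y (about 0.74) edges out Z (about 0.70) and X (about 0.61); different placements on the visual scale could easily change the outcome, which is why the interval refinement step matters.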

8. An example of application

Suppose that a group of scientists and scholars wants to launch a new journal. They come from a variety of disciplines, ranging from natural sciences (biology, chemistry) to humanities (history, philosophy), and from applied disciplines (medical sciences, engineering) to social sciences (STS, economics). They want a forum for discussing the practical and methodological issues stemming from trans–disciplinary collaboration; accordingly the journal will be called Perspectives on Trans–disciplinary Integration. Because of their diverse backgrounds they have different publishing cultures and needs. Table 5 will immediately help them bring into focus some of the issues they will have to tackle. Suppose that they all agree on the type of peer review they want and that it would be a very good thing for the journal to be OA. But as they move through the list, differences begin to emerge. Humanists are strongly averse to Article Processing Charges because they do not have funds to cover these costs, but some of them are not so keen on giving up print publishing. Younger members of the group are very sensitive to the need for the shortest possible gap between acceptance and publication because they are on tenure–track or temporary contracts. Researchers from medical sciences and engineering attach great importance to very effective indexing, so that their target audiences will be able to find their contributions. Natural scientists are positive towards very liberal licensing, while engineers, medical scientists, social scientists and humanists are more worried and want to secure tighter copyright protection, albeit for different reasons: humanists and social scientists because they care about authorship, researchers from medical and engineering sciences because they are concerned about patents and funders’ demands.
Finally, researchers from medical sciences and other high–impact disciplines are pushing for article–specific metrics, so as not to suffer too much from publishing in a journal that will probably have a lower IF than their disciplinary journals. Most of these issues would not have been raised if they had discussed only OA. Furthermore, the diversity of needs and preferences suggests that they will need at least to reflect together on their preferred publishing model, and possibly to rank and measure their preferences; they may therefore well take advantage of the tools presented in section 7.

9. Conclusions and recommendations

Digital technologies have brought novelties and new possibilities to academic publishing. Some constraints of the printing age have been removed or relaxed. This fact, combined with the continuous expansion of academic research and publishing, is pushing many scholarly communities to consider founding new journals or changing the publishing model of existing journals. This wave of change is currently understood as a move towards OA publishing, and there is a widespread assumption that OA is good and that editorial boards of new journals, or of journals changing their publishing model, should try to be as open as possible (cf. SPARC’s How Open Is It? Guide[25]). We believe that the emergence of digital technologies has brought more meaningful opportunities than are captured by the concept of OA alone, even when this is understood, as it should be, as Open Access and Use. For instance, emphasis on OA tends to overlook a problem intensely felt by many academics, namely the very long publishing times of many traditional journals; these waiting times could often be cut drastically by moving from print to digital–only publication.[26] It seems paradoxical to demand immediate OA while accepting that some journals impose delays longer than 2 years because of their backlogs.[27] Another example is the need to develop article–specific metrics. For instance, the impact factor (IF) of a journal gives a very inaccurate estimate of the IF of each article published in it. Inferring the IF of an article from that of the host journal can be compared to inferences that are standardly considered examples of poor reasoning, like group thinking (i.e. attributing to individuals the features of a group to which they belong; e.g. she is Swiss, therefore she is punctual). Digital technologies promise to offer correctives to these poor inferences, but such possibilities are beyond the concerns of the OA movement.

Furthermore, we believe that barriers are not necessarily negative: quality barriers in academic publishing are nearly universally considered fundamental, and the desirability of barriers to use aimed at protecting authorship varies across disciplines.[28] Finally, our experience with the Agora project has shown us that an exclusive focus on maximizing openness has led to definitions of OA that are, in our view, unfairly exclusionary. We have therefore tried to offer a more exhaustive map of the values and desiderata important in academic journal publishing. We hope that the framework generated by this mapping will be useful for editorial boards considering different publishing models. We also believe that our framework helps to stimulate a better understanding of the key values in academic publishing. This information should be of interest to all those involved in academic publishing and in the OA movement. Their strategic and policy choices would benefit from the broader point of view offered by the framework. For instance, agencies trying to promote OA should not set standards that cannot be met without sacrificing other legitimate and important desiderata in academic publishing. Helping academic communities to find the best viable publishing model to address their multiple desiderata should be the priority, while openness should be pursued within the limits afforded by this broader agenda, not as an end in itself.

Appendix: Further analysis of stakeholders’ values

In this appendix we report the part of the stakeholder analysis that has not revealed any value worth including in table 4. Readers may nonetheless be interested in knowing why the interests of librarians, publishers and research funders have not been represented by any additional value.

Publishers are either commercial or non–profit. Non–profit publishers do not bring into the picture new values: they are supposed to be dedicated to the promotion and circulation of knowledge and hence to efficiency, scholarly virtues and fairness. Commercial publishers are instead supposed to make a profit as well. There is nothing wrong with being able to provide a service (or to produce a good) in such a way that makes a profit. In this respect making a profit is like pursuing fame: it is a legitimate interest as long as it respects the relevant side–constraints (e.g. fairness). So commercial publishers have a legitimate claim to be able to make a profit as long as they play within the rules of academic publishing and as long as they respect its purpose and values (i.e. those we have illustrated above). But they do not have any legitimate right to determine the rules of academic publishing so as to make it a profitable activity, at least not when this interferes with the aim and values of the practice. This boils down to the very obvious point that when non–profit alternatives are not available or not working, commercial publishers are needed and hence they have some bargaining power and they will only enter the game if they can make a profit. This, in our view, is perfectly legitimate and acceptable. But it is very different from claiming that things have to remain so as to allow commercial publishers to make profits, when some changes (e.g. some possibilities afforded by digital technologies) may make the process more efficient in serving the value of knowledge production and circulation. Let us illustrate the point with an analogy.

Suppose that most of the elderly who need care are looked after by their families. Some elderly people have no caring families. Charitable, non–profit organizations manage to look after some of these elderly people. Among the elderly who need care and have no caring families, some can pay for the care they need. Obviously it is perfectly legitimate for commercial companies to provide this service and make a profit from it. But suppose that charitable organizations find a way of providing their services much more efficiently and are now able to care for all the elderly without caring families. This would drive commercial organizations out of the business, but they would have no claim that they are entitled to make a profit and that therefore, say, charitable organizations should be prevented from adopting the new management strategy that enables them to serve all the elderly. Similarly, commercial publishers have no claim that academic publishing should be organized, or subjected to legal regulations, so as to make it possible for them to make a profit. In short, if commercial publishers provide indispensable services, they will have the bargaining power to negotiate conditions that enable them to make a profit, but if they are not indispensable there is no reason why they should be entitled to dictate such conditions. We believe that this amounts to saying that commercial publishers have no ethical claim about the conditions to be imposed on non–commercial publishing. If there is a market for academic publishing, commercial publishers are free to try their luck in this market, but if the market shrinks or disappears, then tough luck.

Things are not very different when it comes to librarians. Their activity is defined by the function of serving the preservation and distribution of knowledge. As long as they perform this task efficiently and successfully, they are entitled to appropriate recognition, rewards (including financial rewards) etc. So as long as their services are needed, publishing should not undermine their profession and their ability to perform their valuable function. But if, say, new technologies bring about deep transformations in the storage and circulation of knowledge, this is just tough luck for the librarians. It is their task to adapt to changed circumstances; they cannot claim that other actors forfeit, say, some gains in efficiency in order to preserve the current role of librarians.[29]

Things are different when we consider research funders. It seems quite reasonable to claim that funders should be entitled to have a say about the kind of knowledge that they are interested in funding and about the ways in which it should be disseminated. It seems hard to deny that the simple principle that ‘who pays the piper picks the tune’ has some force. To be sure, that does not mean that funders should be allowed to interfere with the principles that govern research and its criteria of scientific or scholarly validity. Furthermore, both in science and in scholarship there is a well–established epistemological requirement that results be made public and open to public scrutiny and criticism (at least by experts in the relevant field of knowledge). From this it seems to follow that funders do not have a prerogative to hinder or prevent these forms of open scrutiny. If the purpose of research is the pursuit and dissemination of knowledge, any action or restriction that obstructs the pursuit and circulation of knowledge contradicts the purpose of research and hence is a pragmatic contradiction (i.e. it is inconsistent with the stated purpose of promoting research). So a funder seems to have a legitimate prerogative in deciding to fund Pathology rather than Histology, or Greek Philology rather than Sociology; but they do not have a prerogative to decide that the knowledge they have funded should be disclosed only to people over 50 years old or only to institutions based in countries lying north of the 14th parallel. 
Fortunately, funders have recently been pushing in the direction of a wider circulation of the research they fund and have taken the initiative to remove some barriers to the circulation and use of knowledge.[30] This seems a completely acceptable demand, which is open to question not in terms of principle but only in terms of the most effective strategies for achieving this result.[31] However, the bottom line here is that funders’ claims are valid only when they are consistent and compatible with the values that we have already pointed out, and this is true also when we deal with the claim of ownership. Results that are kept hidden pervert the pursuit of knowledge and lose their status as genuine pieces of knowledge. Unfortunately they also affect the status of the knowledge that is disclosed, as is well exemplified by the problem of the non–publication of negative results (a notorious problem with medical trials funded by pharmaceutical companies).[32] To put it roughly, the principle of ownership cannot be applied to research in any way that undermines the practices of epistemic validation and refutation.

We thus come to the conclusion that, looking at the interests of publishers, librarians and funders, we do not find any new relevant value that should be upheld in academic publishing. This does not mean that the claims of these stakeholders should be dismissed or ignored. It simply means that their claims have weight when they can be convincingly put in terms of the values relevant to academic publishing, or are at least compatible with and supportive of these values. So, for instance, as long as libraries are a necessary medium in the preservation and circulation of knowledge, their needs are relevant.[33]

Acknowledgments

The authors would like to thank the anonymous referees for helpful comments and the Journal editors for their help and support.

Giovanni De Grandis was a postdoc at the University of Copenhagen and an AGORA project researcher.

Yrsa Neuman is a philosopher and researcher at Åbo Akademi Foundation Research Institute and was an AGORA project researcher.

Notes

The project was a CIP–project co–funded by the European Commission under the “Information and Communication Technologies Policy Support Programme” (ICT PSP). Project Agora, AGORA Scholarly Open Access Research in European Philosophy, http://www.project-agora.org/ (Accessed May 4, 2014).

Nordic Wittgenstein Review (ISSN 2242–248X), http://www.nordicwittgensteinreview.com/ (Accessed May 4, 2014).

Creative Commons, Attribution-NonCommercial-ShareAlike 2.0 Generic, https://creativecommons.org/licenses/by-nc-sa/2.0/ (Accessed May 4, 2014).

In the meantime Ontos has been acquired by De Gruyter. The first 3 issues of NWR were published by Ontos; it is currently published by De Gruyter (ISSN 2194–6825).

See Directory of Open Access Journals (DOAJ), http://doaj.org/ (Accessed May 4, 2014) and Open Access Scholarly Publishers Association (OASPA), http://oaspa.org/ (Accessed May 4, 2014).

What we are claiming is simply that, to most academics who are not especially involved with digital technologies, OA is the most visible issue around digital technologies. The large echo of recent initiatives like the Finch Report and the Academic Spring, as well as events like Aaron Swartz’s suicide, has brought a lot of attention to OA and made it a popular topic for discussion. The Finch Report is available through the Research Information Network, http://www.researchinfonet.org/wp-content/uploads/2012/06/Finch-Group-report-FINAL-VERSION.pdf (Accessed May 4, 2014); for a good starting point and useful links on the Academic Spring see Eliza Anyangwe, “A (free) roundup of content on the Academic Spring,” Higher Education Network, The Guardian, April 12, 2012 http://www.theguardian.com/higher-education-network/blog/2012/apr/12/blogs-on-the-academic-spring (Accessed May 4, 2014); for an example of how Swartz’s suicide brought attention to OA issues see Andrea Peterson, “Will Aaron Swartz’s Suicide Make the Open-Access Movement Mainstream?,” Slate, January 13, 2013 http://www.slate.com/blogs/future_tense/2013/01/13/aaron_swartz_s_suicide_may_make_the_open_access_movement_mainstream.html (Accessed May 5, 2014). No other issue around digital technologies in academic publishing has had comparable visibility and media coverage. Even such a broad-ranging book as Martin Weller’s The Digital Scholar: How Technology is Transforming Scholarly Practice (London: Bloomsbury, 2011) http://www.bloomsburyacademic.com/view/DigitalScholar_9781849666275/book-ba-9781849666275.xml;jsessionid=2229AEFD55A03FC84798F39CAB80FEE5 (Accessed May 5, 2014) focuses on Open Access in its chapter on publishing (chapter 12).

On the importance of open use and on the difference (and complementarity) between gratis OA (i.e. removal of price barriers) and libre OA, see Peter Suber, Open Access (Cambridge, MA: MIT Press, 2013), 4–6.

For a similar argument cf. Alice Bell, “Beyond Open Access: Understanding Science’s Enclosures,” The Guardian, November 18, 2013, www.theguardian.com/science/2013/nov/18/beyond-open-access-understanding-sciences-enclosures (Accessed May 5, 2014). See also Ellen Collins, “Why Open Access Isn’t Enough in Itself,” Higher Education Network, The Guardian, August 14, 2013, www.theguardian.com/higher-education-network/blog/2013/aug/14/open-access-media-coverage-research (Accessed May 5, 2014).

As Weller writes, “the ostensible aim is to remove (not just reduce) barriers to access” (Weller, The Digital Scholar, chapter 12). As noted above, policies like the DOAJ decision to exclude any form of delayed OA also betray an uncompromising attitude. SPARC’s now popular diagram How Open Is It? (http://www.sparc.arl.org/sites/default/files/hoii_guide_rev4_web.pdf [Accessed May 4, 2014]) also implicitly suggests that people should try to get as close as possible to the top of the openness scale.

A large survey by Taylor and Francis/Routledge shows that authors believe that the ability to publish should not depend on the ability to pay; Will Frass, Jo Cross and Victoria Gardner, Open Access Survey: Exploring the Views of Taylor and Francis and Routledge Authors (London: Taylor and Francis, 2013), 7, http://www.tandf.co.uk/journals/explore/Open-Access-Survey-March2013.pdf (Accessed May 4, 2014). In a survey we conducted among NWR authors, 28 out of 46 respondents chose the answer “I would never pay nor have my institution pay Open Access fees,” and fewer than 15 were prepared to pay a fee.

Of course we neither mean to suggest that predatory publishers and commercial publishers are on a par, nor do we have any hostile intentions towards the latter. Nevertheless, here we are not interested in journals as profit opportunities, but in journals as services to the scholarly/scientific community. There are examples of interests that may pervert the mission of academic publishing. Probably the most striking example is the attempt by the pharmaceutical industry to exercise control over the publication process in some areas of biomedical science.

The reasons why we call it worthiness are explained in section 5 below.

One can see community sustainability as the set of conditions that a journal addressing, and counting on, a specific community needs to meet, and worthiness as the set of objectives that a specific journal, with its distinctive mission, aims to realize.

Nevertheless, in section 7b we take advantage of the possibility of handling worthiness and community sustainability one at a time to make things simpler.

Knowledge is clearly the principal value in Merton’s description of the ethos of science; see Robert Merton, “The Normative Structure of Science,” reprinted in Merton, The Sociology of Science, Norman W. Storer, ed. (Chicago: University of Chicago Press, 1973). Many have criticized his account as very idealistic and very few would accept it today, but that does not mean that the ideal of the pursuit of knowledge has disappeared from science. For a recent example of its enduring importance see, for instance, Alma Swan, “Open Access and the Progress of Science,” American Scientist 95 (May–June 2007), 198–200, http://eprints.soton.ac.uk/263860/1/American_Scientist_article.pdf (Accessed May 4, 2014).

Still there are different views on the value of Knowledge: for instance it can be seen either as having intrinsic value, or as having instrumental value, or both.

Here equality of opportunity is understood in a narrow sense, namely as equality of opportunity among those who have received the education and training that make them capable of contributing scholarly work. It is not understood in the broader sense, required by some theories of social justice (Rawls’s theory, for instance; see John Rawls, A Theory of Justice [Cambridge, MA: Harvard University Press, 1971]), in which every citizen is given fair equality of opportunity to pursue their favourite course of life. In other words, it is fair equality of opportunity within academia, not within society.

We use the concept of human development because of its breadth, its current popularity and its applicability to both individuals and communities. The loci classici for a detailed account of the concept are Amartya Sen, Development as Freedom (Oxford: Oxford University Press, 1999) and Martha Nussbaum, Creating Capabilities: The Human Development Approach (Cambridge, MA: Harvard University Press, 2011).

This argument is often found in the literature that advocates OA, typically in relation to the benefits of OA for developing countries. See for instance the Salvador Declaration on Open Access: The Developing World Perspective (http://www.icml9.org/meetings/openaccess/public/documents/declaration.htm) and the OASIS page on Open Access and Developing Countries (http://www.openoasis.org/index.php?option=com_content&view=article&id=28&Itemid=412), which also provides a useful bibliography on this topic.

We have not found an explicit version of this argument; however, the final report of the UNESCO Netherlands Expert Meeting on Open Access (http://www.unesco.org/new/fileadmin/MULTIMEDIA/HQ/CI/CI/pdf/themes/access_to_knowledge_societies/open_access/en%20-%20UNESCO%20expert%20meeting%20Open%20Access%20conclusions.pdf) suggests a possible argument along these lines when it describes OA as “an instrument for realizing the rights to share in scientific advancement and its benefits, to education and to information (articles 27, 26, and 19 of the Universal Declaration of Human Rights respectively).”

Cf. Michael Parker, “The Ethics of Open Access Publishing,” BMC Medical Ethics 14, no. 16 (2013). For an overview of the importance of public participation and involvement in policy-making see Maarten A. Hajer and Hendrik Wagenaar (eds.), Deliberative Policy Analysis: Understanding Governance in the Network Society (Cambridge, UK: Cambridge University Press, 2003).

This is true even if all actual publishing models satisfy a certain parameter. That simply means that the threshold is so easy to meet that the parameter is, in practice, non-exclusionary. In that case we can simply remove it from the table, since it plays no practical role in our choice; this poses no conceptual challenge to our approach.

A scale with 5 options (e.g. 1. Marginal [0.1]; 2. Moderate [0.3]; 3. Fair [0.5]; 4. Significant [0.7]; 5. Very important [0.9]) will already provide a much finer-grained measurement of differences and more precise tracking of intervals. We recommend these finer-grained scales when the importance and variability of parameters are measured in the context of the cardinal evaluations described in the next section (7d).
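As a minimal illustration of the five-option scale in the note above, the labels can be paired with their bracketed cardinal values in a lookup table, which makes it easy to check that adjacent levels are evenly spaced (0.2 apart). This is only a sketch in Python; the names IMPORTANCE_SCALE, to_cardinal and step_sizes are hypothetical, and the code does not reproduce the cardinal evaluation procedure of section 7d.

```python
# Hypothetical encoding of the five-option importance scale: each ordinal
# label is paired with the cardinal value given in brackets in the note.
IMPORTANCE_SCALE = {
    "Marginal": 0.1,
    "Moderate": 0.3,
    "Fair": 0.5,
    "Significant": 0.7,
    "Very important": 0.9,
}


def to_cardinal(label: str) -> float:
    """Return the cardinal value for an ordinal importance label."""
    try:
        return IMPORTANCE_SCALE[label]
    except KeyError:
        raise ValueError(f"unknown importance label: {label!r}") from None


def step_sizes(scale: dict) -> list:
    """Differences between consecutive levels, in the scale's order.

    Rounding removes floating-point noise (e.g. 0.7 - 0.5 is not exactly 0.2).
    """
    values = list(scale.values())
    return [round(b - a, 10) for a, b in zip(values, values[1:])]


if __name__ == "__main__":
    print(to_cardinal("Significant"))    # 0.7
    print(step_sizes(IMPORTANCE_SCALE))  # [0.2, 0.2, 0.2, 0.2]
```

Equal steps are what allow intervals, and not merely rankings, to be compared: for instance, the gap between "Moderate" and "Significant" (0.4) is twice the gap between adjacent levels.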

The theoretical reason for not considering the ‘informal’ option inferior is that we do not consider given preferences as perfectly rational and immutable. The informal deliberative method allows for the reflective modification of preferences, while the formal method does not. Hence the informal method has at least one important advantage.

http://www.sparc.arl.org/sites/default/files/hoii_guide_rev4_web.pdf (Accessed May 4, 2014).

Yet some people (especially in the humanities) still prefer to have a printed version of the journal as well. For instance, NWR has a small but unexpected number of individual subscriptions to the print version.

A good example of how long publication delays can be, and how serious a problem they are for authors, can be found in a discussion on Brian Leiter’s blog, Leiter Reports: A Philosophy Blog, “Open Access Journals in Philosophy: Why Aren't There More, and More Better Ones?” http://leiterreports.typepad.com/blog/2009/06/open-access-journals-in-philosophy-why-arent-there-more-and-more-better-ones.html (Accessed May 4, 2014). See comment 13 by Mark Schroeder.

Most advocates of OA fully acknowledge the need for quality barriers and stress that OA is compatible with peer review; for instance Suber in his Open Access begins a section entitled “What OA is not” by writing that “OA isn’t an attempt to bypass peer review” (p. 20). We are not suggesting that OA is against quality controls, but that it treats them as an ad hoc exception to its intention to remove barriers. Concerning authors’ rights, Nigel Vincent for instance argues that in the humanities (and to a lesser extent also in the social sciences) the writing and the organisation and exposition of the content are themselves an essential feature of the scholarly work, so that authors often prefer licenses that do not allow derivatives (ND). See Nigel Vincent, “The Monograph Challenge,” in Debating Open Access, eds. Nigel Vincent and Chris Wickham (London: The British Academy, 2013), 110–1, https://www.britac.ac.uk/openaccess/debatingopenaccess.cfm (Accessed May 4, 2014). Translations provide another example of derivatives for which, in the humanities, there are good reasons for wanting restrictions.

Of course there is nothing wrong if they decide to renounce some advantages in order not to undermine the role of librarians. But this is, technically speaking, a supererogatory act and not a duty. Indeed, from the point of view of the efficient generation and dissemination of knowledge, this may be an inefficient course of action that should be justified in terms of some other value, for instance the well-being of librarians and their families.

See the list of OA mandates provided by ROARMAP (http://roarmap.eprints.org); Suber, Open Access, chapter 4 is also helpful and gives many useful references and links.

We come back to this issue in the concluding section of the article.

See for instance Daniele Fanelli, “Negative Results are Disappearing from Most Disciplines and Countries,” Scientometrics 90, no. 3 (2012), and Sergio Sismondo, “Pharmaceutical company funding and its consequences: A qualitative systematic review,” Contemporary Clinical Trials 29, no. 2 (2008).

From a practical point of view, though, these are interests that need to be considered at the level of policy making, not by promoters.