Language Technology Enables a Poetics of Interactive Generation

This work is licensed under a Creative Commons Attribution-NonCommercial 3.0 United States License. Please contact mpub-help@umich.edu to use this work in a way not covered by the license.

Abstract

Computer poetry generation has historically been pursued from a number of different traditions: Digital Poetry, Oulipo, recreational programming, and language research. This article makes several suggestions for distributing interactive poetry generators given the contemporary technological environment. A number of case studies are provided to illustrate these points, and the popular n-gram poetry-generation technique is described.

1. Building on Traditions of Interactive Poetry Generation

1.1 Introduction

Just as networked personal computers are encouraging new approaches to poetry that depend on computation (Funkhouser 2008), recent trends in language technology are enabling new types of poetic expression. These language technologies include algorithms, computational models, and corpora. There are a number of ways that these technologies can be used to produce poetry. Such production often involves developing new ways for the computer to generate text, with various types of input from a human. This approach to poetry exposes and innovates relationships between the author, authoring tool, text, means of distribution, and reader.

There is no unified community practicing such a poetics. This is probably due to the cultural and historical divisions between artists, humanists, engineers, and scientists, as well as to the fact that humans are generally good enough at writing expressive poetry that the input of computer programs is not needed. However, because computer poetry generation can be considered one of the “key benchmarks of general human intelligence” (Manurung 2003), it has been pursued by various researchers and programmers in the past and is likely to be of continued interest in the future. Likewise, poets and theorists have explored new computational tools in the past and are likely to do so in the future. Those who develop an interest in generating computer poetry should consider how they can effectively chronicle their efforts to both contemporary and future fellow practitioners, acknowledging the difficulties created by technological obsolescence and distances of time and location.

This article begins by describing historical related work in interactive poetry generation. Section 2 describes the importance of implementations, interactive interfaces, and online communities, and Section 3 discusses case studies and related issues.

1.2 Related Work

One of the earliest computer generators of expressive text was programmed by physicist Christopher Strachey in 1953 and produced stylized love letters as shown below. The algorithm producing the text was based on a template that randomly selected words from sets that were classed principally by part of speech. In the example below, the first word is selected from a set of adjectives, and the second word from a set of nouns. Variations in the body of the text are produced by randomly selecting one of two sentence templates (Link 2006).

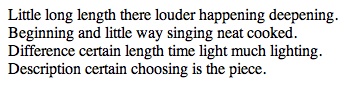

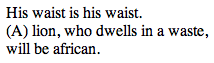

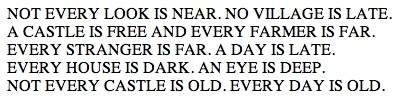
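The template mechanism described above can be illustrated with a minimal Python sketch. The word sets and the two sentence templates are invented placeholders, not Strachey's actual vocabulary or templates; only the overall mechanism (a random choice between two templates, then random choices from sets classed by part of speech) follows the description.

```python
import random

# Hypothetical word sets classed by part of speech (not Strachey's vocabulary).
ADJECTIVES = ["darling", "precious", "tender", "wistful"]
NOUNS = ["heart", "longing", "devotion", "fancy"]
VERBS = ["treasures", "craves", "adores", "cherishes"]

# Two hypothetical sentence templates with named slots.
TEMPLATES = [
    "My {adj1} {noun1} {verb} your {adj2} {noun2}.",
    "You are my {adj1} {noun1}.",
]


def generate_sentence(rng):
    """Pick one of the two templates at random and fill its slots."""
    template = rng.choice(TEMPLATES)
    # str.format ignores keyword arguments that a template does not use.
    return template.format(
        adj1=rng.choice(ADJECTIVES),
        adj2=rng.choice(ADJECTIVES),
        noun1=rng.choice(NOUNS),
        noun2=rng.choice(NOUNS),
        verb=rng.choice(VERBS),
    )


def generate_letter(rng, sentences=3):
    """Salutation (an adjective, then a noun) followed by templated sentences."""
    salutation = f"{rng.choice(ADJECTIVES).capitalize()} {rng.choice(NOUNS).capitalize()},"
    body = " ".join(generate_sentence(rng) for _ in range(sentences))
    return f"{salutation}\n{body}"


print(generate_letter(random.Random()))
```

Passing the random number generator in as `rng` is a design choice made here so that a fixed seed reproduces a given letter; it is not part of the historical program.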

Strachey’s love letters are arguably prose rather than poetry, but a stronger case for poetry can be made for the Stochastic Texts generated in 1959 by German academic Theo Lutz. Figure 1.2 shows a selection of output from Lutz’s generator, which worked by using templates to randomly select from sets of words. Notably, Lutz selected nouns and verbs from Franz Kafka’s novel, “The Castle” (Lutz 1959).

In 1954, American poet Jackson Mac Low began using aleatoric (random) composition techniques to produce poems with the goal of “evad[ing] the ego” (Mac Low and Ott 1979). One of his earliest efforts was “5 biblical poems” written in 1954–1955 (Campbell 1998), in which verses from Judeo-Christian holy texts were copied with words randomly replaced by punctuation. This approach is an early example of the erasures technique, in which a poem is created by removing words from an existing poem (Macdonald 2009).

Mac Low also composed using a diastic technique, which worked by analogy to acrostics: Given an input text and seed text, the poet would look through the input text to select the first word whose first character was the same as the first character of the seed text, then the next word whose second character was the same as the second character of the seed text, and so on (Hartman 1996). Although Mac Low’s techniques were initially performed by hand, later poems used computer tools; Figure 1.4 below shows a later diastic poem composed by Mac Low using Charles Hartman’s program DIASTEX5, using input from Gertrude Stein with some editing of the output. Like erasures, diastics foreshadow later techniques that work by modifying an existing text.
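The diastic procedure described above might be sketched in Python as follows. This is an illustrative reading of the prose description, not a reconstruction of DIASTEX5. Two details are assumptions: letter positions restart for each word of a multi-word seed, and a seed letter with no remaining match is silently skipped.

```python
import re


def next_match(words, start, letter, pos):
    """Index of the first word at or after `start` with `letter` at `pos`, or -1."""
    for i in range(start, len(words)):
        if len(words[i]) > pos and words[i][pos] == letter:
            return i
    return -1


def diastic(input_text, seed_text):
    """Select words from input_text by 'spelling through' seed_text."""
    words = re.findall(r"[a-z']+", input_text.lower())
    selected = []
    cursor = 0  # the search only ever moves forward through the input
    for seed_word in seed_text.lower().split():
        # Assumption: positions restart at 0 for each seed word.
        for pos, letter in enumerate(seed_word):
            found = next_match(words, cursor, letter, pos)
            if found != -1:  # assumption: unmatched letters are skipped
                selected.append(words[found])
                cursor = found + 1
    return selected


source = "poems offer every music some open eager atoms"
print(" ".join(diastic(source, "poem")))
```

With the seed “poem”, the sketch selects a word beginning with “p”, then a later word with “o” in second position, and so on, which mirrors the analogy to acrostics.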

A major contribution occurred in 1960 with the founding of Oulipo, a French group of writers, poets, and mathematicians (Matthews and Brotchie 2005). Oulipo’s approaches include new poetic forms defined by constraints for the human author and generative methods that produce automated output. The group was founded by the poet and novelist Raymond Queneau after he developed “100,000,000,000,000 Poems,” a sonnet that provided 10 interchangeable possibilities for each line. Another of the group’s earliest contributions was the “n+7” method of poet Jean Lescure. In this method, the poet would select a text and a dictionary and replace every noun of the text with the seventh noun that followed it in the dictionary. Oulipo grew to include members such as Italo Calvino and Marcel Duchamp, and is still active as of 2011, principally in France but with affiliates in several other countries. Oulipo’s methods did not initially use computers, although later efforts did as described below.
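As a rough illustration of the “n+7” method, the following Python sketch replaces each noun with the noun seven entries later in a sorted noun list. The noun list stands in for the dictionary, and a word counts as a noun only if it appears in that list; both are simplifying assumptions, as is wrapping around at the end of the list.

```python
import bisect
import re


def n_plus_7(text, nouns, offset=7):
    """Replace every listed noun in `text` with the noun `offset` entries later."""
    sorted_nouns = sorted(set(n.lower() for n in nouns))
    noun_set = set(sorted_nouns)

    def replace(match):
        word = match.group(0)
        lower = word.lower()
        if lower not in noun_set:
            return word  # not a noun we know about: leave unchanged
        index = bisect.bisect_left(sorted_nouns, lower)
        # Assumption: wrap around when the list runs out.
        new = sorted_nouns[(index + offset) % len(sorted_nouns)]
        return new.capitalize() if word[0].isupper() else new

    return re.sub(r"[A-Za-z]+", replace, text)


toy_dictionary = ["apple", "bird", "cat", "dog", "egg",
                  "fish", "goat", "hat", "ink", "jar"]
print(n_plus_7("The cat saw a bird.", toy_dictionary))
```

A fuller version would need a real dictionary and some way of identifying nouns, such as a part-of-speech tagger; neither is attempted here.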

One of the next generators of note was developed by R. W. “Bill” Gosper in the early 1970s at the Artificial Intelligence lab at the Massachusetts Institute of Technology (Gosper 1972). Gosper’s approach is related to that of n-gram generation, which is part of several later generators. N-gram generation is further described in Appendix A; in brief, it counts the frequency of sequences of words or characters in a corpus, and uses that count to guide generation. Gosper does not use the term “n-gram” and the implementation he describes would only create a subset of a full n-gram language model, but in the same brief article he also describes an approach that is functionally equivalent to character n-gram generation. Gosper’s approach was later named Dissociated Press and as of 2011 is still part of the default distribution of the open-source Emacs text editor (Free Software Foundation 2011; Wikipedia 2011). Representative output is shown in Figure 1.5. Note the high number of portmanteaus such as “uneasilent,” “Footmance,” and “trings.”

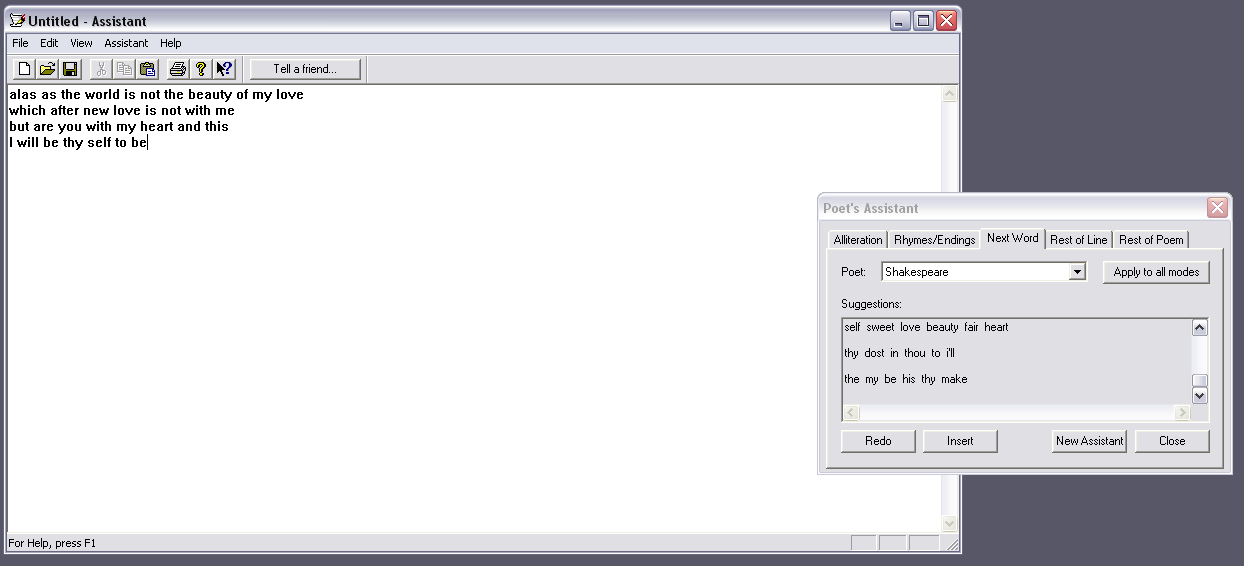
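The character n-gram idea summarized above can be sketched in a few lines of Python. This is a generic illustration, not Gosper's implementation or the Emacs Dissociated Press code. It stores every observed follower character (with repeats) for each context, so sampling from that list is proportional to the observed counts; it assumes n is at least 2 and that the seed is exactly n-1 characters long.

```python
import random
from collections import defaultdict


def build_model(corpus, n=3):
    """Map each (n-1)-character context to the characters seen after it.

    Followers are stored with repeats, so frequent continuations are
    proportionally more likely to be chosen during generation.
    """
    model = defaultdict(list)
    for i in range(len(corpus) - n + 1):
        context = corpus[i:i + n - 1]
        model[context].append(corpus[i + n - 1])
    return model


def generate(model, seed, length, rng):
    """Extend `seed` one character at a time, guided by the model."""
    context_size = len(seed)  # assumed to equal n - 1
    out = seed
    while len(out) < length:
        followers = model.get(out[-context_size:])
        if not followers:  # context never seen in the corpus: stop
            break
        out += rng.choice(followers)
    return out


corpus = "the theory of the thing then thins the thread"
model = build_model(corpus, n=3)
print(generate(model, "th", 40, random.Random()))
```

Because each step only looks at the previous n-1 characters, the output can splice the start of one word onto the end of another, which is one way portmanteaus of the kind noted above can arise.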

A full character n-gram generator called Travesty was created by Hugh Kenner and Joseph O’Rourke of Johns Hopkins University by 1984 (Hartman 1996) and a word n-gram generator called Mark V. Shaney was created by Bruce Ellis and Don P. Mitchell of AT&T Bell Laboratories by 1989 (Dewdney 1989), both of which produced text as unformatted prose rather than formatted as poetry. Contemporaneously, entrepreneur Ray Kurzweil began development of “Ray Kurzweil’s Cybernetic Poet” (RKCP), which eventually became a product distributed over the web (Kurzweil CyberArt Technologies 2000). RKCP’s last supported platform was Windows 98, but it included an n-gram generator constrained by form and rhyme schemes, and the ability to build custom language models (Kurzweil CyberArt Technologies 2007). RKCP’s generator produced brief poems, words, and rhymes as part of a screen saver. RKCP also contained a generator interface that used human text input to suggest next words, lines of poetry, and entire poems.

In 1982, members of Oulipo founded ALAMO, a group focusing on literature produced by mathematical and computational techniques. Their early efforts were “small scale” and “never completed” (Matthews and Brotchie 2005). Later works included the template-based generator LAPAL, as well as “Rimbaudelaires,” which dynamically replaced the nouns, adjectives, and verbs from Arthur Rimbaud’s sonnet “Le dormeur du val” with those from the writings of Charles Baudelaire (ALAMO 2000).

In the 1980s, poet and academic Charles Hartman published a number of poems using output selected from poetry generators, and developed a number of programs including the above-mentioned DIASTEX5, the grammar-based generator Prose, and the automatic scansion analyzer Scandroid (Hartman 1996).

Also in the 1980s, American programmer Rosemary West developed POETRY GENERATOR (Dewdney 1989), a template-based generator for DOS PCs. It was originally sold as a consumer product, but later became freeware as part of a “Creativity Package” including the generators Versifier and Thunder Thought, which were meant to allow “computer-aided brainstorming” (West 1996).

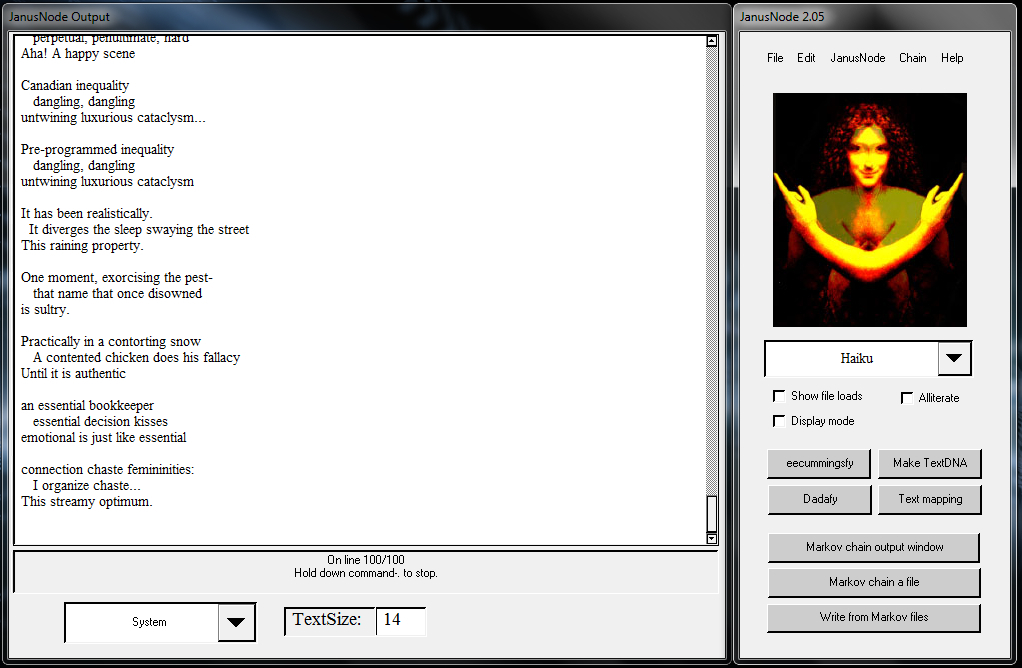

JanusNode is a text generator and manipulator that was also developed and distributed in the freeware/donationware tradition. JanusNode was programmed in the 1980s as a program called “McPoet,” and re-written in the 2000s (Westbury 2003). The latest versions of JanusNode contain a graphic user interface for rule-based poetry and text generation, bigram and trigram word and character modeling and generation, and post-generation text replacements. The user can modify the rules for generation and replacement, and edit the output before or after replacements.

The turn of the century saw the beginning of published research in poetry generation from the perspective of Natural Language Processing, Computational Linguistics, and Artificial Intelligence (NLP/CL/AI). Manurung’s dissertation research in Informatics at the University of Edinburgh (Manurung 1999; Manurung 2003) culminated in the development of a system that used evolutionary algorithms on a human-authored Lexicalized Tree Adjoining Grammar with semantic and metrical constraints. Manurung’s generator, MCGONAGALL, produced haiku, limericks, and mignonnes (a form based on a poem by Clement Marot).

That same time period also saw the beginning of a long-term effort in poetry-generation research by Pablo Gervás and his collaborators at the Universidad Complutense de Madrid (Gervás et al. 2007). Initial generators included a pair of rule-based systems to generate Spanish poetry: WASP, which used heuristics given a set of words and a target form (Gervás 2000), and ASPERA, which used case-based reasoning (Gervás 2001). Later research focused on issues such as replacing existing lines of poetry with those that contain increased alliteration (Hervás et al. 2007).

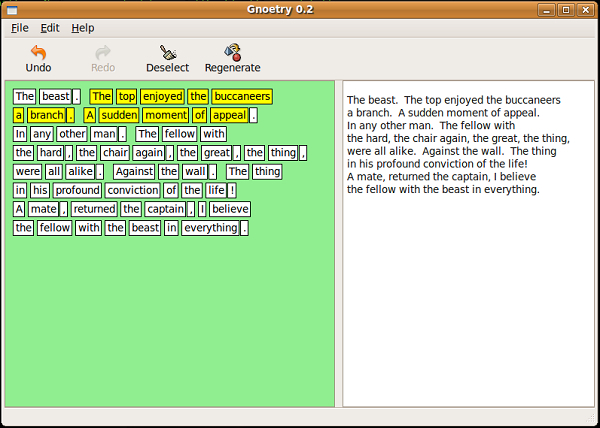

The turn of the century also saw the development and use of Gnoetry, an interactive poetry generator developed by programmer Jon Trowbridge and poet Eric Elshtain (Dena and Elshtain 2006; Beard of Bees 2009). Gnoetry generates poetry using constraints on an n-gram generator built on user-supplied texts; the innovation is in the interface, which allows the poet to iteratively select and regenerate the poem’s words (Trowbridge 2008).

In this time period, programmer Jim Carpenter developed and published several poems from his ETC Poetry Engine (Carpenter et al. 2004), which used probabilistic grammars.

Recent work from the NLP/CL/AI communities includes an interactive system incorporating models from cognitive science of human conceptual blending and metaphor processing (Harrell 2006); the Gaiku system, which generates haiku using Word Association Norms, that is, words that are associated in people’s minds with a given cue word (Netzer et al. 2009); and the use of statistical techniques such as cascaded finite-state transducers and trigram language models for analyzing, generating, and translating poetry (Greene et al. 2010).

The preceding examples are only provided to support the discussion that follows, and should in no way be considered an exhaustive coverage of work in poetry generation. Important omissions include Tristan Tzara’s Dada Poems, the text generator RACTER, the “Tape Mark” poems of Nanni Balestrini, the musician David Bowie’s lyrics generator Verbasizer, the French literary group Transitoire Observable, and the critical perspectives of Marjorie Perloff, N. Katherine Hayles, and Loss Pequeño Glazier, though even this list is incomplete. Furthermore, the preceding is focused on text poetry, while much of the contemporary effort in Digital Poetry is heavily influenced by multimedia tools that became popular around the turn of the century (Funkhouser 2008); this omission is made because the case studies provided later are principally text-based, and because the focus of this article is on the algorithms and interactive elements of poetry generation rather than on the visual elements that are emphasized in most contemporary Digital Poetry.

1.3 Traditions of Interactive Poetry Generation

As the examples in the preceding section show, a number of poets, programmers, and researchers have explored a variety of language technologies and authoring algorithms for generating poetry. For the purposes of this article, we may consider there to be four traditions in poetry generation.

- The Poetic tradition includes those who are interested primarily in the resulting poems: for Mac Low and the Gnoetry poets, the automated techniques being used are generally secondary to the poems being produced, and the program’s output may be modified to produce an interesting poem. Most of the Digital Poets of the turn of the century may be classified here.

- The Oulipo tradition includes those who are interested in developing novel poetic forms, and in studying the types of poems that are generated from a given constraint or combinatorial method. To these poets, the authoring method being used is usually more interesting than the poem that it produced.

- The Programming tradition includes those aligned with the hacker sensibility, referring to those who enjoy understanding the workings of complex systems and finding creative and effective solutions to technical problems (Raymond 2004). Such programmers traditionally value the free distribution of resources such as programs, information, and infrastructure (Raymond 2011). JanusNode, ETC, and West’s POETRY GENERATOR were distributed for the general good; Strachey’s love letters, Dissociated Press, Travesty, and Mark V. Shaney were developed in computer labs principally for enjoyment.

- The Research tradition covers those interested in understanding language use and cognition (including critical work in the humanities) as well as those developing innovative techniques in language engineering. Examples are Lutz, Manurung, Gervás, and the NLP/CL/AI investigators of the past decade.

Some individuals fall into more than one category. Charles Hartman, for example, can claim membership in the poetic, programming, and research traditions. But there is no overarching community that brings together all of these approaches, whose practitioners are spread through time in numerous locations over various disciplines. Digital Poets, Oulipo poets, and researchers are generally aware of work in their own communities, but it is rare, for example, to see a computer science researcher citing critical work in the digital humanities, let alone Oulipo, or a Digital Poet using anything more sophisticated than a template generator.

2. Suggested Approaches: Implementations, Interactivity, and Online Communities

Poetry generation tends to break down the traditional distinctions between poetry, poet, publisher, distributor, and audience. A poet may be one who modifies an existing poem, who develops a new way of authoring poems, who programs and distributes a generator, or who masters an existing generator. An audience member may be one who uses a poetry generator and distributes the output. A poem can be “re-mixed” as part of an authoring process or used to build a language model for interactive generation and become a co-author of sorts.

These are exciting realities for practitioners of poetry generation and suggest a number of productive interactions. For example, Oulipo poets can benefit from the technological sophistication of programmers and researchers, and programmers can benefit from familiarity with the range of expression in Oulipo and recent Digital Poetry. This section suggests three approaches to working in such a context, after which Section 3 provides a set of case studies. But clearly, these approaches are not for everyone. This article is not advocating that any particular approach to poetry be adopted even by contemporary Digital Poets; it is, rather, describing a set of approaches that seem promising given the contemporary language technology environment.

One of the most significant computing advances in the past 50 years has been improvements in ease of use. Today’s graphic user interface-based operating systems may be flawed, but they are a definite improvement over the days of Strachey and Lutz, when CRT monitors were used to show the contents of memory as a matrix of dots, and input had to be provided with punch-cards (Link 2006). Besides operating system infrastructure, today’s computational poets can also use versions of the programs described in Section 1.2, specialized poetry toolkits such as RiTa (Howe 2009), general natural language resources such as OpenNLP (OpenNLP 2010), and widely distributed infrastructure to develop and run JavaScript, Java, and Flash programs. This enables the movement away from poetry generators that run infrequently in situated environments by specialized technicians, to tools that can be used on demand by end-users without concern for the underlying complexities.

So the first suggested approach to poetry generation is: distribute implementations.

A similar suggestion has recently been made in the NLP/CL community (Pedersen 2008), with the goal of ensuring the reproducibility of research results and the availability of language technology resources. Indeed, none of the implementations from the Research community described above are readily available. It is worth noting that in NLP/CL/AI, implementations are developed not as artifacts whose importance is in their unique existence, but as proofs-of-concept, experimental testbeds, or tools.

This suggestion does not mean that poetry generation needs to involve the software engineering of robust products with a promise of end-user support and immediate bug-fixing. Rather, the poetry-generator implementation may be seen as a prototype meant to enable those users willing to explore it. Nor does this suggestion require that the poet actively promotes the use of the implementation. Rather, it is enough that the poet announces the availability of the resource to the appropriate online communities and search engines.

This approach begins to move Digital Poetry away from a perspective in which poetry generators are unique artifacts. Poems that are generated away from the initial programmer’s computing environment are thus opened to variations inherent in platform differences. For example, not all browsers interpret JavaScript in the same way. Some commands that work on a given version of Internet Explorer do not work on earlier versions or on different browsers such as Firefox. If the program is well-designed, it will still work to some extent though it may not have certain features that it would elsewhere. Even with something as seemingly unambiguous as computer programming languages, interpretation is subjective and performative.

Distributing generators forces poets to acknowledge that they are not producing an aesthetic experience that they uniquely control. This minimizes the importance of archiving an implementation exactly as the programmer intended it: No matter how well preserved, the experience will usually not be the same as the original poet created it. Rather, the worthwhile ideas in a given generator will be preserved and re-implemented even as the OS, runtime, or other infrastructure supporting the original implementation becomes obsolete. In fact, after explanations of Travesty and Mark V. Shaney appeared in technical publications, various programmers developed variations that are still available on the web. Implementations are ephemeral; output, methods, and algorithms are eternal.

The second suggested approach to poetry generation is: empower the end-user through interactivity.

An important level of interactivity was pioneered by Dissociated Press, which allowed editing as well as on-demand generation from texts of the user’s choice; interactivity is also a key component of RKCP, JanusNode, and Gnoetry. Significantly, all of these generators also allow the end-user to define the language resources to be used: production rules in the case of JanusNode and corpora for n-gram models in the case of Dissociated Press, RKCP, JanusNode, and Gnoetry.

An objection to allowing interactivity was suggested by ETC programmer Jim Carpenter.

My goals in the etc project include making software whose first draft is the final draft. Since Charles Hartman, folks in this field have held that their generated poetry was a dropping off point, that the work wanted a human touch. But the problem with that approach is that it gives the human-centric critics, with their socially constructed and entrenched intelligism, what they see as a reason to dismiss us. The argument goes that if a piece requires editing, the machine isn’t really doing the work. (Carpenter 2007)

But it seems that the argument “if a piece requires editing, the machine isn’t really doing the work” is weak. By definition, the computer or a programmable algorithm will always be doing some work in poetry generation. It is also true that a human will always be doing some work as well.

Poetry generators are artifacts developed by humans. Even systems with low levels of runtime interaction such as ETC, MCGONAGALL, and Gaiku depend on algorithm implementations, language resources, and parameters that are developed and fine-tuned by humans. Consider the extreme case of AI research in which robots develop their own language without human intervention (Steels 2005; Steels and Loetzsch 2008). Even if such robots one day developed poetry in their own non-human languages, those robots themselves would still be human-created artifacts that represent one approach that humans have taken to generate language. Humans would still have done some work in producing those poems.

Furthermore, it is difficult to say how the problem of poetry generation could ever be fully solved. Poems produced by template-based poetry generators can be indistinguishable from those produced by human authors, but there might still be many more approaches to study that may more closely mimic human behavior, may work more efficiently, or may produce “better” poetry.

Of course, it is still valuable to quantify how much of the work is being done by the humans involved and how much by the computers. It is also valuable to explore how a poetry generator’s output could be inherently meaningful rather than meaningful only as a result of the human’s efforts; this is an open AI research question that is only recently being addressed through approaches to subjectivity recognizable to humanists (de Vega et al. 2008). Some of the more interesting of these approaches to meaningful language use emphasize the importance of human-program coordination (DeVault 2006), which is another argument for interactivity.

Programmers of poetry generators perform a poetic task related to that of Queneau’s “100,000,000,000,000 Poems”: authoring not a single poem, but a (potentially infinite) set of poems by using a computer. Interactive poetry generators enable end-users to identify the poems that they prefer in that set of potential poems. End-users are heuristics, guiding the generation algorithms toward the optimal solutions for their current reading situation.

So, computer poetry generation unavoidably involves a human author. Given this, it is useful to explore the possibilities of the various humans involved in the poetry-making, -distribution, and -reading process: not only the programmer, but non-programmers who may be the end-users of the generator, and who may also be the ultimate readers of the poems. With poetry generation, the end-user is also acting as an editor, mediating the re-writing of existing texts (for corpus-based methods) or production rules (for grammar-based methods) as constrained by poetic forms and interaction methods implemented by the programmer. This approach to poetry is empowering to all involved. The end-user obtains new ways of experiencing poetry, the programmer is provided with users to explore and master the generators, and the source texts used as corpora are appreciated in new ways.

The final suggested approach to poetry generation is: leverage online communities.

As discussed in Section 1, there are a number of traditions in poetry generation, several of which interact in limited ways. It is important to acknowledge the roles that poets, theorists, programmers, and researchers all play in poetry generation. Doing otherwise risks the danger of duplicating effort or pursuing approaches that have already been exhausted.

However, there is no overarching poetry-generation community to provide conferences or printed publications that will bridge the relevant disciplines. Online communities such as those hosted on group blogs, discussion boards, or e-mail lists fill this void by allowing inexpensive communications between traditions with varying degrees of formality. Discussions that may be hampered in person by specialized jargon can be made easier through informal explanations or references to explanatory online resources. Because these discussions are asynchronous, they enable participation with a minimal initial commitment. Finally, conducting these discussions online greatly increases the likelihood that their results will be archived indefinitely. In this way, future generations will discover the efforts of today more easily than contemporary researchers discover the efforts of previous generations.

3. Case Studies

3.1 Introduction

Section 1 described the various traditions of poetry generation, and Section 2 provided suggestions that acknowledge the reality constructed by contemporary language technology. This section provides case studies of a poetics that follows from this context. Unless otherwise noted, the generators and poems in this section were developed by me, posting under the pen name edde addad on the group blog Gnoetry Daily during 2010 and early 2011 (Gnoetry Daily 2011). Using my own work as examples allows me to describe relevant details about the authoring process; to increase generalizability, work by other poets plays a central role in each example.

To emphasize: This paper is not advocating that this poetics be privileged over others. Rather, this approach is being presented as one that has describable benefits that may be of interest to other poetic practices.

3.2 ASR Feedback Failures

In Automated Speech Recognition (ASR) technology, a human’s spoken words are transcribed in real-time through a microphone linked to a computer. ASR systems can be robust when trained to a specific user on a specific set of words and used in an otherwise-quiet environment, but in any other case, they can produce transcriptions with numerous errors.

This method of poetry generation was developed while exploring the capabilities of consumer ASR systems. It is related to the “telephone game” (described as “Chinese Whispers” by Oulipo) in which a set of humans whisper a message to one another in sequence; because of comprehension errors, the message received by the final human usually bears little resemblance to the initial message. In the case of ASR Feedback Failures, the human speaks a brief sentence to the ASR system, looks at the transcription it produces, and then reads that transcription back into the ASR system. The process is repeated as needed. Each sentence transcribed becomes a line in the poem, with equivalent lines (in which there were no ASR errors) removed. Each initial line is used as the seed of a stanza, and the lines are presented in reverse order.
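The assembly of a poem from repeated transcriptions, as described above, can be sketched in Python. The `transcribe` argument is a hypothetical stand-in for the human-plus-ASR step (speaking a line and reading back what the system produced); it does not correspond to any real ASR API. The `toy_transcribe` function is likewise invented, and is deterministic only so that the example is reproducible.

```python
def feedback_stanza(seed, transcribe, rounds=4):
    """Build one stanza by feeding each transcription back into the system."""
    lines = [seed]
    for _ in range(rounds):
        heard = transcribe(lines[-1])
        # Equivalent lines (no recognition errors) are left out of the poem.
        if heard != lines[-1]:
            lines.append(heard)
    # Present the lines in reverse order, so the seed comes last.
    return list(reversed(lines))


def feedback_poem(seeds, transcribe, rounds=4):
    """One stanza per initial line, separated by blank lines."""
    stanzas = [feedback_stanza(seed, transcribe, rounds) for seed in seeds]
    return "\n\n".join("\n".join(stanza) for stanza in stanzas)


def toy_transcribe(sentence):
    """Invented stand-in for a noisy recognizer: swaps a few homophones."""
    swaps = {"write": "right", "right": "rite", "here": "hear"}
    return " ".join(swaps.get(word, word) for word in sentence.split())


print(feedback_poem(["i write here"], toy_transcribe, rounds=3))
```

In actual use the loop would be driven by a person at a microphone rather than by a function call, and the number of rounds would be chosen by ear rather than fixed in advance.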

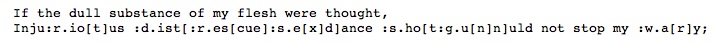

Figure 3.1 shows an example of a poem produced with this technique using Dragon NaturallySpeaking 9 (DNS9) on Windows XP with music being played in the background. To its credit, DNS9 performed well even without training a custom speaker model, and the background noise had to be increased considerably to create an appropriate number of errors.

The initial sentences of “Disappointment and Self-Delusion,” shown in italics, were taken from one of the final letters of a physics postdoc who murdered several academics and administrators during a school shooting before killing himself (Beard 1999). In method and tone, “Disappointment and Self-Delusion” is similar to the google-sculpting of Flarf poetry (Swiss and Damon 2006) in which the results of Google queries are used as a basis for poems. In both cases, the technique uses a pre-existing language technology resource as a compositional tool to produce a provocative text. In the case of ASR Feedback Failures, the method produces a text with initially incomprehensible lines whose ASR-error noise is gradually reduced to reveal a coherent message. In this way, the ASR Feedback Failures method appropriates a technological shortcoming, making art of error.

One way of approaching this method is considering the language models involved. Contemporary ASR uses acoustic models, which represent how a given speaker pronounces a given phoneme, and language models, which represent the types of words that a given speaker might use. In the example above, I did not train the acoustic or language models to my voice and document, so the new lines of this poem were generated:

- By errors in the ASR’s use of its generic acoustic model (i.e., how the ASR software developers believe their customers speak to the world)

- By errors in the ASR’s own generic language models (i.e., how the ASR software developers believe their customers use language)

- Based on a set of initial lines (i.e., the language used by a mentally unstable physics researcher)

- Expanding a text that will be forever hidden (i.e., the language used by that physics researcher in the letters and death notes that have been suppressed)

Future work might involve understanding the connections between each of those language models, down to a formal level equivalent to the mathematics used in the acoustic and language models of the first two items and the grammatical level possible with the last two, and tying them to all the other language models involved, such as those of the author, the reader, and other members of language-use communities that work together to make meaningful language use possible. Note that the problem here is not that of generating the “hidden text” of the suppressed letters; that might involve developing a generative language model (grammar-based or statistical) of mentally unstable researchers, and determining what type of sentences followed sentences like the seed texts above. Instead, the problem is one of finding ways that these representations could be used for generating interesting poems.

3.3 Codework: Parenthetical Insertions and Pseudohaiku

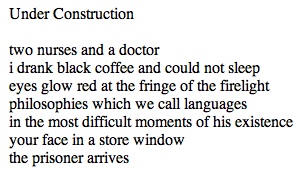

The codework tradition in contemporary poetry uses symbols and formatting inspired by the ways that computers mediate and mangle texts (Cayley 2002; Raley 2002). The codework community heavily relies on the Internet for communication and poetry distribution, thus providing a tradition that is easy to investigate and build on. Figure 3.2 shows a representative codework line by the poet Mez.

The line contains a number of substrings divided by formatting symbols to create a number of possible strings to be read: “Art,” “Arthroscopic,” “robotic,” “Nintendo,” “ten,” “dos,” and “intentions,” most obviously. The result’s aesthetic beauty lies both in its physical presentation and in the way it opens four rather mundane words to a multiplicity of readings. However, most codework is completely human-authored. This section considers two ways in which the generation of codework such as that shown in Figure 3.2 can be assisted by computational techniques.

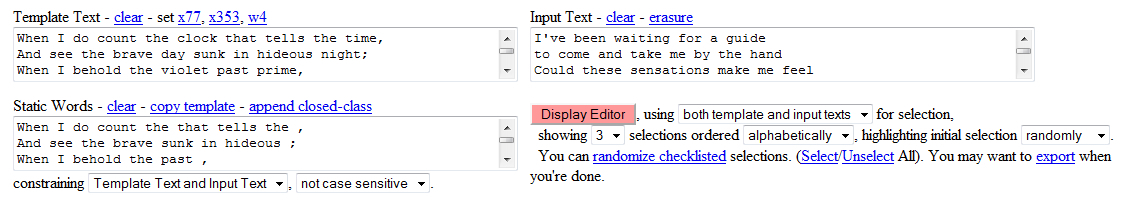

The first technique considers the ways in which the substring “botic” is inserted into “arthroscopic” to create the suggested word “robotic,” and “tions” is appended to “Nintendos” to create the suggested word “intentions.” This suggests the generalized approach of Parenthetical Insertions. Given a list of words to consider inserting and an existing poem, a program searches for substrings that a candidate word has in common with any word in the poem, and inserts the remainder of the candidate word using punctuation. For example, Figure 3.3 shows the results of using the words “riot, rescue, sex, distressed, shotgun, gun, war” as parenthetical insertions to the first two lines of a Shakespearean sonnet.

In the second line, the method finds that the original word “Injurious” has the substring “rio” in common with “riot,” which triggers the insertion of the substring “t” to create “Inju:r.io[t]us”. The method was implemented using a Perl script to create Mappings files for the JanusNode poetry tool (Westbury 2003). The period in “Inju:r.io[t]us” was added to prevent the repeated identification of the “rio” substring, which would trigger JanusNode to create “Inju:rio[t][t][t][t]us”. However, adding the period made it difficult for the reader to identify the inserted word “riot,” so a colon was added to highlight where the inserted word began. The first line had no replacements because there were no common substrings.

The word “:d.ist[:r.es[cue]:s.e[x]d]ance” in Figure 3.3 shows how the method can produce multiple insertions. First, the script finds the words “distance” and “distressed” have the common substring “dist”; this creates “:d.ist[ressed]ance.” Second, the script finds that “:d.ist[ressed]ance” and “rescue” have the common substring “res”; this creates “:d.ist[:r.es[cue]sed]ance”. Finally, the script finds that “:d.ist[:r.es[cue]sed]ance” and “sex” have the common substring “se”; this creates “:d.ist[:r.es[cue]:s.e[x]d]ance.” This final word contains not only the original and inserted words, but also the suggested words “cue” and “dance.”
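The insertion step can be sketched in a few lines of Python. This is only an illustrative reconstruction, not the Perl script or the JanusNode Mappings files used for the poems here. The function name `insert_parenthetical`, the `min_overlap` parameter, and the assumption that the shared substring is always a prefix of the inserted word (as it is in the “riot,” “distressed,” “rescue,” and “sex” examples) are all hypothetical choices made for the sketch.

```python
def insert_parenthetical(word, insertion, min_overlap=2):
    """Mark `insertion` inside `word`, or return None if nothing is shared.

    Assumption: the shared substring is a prefix of `insertion` that is
    at least `min_overlap` characters long; longer prefixes are tried first.
    """
    lowered = word.lower()
    for k in range(len(insertion) - 1, min_overlap - 1, -1):
        prefix = insertion[:k]
        pos = lowered.find(prefix)
        if pos == -1:
            continue
        shared = word[pos:pos + k]
        # A colon marks where the inserted word begins; the period breaks up
        # the shared substring so that it is not matched a second time.
        marked = ":" + shared[0] + "." + shared[1:] + "[" + insertion[k:] + "]"
        return word[:pos] + marked + word[pos + k:]
    return None


# Chained insertions on a single word.
word = "distance"
for insertion in ["distressed", "rescue", "sex"]:
    marked = insert_parenthetical(word, insertion)
    if marked is not None:
        word = marked
print(word)  # :d.ist[:r.es[cue]:s.e[x]d]ance
```

Because the period splits the shared substring, running the same insertion over an already marked word finds nothing further to insert, which mirrors the reason the period was introduced.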

The effects of this method are highly dependent on the set of words to consider inserting. An issue with the initial set of words used during development provides an example of the ways that “traditional” scholarship sometimes hinders intellectual endeavors, and the ways that new technologies circumvent these hindrances. The initial set of words was taken from a set that psychologists identified as “high arousal” words in terms of affective response in human subjects. The study authors will only directly release the data set to non-student researchers affiliated with an academic institution who request the data via an online form, to which an answer is promised “[w]ithin 30 days of receiving your request” (Bradley and Lang 2010). However, waiting this amount of time would have delayed and potentially derailed the development of the codework insertion technique, which was initially explored during a late-night programming session. Furthermore, requesting the resource as part of an academic institution potentially provides that institution with a claim to the intellectual property of the method, which would complicate the eventual free distribution of the method’s implementation. Finally, the data was published in table form as part of a pdf file (Bradley and Lang 1999), which was then processed by a third party to produce a file in comma-separated-value form (Lee 2010). In fact, this was the format that my web search first found, and which I used in the exploration that suggested the promise of the method. After a full examination of the situation, I decided to avoid the Bradley and Lang data to forestall potential problems. So, currently, lists of words to insert are manually selected from a corpus. For example, the words inserted in the second verse of the poem in Figure 3.4 below were generated by identifying the unique words in Joseph Conrad’s “Heart of Darkness” and manually removing those that did not seem promising.

The second technique inspired by the codework line in Figure 3.2 considers creating lines of overlapping syllable-like substrings. This suggests a Pseudohaiku, a three-line verse whose first and last lines contain five substrings, and whose second line contains seven substrings. Figure 3.4 shows a poem whose first and last stanzas are pseudohaiku. The line “we][st][r][ong][oing” was created from the words “west,” “strong,” and “ongoing,” and includes the created word “we” as well as highlighting the visual near-repetitions “ong” and “oing.”

We may define a “strict” pseudohaiku line as one in which every substring with two neighbors is created from exactly two words, for example, if we took the words “killed,” “ledge,” “gene,” and “neon” to create “kil][led][ge][ne][on.” The pseudohaiku lines in Figure 3.4 are “normal” lines, meaning that some substrings with two neighbors only create one word, such as “[r]” in “we][st][r][ong][oing.”
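One way to assemble such a line is to chain words whose endings overlap the beginnings of the words that follow them. The Python sketch below is a hypothetical reconstruction, not the script used for Figure 3.4; the function names and the greedy choice of the longest available overlap are assumptions. It takes an already ordered list of words and returns the substrings of the resulting line.

```python
def overlap(a, b, limit):
    """Length of the longest suffix of `a` (at most `limit` characters)
    that is also a proper prefix of `b`; 0 if there is none."""
    for k in range(min(limit, len(b) - 1), 0, -1):
        if a.endswith(b[:k]):
            return k
    return 0


def pseudohaiku_segments(words):
    """Split a chain of overlapping words into pseudohaiku substrings."""
    segments = []
    used = 0  # characters of the current word already emitted as an overlap
    for i, word in enumerate(words):
        if i + 1 < len(words):
            shared = overlap(word, words[i + 1], len(word) - used)
        else:
            shared = 0
        middle = word[used:len(word) - shared]
        if middle:
            segments.append(middle)  # belongs to this word only
        if shared:
            segments.append(word[len(word) - shared:])  # shared with next word
        used = shared
    return segments


print("][".join(pseudohaiku_segments(["killed", "ledge", "gene", "neon"])))
# kil][led][ge][ne][on
print("][".join(pseudohaiku_segments(["west", "strong", "ongoing"])))
# we][st][r][ong][oing
```

In the first example every interior substring is shared by two words, giving a “strict” line; in the second, “r” belongs only to “strong,” giving a “normal” line. A fuller generator would also need to search a word list for chains that yield exactly five or seven substrings.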

Parenthetical insertions and pseudohaiku can be combined to create a single poem, as shown in Figure 3.4. Stanzas 1 and 3 were generated by a Perl script that reads a text file and generates a number of pseudohaiku lines for the poet to select from. Stanza 2 is the result of interactive generation using the poetry generator WpN (described in Section 3.4 below), whose output was then modified by parenthetical insertions using JanusNode.

![Figure 3.4: “March 2011: y.ou[th]r Tremors work,” displaying codework pseudohaiku and parenthetical insertions](/j/jep/images/3336451.0014.209-00000015.jpg)

The poem in Figure 3.4 is part of the “Decline and Fall: 2011” project to generate poems from a corpus that combines current news articles with classical texts including Gibbon’s “Decline and Fall of the Roman Empire.” The pseudohaiku of verses 1 and 3 were generated from words in selected March 2011 Wikinews articles (Wikinews 2011). Verse 2 was generated from those Wikinews articles supplemented by Gibbon’s Chapter XXXVI: “Total Extinction Of The Western Empire,” with insertion words taken from Conrad’s “Heart of Darkness.” The intended effect of the “Decline and Fall: 2011” poems is to contextualize contemporary events as historical moments. The modified words create affordances for interpretation, which can be pessimistic (due to the Gibbon source and the subject matter of much contemporary news) or representative of the contemporary blend of cultures (due to juxtapositions such as “Omari” and “sphoeristerium”).

Generating poetry from online news sources introduces complications related to those of the affective data described above. Early codework poems with these techniques used articles from the Local News section of the Los Angeles Times, to depict contemporary life in Los Angeles (“Decline and Fall of the Southland Empire”). However, according to the Los Angeles Times’ Terms of Service, “. . .you may not archive, modify, copy, frame, cache, reproduce, sell, publish, transmit, display or otherwise use any portion of the Content. You may not scrape or otherwise copy our Content without permission” (Los Angeles Times 2011). Presumably, the poet has recourse to fair use, without which the Times’s Terms of Service itself could not be quoted and discussed, but it is not clear to what extent fair use covers the types of techniques discussed in this section. The fair use argument becomes weaker for potential future uses of these techniques, such as automated programs that generate pseudohaiku from online news sources and distribute them via RSS feeds. Fortunately, there are good substitutes such as the free news source Wikinews, whose license specifically allows remixing and distribution with attribution.

3.4 WpN

This section provides an example of exploring mathematical properties of poetry generation by developing a program. By implementing an algorithm, the poet-programmer commits to precise definitions of data sources and manipulations; a happy side-effect is the production of a resource for others to use.

WpN was developed to explore the nature of the Oulipo n+7 method, in which every noun in a poem is replaced by the seventh succeeding noun in a dictionary (Matthews and Brotchie 2005). Oulipo’s w±n method is a generalization in which any word type is replaced by the nth succeeding word of that type in a dictionary, where n is defined as part of the method; for example, every verb may be replaced by the fifth succeeding verb in a dictionary. WpN was developed to investigate what happens when w±n is further generalized to allow the replacement of any word with any other word from any other source in an arbitrary way. The “p” in WpN stands for “person,” emphasizing the human element. Loosening the constraints of n+7 and w±n allows the re-imposition of new constraints and generative methods, and a more precise understanding of the data sources and algorithms used for generation. As shown by the end of this section, it also highlights the relationship between n+7 and erasures.

WpN (pronounced “whuppin’” or “weapon”) begins by defining the relevant data sources.

- The Template Text, which in n+7 is the initial poem whose nouns are to be replaced

- The Input Text, which in n+7 is the dictionary (to be precise: an alphabetically ordered set of nouns) from which the seventh following noun will be selected

- The Static Words, which in n+7 is the set of all words in the poem that are not nouns

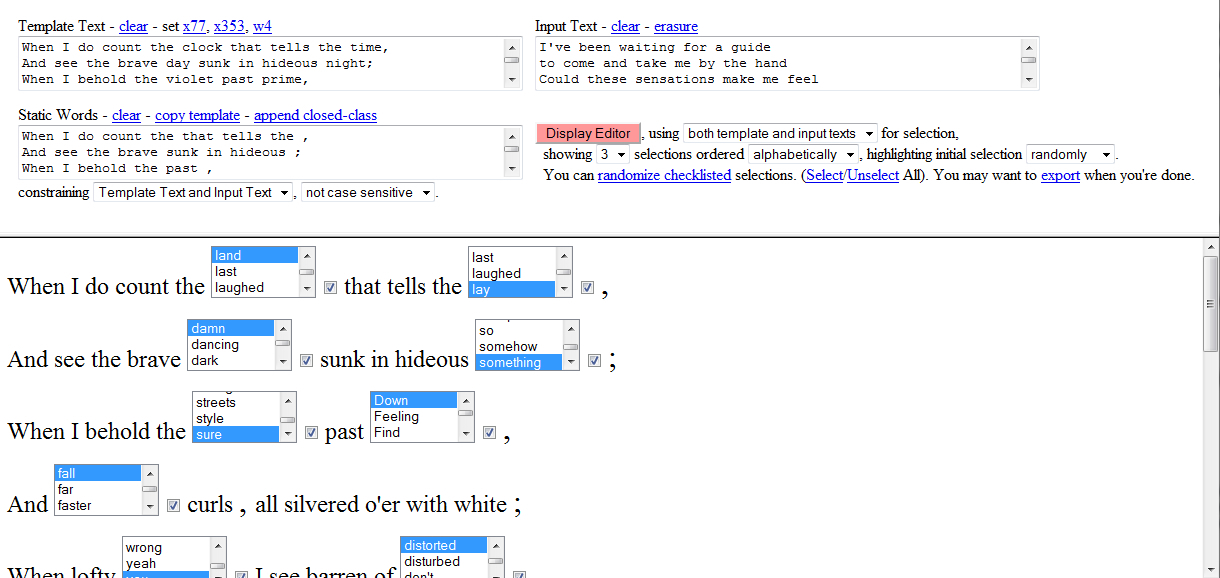

Figure 3.5 shows an HTML/JavaScript implementation of WpN, with a Shakespearean sonnet about to be used as the Template, lyrics from Joy Division’s “Unknown Pleasures” album as Input Text, and a copy of the Shakespearean sonnet with its nouns removed as the Static Words.

Clicking on “Display Editor” creates an interactive form in the bottom half of the interface. The basis for the form is the Template Text, whose every word that is not in the Static Text box is replaced with an HTML Select form element. The choices in each Select element are made up of the Input Text and/or the Template Text. The user can either use the initially suggested selections, manually scroll through the form to make a new choice, or click “randomize checklisted” to make new suggestions.

To use WpN for n+7, the user would paste a set of nouns into the “Input Text” area, select “highlight initial selection by original” to show the poem’s original nouns, and for each noun scroll down seven selections.

WpN generalizes the initial text of n+7 and w±n into a template that can contain arbitrary tokens to be replaced. As shown below, a simple four-line template provides the user with nine word choices to make.

WpN can be used for erasures (Macdonald 2009) by providing the original poem as Template Text, emptying the Static Text area, and providing a series of erasure words (such as ‘x’s) for Input Text. The user can then either replace each word with an erasure word, or remove the word completely.

WpN was designed to be a versatile tool: For example, words can be randomly generated or selected, and poems can be produced and exported to be used as templates for subsequent poems using different combinations of Input Texts or Static Texts. The second verse of the codework poem in Figure 3.4 was generated using WpN with a combination of random and human word selections, before the parenthetical insertions were made. This allowed a balance of random juxtapositions with nouns selected to highlight the contemporary nature of the text.

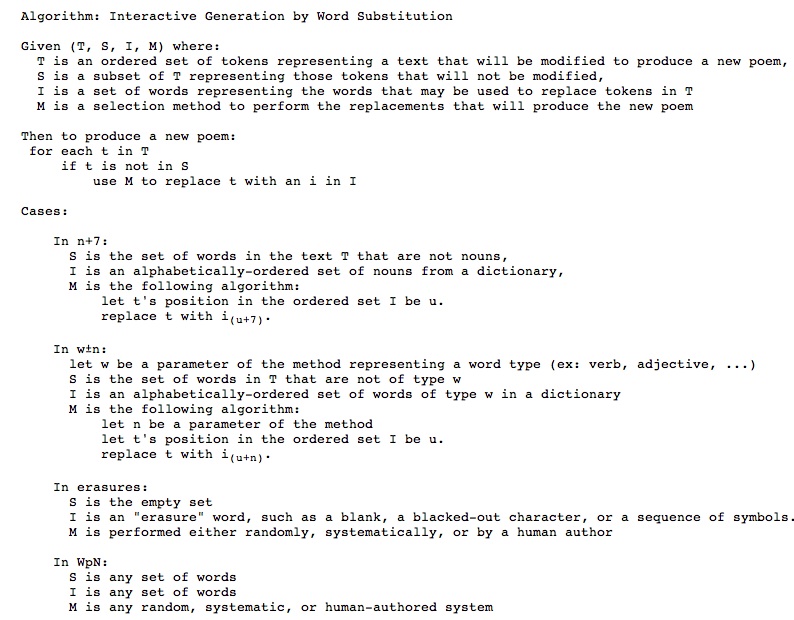

WpN was also developed as an exercise similar to a mathematical sketch on a scrap piece of paper to better understand a computational truth. As Figure 3.9 shows, n+7, w±n, erasures, and WpN share a set of data sources and a common basis for generation. What makes each approach unique is the constraints on its data sources and the way in which its generation algorithm selects replacement words.
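The shared basis can be made concrete with a minimal Python sketch in which the Template Text, the Static Words, and a replacement rule are the only moving parts. It is not the HTML/JavaScript code of WpN: the names `generate`, `n_plus`, and `erase_except` are hypothetical, the wrap-around at the end of the noun list is an assumption, and the human's interactive choices are reduced to a fixed rule for the sake of illustration.

```python
import bisect


def generate(template, static_words, choose):
    """Copy static tokens unchanged; pass every other token to `choose`.

    `choose(position, token)` returns a replacement, or None to drop the token.
    """
    output = []
    for position, token in enumerate(template):
        if token in static_words:
            output.append(token)
            continue
        replacement = choose(position, token)
        if replacement is not None:
            output.append(replacement)
    return output


def n_plus(nouns, n=7):
    """Rule for n+7: pick the nth noun after the token in alphabetical order
    (assumption: the list wraps around at the end of the dictionary)."""
    ordered = sorted(set(nouns))

    def choose(position, token):
        index = bisect.bisect_right(ordered, token) + n - 1
        return ordered[index % len(ordered)]

    return choose


def erase_except(kept_positions, mark="x"):
    """Rule for erasure: keep the chosen positions, cross out the rest."""
    def choose(position, token):
        return token if position in kept_positions else mark * len(token)

    return choose


template = "the bird saw the door".split()
nouns = ["apple", "bird", "cloud", "door", "eye",
         "fire", "gate", "hill", "ink", "jar"]

print(" ".join(generate(template, {"the", "saw"}, n_plus(nouns))))
# the ink saw the apple
print(" ".join(generate(template, set(), erase_except({1, 4}))))
# xxx bird xxx xxx door
```

Under this framing, n+7 and erasure differ only in the contents of the Static Words and in the rule passed as `choose`; an interactive tool would replace that rule with a person's selections.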

Class-, word-, and character n-gram models (as well as the limited n-gram approach of Dissociated Press) can also be considered special cases of this algorithm: “T” would represent the format of the desired output, “S” would be empty, “I” would be the words (or characters) that make up the language model, and “M” would be constrained by the type of n-gram under consideration. The cut-up techniques of Tzara can similarly be considered variations of word n-grams, and Mac Low’s diastic approach can be seen as a word unigram model with additional character-level constraints. However, that modeling is left for future work, as there are several details that remain to be worked out. For example, it seems desirable to have a more formal definition of the types of language elements that make up the sets T, S, and I, to provide enough detail for the constraints in the algorithms in M. Also, it would be beneficial to describe more formally the types of actions that the human and the algorithm can make in M.

At any rate, this suggests an approach toward developing a taxonomy of interactive poetry-generation approaches. By formalizing the data sets and algorithms used by a given poetry-generation approach, we may develop classifications that build on the categories and classifications suggested by others (Montfort 2003; Funkhouser 2007), and come to a more precise definition of what contributions to the output text are made by the human as opposed to the algorithms.

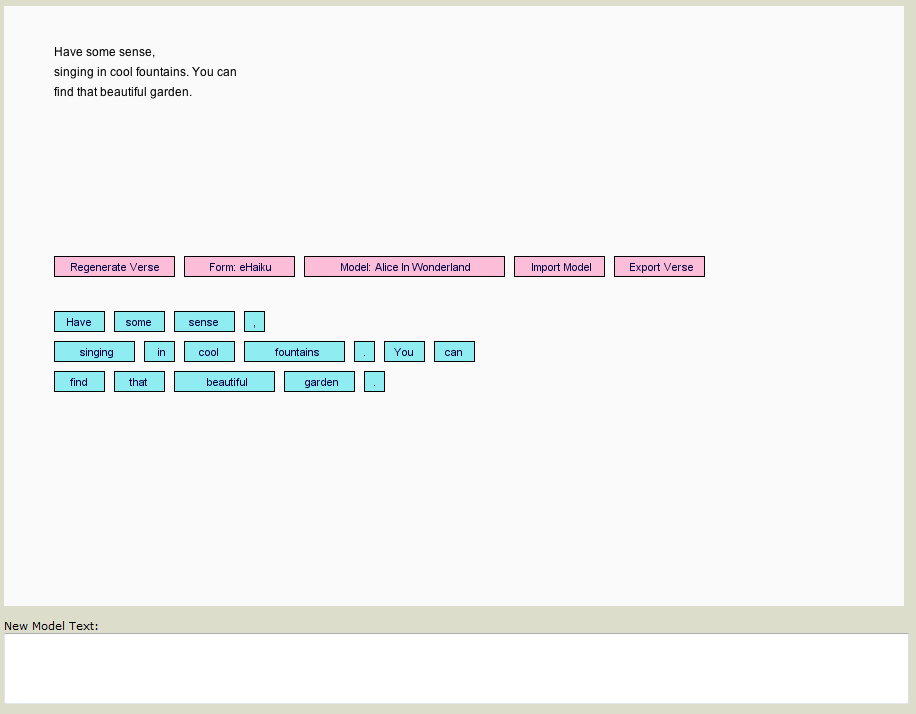

3.5 eGnoetry

The Gnoetry n-gram poetry generator introduced in Section 1.2 is defined by an interface that allows the human to select sets of words for regeneration. This interface allows the human to build meanings or seek interesting juxtapositions while constrained by probabilistic language models of the source text. This is in contrast to generators such as Dissociated Press and JanusNode, which output n-gram lines for the human to select from. Because of this level of interactivity, Gnoetry is a good first tool for newcomers to interactive poetry generation.

However, Gnoetry was developed as a Python module in Linux and never packaged for distribution to other platforms, which limits its potential user base. eGnoetry was developed in response to this, and provides an example of how language technology infrastructure enables poetry-generator implementations.

eGnoetry, whose interface is shown below, is a variation on the original Gnoetry, whose interface is shown in Figure 1.10. They both display the text of the poem being composed, as well as an interface that allows the selection of words to be re-generated. However, while Gnoetry allows the selection of several words for simultaneous re-generation, eGnoetry only allows the re-generation of a single word at a time.

Also, when Gnoetry re-generates a set of words, the first word in the sequence it re-generates will always make up a bigram with the word before it and the last word in the sequence it re-generates will always make up a bigram with the word after it, while only the first of these is true with eGnoetry. That is, given an ordered set of words $w_n, \ldots, w_{n+m}$ to regenerate, Gnoetry will generate words such that $(w_{n-1}, w_n)$ and $(w_{n+m}, w_{n+m+1})$ are in the bigram language model, but given a word $w_n$ to regenerate, eGnoetry will only guarantee that $(w_{n-1}, w_n)$ will be in the bigram language model. But even in the worst-case scenario, if an eGnoetry user clicks each word from the end of the poem to the beginning and by chance generates a poem with no $(w_n, w_{n+1})$ bigrams in the language model, the output will still be consistent with a unigram language model on the same corpus.
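The difference between the two constraints can be illustrated with a small Python sketch that regenerates a single interior word. It is not taken from Gnoetry, eGnoetry, or RiTa: the function names, the toy corpus, and the simplification to one word at a time are assumptions, and candidates are chosen uniformly rather than weighted by bigram frequency.

```python
import random


def build_successors(tokens):
    """Map each word to the set of words that follow it in the corpus."""
    successors = {}
    for left, right in zip(tokens, tokens[1:]):
        successors.setdefault(left, set()).add(right)
    return successors


def candidates(poem, i, successors, both_sides):
    """Replacements for poem[i], where 0 < i < len(poem) - 1.

    Left-only (eGnoetry-like): the new word forms a bigram with poem[i - 1].
    Both sides (Gnoetry-like): it also forms a bigram with poem[i + 1].
    """
    options = successors.get(poem[i - 1], set())
    if both_sides:
        options = {w for w in options
                   if poem[i + 1] in successors.get(w, set())}
    return sorted(options)


def regenerate(poem, i, successors, both_sides, rng):
    """Return a copy of the poem with word i replaced, if any candidate exists."""
    options = candidates(poem, i, successors, both_sides)
    new_poem = list(poem)
    if options:
        new_poem[i] = rng.choice(options)
    return new_poem


corpus = "the cat sat on the mat and the dog sat on the log".split()
model = build_successors(corpus)
poem = ["the", "cat", "sat"]

print(candidates(poem, 1, model, both_sides=False))  # ['cat', 'dog', 'log', 'mat']
print(candidates(poem, 1, model, both_sides=True))   # ['cat', 'dog']
print(regenerate(poem, 1, model, both_sides=True, rng=random.Random(0)))
```

With the left-only constraint, “log” and “mat” are possible replacements even though neither is followed by “sat” anywhere in the corpus, which is how a poem can drift away from the bigram model on its right-hand side.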

Also, while Gnoetry allows the user to submit weights for a set of texts for language modeling, eGnoetry only allows a work-around of this in which the user pastes multiple copies of a text into its ‘New Model Text’ text area.

eGnoetry was implemented using the Processing toolkit (Fry and Reas 2004) with the RiTa (Howe 2009) and g4p (Lager 2009) libraries. This set of tools provides a Java-like language and an interface that exports Java applets that can then easily be uploaded to the web for distribution. In particular, RiTa provides a set of classes for n-gram modeling and generation, which allowed the development to focus on the interface. Java plug-ins are not as widely installed in web browsers as Flash or JavaScript interpreters are, but they are nevertheless more common than Python interpreters and the interested user is likely to find a Java-enabled browser at some point at home, school, or work.

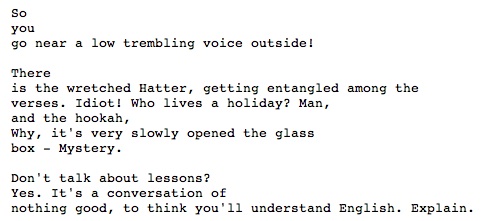

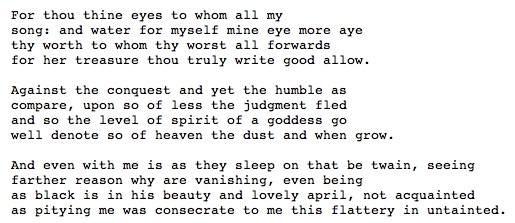

Figure 3.11 below shows an example of a poem written interactively with eGnoetry. It highlights the importance of source material on the poem’s tone and how a user can suggest a narrative that is coherent yet open to a number of different readings.

Although the poem in Figure 3.11 is hardly Great Literature, it was generated interactively in well under five minutes and provided a variation on Alice in Wonderland that was at least as interesting as anything that was on TV at the time. This usage is in line with the vision of Gnoetry co-developer Eric Elshtain.

One of the best moments I have witnessed with Gnoetry occurred during one of our early field tests—a gentleman who is a self-proclaimed “non-artist” reluctantly used the software and announced that he “felt like a poet,” that he had a hand in making language do interesting things. Imagine people using (abusing?) work hours composing poetry using Web-based computer-generated poetry software rather than playing yet another round of FreeCell. (Elshtain and Sebastian 2010)

Gnoetry or eGnoetry are not likely to surpass the Microsoft-distributed FreeCell in popularity, but they point to a genre of poetry generators whose level of interaction is suited to casual use. Future work in this genre includes exploring a range of interface affordances to create a fun and productive game-like experience.

3.6 ePoGeeS

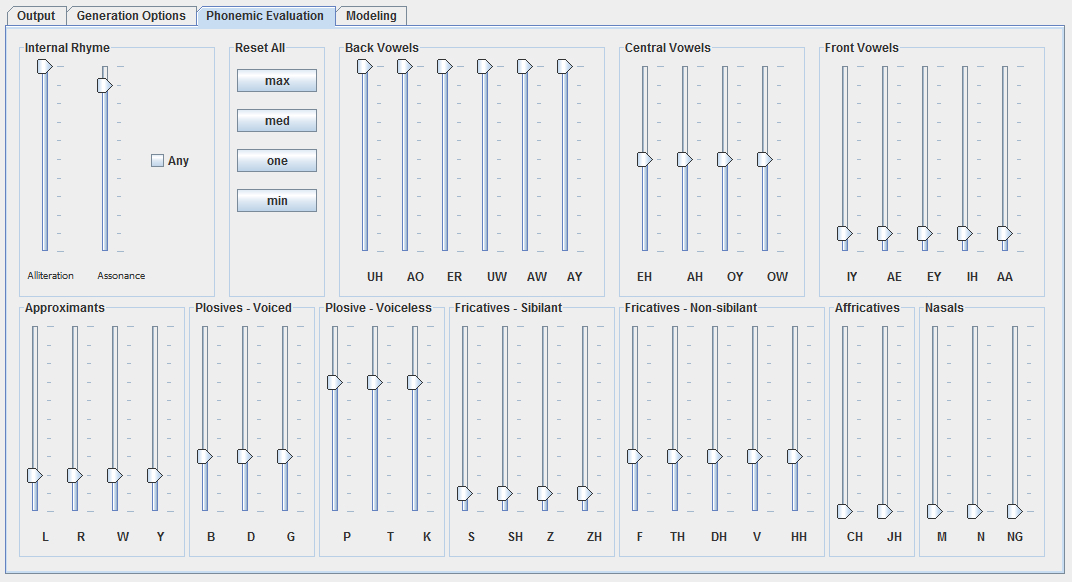

ePoGeeS is a Poetry Generation Sketchbook that can generate stanzas, lines, words, and rhymed words. Generation is done from a bigram or class-based language model, and while you’re working on a poem you can examine a list of all possible next or previous words in the language model.

The user can change several verse-generation variables such as number of lines and rhyme scheme. Each individual line is actually generated from a number of possible candidates that are then evaluated and ranked; you can select random sampling or stochastic beam search to manage the evaluation. Evaluation of candidate lines is done by analyzing the phonemes that make up the sounds of the words in the candidate line. The human user can set the sound types that are more likely to end up in the final candidate line selected.

The default language model is Shakespeare’s sonnets. The user can create new word language models by pasting in text and clicking “build.” The user can also examine various aspects of the language, phoneme, and rhyme models. Finally, ePoGeeS was developed as a Java applet, making it available to others, although its primary purpose was for my own poetry generation.

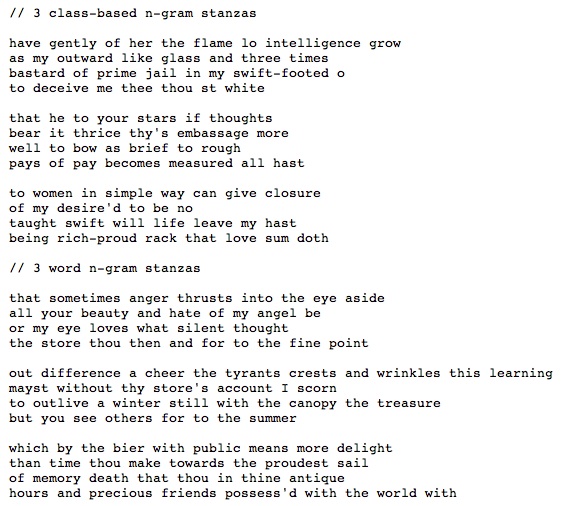

ePoGeeS also contains a class-based n-gram language model built on Shakespeare’s sonnets. Appendix A contains a description of class-based n-grams; briefly, they are built from sequences of parts-of-speech rather than sequences of words. ePoGeeS used a corpus of the sonnets that had been part-of-speech tagged using the Stanford Tagger (Toutanova et al. 2003) to build the language model. Figure 3.13 shows three verses built from a class-based n-gram model, compared to three verses built from a word n-gram model. These verses have not been edited or culled in any way; they are shown as the generator output them.

Class-based n-grams contain adjacent words that the source text’s author never put together, creating less local coherence. For example, the pair “have gently” from the class-based verses does not occur in Shakespeare’s sonnets, while “that sometimes” and every other pair of adjacent words in the word verses does. The local coherence of word verses comes with a disadvantage, though; the line “that sometimes anger thrusts into the eye aside” has five overlapping words with the original Shakespeare line, “That sometimes anger thrusts into his hide.” This is a danger of relatively small corpora, as described in Appendix A. Studying the output of various algorithms in this way creates an approach to poetry related to that of the Language poets: for example, meaning in the act of creation, and an appreciation for non-intentional meanings (McGann 1988).
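The loss of local coherence can be seen in a toy Python sketch of class-based generation. It is not ePoGeeS code: the hand-tagged eight-word corpus, the Penn Treebank-style tags, and the function names are assumptions made for illustration, whereas the tool itself worked from sonnets tagged with the Stanford Tagger.

```python
import random

# Hypothetical hand-tagged corpus of (word, part-of-speech) pairs.
TAGGED = [("the", "DT"), ("sweet", "JJ"), ("rose", "NN"), ("fades", "VBZ"),
          ("a", "DT"), ("dark", "JJ"), ("night", "NN"), ("falls", "VBZ")]


def build_class_model(tagged):
    """Return tag-to-following-tags and tag-to-words tables."""
    tag_next = {}
    words_by_tag = {}
    for (_, tag), (_, following) in zip(tagged, tagged[1:]):
        tag_next.setdefault(tag, []).append(following)
    for word, tag in tagged:
        words_by_tag.setdefault(tag, []).append(word)
    return tag_next, words_by_tag


def generate_class_based(tag_next, words_by_tag, start_tag, length, rng):
    """Walk the tag bigrams, then fill each tag with any word of that class.

    `start_tag` must be a tag that occurs in the corpus.
    """
    tags = [start_tag]
    while len(tags) < length and tags[-1] in tag_next:
        tags.append(rng.choice(tag_next[tags[-1]]))
    return [(rng.choice(words_by_tag[tag]), tag) for tag in tags]


tag_next, words_by_tag = build_class_model(TAGGED)
line = generate_class_based(tag_next, words_by_tag, "DT", 4, random.Random(1))
print(" ".join(word for word, _ in line))
```

Every adjacent pair of tags in the output occurs in the corpus, but the words filling them are drawn independently, so a pair such as “dark rose” can appear even though the source never contains it.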

As shown in Figure 3.14, ePoGeeS provides an interface through which the user can determine the phonemes that are likely to be in the lines being generated. This is implemented by analyzing the phonemes in a line and computing a weighted score for the line based on how many of the requested phonemes are present. ePoGeeS supports random sampling, in which a number of lines are generated and the highest-scoring line is selected. ePoGeeS also supports stochastic beam search, in which a number of lines are generated and scored, the best are kept, one word per line is changed, and the lines are scored again; this is done for a number of turns. This is in the tradition of phonemic analysis (Plamondon 2005; Hervás et al. 2007) and is implemented using the CMU Pronouncing Dictionary (Carnegie Mellon University 2008).
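A minimal Python sketch of phoneme-weighted selection follows. It is not the ePoGeeS implementation. The five-word lexicon is hand-entered in ARPAbet-like notation with stress marks omitted, rather than loaded from the CMU Pronouncing Dictionary; the set of back vowels is an assumed grouping; and the beam search changes words by drawing at random from a vocabulary, where a real generator would presumably draw from its language model. Survivors are carried over unchanged alongside their altered copies, which is a design choice of this sketch so that the best score never decreases.

```python
import random

BACK_VOWELS = {"AA", "AO", "OW", "UH", "UW"}  # assumed grouping

# Hypothetical toy lexicon; words missing from it score zero.
LEXICON = {
    "moon": ["M", "UW", "N"],
    "road": ["R", "OW", "D"],
    "see": ["S", "IY"],
    "bed": ["B", "EH", "D"],
    "sun": ["S", "AH", "N"],
}


def score_line(words, weights, lexicon=LEXICON):
    """Sum the weights of the requested phonemes found in a line."""
    total = 0.0
    for word in words:
        for phoneme in lexicon.get(word.lower(), []):
            total += weights.get(phoneme, 0.0)
    return total


def random_sampling(candidate_lines, weights):
    """Pick the highest-scoring line from a set of generated candidates."""
    return max(candidate_lines, key=lambda line: score_line(line, weights))


def beam_search(population, vocabulary, weights, keep=2, turns=5, rng=None):
    """Keep the best lines, change one word in a copy of each, and repeat."""
    rng = rng or random.Random()
    for _ in range(turns):
        ranked = sorted(population,
                        key=lambda line: score_line(line, weights),
                        reverse=True)
        survivors = ranked[:keep]
        changed_lines = []
        for line in survivors:
            changed = list(line)
            changed[rng.randrange(len(changed))] = rng.choice(vocabulary)
            changed_lines.append(changed)
        population = survivors + changed_lines
    return random_sampling(population, weights)


weights = {phoneme: 1.0 for phoneme in BACK_VOWELS}
lines = [["see", "bed"], ["moon", "road"], ["sun", "moon"]]
print(random_sampling(lines, weights))  # ['moon', 'road']
print(beam_search(lines, list(LEXICON), weights, rng=random.Random(0)))
```

Raising or lowering individual entries in `weights` plays the role of the interface controls that make some sound types more likely than others in the selected line.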

This process is full of errors because it relies on a pronunciation dictionary: Of the 3,229 unique words in Shakespeare’s sonnets, 2,605 have pronunciations in the dictionary and 624 do not. In this case, the errors are not prohibitive, because the 81 percent of the words that are in the dictionary are enough to effectively influence the output, and those words without pronunciations tend to be selected against. However, some of the pronunciations are ambiguous, and it is likely that sometimes the wrong pronunciations are identified.

ePoGeeS’s phonemic analysis enables the production of sound poetry, as practiced by Dadaists and Futurists, in which “the phonematic aspect of language became finally isolated and explored for its own sake” (McCaffery 1978). Figure 3.15 shows a poem written in the sound poetry tradition. The first stanza selected for back vowels, the second stanza selected for central vowels, and the third stanza selected for front vowels: This is the Backwards Swallowing method. For each stanza, I generated several candidates using stochastic beam search at various population and iteration settings, and selected the best for each stanza.

Figure 3.16 shows the percentage of phonemes by type per stanza. For example, Stanza 1, which emphasized back phonemes, ended up with 88.9 percent (or 32) of its phonemes being back vowels. Also, Stanza 1 had 100 percent of its unique words in the dictionary (note that this is unique words: for example, “for” occurs twice in the first stanza, but is only counted once for word coverage). Phoneme types in the entire corpus are also shown; phoneme groups are almost evenly distributed, slightly favoring front phonemes.

| Data | Back Phonemes | Central Phonemes | Front Phonemes | Word Coverage |
| --- | --- | --- | --- | --- |
| Stanza 1 | 88.9% (32) | 8.3% (3) | 2.8% (1) | 100% (26) |
| Stanza 2 | 0% (0) | 86.4% (38) | 13.6% (6) | 100% (25) |
| Stanza 3 | 10.2% (5) | 10.2% (5) | 79.6% (39) | 100% (34) |
| Corpus | 29.6% (5968) | 32.4% (6520) | 38.0% (7653) | 80.7% (2605) |

Figure 3.16: Distribution of phonemes in poem from Figure 3.15

ePoGeeS allows interactive generation in a number of ways. Figure 3.17 shows one approach, in which a core of a poem is generated under close human supervision, after which the rest of the poem is generated with fewer chances for the human to intervene. The goal is to balance the human’s contribution with the generator’s.

Figure 3.17: Method 770ac8b0-5626-426c-bb79-1adf9ad13324 (human accommodating increasing levels of machine randomness)

The method is indexed by a UUID followed by a text description, an approach useful for cataloging the numerous ways of working with interactive poetry generators. Figure 3.18 shows an example of a poem generated with this technique, and further post-processed with Method bf61fb24-0840-46bb-bfb2-3f5f5b4c8891 (adding punctuation to improve TTS output) in which punctuation and capitalization are added to improve the performance of a Text-To-Speech (TTS) engine while speaking the poem.

In Figure 3.18, Stanzas 2 and 4 were the ones in which the human’s contribution was constrained to removing 1 line per stanza. This forced the use of the odd (although suggestive) phrase “a horse has the fellow,” because the alternative added proper nouns in ways that were even worse. But the overall result was to create a poem that has some discourse coherence, but still maintains enough generated juxtapositions to allow a multiplicity of readings.

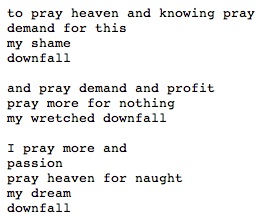

Another authoring method leverages ePoGeeS’s ability to suggest words that occur before and after a given word.

The effect is to have a phrase or sentence surrounded by words from a possibly unrelated language model. Figure 3.20 below provides an example.

In Figure 3.20, the words “pray and pray for my downfall” are taken from a song by Christopher Wallace (stage name: The Notorious BIG) and recited by rapper Darryl McDaniels (stage name: DMC), and are surrounded by words from Shakespeare’s Othello. The word “downfall” is not in Othello, so there is no bigram with it in the language model, and as a consequence there are no words to be selected after it in the poem. The seed text creates a repetition that provides some coherence to the poem; the method constrains the user’s selections enough to ensure some number of juxtapositions, which is balanced by a coherence ensured by the human user. This particular poem contains a poignancy in the way it reflects both Othello’s tragic end and Wallace’s own death in a drive-by shooting.

A number of other poems and methods were authored using ePoGeeS, which contains a number of features not discussed here. ePoGeeS was conceived of and used as a sketchpad, a tool that was added to as needed. Because of the initial decision to develop it as a Java applet, it can easily be accessed and used by others, although it contains idiosyncrasies due to its focus on the needs of the poems it was used to generate.

Future directions for ePoGeeS-style interactive poetry generation include the application of more NLP/CL/AI techniques, such as in information extraction, advanced parsing, and discourse and narrative models. Tying poetry generation to tools that closely analyze text is also promising. Finally, qualitative evaluation of poetry is a major open problem.

4. Conclusions

This paper describes a tradition of interactive poetry generation stemming from early work with computers, experimental poetics, recreational programming, and language research. This poetics is enabled by language technologies such as algorithmic approaches to modeling and generating language. Central to this effort is using the Internet to distribute, discuss, and archive methods, programs, and poems. Poetry generators create sets of potential poems, which do not exist without users. Poetry generators never die so long as they are remembered and re-implemented as technology evolves.

Poetry generation feeds on everyday texts such as news stories and song lyrics, but since the time of Lutz it also acknowledges the importance of classical texts. Personally, when developing new techniques, I frequently use Shakespeare as a “Hello World” or first set of source texts as a way of paying homage. The approach of this poetry generation to classical texts is one of humility and sincerity. When approaching these classical texts, all I have to offer is my knowledge of language and computation.

Interactive poetry generation makes up only a part of the overall poetry and research communities, but it is worth taking note of this effort because it is capable of generating worthwhile insights, poems, tools, and techniques that may be interesting to other traditions. Language technologies provide poets with a potentially infinite number of approaches to inspire their poetry. Poetry generation provides NLP/CL/AI researchers with testbeds to investigate ideas, on tasks that are near-term achievable but AI-complete in depth. Readers around the world are provided not only with poems but with tools to make poetry of their own. But ultimately, pursuing interactive poetry generation is its own reward.

Antonio Roque received his PhD in Computer Science from the University of Southern California and his AB in English (Creative Writing) from the University of Michigan.

Acknowledgments

Portions of this text appeared previously on the group blogs Gnoetry Daily and Netpoetic.

Although I was affiliated with the University of Southern California and the University of California Los Angeles during the production of the work described here, this was not part of a funded effort nor was it conducted in their facilities or using their resources. Nevertheless, I am grateful that I had the chance to develop my knowledge of NLP/CL/AI research at these institutions.

I am also grateful to:

- Eric Goddard-Scovel, Eric Elshtain, and Matthew Lafferty for accepting me as a member of the Gnoetry Daily group blog, even though I wasn’t always using Gnoetry

- Jason Nelson, for allowing me to post on his group blog Netpoetic

- Chris Funkhouser, for his assistance and encouraging words

- David DeVault, for not ridiculing me no matter how ill-informed my rants or unfounded my speculations

- The Santa Monica Public Library, where most of this article was written

- My wife and daughter, in whose presence most of my poetry was composed

References

- ALAMO. “Littéraciels.” http://alamo.mshparisnord.org/litteraciels.html (accessed March 20, 2011).

- ALAMO. “Rimbaudelaires.” http://alamo.mshparisnord.org/programmes/rimbaudelaires.html (accessed March 20, 2011).

- The American Council of Trustees and Alumni (ACTA). April 2007. “The Vanishing Shakespeare.” http://www.goacta.org/publications/PDFs/VanishingShakespeare.pdf (accessed April 8, 2011).

- Beard, Jo Ann. 1999. “The Fourth State of Matter.” In The Boys of My Youth. New York: Back Bay Books.

- Beard of Bees Press. 2009. “Gnoetry.” http://www.beardofbees.com/gnoetry.html (accessed April 11, 2011).

- Bradley, Margaret M. and Peter J. Lang. 1999. Affective Norms for English Words (ANEW): Stimuli, Instruction Manual and Affective Ratings. Technical report C-1. Gainesville, FL: The Center for Research in Psychophysiology, University of Florida. http://dionysus.psych.wisc.edu/methods/Stim/ANEW/ANEW.pdf (accessed March 28, 2011). Mirrored at http://www.uvm.edu/~pdodds/files/papers/others/1999/bradley1999a.pdf (accessed November 11, 2011).

- Bradley, Margaret M. and Peter J. Lang. 2010. “ANEW Message.” http://csea.phhp.ufl.edu/media/anewmessage.html (accessed March 28, 2011).

- Campbell, Bruce. 1998. “Jackson Mac Low.” In Dictionary of Literary Biography, Volume 193 of American Poets Since World War II, ed. Joseph Conte. Detroit: The Gale Research Group. 193–202. http://epc.buffalo.edu/authors/maclow/about/dlb.html (accessed March 22, 2011).

- Carnegie Mellon University. 2008. “The CMU Pronouncing Dictionary.” http://www.speech.cs.cmu.edu/cgi-bin/cmudict (accessed April 1, 2011).

- Carpenter, Jim, Bob Perelman, Nick Montfort, and Jean-Michel Rabaté. 2004. “Poetry Engines and Prosthetic Imaginations.” Slought Foundation Online Content, April 29. http://slought.org/content/11199/ (accessed March 20, 2011).

- Carpenter, Jim. 2007. “etc4.” July 1. http://theprostheticimagination.blogspot.com/2007/07/etc4.html (accessed March 23, 2011).

- Cayley, John. 2002. “The Code is not the Text (unless it is the Text).” Electronic Book Review 9(10). http://www.electronicbookreview.com/thread/electropoetics/literal (accessed March 29, 2011).

- Cramer, Florian. 2002. “‘Mez, ,,RE(AD.HTML.’” May 18. http://cramer.pleintekst.nl/all/mez/mez-presentation.pdf (accessed March 28, 2011).

- de Vega, Manuel, Arthur Glenberg, and Arthur Graesser, ed. 2008. Symbols and Embodiment: Debates on Meaning and Cognition. New York: Oxford University Press.

- Dena, Christy and Eric Elshtain. 2006. “Gnoetry: Interview with Eric Elshtain.” Writer Response Theory. April 2. http://writerresponsetheory.org/wordpress/2006/04/02/gnoetry-interview-with-eric-elshtain/ (accessed April 11, 2011).

- DeVault, David, Iris Oved, and Matthew Stone. 2006. “Societal Grounding is Essential to Meaningful Language Use.” In Proceedings of the Twenty-First National Conference on Artificial Intelligence (AAAI-06) Boston, Massachusetts, July, 2006. http://people.ict.usc.edu/~devault/publications/aaai06.pdf.

- Dewdney, A. K. 1989. “Computer Recreations.” Scientific American 260(6):122.

- Elshtain, Eric and Nic Sebastian. 2010. “Ten Questions on Poets & Technology: Eric Elshtain.” Very Like A Whale. July 28. http://verylikeawhale.wordpress.com/2010/07/28/ten-questions-on-poets-technology-eric-elshtain/ (accessed March 29, 2011).

- Free Software Foundation, Inc. 2011. “The Emacs Editor.” http://www.gnu.org/software/emacs/manual/html_node/emacs/Dissociated-Press.html (accessed April 11, 2011).

- Fry, Ben and Casey Reas. 2004. “Processing.” http://www.processing.org/ (accessed March 29, 2011).

- Funkhouser, Christopher T. 2007. Prehistoric Digital Poetry: An Archaeology of Forms, 1959–1995. Tuscaloosa: University of Alabama Press.

- Funkhouser, Christopher. 2008. “Digital Poetry: A Look at Generative, Visual, and Interconnected Possibilities in its First Four Decades.” In A Companion to Digital Literary Studies, ed. Susan Schreibman and Ray Siemens. Oxford: Blackwell. http://www.digitalhumanities.org/companion/view?docId=blackwell/9781405148641/9781405148641.xml&chunk.id=ss1-5-11&toc.depth=1&toc.id=ss1-5-11&brand=9781405148641_brand (accessed April 11, 2011).

- Gervás, Pablo. 2000. “WASP: Evaluation of Different Strategies for the Automatic Generation of Spanish Verse.” Symposium on Creative & Cultural Aspects and Applications of AI & Cognitive Science. http://nil.fdi.ucm.es/sites/default/files/GervasAISB2000.pdf (accessed April 11, 2011).

- Gervás, Pablo. 2001. “An Expert System for the Composition of Formal Spanish Poetry.” Journal of Knowledge-Based Systems 14:181–188. http://nil.fdi.ucm.es/sites/default/files/GervasJKBS2001.pdf (accessed April 11, 2011).

- Gervás, Pablo, Raquel Hervás, and Jason R. Robinson. 2007. “Difficulties and Challenges in Automatic Poem Generation: Five Years of Research at UCM.” Presented at e-poetry 2007, Université Paris8. http://epoetry.paragraphe.info/english/papers/gervas.pdf (accessed April 22, 2011).

- Gnoetry Daily. http://gnoetrydaily.wordpress.com/ (accessed March 24, 2011).

- Gosper, R. William. 1972. “Item 176.” In HAKMEM, Memo 239, ed. Michael Beeler, R. William Gosper, and Rich Schroeppel. Cambridge: Artificial Intelligence Laboratory, Massachusetts Institute of Technology. ftp://publications.ai.mit.edu/ai-publications/pdf/AIM-239.pdf (accessed April 11, 2011).

- Greene, Erica, Tugba Bodrumlu, and Kevin Knight. 2010. “Automatic Analysis of Rhythmic Poetry with Applications to Generation and Translation.” In Proceedings of the 2010 Conference on Empirical Methods in Natural Language Processing. 524–533. http://www.aclweb.org/anthology/D/D10/D10-1051.pdf (accessed April 11, 2011).

- Harrell, D. Fox. 2006. “Walking Blues Changes Undersea: Imaginative Narrative in Interactive Poetry Generation with the GRIOT System.” In Proceeding of the AAAI 2006 Workshop in Computational Aesthetics: Artificial Intelligence Approaches to Happiness and Beauty. AAAI Press. http://silver.skiles.gatech.edu/~dharrell3/pps/Harrell-AAAI2006.pdf (accessed March 22, 2011).

- Hartman, Charles O. 1996. Virtual Muse: Experiments in Computer Poetry. Hanover, NH: University Press of New England (Wesleyan University Press).

- Hervás, Raquel, Jason Robinson, and Pablo Gervás. 2007. “Evolutionary Assistance in Alliteration and Allelic Drivel.” Applications of Evolutionary Computing, Lecture Notes in Computer Science, Volume 4448. http://nil.fdi.ucm.es/sites/default/files/HervasEtAlEVOMUSART07.pdf (accessed April 1, 2011).

- Howe, Daniel C. 2009. “RiTa: Creativity Support for Computational Literature.” In Proceedings of the 7th ACM Conference on Creativity and Cognition, Berkeley, California. http://mrl.nyu.edu/~dhowe/RiTa-Creativity_Support_for_Computational_Literature_p205-howe09.pdf (accessed March 29, 2011).

- Jurafsky, Daniel and James H. Martin. 2008. Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition (2nd edition). Upper Saddle River, New Jersey: Prentice Hall.

- Kurzweil CyberArt Technologies. 2000. “Ray Kurzweil’s Cybernetic Poet.” http://www.kurzweilcyberart.com/poetry/ (accessed March 18, 2011).

- Kurzweil CyberArt Technologies. 2007. “Basic Poetry Generation.” United States Patent 7184949. http://www.freepatentsonline.com/7184949.pdf (accessed March 18, 2011).

- Lager, Peter. 2009. “GUI for Processing.” http://www.lagers.org.uk/g4p/index.html (accessed March 29, 2011).

- Lee, Tom. 2010. “Generating the Affective Norms for English Words (ANEW) dataset.” Manifest Density. June 16th. http://www.manifestdensity.net/2010/06/16/anew/ (accessed March 28, 2011).

- Link, David. 2006. “There Must Be an Angel. On the Beginnings of the Arithmetics of Rays.” In Variantology 2. On Deep Time Relations of Arts, Sciences and Technologies, ed. Siegfried Zielinski and David Link. 15–42. Cologne: König. http://www.alpha60.de/research/muc/DavidLink_RadarAngels_EN.htm, http://www.alpha60.de/research/muc/Link_MustBeAnAngel_2006.pdf (accessed March 21, 2011).

- Los Angeles Times. 2007. “Terms of Service.” Last modified September 2007. http://www.latimes.com/terms (accessed March 28, 2011).

- Lutz, Theo. 1959. “Stochastische Texte.” Augenblick 4(1):3–9, trans. Helen MacCormack. 2005. http://www.stuttgarter-schule.de/lutz_schule_en.htm (accessed April 11, 2011).

- Mac Low, Jackson and Gil Ott. 1979. “Jackson Mac Low, interviewed by GIL OTT at PS 1.” http://phillysound.blogspot.com/2007_10_01_archive.html (accessed March 22, 2011).

- Mac Low, Jackson. 1998. “LITTLE BEGINNING (STEIN 1).” http://epc.buffalo.edu/authors/maclow/stein/stein01.html (accessed March 22, 2011).

- Macdonald, Travis. 2009. “A Brief History of Erasure Poetics.” Jacket 38. http://jacketmagazine.com/38/macdonald-erasure.shtml (accessed April 11, 2011).

- Manurung, Hisar Maruli. 1999. “A Chart Generator for Rhythm Patterned Text.” In Proceedings of the First International Workshop on Literature in Cognition and Computer, Tokyo, Japan. http://staf.cs.ui.ac.id/~maruli/pub/iwLCC.ps.gz (accessed April 11, 2011).

- Manurung, Hisar Maruli. 2003. An Evolutionary Algorithm Approach to Poetry Generation. PhD thesis, University of Edinburgh. College of Science and Engineering. School of Informatics. http://www.inf.ed.ac.uk/publications/thesis/online/IP040022.pdf (accessed April 11, 2011).

- Matthews, Harry, and Alastair Brotchie, ed. 2005. Oulipo Compendium. Los Angeles: Make Now Press.

- McCaffery, Steve. 1978. “Sound Poetry—A Survey.” In Sound Poetry: A Catalogue, ed. Steve McCaffery and bpNichol. Toronto: Underwich Editions. http://www.ubu.com/papers/mccaffery.html (accessed March 29, 2011).

- McGann, Jerome J. 1988. Social Values and Poetic Acts: A Historical Judgment of Literary Work. Cambridge, Mass.: Harvard University Press. http://www.writing.upenn.edu/~afilreis/88v/mcgann.html (accessed April 1, 2011).

- Montfort, Nick. 2003. “The Coding and Execution of the Author.” In The Cybertext Yearbook, ed. Markku Eskelinen and Raine Kosimaa. 201–217. Jyväskylä, Finland: Research Centre for Contemporary Culture, University of Jyväskylä. http://nickm.com/writing/essays/coding_and_execution_of_the_author.html (accessed March 29, 2011).

- Netzer, Yael, David Gabay, Yoav Goldberg, and Michael Elhadad. 2009. “Gaiku: Generating Haiku with Word Associations Norms.” In CALC ‘09: Proceedings of the Workshop on Computational Approaches to Linguistic Creativity. 32–39. http://portal.acm.org/ft_gateway.cfm?id=1642016&type=pdf&CFID=17596346&CFTOKEN=46516340 (accessed April 11, 2011).

- OpenNLP. 2011. http://opennlp.sourceforge.net/projects.html (accessed March 22, 2011).

- Pedersen, Ted. 2008. “Empiricism Is Not a Matter of Faith.” Computational Linguistics 34(3): 465–470. http://www.aclweb.org/anthology/J/J08/J08-3010.pdf (accessed March 24, 2011).

- Plamondon, Marc R. 2005. “Computer-Assisted Phonetic Analysis of English Poetry: A Preliminary Case Study of Browning and Tennyson.” TEXT Technology 14(2). http://texttechnology.mcmaster.ca/pdf/vol14_2/plamondon14-2.pdf (accessed April 1, 2011).

- Raley, Rita. 2002. “Interferences: [Net.Writing] and the Practice of Codework.” Electronic Book Review 9(8). http://www.electronicbookreview.com/thread/electropoetics/net.writing (accessed March 28, 2011).

- Raymond, Eric S., ed. 2004. “Hacker.” In Jargon File, version 4.4.8. http://www.catb.org/jargon/html/H/hacker.html (accessed March 19, 2011).

- Raymond, Eric Steven. 2011. “Status in the Hacker Culture.” In How to Become a Hacker. http://www.catb.org/~esr/faqs/hacker-howto.html#status (accessed March 19, 2011).

- Shakespeare, William. 2010. “The Sonnets.” Project Gutenberg. http://www.gutenberg.org/dirs/etext97/wssnt10.txt (accessed April 13, 2011).

- Steels, Luc. 2005. “The Emergence and Evolution of Linguistic Structure: From Lexical to Grammatical Communication Systems.” Connection Science 17(3–4):213–230. http://www.csl.sony.fr/downloads/papers/2005/steels-05h.pdf (accessed March 24, 2011).

- Steels, Luc and Martin Loetzsch. 2008. “Perspective Alignment in Spatial Language.” In Spatial Language and Dialogue, ed. K. R. Coventry, T. Tenbrink, and J. A. Bateman. New York: Oxford University Press. http://www.csl.sony.fr/downloads/papers/2008/steels-07c.pdf (accessed March 24, 2011).

- Strachey, Christopher. 1954. “The ‘Thinking’ Machine.” Encounter. Literature, Arts, Politics. 13: 26. Cited in Lutz, Theo. 1959. “Stochastische Texte.” Augenblick 4(1): 3–9.

- Swiss, Thom and Maria Damon. 2006. “New Media Literature.” In Creative Writing: Theory Beyond Practice, ed. N. Krauth and T. Brady. Teneriffe, Qld: Post Pressed. 65–77.

- Toutanova, Kristina, Dan Klein, Christopher Manning, and Yoram Singer. 2003. “Feature-Rich Part-of-Speech Tagging with a Cyclic Dependency Network.” In Proceedings of HLT-NAACL 2003. 252–259. http://nlp.stanford.edu/~manning/papers/tagging.pdf (accessed April 8, 2011).

- Trowridge, Jon. 2008. “How Gnoetry 0.2 Works.” mid)rib 2. http://www.midribpoetry.com/issue2.html (accessed March 22, 2011).

- Tzara, Tristan. 1920. “TO MAKE A DADAIST POEM.” In dada manifesto on feeble love and bitter love. Originally published December 12, 1920. http://www.391.org/manifestos/19201212tristantzara_dmonflabl.htm (accessed April 8, 2011).

- West, Rosemary K. “Creativity Package.” http://cd.textfiles.com/psl/pslv7nv07/dos/EDUCA/ (accessed March 22, 2011).

- Westbury, Chris. 2003. “JanusNode 2.05: A User-configurable Dynamic Textual Projective Surface.” http://janusnode.com/ (accessed April 11, 2011).

- Wikinews. 2011. “Wikinews, the Free News Source.” http://en.wikinews.org/wiki/Main_Page (accessed March 28, 2011).

- Wikipedia. 2011. “Dissociated Press.” http://en.wikipedia.org/wiki/Dissociated_press (accessed March 24, 2011).

- Wikipedia. 2011. “N-Gram.” http://en.wikipedia.org/wiki/N-gram (accessed April 8, 2011).

- Wikipedia. 2011. “Universally Unique Identifier.” http://en.wikipedia.org/wiki/Uuid (accessed April 1, 2011).

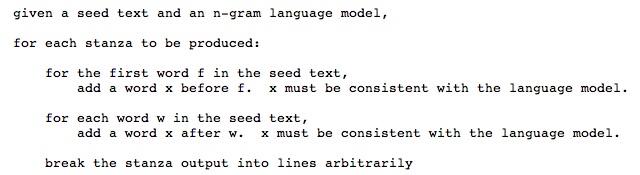

Appendix A: N-Gram Language Models for Interactive Poetry Generation

A.1 Overview

N-gram language models are built from a source text (which may include multiple documents) and can be used to generate new text that looks more or less like the source text. When Dadaist Tristan Tzara cut the words out of a newspaper article and put them in a bag (Tzara 1920), he was building a type of n-gram language model. When he randomly drew those words from the bag to make a poem, he was using that language model for generation.

Tzara’s cut-up poems had the same type of words as the source text, but of course the sentences themselves were generally incoherent. More sophisticated language models could generate more coherent poems. Tzara’s output would have been more coherent if he had cut up pairs of words, or sequences of three words. He would have generated different effects by cutting out characters (or sequences of characters) rather than words. Or he could have labeled each word’s part of speech (noun, verb, etc.) and replaced it with another word with the same part of speech.
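The different ways of cutting up a text can be sketched in a few lines of Python. This is only an illustration, not the code of any system discussed in this article: the helper name `ngrams` and the variable names are invented for the example, and the line of Shakespeare is split naively on spaces.

```python
def ngrams(items, n):
    """Return every run of n consecutive items as a tuple."""
    return [tuple(items[i:i + n]) for i in range(len(items) - n + 1)]

line = "When I do count the clock that tells the time"
words = line.lower().split()

# Slips of paper holding one, two, or three words each.
single_words = ngrams(words, 1)
word_pairs = ngrams(words, 2)
word_triples = ngrams(words, 3)

# Slips of paper holding two characters each (spaces included).
char_pairs = ngrams(list(line.lower()), 2)

print(word_pairs[:3])
print(char_pairs[:3])
```

Each list plays the role of a different bag of paper slips. Longer slips preserve more of the source's word order, which is why text drawn from them tends to read more coherently.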

Computational linguists would model all of these as different types of n-grams. The sections below describe several variations, using Shakespeare’s sonnets as a sample source text. Shakespeare is used not out of a lack of respect, but as a positive way of reacting to reports that the study of Shakespeare is becoming less frequently required in academia (ACTA 2007).

A.2 Unigrams

Consider Shakespeare’s line: “When I do count the clock that tells the time.” It contains ten words:

- when

- I

- do

- count

- the

- clock

- that

- tells

- the

- time

Computational linguists would call each word a 1-gram or a unigram, as part of a language model.

We could generate our own poetry by rolling a 10-sided die, with each face standing for the word at that position in the line.

- If a “3” came up, we’d write “do.”

- If a “9” came up, we’d write “the.”

- If a “1” came up, we’d write “when.”

. . . and so on. This is equivalent to Tzara pulling individual cut-out words from a bag. Note that “the” is twice as likely to be picked as any other word, because it occurs twice in the original sentence. After rolling the die several times, we might get something like:

do the when clock the when when that

The result is incoherent, but it can be improved upon.
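The die-rolling procedure can be written as a short Python sketch. It is a minimal illustration under stated assumptions rather than a description of any particular generator: the function names `roll_die` and `generate` and the optional `seed` parameter are invented here, and the word list is simply the ten words listed above.

```python
import random
from collections import Counter

# The ten words of the line, in order; "the" appears twice.
words = ["when", "I", "do", "count", "the", "clock", "that", "tells", "the", "time"]

# The unigram model: each distinct word with its relative frequency.
counts = Counter(words)
total = sum(counts.values())
probabilities = {word: count / total for word, count in counts.items()}

def roll_die(rng):
    """Roll a fair die with one face per word and return that word."""
    face = rng.randint(1, len(words))  # 1 to 10, inclusive
    return words[face - 1]

def generate(length, seed=None):
    """Generate `length` words by repeated, independent die rolls."""
    rng = random.Random(seed)
    return " ".join(roll_die(rng) for _ in range(length))

print(probabilities["the"], probabilities["clock"])
print(generate(8))
```

Because every roll is independent of the one before it, nothing in this model encourages a plausible word order, which is the source of the incoherence.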

A.3 Bigrams from a Single Line of Shakespeare