Stage 1.X—Meta-Design with Machine Learning is Coming, and That’s a Good Thing


This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Please contact [email protected] to use this work in a way not covered by the license.


Abstract

The capabilities of digital technology to reproduce likenesses of existing people and places and to create fictional terraformed landscapes are well known. The increasing encroachment of bots into all areas of life has caused some to wonder if a designer bot is plausible. In my recent book FireSigns,[1] I note that the acute cultural, stylistic, and semantic articulations made possible by current semiotic theory may enable digital resources to work within territories that were once considered the exclusive creative domain of the graphic designer. Such meta-design assistance may be resisted by some who fear that it will altogether replace the need for the designer. In this article, I first suggest how meta-design might enter the design process, and then explore its use to affect layout and composition. I argue that, at least within that restricted domain, it promises greater creative benefits than liabilities to human graphic designers.

Introduction

It is commonplace to hear designers and other artists dismiss the possibility that digital systems could replace their creativity. Of course, no one denies that the digital revolution has transformed the world in many ways we never could have foreseen just a generation ago. Since 1975, computers have quickly moved beyond their initial function as lightning-fast “computation machines” and have by now proven to be powerful technical allies in every field. They exist in approximately three-quarters of the homes in developed nations like the U.S., South Korea, France and Chile.[2] They are even more widespread in the form of smartphones, which sit in the pockets of roughly 60% of the world’s current population.[3] Within design, in some important technical areas, such as typesetting and pre-press, digital media have utterly transformed the printing floor and the graphic design office. But substitute for the aesthetic and conceptual ability of a fine designer? That last human bastion has seemed beyond the scope of silicon. No one pretends to understand the creative spark, the almost spiritual realm from which it seems to emanate, the leap of the unconscious that is at its heart. How can we (and should we) program the deep semiotic net we take for granted into a machine, along with the symbols that are packed into every artifact in a given culture, that fluid network of signs that shifts with fashion and generations, through space and time?

Francisco Laranjo, in his dystopian memoir from the near-future automated design-bot world, recounts in the narrator’s voice looking back from 2025:

A devastating gulf has opened up between the residual manual labour of letterpress, screen-printing and calligraphy, and the long, rigorous, research-led and -based design with reflective and critical analysis. The rest—the majority of graphic designers—have disappeared, apart from a few who survive with faithful, technophobe clients.[4]

Whereas Laranjo sees this gulf as the inevitable product of bot designers, I would suggest that the gap of which he speaks has already opened even without designer robots. Today, our design profession is already bifurcated into precisely these craft and analyst sectors, and any designer occupying the middle, “production,” ground has either lost her job or is being paid subsistence wages to perform it. So in this article I want to do three things: predict that artificial intelligence (AI) is indeed coming sooner rather than later, and that it will take the form of “meta-design”; suggest how meta-design will work in one specific design area; and finally, explain the reasons for my optimistic opinion that creative bot assistants will prove to be a boon for human graphic designers, rather than endanger the occupation of graphic design at the higher levels.

I grant that it is a bewildering exercise to imagine a truly creative AI assistant. But then, it is rare to have a truly creative flesh and blood assistant, too. In our design practices, we often follow trends, repeat successful tropes, imitate our mentors, play with slight variations on long-trod themes. Certain kinds of creative graphic design choices are already well within the growing capabilities of artificial intelligence, while other design activities may resist AI applications. I’ll restrict the present discussion to a particular design activity that is not only universal but that also seems especially ready for the entrance of a meta-design bot: layout and composition.

The first section of this article will provide an overview of meta-design, how it fits within the process of design and how it will likely evolve. The second section will discuss certain limitations, ethical considerations and some of the dangers of meta-design.

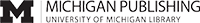

Section One: What Meta-Design Is and How It Will Enter the Traditional Design Process

Meta-design is the design of design.[a] As the term is used here, meta-design is a sub-routine that, when inserted as a stage or protocol into a design procedure, innovatively contributes to the course of the design process itself. So meta-design is designing the process of design, causing a change or disruption in what would otherwise be the flow of the procedure, and this disruption results in a final state for a designed object that is different from what it would have otherwise been. The meta-design stage creates something new that influences the outcome. To see how this works, let’s start by looking at the current design process. Naturally, there are many models to choose from (Dubberly 2005),[b] but if reduced to the simplest of these, the current design process is made up of just three fundamental and essential stages: 1. the gathering of content (C), 2. the running of iterative candidate conceptualizations (r), and 3. the production into the world of the chosen idea or object (p). These are depicted in Figure 1.

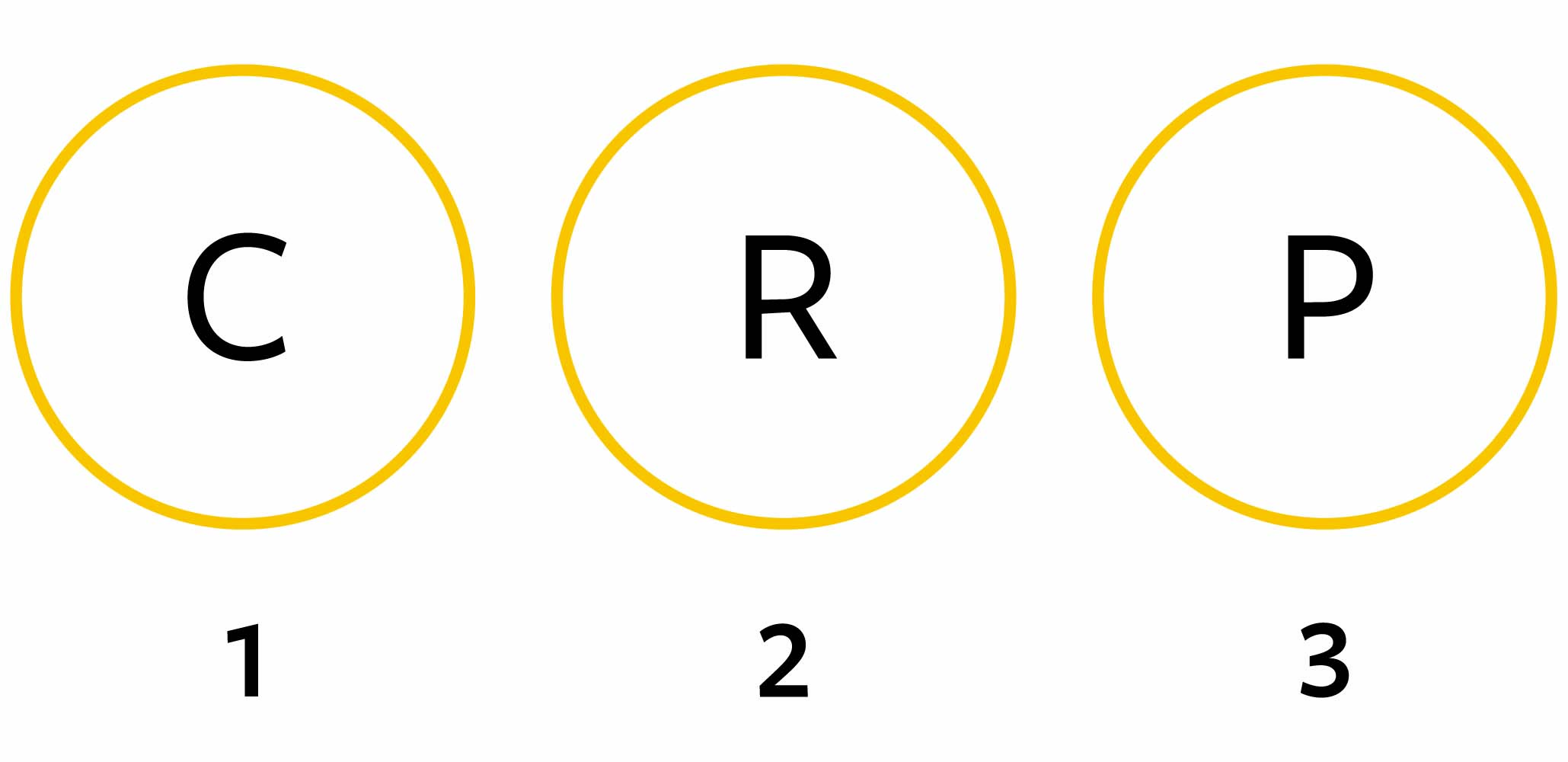

Meta-design can influence any of these three components, but for graphic designers, the most significant location for the insertion of a meta-design component is between the first and second stage of the process, as depicted in Figure 2.

Let’s call this meta-design insertion “stage 1.X.” Stage 1.X would contain at least three sub-components that would be bundled as algorithms into software. The three essential sub-component modules are Expertise (e), Semantic Profiles (sp), and an Inference Engine (i). These component modules will be discussed below, but first, let’s take a look at an example of how the general process flows.

An Example of How Inserting a Meta-Design Component into a More Traditionally Formulated Design Process Could Work

Here’s how meta-design would work to affect the creative composition of, say, a catalog or book. Just for fun, let’s say the meta-design program is called “Milton.” A design director would assemble the contents—the text, the images and any ancillary graphic elements.[c] This assembly of contents constitutes stage 1 of the design process. But now, instead of immediately beginning iterative manual sketches, a process of layout and format conceptualization that is stage 2, stage 1.X—Milton—would be called upon. The goals of the project as delineated in the project brief (including such information as audience profile, cultural or stylistic milieu, and possible associative alliances) would be input into Milton. The design director might request 10 or 20 sets of layouts. Rather than the design director producing sketches, Milton would then set up or facilitate stage 2 by running out the requested number of iterations, in a variety of compositions, each taking into account the parameters of the brief. Now working within conventional stage 2, the design director would review the layouts, make decisions based on these candidate solutions, editing and manually revising as required.
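The flow just described can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the names `Brief`, `Layout`, `STYLES`, `stage_1`, `stage_1x` and `stage_2` are hypothetical, the "generator" is a seeded random stand-in rather than anything resembling a trained Milton, and the brief influences the output only through a toy weighting.

```python
from dataclasses import dataclass
import random

@dataclass
class Brief:
    audience: str
    milieu: str  # cultural or stylistic milieu named in the project brief

@dataclass
class Layout:
    style: str
    columns: int
    margin_mm: float
    content: dict  # candidates carry the live content, not placeholder text

# Hypothetical style vocabulary; a real system would draw on its Expertise module.
STYLES = ["international", "traditional-symmetrical", "vernacular"]

def stage_1(text, images):
    """Stage 1: gather the content (C)."""
    return {"text": text, "images": images}

def stage_1x(content, brief, n, seed=0):
    """Stage 1.X: propose n candidate layouts (toy random generator)."""
    rng = random.Random(seed)
    # Toy stand-in for the brief's influence: favor the style matching the milieu.
    weights = [3 if style == brief.milieu else 1 for style in STYLES]
    return [
        Layout(
            style=rng.choices(STYLES, weights=weights, k=1)[0],
            columns=rng.randint(1, 4),
            margin_mm=round(rng.uniform(8.0, 25.0), 1),
            content=content,
        )
        for _ in range(n)
    ]

def stage_2(candidates, choose):
    """Stage 2: the design director reviews the candidates and selects one to revise."""
    return choose(candidates)

content = stage_1("catalog copy", ["cover.jpg", "spread-01.jpg"])
brief = Brief(audience="collectors", milieu="international")
candidates = stage_1x(content, brief, n=20)
# The director's judgment is simulated here by simply taking the first candidate.
chosen = stage_2(candidates, choose=lambda cs: cs[0])
```

The point of the sketch is the ordering of the stages: the director still makes the choice in stage 2, and stage 1.X only widens the set of candidates she chooses from.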

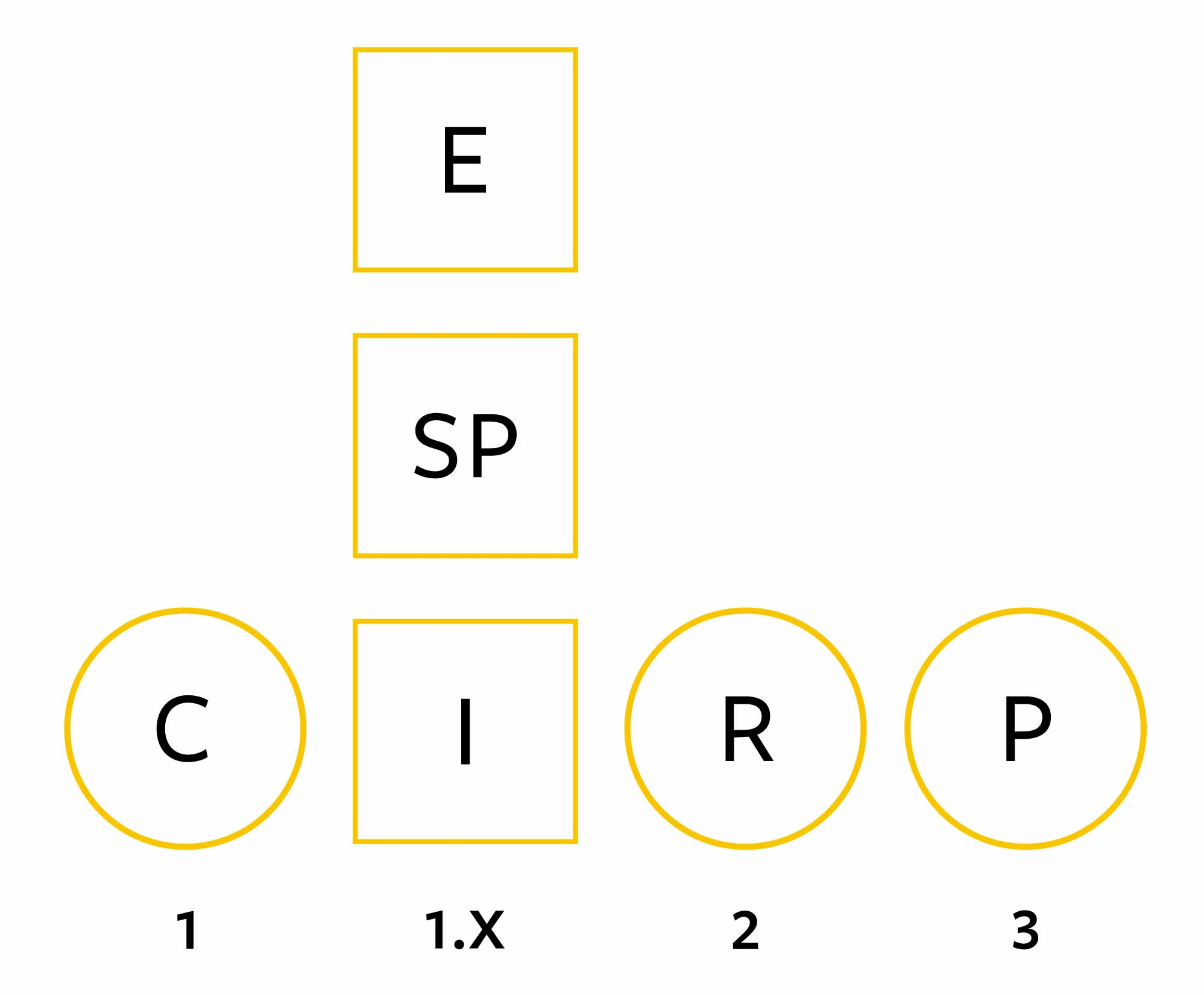

Just as happens upon completion of a conventional design process, feedback is received that informs future similar iterations. The feedback would proceed through two channels, as depicted in Figure 3.

The first, a conventional channel, would be used to allow the designer and client to note the apparent success or weaknesses of the results of the design once it is produced and distributed into the world. The second feedback path would be directed to Milton. Part of the machine learning training for Milton is this continual feeding back that informs Milton about which edits and changes were favored by the design director (expertise). In much the same way that speech recognition programs get better at learning a speaker’s dialect and diction with time and utilization, so too will Milton begin to learn the design director’s formal preferences and come closer to aligning with them as similar projects are undertaken in the future. Milton, over time, acquires your taste. Also, by the way, over time and with repeated usage, Milton influences your taste. This mutual reactivity, an inherent component of meta-design informed by machine learning, can be expected to lead to innovations, some of which will be mentioned later.
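One very simple way to picture the second feedback channel is as a running adjustment of stored preferences toward whatever the design director changed. The sketch below is an assumption for illustration only: the function `update_preference`, the parameter names and the learning `rate` are hypothetical, and a real system would learn far richer features than two numbers.

```python
def update_preference(learned, edited, rate=0.3):
    """Move each learned parameter a fraction `rate` toward the director's edit."""
    return {k: learned[k] + rate * (edited[k] - learned[k]) for k in learned}

# What the program currently proposes by default (hypothetical values).
learned = {"margin_mm": 10.0, "columns": 2.0}
# What the design director keeps changing the proposals to.
director_edit = {"margin_mm": 20.0, "columns": 3.0}

# Five similar projects, each ending with the same kind of revision.
for _ in range(5):
    learned = update_preference(learned, director_edit)
```

After each project the proposals sit closer to the director's edits without ever jumping straight to them, which is the gradual "acquiring your taste" described above.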

Examining the Critical Modules of Stage 1.X: Expertise, Semantic Profiles, Inference Engine

Now let’s look more closely at what constitutes the three essential modules—expertise, semantic profiles, and inference engine—that comprise stage 1.X.

E: Expertise Module

Expertise is the most difficult of the modules to produce and effectively operate, and it is the reason there have been no eloquent meta-design programs to date.[d] Digital technology is very good at quickly making yes/no, on/off decisions, but understanding what a goat represents in Tanzanian culture, or the aesthetic feel of vernacular-versus-modernist genres, has almost always been thought to be the special domain of actual people, not machines. I grant to those who say meta-design with AI is impossible that this is a really difficult problem. It’s more difficult than faking a face-on-body transposition, or making an AI image of a bird, cat, or Princess Leia. It’s difficult because it does not rely on iconicity (pictorial resemblance), but on appropriate metaphorical and symbolic discourse within a subculture.

It’s a difficult problem, but, at least at basic levels, such as those that address formal layout and composition, it is not insurmountable. For instance, similar problems arise in areas such as mineralogy, where a particular rock must be defined as a type. Very few species of rock are “pure” examples of their type. So how does one decide how to classify a vague or ambiguous sample? It requires years of experience for a geologist to acquire the expertise to consistently make that judgment with accuracy. But recently, using fuzzy set theory[e] and applying it to digital systems, programs have been developed that can begin to approach the expertise of the finest geologist.[5]
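The core idea of fuzzy set theory is that a sample can belong to several categories at once, each to a degree between 0 and 1. The following is a minimal sketch of that idea, not the geological system cited above: the triangular membership function is a standard textbook form, but the single "silica percentage" measurement and every threshold in `ROCK_TYPES` are invented for illustration.

```python
def triangular(x, left, peak, right):
    """Degree of membership (0 to 1) in a fuzzy set with a triangular profile."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)

# Hypothetical fuzzy sets over one made-up measurement (percent silica).
# Note that neighboring sets overlap: that overlap is where ambiguity lives.
ROCK_TYPES = {
    "basalt":   (40.0, 48.0, 56.0),
    "andesite": (50.0, 58.0, 66.0),
    "rhyolite": (62.0, 72.0, 82.0),
}

def classify(silica_pct):
    """Return the degree of membership in every category, not a single yes/no."""
    return {name: triangular(silica_pct, *abc) for name, abc in ROCK_TYPES.items()}

memberships = classify(54.0)
best = max(memberships, key=memberships.get)
```

An ambiguous sample is not forced into one box; it is reported as partly one type and partly another, and the strongest membership can be taken as the working classification. The same move could, in principle, let a layout be "mostly modernist, somewhat vernacular."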

Designers, especially those who have many years of experience within the industry, have absorbed a tremendous amount of cultural and implicit knowledge. Understanding how to employ both grids and classical compositional systems, the links between stylistic forms and cultures, looks and styles preferred by various demographic groups, a judicious use of typographic choices—all of these are encyclopedic, semiotic domains of knowledge. Being able to take this expertise and fold it into an algorithm is key to employing fuzzy logic within the AI system. To what extent this expertise can be encoded in AI is yet unclear. Using a grid to guide composition is one thing, but at some point we humans pass from understanding how to use a grid to understanding the cultural relevance of a grid. Can we expect that level of cultural expertise to be translatable to an algorithm? We should be aware that on the immense spectrum from our current dumb templates to sophisticated cultural knowledge, there are many hash marks. It’s reasonable to assume that at least to some degree, the knowledge of the linkages between cultural connotations and compositional form can find its way into Milton.

Sp: Analyzing the Semantic Profile Module: Presence, Expression, Denotation, Connotation

The Expertise component would then be filtered through a Semantic Profile module. Semantic profiles are a kind of “personality profile type” for a display.[6] Semantics, taken in its broadest interpretation, involves all of the effects, or impacts, that a visual display elicits. There are four basic classes of these effects: presence, expression, denotation and connotation.[f] The semantic effects of presence and expression constitute the “affective register,” while denotation and connotation constitute the “conceptual register.”

Presence, the most context-dependent of the four semantic effects, refers to the degree of attention-attraction a display might be expected to elicit, solely on the basis of its color, contrast, size and other qualities of its syntax.

Expression, also within the affective register, is “feeling from form.” It is the degree and particular kind of feeling engendered within the receiver from a display, due solely to the display’s form and syntax. It does not refer to feelings or emotions that may ensue based on the “content” or subject matter of the display. For example, a photograph of a political icon whom you vehemently loathe might yet be very low expression if, for example, his portrait was taken in a completely unremarkable, mundane manner and printed in faded gray ink on a white page. On the other hand, the “Obama Hope Poster” was high in expressivity, no matter what one’s political leanings, because of the deployment of color, contrast and cropping within this display.

Denotation is within the conceptual register, and it refers to the nuts-and-bolts informational content of the display. If, in order to pay a visit to your dentist who has recently moved, you are looking at a building’s directory, it is the denotative semantics that gets you to the right office. Legibility is a denotative variable. In the famous Josef Müller-Brockmann Musica Viva[g] poster series, regardless of the presence or expression various typography clusters might possess, it was their denotative success that got the audience in their seats on the right day and at the correct time. (Note, however, that what makes these posters famous is precisely the other three semantic vehicles: i.e. everything that is not denotative about them.)

Connotation, the most personally variable and experience-dependent of the semantic effects, has to do with all of the associations and connections that a receiver makes, both consciously and sub-consciously, upon seeing the display.

A semantic profile assigns, for a display, a value of importance, called valence, to each of these four classes of effects. The simplest semantic profile uses a bi-valent value system, ascribing a target of either high or low valency to each semantic category.[h]

The Semantic Profile (SP) module provides a way for the program to make decisions about emphasis, hierarchy, and tone. The SP module probably will not specify the particular quality of expression, only that the display is expressive. It doesn’t specify what connotations, only the degree of connotative richness; likewise, it doesn’t pinpoint what the specific denotative content is, only how important denotative clarity is to the overall message.
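As a data structure, the simplest (bi-valent) semantic profile is just four high/low settings, which yields sixteen possible profiles. The sketch below is one hypothetical encoding: the class and method names are my own for illustration, and the profile assigned to the building directory is an assumed reading of the dentist example, not a value given in the text.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product

class Valence(Enum):
    LOW = 0
    HIGH = 1

@dataclass(frozen=True)
class SemanticProfile:
    presence: Valence
    expression: Valence
    denotation: Valence
    connotation: Valence

    def affective(self):
        """The affective register: presence and expression."""
        return (self.presence, self.expression)

    def conceptual(self):
        """The conceptual register: denotation and connotation."""
        return (self.denotation, self.connotation)

# Every combination of high/low across the four classes of effect.
ALL_PROFILES = [SemanticProfile(*combo) for combo in product(Valence, repeat=4)]

# Assumed profile for a building directory: only denotative clarity matters.
directory = SemanticProfile(Valence.LOW, Valence.LOW, Valence.HIGH, Valence.LOW)
```

Note that the profile records only how much each class of effect matters, not which feeling or which connotation is wanted, in keeping with the limits of the SP module described above.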

I: Inference Engine

Meta-design procedures must, as the final stage, be run through an Inference Engine module. The Inference Engine Module essentially involves running a fuzzy logic algorithm off of the input from the e and SP modules. It is a means to facilitate the making of guesses, and dumb guesses at that. In a composition that incorporates typography, heads and subheads need to be identified, captions need to be linked to their images, and textual information needs to be provided with hierarchical labels (much as we do now with style sheets). Any physical parameters, such as size and colors, need to be specified. Given this framework, the inference engine will begin to produce compositions—to be played out at full size or reduced to thumbnails, as preferred.
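To give a feel for the kind of "dumb guess" involved, here is a deliberately small sketch in which text elements carry hierarchical labels and a degree of presence between 0 and 1 decides how dramatic the type scale is. It is plain linear interpolation between two scale ratios, a much simpler stand-in for a full fuzzy inference engine; the role names, the base size and both ratios are hypothetical values chosen for illustration.

```python
BASE_SIZE_PT = 10.0

# Hierarchical labels, as in a style sheet, expressed as steps on a type scale.
ROLE_STEPS = {"head": 3, "subhead": 1, "body": 0, "caption": -1}

def guess_sizes(roles, presence, ratio_low=1.2, ratio_high=1.5):
    """Guess a point size per role; higher presence gives a steeper scale."""
    presence = min(1.0, max(0.0, presence))  # keep the degree within 0..1
    ratio = ratio_low + presence * (ratio_high - ratio_low)
    return {
        role: round(BASE_SIZE_PT * ratio ** ROLE_STEPS[role], 1)
        for role in roles
    }

roles = ["head", "subhead", "body", "caption"]
quiet = guess_sizes(roles, presence=0.0)
loud = guess_sizes(roles, presence=1.0)
```

Both results keep the same hierarchy (head above subhead above body above caption); what changes is how far apart the levels sit, which is exactly the sort of guess the design director would then review and adjust.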

Exploring the Benefits of Meta-Design

Imagine that you are the design director of a company that publishes on-line and print catalogs. What if, once you receive the photos and the copy intended for use in a given issue, you could turn to a staff of twenty layout artists and have each one of them lay out the entire catalog? The twenty designers come back in two weeks, and, from those twenty layout design concepts, you choose the one you think is most exciting and best solves the visual communication problem. Now, of course, working with twenty design assistants in parallel on a single project would be an inefficient and impossibly costly thing to do! No one does this; instead, the job goes to a single specialist (often the design director herself) who sketches out some potential “looks.” Once these have been determined, possibly a team of people work, each on a single section, and adhere to a fairly rigid, pre-determined layout format. But imagine that you had those twenty designers, and each of them was a good typographer and adept at composing catalogs: you would have the extreme pleasure of choosing the best from a set of twenty very fine layouts. Of course, you could still revise the design, and if you really had a large budget at your disposal, you could even add some freshly-shot master photography and repeat the entire procedure, making variations on that winning compositional theme, refining and adapting the chosen look until you were completely satisfied in every detail.

Meta-design with machine learning takes those twenty designers and places them at your service. Instead of requiring two weeks to produce the twenty layouts, they give you the twenty layouts in two minutes. You can still tweak, revise, and do variations on the theme. In the time it takes you to do some rough conceptual sketches of a few possible compositions, you are given as many compositions to study as you wish.

Not only that, those twenty iterations that you are provided with in a couple of minutes are completely “live” in terms of content. In other words, they are not created using so-called “Greeking,” or other forms of simulated content, unless you ask the meta-design module to do so. Any tweaks you make immediately reflow the live information throughout the design. If you change typeface or type size, the entire layout, or array of layouts, accommodates it just as it does now in InDesign. The format doesn’t change unless you ask to see additional formats and repeat the procedure.

Finally, just like the design office with twenty designers in your employ, and, maybe to an even greater degree, your designbot assistant learns your taste. Over time, the iterations you are offered grow closer to what you consider refined, workable, functional and eloquent. The Expertise module is noticing your preferences and associating certain modes of visual style with particular content areas or audience groups. You can feed the program samples of compositions you like from the award pages of design magazines. The machine will not plagiarize them, but it will use the visual characteristics to expand its library of potential influences. As you work with it, the baseline Expertise module is learning and adapting to an increasingly clear universe of discourse. Within a year or two, you may find that your particular AI meta-design assistant is surprising you with unexpected, but uncannily appropriate compositions. The meta-design program begins to be a creative extension of yourself as an individual designer. You and it are blending in a peculiar way: you begin anticipating what it will make, and it is improvising off of what it “senses” you like.

Design and culture critic Kenneth Fitzgerald has written, “Economic forces play the crucial role in determining graphic design’s capability.”[7] If that is so, then the speed advantages of meta-design, once translated into costs of production, will inevitably lead studios to meta-design solutions.

Section Two: Investigating the Downsides of Incorporating Meta-Design Components into Traditional Design Processes

But what if that design studio currently employs two production artists to do layout and composition? Aren’t their positions in jeopardy? The question leads us to examine the possible problems that cause many designers to fear the dawning of the AI age.

The rosy picture I have painted ignores some “missing links”—vital insurance policies if the meta-design is to turn out to be workable and advantageous, not just to the designer-bots, but also to the homo sapiens in the room. Because, in the end, graphic design must remain both a successful and humanistic enterprise.

Missing Link 1: Expertise? And Whose Expertise?

It’s easy to draw a diagram with an “Expertise Module”; it’s a lot more difficult to actually encode that expertise in a functional algorithm. Certainly, the procedure of programming and training will, at least on the surface, be vastly different from what happens in a university studio where students, over the course of several years, begin to acquire the ability to compose complex information. Even the most raw and naive freshman design student comes into a classroom armed with a lifetime of cultural associations, aesthetic preferences, and the ability to make visual choices. The burgeoning AI algorithm has none of that. It must “learn” things such as the gestalt principles of visual perception without actually having visual perception. That the technical challenges are severe is one reason we don’t already have advanced AI bundled into the Adobe Creative Suite. And yet...

In some ways what happens when a student learns graphic design is precisely the same as developing an AI meta-design agent. They progress from being decoders of visual messages, to being encoders of them. First, students are provided a vocabulary so they can conceptually subdivide what had previously been undifferentiated visual material into discrete parts. That conceptual and verbal definition allows us to talk to each other about the variety of formal features inherent in a given typeface, photograph, or composition. Then, art and design principles are classified, broken down, experimented with. Students are next encouraged, when confronted with a variety of choices, to make progressively more acute discriminations in judgment, so that those choices become increasingly targeted toward a desired semantic outcome. Finally, students are saturated with explicit and implicit cultural linkages, so that (to give one example) a Swiss grid is associated with modernism while the violation of the grid is treated as a postmodern strategy. Students are made conscious of those kinds of deeply historical and cultural connotations only through lessons where the connections are pointed out, consciously experimented with, and eventually assimilated into the unconscious working memory of the graduate designer.

Every lesson that can be made explicit through words can be translated into code for a machine. Unlike Milton Glaser, meta-design “Milton” may not have an aesthetic soul, but it will not, in the end, need one for the work (of a certain kind[i]) it is asked to do: with the design director’s oversight, it simply needs to be suggestive and, like in horseshoes, come close; the design director can tweak the composition into a ringer. Over time, by observing and remembering those adjustments, the meta-design program continues to learn, developing a more uncanny ability to arrive at the ringer the first time.

But wait: isn’t all of this terribly elitist? If a design program “memorizes” all the best design compositional practices of a certain stratum of privileged design professionals, where is there room for “the street”?

As I imagine it, the basic meta-design program would come equipped with a “best practices” library of core concepts—gestalt principles, grid systems, traditional symmetrical book typography, and so on. Overlaid on that core could be extensions that would represent stylistic off-shoots, culturally linked sub-styles, that would allow a diversity of outputs. A second source of diversity would be the trainability of the program. It must be possible with meta-design to have machine learning, so that it is trained over time by being shown new images. A category—“tattoo art” for example—would permit thousands of images of tattoos to be entered and the program could begin to discover the formal compositional relationships that they share.[j] This process would be also guided by the design director who would assist the program, through labeling and pairing strategies, in categorizing and classifying the input. We already see such procedures in dictation programs and in early image-production AI programs.

Missing Link 2: Whence, Creativity?

All of this sounds as if it takes the one thing that is by far most satisfying about being a graphic designer and gives that job to a machine. Where is the creativity, the discovery, the surprise of working intuitively?

First of all, let’s recognize that there are different kinds of creativity. The creativity that is responsible for inventing a humorous concept for an ad campaign is the kind of creativity that is most rare and the hardest to achieve with meta-design. Maybe no program will ever be able to concoct a series of fifteen-second spots like Dos Equis’s “Most Interesting Man in the World.” But would it be able to compose a successful ad combining the photograph, the typography, the logos and the product shots? Why not? That’s an assertion of a different kind of creativity—something that relies somewhat less on cultural understanding, double entendres, the mysterious core of metaphor and humor, and more on the classification schemes of formal structures.

For this kind of compositional creativity—a deeply aesthetic, almost haptic feel for what is right in terms of pacing, emphasis, contrast and balance—meta-design, ironically, would seem ideally suited. When we use optical margin alignment in setting text, we don’t cede authority to InDesign, we allow InDesign to suggest something that it “has been told” will look better to our eyes.[k] This is actually a nascent example of meta-design. We, as design directors, allow the optical margin alignment at a certain value, we review it, make tweaks, and never feel our compositional creativity is being impinged. Our human aesthetics are predicted by the program on the basis of our expertise, our input into the program.

In meta-design compositions, we will maintain final design control. We will be the ones setting the values, determining the stylistic universe of the iterations, and ultimately revising until we are satisfied. Meanwhile, our meta-Milton will be learning what we changed; each revision will be recorded and, in time, will provide it with the information it needs to guess closer to the mark.

In another important way, our own human creative capacity will be increased. Currently, if we have a complex or lengthy project, we don’t have the time to do a great number of conceptual studies, and we are pressed to move fairly quickly toward a solution. We use our intuition and experience (expertise) to do this, but usually this means doing things that are not a huge departure from things we have done before. We stay fairly close to the tried-and-true, modifying a few peripheral details. But, using stage 1.X, we will be able to instruct Milton to give us, for example, three International Style looks, three Traditional Symmetrical looks, and three Random Choice looks. In looking at the variations, we might be expected to get ideas that we would not be able to invent with the time pressures we are working under.

This leads us to the related issue of...

Missing Link 3: Convergence to Mundanity

Since the program always stays within its parameters, fewer digressions can be expected over time, as the “taste parameters” become more clearly articulated via feedback to the program. But it is precisely those digressions, and even mistakes, that often lead us to unexpected new insights and novel directions. Especially with the most competent meta-design, in which the bot has unerringly mastered your look, how can anything be truly new?

It’s certainly the case that any program that can only observe, imitate, and repeat must always enter a death spiral of stasis. But in a smart meta-design program that allows fresh, even random, input, this cycle can be broken. Exposing the program to new compositional stimuli that are foreign and hitherto not available to the feedback loop injects new strains of possibility. Even if the program is essentially in an imitating mode, it can be shaken out of it by crossing two disjunctive compositional strategies (for instance, what would combining symmetry with a grid produce?). Three techniques can be employed to avoid the death spiral: first, expose the program to a variety of novel solutions within a given category; second, expose the program to new categories of composition (i.e. an outside category); third, ask for occasional random settings which then suggest compositions that could not have been foreseen and which, after revision, can be reinserted either within a given category or as a new category.

Indeed, the more interesting question here may be what keeps us human designers from converging to the safe and redundant composition now, in the absence of meta-design. Only our fatigue at seeing the utterly familiar? We purposefully seek out new stimuli by going to galleries, looking at award-winning work, and traveling. Even so, we are often in an imitative stance, as is shown by design’s own “me too” movement. I speak of the “me toos” that relay redundant and plagiarized visual tropes all over the Behanced and Pinterested internet, and that account for many of the redundant results found within every Google Image Search. And so, if the convergence to the utterly familiar is so seemingly deeply ingrained in all of us, it must be actively thwarted in meta-design by creating a negative parameter in the programming of the meta-design algorithm; it must be proactively avoided.
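Two of the safeguards discussed in this section, the occasional random setting and the "negative parameter" against the overly familiar, can be sketched together as a selection rule. Everything in the sketch is an assumption made for illustration: layouts are reduced to tuples of two numbers, and `pick`, `repeat_penalty`, `radius` and `explore` are hypothetical names and values, not a description of any existing program.

```python
import random

def distance(a, b):
    """Euclidean distance between two layouts given as equal-length feature tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

def pick(candidates, taste, history, repeat_penalty=3.0, radius=1.0,
         explore=0.0, rng=None):
    """Choose a candidate near the learned taste, but penalize near-repeats."""
    rng = rng or random.Random(0)
    # Occasional random setting: ignore taste entirely with probability `explore`.
    if rng.random() < explore:
        return rng.choice(candidates)

    def score(c):
        # Negative parameter: anything too close to past output loses points.
        too_familiar = any(distance(c, h) < radius for h in history)
        return -distance(c, taste) - (repeat_penalty if too_familiar else 0.0)

    return max(candidates, key=score)

taste = (2.0, 12.0)        # learned preference: (columns, margin in mm)
history = [(2.0, 12.0)]    # what the program has already produced
candidates = [(2.0, 12.0), (3.0, 14.0), (6.0, 40.0)]
choice = pick(candidates, taste, history)
```

With an empty history the rule simply returns the candidate closest to the learned taste. Once that layout has been used, the penalty pushes the choice to a neighbor that is still in character but not a repeat, while the wildly different third candidate stays available through the random setting.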

Missing Link 4: Employment Ethics

It has been estimated that at the height of the pre-digital age there were over 4,000 typesetting shops in North America.[8] With the developments in 1984-85 of the 300 dpi Apple LaserWriter, the 1200 dpi Linotronic, and the incorporation of the PostScript Linotype type library in new Macs, tens of thousands of typesetters lost their jobs. The broad adoption of page layout programs such as Aldus PageMaker and QuarkXPress meant the designer could handle the entire composition of text as well as the positioning of all graphic elements without leaving the office or paying for galley repros. A 400-year-old profession disappeared in about eighteen months.

Meta-design will, for the first time, automate and personalize not just the typesetting, but the entire compositional design of screens, books, magazines, and catalogs. Won’t it be expected to cost a lot of jobs? If so, is it ethical to develop a technology that threatens so many livelihoods?[l]

The answer may depend somewhat on what activity defines the real essence of the field of graphic design. If I made my living laying out catalog issues month after month—not as the art director but as a production artist—I would certainly be concerned. But if I were the art director for that catalog company I might welcome the new world we’re moving into. Who is the graphic designer in that case: production artist, art director, both?

It is the repetitive and routinized operations that will be replaced. The design director will still be tasked with overseeing the aesthetic and qualitative feel of the project. The AI support will remain just that—a supporting technique. It will be a trainable technique, but it is a long way from being a competing freelancing entity.

What will make the meta-design profession vital is cultural awareness: the ability to think in metaphors and symbols, and to understand the symbolic message within compositional choices. This level of expertise will remain in constant flux, and it is therefore a set of perceptions that cannot, with the required acuity, be built into the Expertise module.

Indeed, designers (as opposed to production assistants) will be in more demand than ever, because one of our tasks will be the training of Expertise modules within a meta-design system. No one will be better positioned to make the judgments necessary to input for, edit, and select from the vast number of candidate compositions. Design is continuing to move further from its legacy as an intermediary step in the technological process of printing. Increasingly, we are the mediators of messages, semiotic professionals, links not between one technology and another, but between the form, semantics and function of the communication. If that is our role, meta-design will only facilitate the process of making the graphic designer indispensable.

All of this alleviating of fears and tending to the missing links, however, depends upon one very important ingredient: the general culture has to learn that graphic design is not merely about making stuff with type and images. Design is not making, but moving. We are not makers, we are movers. Graphic design is about causing people to be moved: moving them in their heads, in their hearts, in their actions and beliefs, and ultimately in their knowledge of the world. That is the core expertise that we alone have as designers. It’s not completely transferable to a bot because that which moves a particular person or group at any time is fluid, semiotically inflected, constantly in flux. The design director imparts this expertise to the Expertise module, and the design director edits, revises, extends. But this position of the designer, as someone who understands the semiotics of visual communication (and who stands in some awe of it), must be broadcast to the society at large. Otherwise, “economics alone will play the crucial role,” and Milton will work directly under the CEO. That would not be a utopian visual world to live in, nor a utopian world for a creative person to work in.

Conclusion

Graphic design, which barely existed as a professional or academic discipline before 1920, has experienced one hundred years of growing complexity. We are no longer confined to setting type for print, or gathering photographs and typography together, or combining illustration and copy for composition. We are now doing all of that plus preparing web sites, interactive displays, environmental and experiential design systems, and managing increasingly intricate, integrated sign systems of almost every imaginable medium. Our work as graphic designers is to direct visual semiotic events, to move people through what they see. That will not change one iota by the addition of meta-design technology. Rather, while the introduction of this new meta-design tool, this “stage 1.X,” will represent a sophisticated new capability, its capacity for beneficial visual decisions ultimately rests not within the algorithm, but in the very human design director who employs it. But having made this claim, it is vitally important that this deeper semiotic understanding at the core of graphic design be appreciated by a general public, who will soon have increasingly powerful—and teachable—visual composing machines at their disposal.

References

- Baraniuk, C. “Would you care if this feature had been written by a robot?” BBC News. Business. 30 January 2018. Online. Available at: https://www.bbc.com/news/business-42858174 (Accessed June 10, 2019).

- Dubberly, H. How Do You Design? A Compendium of Models. Dubberly Design Office, 2005. Online. Available at http://www.dubberly.com/articles/how-do-you-design.html (Accessed June 15, 2019).

- Fitzgerald, K. Volume: Writings on Graphic Design, Music, Art and Culture. New York, NY, USA: Princeton Architectural Press, 2010. Pg. 6.

- Laranjo, F. “Ghosts of Designbots Yet to Come.” Eye Magazine (blog). December 21, 2016. Online. Available at: http://www.eyemagazine.com/blog/post/ghosts-of-designbots-yet-to-come (Accessed May 31, 2019).

- Otay I., Kahraman C. “Fuzzy Sets in Earth and Space Sciences.” In: Kahraman C., Kaymak U., Yazici A. (eds.) Fuzzy Logic in Its 50th Year. Series: Studies in Fuzziness and Soft Computing, vol 341. Basel, Switzerland: Springer, Cham, 2016.

- Research and Analysis Team. “Internet usage worldwide–Statistics & Facts,” Statista, April 5, 2019. Online. Available at: https://www.statista.com/topics/1145/internet-usage-worldwide/ (Accessed June 10, 2019).

- Romano, F. “The Day the Typesetting Industry Died.” What They Think. December 16, 2011. Online. Available at: http://whattheythink.com/articles/55522-day-typesetting-industry-died/ (Accessed February 2, 2018).

- Skaggs, S. FireSigns: A Semiotic Theory for Graphic Design. Cambridge, MA, USA: MIT Press, 2017.

Biography

Professor Skaggs is the Head of the Graphic Design BFA track at the Hite Art Institute, Department of Fine Arts at the University of Louisville in Louisville, Kentucky, USA. His research in graphic design theory and visual semiotics explores ways in which verbal and visual meanings intersect. His work over twenty-five years in providing a semiotic theory for graphic design resulted in the book FireSigns, published by MIT Press in 2017. An earlier work, Logos: the Development of Visual Symbols, was published in 1994 by Crisp Learning. It tells the story of the development of a single symbol through 254 developmental drawings.

Professor Skaggs also designs fonts for specialized purposes. Rieven, a postmodern, Romanized uncial with italics, received a major international award in 2010, the TDC2 Certificate of Typographic Excellence in Typeface Design. Maxular Rx (2017) is the first font designed specifically for people who suffer from macular degeneration.

A longtime proponent of the experimental possibilities of calligraphy, his fine art often explores expressive improvisation. His work is in the permanent collections of the Klingspor Museum, the Sackner Archive of Visual Poetry and the Akademie der Künste in Berlin, Germany.

A volume of his poetry, Poems From Elsewhere, appeared in 2006 from Arable Press.

Notes

- a

While I use graphic design as the model for this article, it should be noted that many of the themes are transferable to other kinds of design as well.

- b

The search term “graphic design process models” returns over thirty different models. Hugh Dubberly documents hundreds. The most common variety is some variation of a six-stage process, beginning with defining the problem, moving through research, and then engaging in conceptualization, development, feedback, and finally refinement. Some of the models divide these stages further, and many include a post-production feedback stage as well. The three-part scheme I describe in this article is not meant to refute the efficacy of any of the more elaborate models, but simply to arrive at the lowest common denominator inherent in these kinds of models: the most essential, non-disposable elements that are true of all.

- c

These items may, themselves, be the product of AI generation, as is now becoming increasingly common, but for the limited purpose here of layout composition, we will assume all the individual graphic parts are extant.

- d

The author is currently working with an engineering software team exploring this problem.

- e

Fuzzy set theory and its relative, fuzzy logic, are ways of making decisions based on partial set membership. Where traditional Aristotelian logic sees true and false, black or white, fuzzy set theory allows for, say, a 30% gray to have partial membership in both the set of whitish things and the set of blackish things. It has particular relevance to the areas of vision, decision-making, stylistics, and most other human affairs.
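
The gray example can be made concrete with a minimal sketch. It assumes simple linear membership functions, a choice made here purely for illustration (fuzzy systems in practice may use other shapes), and the function names are hypothetical:

```python
def whitish(gray_percent: float) -> float:
    """Fuzzy membership in the set of whitish things (0% gray = pure white)."""
    return 1.0 - gray_percent / 100.0


def blackish(gray_percent: float) -> float:
    """Fuzzy membership in the set of blackish things (100% gray = pure black)."""
    return gray_percent / 100.0


def crisp_is_white(gray_percent: float) -> bool:
    """Two-valued classification: a tone either is white or is not."""
    return gray_percent < 50.0


gray = 30.0
print(f"whitish: {whitish(gray):.2f}, blackish: {blackish(gray):.2f}")
print("crisp verdict:", "white" if crisp_is_white(gray) else "black")
```

Under these assumptions the 30% gray has a membership of 0.70 in the whitish set and 0.30 in the blackish set, whereas the two-valued version can only call it white.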

- f

These four semantic effects will be briefly described here, but for further reading, chapters 5, 6 and 7 in FireSigns deal with these categories quite extensively.

- g

The Swiss graphic designer, photographer and design educator Josef Müller-Brockmann designed posters to promote musical performances, including more than a dozen that constitute the Musica Viva series, on behalf of the Zurich Tonhalle from 1951 until 1971. Images of this array of posters may be viewed online at the following web address: http://socks-studio.com/2016/11/30/joseph-muller-brockmann-musica-viva-posters-for-the-zurich-tonhalle/ (Accessed June 14, 2019).

- h

More complex systems of semantic profiles are possible, with the number of potential profile types quickly expanding from 16 (high or low valency) to 48 (high, medium, low), 192 (high, high-medium, low-medium, low), and 768 (high, high-medium, medium, low-medium, low). In practice, even with the iterative ease offered by meta-design with AI, it is probably not helpful to nuance semantic profiles beyond a three-factor (high, medium, low) valency set. A more detailed description of these valency sets, and the semantic profiles that contextualize them, can be found in: Skaggs, S. FireSigns: A Semiotic Theory for Graphic Design. Cambridge, MA, USA: MIT Press, 2017. Pgs. 111–113.

- i

Let me be clear that I am in no way implying that one may be able to purchase a “Milton Glaser in a box.” The kind of design that meta-design will likely be able to execute (especially initially) will not be the work of a master or even a designer of average proficiency; rather meta-design will be a tool for the judgment of the design director who will maintain executive control over final production. At its finest, unassisted meta-design can be expected to produce relatively common, competent compositions. To go beyond that will require the eye and decision-making soul of the human design director. However, formulaic solutions would be immediately within grasp, and, over time, working in tandem with an excellent human designer, it is difficult to predict a limit to the creative output of meta-design.

- j

One could imagine the ability to interweave Mr. Cartoon compositional density with traditional 15th century calligraphic formalities. What would such a mixtape look like?!

- k

- l

In other communicative fields, the use of AI has been going on for some time. The BBC reported (http://www.bbc.com/news/business-42858174) that by 2018 a number of “news alerts” were being drafted by bots, but these are largely the result of massive data-analysis protocols, which draft template messages. So far, job losses in journalism have had more to do with the rise of social media and the ubiquity of decentralized sources of information than a direct replacement of a human reporter by a bot.

Skaggs, S. FireSigns: A Semiotic Theory for Graphic Design. Cambridge, MA, USA: MIT Press, 2017.

Research and Analysis Team. “Internet usage worldwide-Statistics & Facts,” Statista, April 5, 2019. Online. Available at: https://www.statista.com/topics/1145/internet-usage-worldwide/ (Accessed June 10, 2019).

Laranjo, F. “Ghosts of Designbots Yet to Come.” Eye Magazine (blog). December 21, 2016. Available at http://www.eyemagazine.com/blog/post/ghosts-of-designbots-yet-to-come (Accessed May 29, 2018).

Otay I., Kahraman C. “Fuzzy Sets in Earth and Space Sciences.” In: Kahraman C., Kaymak U., Yazici A. (eds) Fuzzy Logic in Its 50th Year. Series: Studies in Fuzziness and Soft Computing, vol 341. Basel, Switzerland: Springer, Cham, 2016.

Skaggs, S. FireSigns: A Semiotic Theory for Graphic Design. Cambridge, MA, USA: MIT Press, 2017. Pgs. 107–115.

Fitzgerald, K. Volume: Writings on Graphic Design, Music, Art and Culture. New York, NY, USA: Princeton Architectural Press, 2010. Pg. 6.

Romano, F. “The Day the Typesetting Industry Died.” What They Think. December 16, 2011. Online. Available at: http://whattheythink.com/articles/55522-day-typesetting-industry-died/ (Accessed February 2, 2018).